Service Mesh Unleashed: A Riveting Dive Into the Istio Framework

This article presents an in-depth analysis of the service mesh landscape, focusing specifically on Istio, one of the most popular service mesh frameworks.

A service mesh is a dedicated infrastructure layer for managing service-to-service communication in the world of microservices. Istio, built to integrate with platforms like Kubernetes, provides a robust way to connect, secure, control, and observe services. This article explores Istio’s architecture, its key features, and the value it provides in managing microservices at scale.

Service Mesh

A Kubernetes service mesh is a tool that improves the security, monitoring, and reliability of applications on Kubernetes. It manages communication between microservices and simplifies the complex network environment. By deploying network proxies alongside application code, the service mesh controls the data plane. This combination of Kubernetes and service mesh is particularly beneficial for cloud-native applications with many services and instances. The service mesh ensures reliable and secure communication, allowing developers to focus on core application development.

A Kubernetes service mesh, like any service mesh, simplifies how distributed applications communicate with each other. It acts as a layer of infrastructure that manages and controls this communication, abstracting away the complexity from individual services. Just like a tracking and routing service for packages, a Kubernetes service mesh tracks and directs traffic based on rules to ensure reliable and efficient communication between services.

A service mesh consists of a data plane and a control plane. The data plane includes lightweight proxies deployed alongside application code, handling the actual service-to-service communication. The control plane configures these proxies, manages policies, and provides additional capabilities such as tracing and metrics collection. With a Kubernetes service mesh, developers can separate their application's logic from the infrastructure that handles security and observability, enabling secure and monitored communication between microservices. It also supports advanced deployment strategies and integrates with monitoring tools for better operational control.

Istio as a Service Mesh

Istio is a popular open-source service mesh, originally developed by Google, IBM, and Lyft, that has since gained wide adoption. It uses the data plane and control plane architecture common to service meshes, with its data plane consisting of Envoy proxies deployed as sidecars within Kubernetes pods.
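As a minimal sketch of how the sidecars get into the pods: Istio can inject the Envoy proxy automatically into pods created in a namespace that carries the `istio-injection: enabled` label. The namespace name `demo` below is a hypothetical placeholder, and revision-based installations use the `istio.io/rev` label instead.

```yaml
# Hypothetical namespace; pods created here after labeling get an Envoy sidecar injected.
apiVersion: v1
kind: Namespace
metadata:
  name: demo
  labels:
    istio-injection: enabled
```

Pods that were already running before the label was added keep running without a sidecar until they are restarted.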

The data plane in Istio is responsible for managing traffic, implementing fault injection for specific protocols, and providing application-layer (L7) load balancing. This differs from the transport-layer (L4) load balancing that Kubernetes Services provide by default. Additionally, Istio includes components for collecting metrics, enforcing authentication and authorization, and integrating with monitoring and logging systems. It also supports encryption through mutual TLS, authentication policies, and role-based access control.

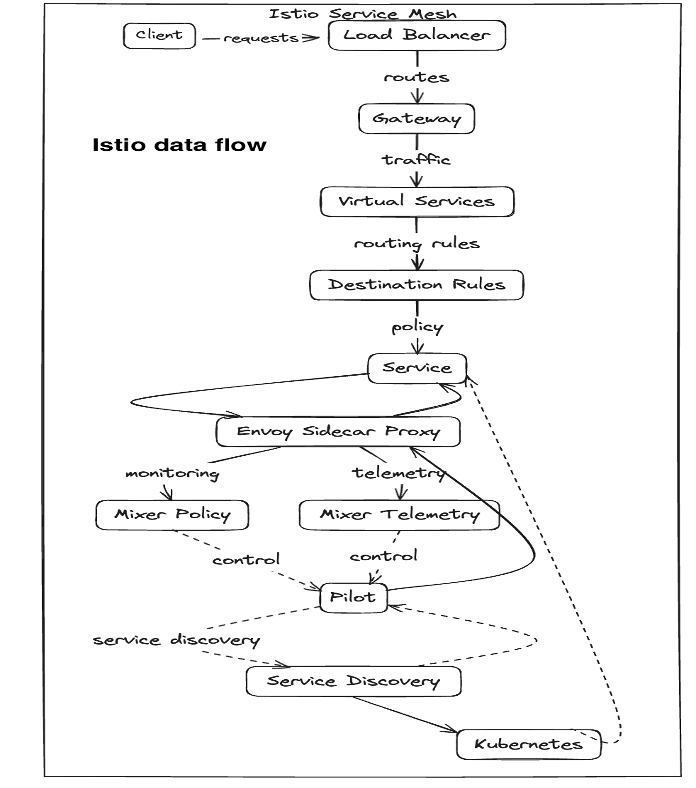

Find the Istio architecture diagram below:

Below, find the configuration and data flow diagram of Istio:

Furthermore, Istio can be extended with various tools to enhance its functionality and integrate with other systems. This allows users to customize and expand the capabilities of their Istio service mesh based on their specific requirements.

Traffic Management

Istio offers traffic routing features that have a significant impact on performance and facilitate effective deployment strategies. These features allow precise control over the flow of traffic and API calls within a single cluster and across clusters.

Within a single cluster, Istio's traffic routing rules enable efficient distribution of requests between services based on factors like load balancing algorithms, service versions, or user-defined rules. This ensures optimal performance by evenly distributing requests and dynamically adjusting routing based on service health and availability.
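To make the load-balancing part concrete, here is a minimal sketch of a `DestinationRule` that picks a load-balancing algorithm for one service. The host `my-service` and the resource name are placeholders, and `LEAST_REQUEST` is just one of the simple policies Istio offers (others include `ROUND_ROBIN` and `RANDOM`).

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: my-service-lb   # hypothetical name
spec:
  host: my-service      # placeholder service host
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST   # prefer endpoints with fewer outstanding requests
```

In practice, this policy would usually live in the same DestinationRule that defines the subsets for the host, rather than in a separate resource.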

Routing traffic across clusters enhances scalability and fault tolerance. Istio provides configuration options for traffic routing across clusters, including round-robin, least connections, or custom rules. This capability allows traffic to be directed to different clusters based on factors such as network proximity, resource utilization, or specific business requirements.

In addition to performance optimization, Istio's traffic routing rules support advanced deployment strategies. A/B testing enables the routing of a certain percentage of traffic to a new service version while serving the majority of traffic to the existing version. Canary deployments involve gradually shifting traffic from an old version to a new version, allowing for monitoring and potential rollbacks. Staged rollouts incrementally increase traffic to a new version, enabling precise control and monitoring of the deployment process.

Furthermore, Istio simplifies the configuration of service-level properties like circuit breakers, timeouts, and retries. Circuit breakers prevent cascading failures by rejecting requests to a backend once connection limits or an error threshold is reached, so callers fail fast instead of piling up. Timeouts and retries handle network delays or transient failures by defining how long to wait for a response and how many times a request is retried.

In summary, Istio's traffic routing capabilities provide a flexible and powerful means to control traffic and API calls, improving performance and facilitating advanced deployment strategies such as A/B testing, canary deployments, and staged rollouts.

The following is a code sample that demonstrates how to use Istio's traffic routing features in Kubernetes using Istio VirtualService and DestinationRule resources:

In the code below, we define a VirtualService named my-service with a host my-service.example.com. We configure traffic routing by specifying two routes: one to the v1 subset of the my-service destination and another to the v2 subset. We assign different weights to each route to control the proportion of traffic they receive. The DestinationRule resource defines subsets for the my-service destination, allowing us to route traffic to different versions of the service based on labels. In this example, we have subsets for versions v1 and v2.

Code Sample

    # Example VirtualService configuration
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-service
    spec:
      hosts:
      - my-service.example.com
      http:
      - route:
        - destination:
            host: my-service
            subset: v1
          weight: 90
        - destination:
            host: my-service
            subset: v2
          weight: 10
    ---
    # Example DestinationRule configuration
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: my-service
    spec:
      host: my-service
      subsets:
      - name: v1
        labels:
          version: v1
      - name: v2
        labels:
          version: v2

Observability

As the complexity of services grows, it becomes increasingly challenging to comprehend their behavior and performance. Istio addresses this challenge by automatically generating detailed telemetry for all communications within a service mesh. This telemetry includes metrics, distributed traces, and access logs, providing comprehensive observability into the behavior of services.

With Istio, operators can easily access and analyze metrics that capture various aspects of service performance, such as request rates, latency, and error rates. These metrics offer valuable insights into the health and efficiency of services, allowing operators to proactively identify and address performance issues.

Distributed tracing in Istio enables the capturing and correlation of trace spans across multiple services involved in a request. This provides a holistic view of the entire request flow, allowing operators to understand the latency and dependencies between services. For spans to be correlated into a single trace, applications still need to forward the trace headers from incoming requests to the outgoing requests they make. With this information, operators can pinpoint bottlenecks and optimize the performance of their applications.

Access logs provided by Istio capture information about each request, such as the method, path, response code, duration, and selected headers; request and response payloads are not logged. These logs offer an audit trail of service interactions, enabling operators to investigate issues, debug problems, and support compliance with security and regulatory requirements.

The telemetry generated by Istio is instrumental in empowering operators to troubleshoot, maintain, and optimize their applications. It provides a deep understanding of how services interact, allowing operators to make data-driven decisions and take proactive measures to improve performance and reliability. Furthermore, metrics and access logs are produced by the sidecar proxies without any modifications to the application code, making Istio a powerful and convenient tool for observability.

Here's an example of how you can access metrics, traces, and logs using Istio:

Commands in Bash

    # Access metrics:
    istioctl dashboard kiali

    # Access distributed traces:
    istioctl dashboard jaeger

    # Access access logs:
    kubectl logs -l istio=ingressgateway -n istio-system

In the code above, we use the istioctl command-line tool to access Istio's observability dashboards. The istioctl dashboard kiali command opens the Kiali dashboard, which provides a visual representation of the service mesh and allows you to view metrics such as request rates, latency, and error rates. The istioctl dashboard jaeger command opens the Jaeger dashboard, which allows you to view distributed traces and analyze the latency and dependencies between services.

To view access logs, we use the kubectl logs command to retrieve logs from the Istio Ingress Gateway. By filtering with the label istio=ingressgateway and specifying the namespace istio-system, we can view information about each request that passed through the gateway, such as the method, path, and response code. Depending on how Istio was installed, Envoy access logging may need to be enabled first.
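If no access log lines show up, access logging is probably not turned on. One way to enable it is a mesh-wide `Telemetry` resource in the root namespace. This is only a sketch: it assumes an Istio release that ships the Telemetry API and the built-in `envoy` provider, it assumes `istio-system` is the root namespace, and the resource name `mesh-default` is a placeholder.

```yaml
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: mesh-default        # placeholder name
  namespace: istio-system   # assumed root namespace, so the setting applies mesh-wide
spec:
  accessLogging:
  - providers:
    - name: envoy           # built-in provider that writes Envoy access logs to stdout
```

Once applied, the `kubectl logs` command shown earlier should start returning one line per request handled by the gateway.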

By leveraging these observability features provided by Istio, operators can gain deep insights into the behavior and performance of their services. This allows them to troubleshoot issues, optimize performance, and ensure the reliability of their applications.

Security Capabilities

Microservices have specific security requirements, such as protecting against man-in-the-middle attacks, implementing flexible access controls, and enabling auditing tools. Istio addresses these needs with its comprehensive security solution.

Istio's security model follows a "security-by-default" approach, providing defense in depth for deploying secure applications across untrusted networks. It gives each workload a strong identity and uses that identity to authenticate and authorize services within the service mesh, preventing unauthorized access.

Transparent TLS encryption is a crucial component of Istio's security framework. With mutual TLS enabled, traffic between sidecar proxies within the service mesh is encrypted, safeguarding data from eavesdropping and tampering. Istio issues and rotates certificates automatically, simplifying the maintenance of a secure communication channel between services.
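As an illustration, mesh-wide mutual TLS is typically switched to strict mode with a `PeerAuthentication` resource in the root namespace. The sketch below assumes `istio-system` is the root namespace of the installation.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: istio-system   # assumed root namespace; the policy then applies mesh-wide
spec:
  mtls:
    mode: STRICT            # sidecar-injected workloads reject plaintext traffic
```

A common rollout path is to start with `PERMISSIVE` mode, which accepts both plaintext and mutual TLS, and move to `STRICT` once all clients have sidecars.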

Istio also offers powerful policy enforcement capabilities, allowing operators to define fine-grained access controls and policies for service communication. These policies can be dynamically enforced and updated without modifying the application code, providing flexibility in managing access and ensuring secure communication.

With Istio, operators have access to authentication, authorization, and audit (AAA) tools. Istio supports mutual TLS for service-to-service authentication and JSON Web Token (JWT) validation for end-user authentication, which lets it work with tokens issued by OAuth 2.0 and OpenID Connect providers. Additionally, auditing capabilities help operators track service behavior, comply with regulations, and detect potential security incidents.
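A minimal sketch of JWT validation for a workload might look like the following. The issuer and JWKS URLs are placeholders, the resource names are hypothetical, and the `app: my-service` label is assumed to match the target pods.

```yaml
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: my-service-jwt          # hypothetical name
spec:
  selector:
    matchLabels:
      app: my-service
  jwtRules:
  - issuer: "https://issuer.example.com"                          # placeholder issuer
    jwksUri: "https://issuer.example.com/.well-known/jwks.json"   # placeholder JWKS endpoint
---
# RequestAuthentication alone only rejects invalid tokens; this policy
# additionally requires that a valid token is present.
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: my-service-require-jwt  # hypothetical name
spec:
  selector:
    matchLabels:
      app: my-service
  rules:
  - from:
    - source:
        requestPrincipals: ["*"]
```

The two resources are split on purpose: the first defines which tokens are acceptable, and the second decides what happens to requests that carry no token at all.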

In summary, Istio's security solution addresses the specific security requirements of microservices, providing strong identity management, transparent TLS encryption, policy enforcement, and AAA tools. It enables operators to deploy secure applications and protect services and data within the service mesh.

Code Sample

    # Example DestinationRule for mutual TLS authentication
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: my-service
    spec:
      host: my-service
      trafficPolicy:
        tls:
          mode: MUTUAL
          clientCertificate: /etc/certs/client.pem
          privateKey: /etc/certs/private.key
          caCertificates: /etc/certs/ca.pem
    ---
    # Example AuthorizationPolicy for access control
    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: my-service-access
    spec:
      selector:
        matchLabels:
          app: my-service
      rules:
      - from:
        - source:
            principals: ["cluster.local/ns/default/sa/my-allowed-service-account"]
        to:
        - operation:
            methods: ["*"]

In the code above, we configure mutual TLS for traffic sent to the my-service destination using a DestinationRule resource. Setting the mode to MUTUAL makes client-side proxies originate mutual TLS connections to the service. The clientCertificate, privateKey, and caCertificates fields specify the paths to the client certificate, private key, and CA certificate, respectively.

We also define an AuthorizationPolicy resource to control access to my-service based on the source service account. In this example, we allow requests from the my-allowed-service-account service account in the default namespace by specifying its principal in the principals field.

By applying these configurations to an Istio-enabled Kubernetes cluster, you can enhance the security of your microservices by using mutual TLS and implementing fine-grained access controls.

Circuit Breaking and Retry

Circuit breaking and retries are crucial techniques in building resilient distributed systems, especially in microservices architectures. Circuit breaking prevents cascading failures by stopping requests to a service experiencing errors or high latency. In Istio, circuit breaking is configured in the trafficPolicy of a DestinationRule: connectionPool settings cap connections and pending requests, and outlierDetection ejects hosts that keep returning errors. When these thresholds are crossed, further requests fail fast instead of piling up, which protects other services from being affected.

Retries are configured per route in a VirtualService, with a customizable number of attempts, per-try timeout, and triggering conditions. By retrying failed requests, transient failures can be handled effectively, increasing the chances of success. Combining circuit breaking and retries enhances the resilience of microservices, isolating failing services and handling intermittent issues gracefully.

In the code below, we configure retries and fault injection for my-service using a VirtualService, and circuit breaking using a DestinationRule. The retries section specifies that failed requests should be retried up to 3 times with a per-try timeout of 2 seconds. The retryOn field specifies the conditions under which retries should be triggered, such as 5xx server errors or connect failures. The fault section configures fault injection for the service, which is meant for testing rather than regular production traffic. In this example, we introduce a fixed delay of 5 seconds for 50% of the requests and abort 10% of the requests with a 503 HTTP status code. The DestinationRule's trafficPolicy defines the circuit-breaking thresholds: a maximum of 100 connections, a maximum of 100 concurrent HTTP requests, a maximum of 10 pending requests, an outlier detection interval of 10 seconds, and a base ejection time of 5 seconds. By applying this configuration to an Istio-enabled Kubernetes cluster, you can enable circuit breaking and retries for your microservices, enhancing resilience and preventing cascading failures.

Code Sample

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-service
    spec:
      hosts:
      - my-service
      http:
      - route:
        - destination:
            host: my-service
            subset: v1
        # Retry failed requests up to 3 times, 2 seconds per attempt
        retries:
          attempts: 3
          perTryTimeout: 2s
          retryOn: 5xx,connect-failure
        # Fault injection (for testing): delay 50% and abort 10% of requests
        fault:
          delay:
            fixedDelay: 5s
            percentage:
              value: 50
          abort:
            httpStatus: 503
            percentage:
              value: 10
    ---
    # Circuit breaking is configured on the DestinationRule, not the VirtualService
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: my-service
    spec:
      host: my-service
      subsets:
      - name: v1
        labels:
          version: v1
      trafficPolicy:
        connectionPool:
          tcp:
            maxConnections: 100
          http:
            http2MaxRequests: 100
            http1MaxPendingRequests: 10
        outlierDetection:
          interval: 10s
          baseEjectionTime: 5s

Canary Deployments

Canary deployments with Istio offer a powerful strategy for releasing new features or updates to a subset of users or traffic while minimizing the risk of impacting the entire system. With Istio's traffic management capabilities, you can easily implement canary deployments by directing a fraction of the traffic to the new version or feature. Istio's VirtualService resource allows you to define routing rules based on percentages, HTTP headers, or other criteria to selectively route traffic. By gradually increasing the traffic to the canary version, you can monitor its performance and gather feedback before rolling it out to the entire user base. Istio also provides powerful observability features, such as distributed tracing and metrics collection, allowing you to closely monitor the canary deployment and make data-driven decisions. In case of any issues or anomalies, you can quickly roll back to the stable version or implement other remediation strategies, minimizing the impact on users. Canary deployments with Istio provide a controlled and gradual approach to releasing new features, ensuring that changes are thoroughly tested and validated before impacting the entire system, thus improving the overall reliability and stability of your applications.

To implement canary deployments with Istio, we can use the VirtualService resource to define routing rules and gradually shift traffic to the canary version.

Code Sample

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-service
    spec:
      hosts:
      - my-service
      http:
      - route:
        - destination:
            host: my-service
            subset: stable
          weight: 90
        - destination:
            host: my-service
            subset: canary
          weight: 10

In the code above, we configure the VirtualService to route 90% of the traffic to the stable version of the service (subset: stable) and 10% of the traffic to the canary version (subset: canary). The weight field specifies the distribution of traffic between the subsets. By applying this configuration, you can gradually increase the traffic to the canary version and monitor its behavior and performance. Istio's observability features, such as distributed tracing and metrics collection, can provide insights into the canary deployment's behavior and impact. If any issues or anomalies are detected, you can quickly roll back to the stable version by adjusting the traffic weights or implementing other remediation strategies. By leveraging Istio's traffic management capabilities, you can safely release new features or updates, gather feedback, and mitigate risks before fully rolling them out to your user base.
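The `stable` and `canary` subsets referenced by the VirtualService have to be defined somewhere. A minimal sketch of a matching DestinationRule follows; the `track` pod label and its values are assumptions for illustration, so substitute whatever labels distinguish your two Deployments.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: my-service
spec:
  host: my-service
  subsets:
  - name: stable
    labels:
      track: stable   # hypothetical pod label on the current version
  - name: canary
    labels:
      track: canary   # hypothetical pod label on the new version
```

Without such a rule, routes that name a subset have no endpoints to resolve to, so the weights would not take effect as intended.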

Autoscaling

Istio works alongside Kubernetes' Horizontal Pod Autoscaler (HPA) to enable automated scaling of microservices based on various metrics, such as CPU or memory usage. The HPA continuously monitors the chosen metrics and adjusts the number of replicas for a given microservice based on predefined targets. When the observed values move away from those targets, the HPA automatically scales the number of pods up or down, ensuring that the microservices can handle the current workload efficiently. Istio's metrics collection adds detailed insight into request-level behavior, and those metrics can also drive scaling decisions if they are exposed to the HPA through a custom metrics adapter. This automated scaling approach eliminates the need for manual intervention and enables your microservices to adapt to fluctuating traffic patterns or resource demands in real time.

Code Sample

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: my-service-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: my-service
      minReplicas: 1
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          # Target 50% average CPU utilization across pods
          target:
            type: Utilization
            averageUtilization: 50

In the example above, the HPA is configured to scale the my-service deployment based on CPU usage, targeting an average CPU utilization of 50% across all pods. CPU and memory figures come from the Kubernetes resource metrics pipeline (typically metrics-server) rather than from Istio itself. To scale on Istio telemetry such as request rate, the mesh metrics must be exposed to the HPA through a custom metrics adapter, for example one backed by Prometheus. With this setup, your microservices can dynamically scale up or down based on traffic patterns and resource demands, ensuring optimal utilization of resources and improved performance. It’s important to note that combining Istio metrics with the HPA may require additional configuration and tuning based on your specific requirements and monitoring setup.

Implementing Fault Injection and Chaos Testing With Istio

Chaos fault injection with Istio is a powerful technique that allows you to test the resilience and robustness of your microservices architecture. Istio provides built-in features for injecting faults into your system, simulating real-world scenarios, and evaluating how well your system can handle them. With Istio's fault injection feature, you can introduce delays (added latency) and aborts (error responses) for specific requests or services. By configuring fault rules in a VirtualService, you can selectively apply fault injection based on match criteria such as HTTP headers or paths. By combining fault injection with observability features like distributed tracing and metrics collection, you can closely monitor the impact of injected faults on different services in real time. Chaos fault injection with Istio helps you identify weaknesses, validate error handling mechanisms, and build confidence in the resilience of your microservices architecture.
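As a sketch of selective fault injection, the VirtualService below delays only requests that carry a test header and routes everything else normally. The header name `x-chaos-test` and the 5-second delay are assumptions chosen for illustration.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: my-service
spec:
  hosts:
  - my-service
  http:
  # Requests carrying the (hypothetical) test header get a 5s delay injected
  - match:
    - headers:
        x-chaos-test:
          exact: "true"
    fault:
      delay:
        fixedDelay: 5s
        percentage:
          value: 100
    route:
    - destination:
        host: my-service
  # All other requests are routed normally
  - route:
    - destination:
        host: my-service
```

Scoping the fault to a header keeps the experiment away from regular users while still exercising the real request path.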

Securing External Traffic Using Istio's Ingress Gateway

Securing external traffic using Istio's Ingress Gateway is crucial for protecting your microservices architecture from unauthorized access and potential security threats. Istio's Ingress Gateway acts as the entry point for external traffic, providing a centralized and secure way to manage inbound connections. By configuring the Ingress Gateway, you can enforce authentication, authorization, and encryption so that only authenticated and authorized traffic can access your microservices. Istio supports authentication mechanisms such as JSON Web Tokens (JWT) and mutual TLS (mTLS), and it can validate tokens issued by OAuth 2.0 providers, allowing you to choose the most suitable method for your application's security requirements. Additionally, the Ingress Gateway enables you to define fine-grained access control policies based on source IP, user identity, or other attributes, ensuring that only authorized clients can reach specific microservices. Combined with mutual TLS inside the mesh, this also protects communication between microservices against unauthorized access or eavesdropping. Overall, Istio's Ingress Gateway provides a robust and flexible solution for securing external traffic, protecting your microservices, and ensuring the integrity and confidentiality of your data and communications.

Code Sample

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: my-gateway
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"

In this example, we define a Gateway named my-gateway that listens on port 80 and accepts HTTP traffic from any host. The Gateway's selector is set to istio: ingressgateway, which applies this configuration to the ingress gateway pods carrying that label. This server accepts plain HTTP; encrypting external traffic additionally requires a TLS-enabled server on the Gateway.
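For encrypted ingress, a Gateway would typically terminate TLS and a VirtualService would bind routes to it. The sketch below is one way to do that; the host name, the resource names, the service port, and the secret name `my-service-tls` are all placeholders, and the TLS secret is assumed to exist in the same namespace as the ingress gateway deployment.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: my-secure-gateway            # hypothetical name
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE                   # server-side TLS termination at the gateway
      credentialName: my-service-tls # hypothetical TLS secret in the gateway's namespace
    hosts:
    - "my-service.example.com"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: my-service-external          # hypothetical name
spec:
  hosts:
  - "my-service.example.com"
  gateways:
  - my-secure-gateway                # bind these routes to the gateway above
  http:
  - route:
    - destination:
        host: my-service
        port:
          number: 80                 # assumed service port
```

Restricting `hosts` to a concrete name instead of `"*"` narrows what the gateway will accept, which is usually preferable for externally exposed services.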

Best Practices for Managing and Operating Istio in Production Environments

When managing and operating Istio in production environments, there are several best practices to follow.

- First, it is essential to carefully plan and test your Istio deployment before production rollout, ensuring compatibility with your specific application requirements and infrastructure.

- Properly monitor and observe your Istio deployment using Istio's built-in observability features, including distributed tracing, metrics, and logging.

- Regularly review and update Istio configurations to align with your evolving application needs and security requirements.

- Implement traffic management cautiously, starting with conservative traffic routing rules and gradually introducing more advanced features like traffic splitting and canary deployments.

- Take advantage of Istio's traffic control capabilities to implement circuit breaking, retries, and timeout policies to enhance the resilience of your microservices.

- Regularly update and patch your Istio installation to leverage the latest bug fixes, security patches, and feature enhancements.

- Lastly, establish a robust backup and disaster recovery strategy to mitigate potential risks and ensure business continuity.

By adhering to these best practices, you can effectively manage and operate Istio in production environments, ensuring the reliability, security, and performance of your microservices architecture.

Conclusion

In the evolving landscape of service-to-service communication, Istio, as a service mesh, has surfaced as an integral component, offering a robust and flexible solution for managing complex communication between microservices in a distributed architecture. Istio's capabilities extend beyond merely facilitating communication to providing comprehensive traffic management, enabling sophisticated routing rules, retries, failovers, and fault injections. It also addresses security, a critical aspect in the microservices world, by implementing it at the infrastructure level, thereby reducing the burden on application code. Furthermore, Istio enhances observability in the system, allowing organizations to effectively monitor and troubleshoot their services.

Despite the steep learning curve associated with Istio, the multitude of benefits it offers makes it a worthy investment for organizations. The control and flexibility it provides over microservices are unparalleled. With the growing adoption of microservices, the role of service meshes like Istio is becoming increasingly pivotal, ensuring reliable, secure operation of services, and providing the scalability required in today's dynamic business environment.

In conclusion, Istio holds a significant position in the service mesh realm, offering a comprehensive solution for managing microservices at scale. It represents the ongoing evolution in service-to-service communication, driven by the need for more efficient, secure, and manageable solutions. The future of Istio and service mesh, in general, appears promising, with continuous research and development efforts aimed at strengthening and broadening their capabilities.
