Serverless Reference Architecture

A set of L0 and L1 reference architectures that collates the serverless building blocks, combining FaaS (serverless compute) and BaaS (serverless platform services).

This article is part of a series that looks at serverless from diverse points of view and provides pragmatic guides that help in adopting serverless architecture, tackling practical challenges in serverless, and discussing how serverless enables reactive event-driven architectures. The articles stay clear of any one cloud provider's serverless services, referring to them only in examples (AWS being a common reference). The articles in this series are published periodically and can be searched with the tag “openupserverless.”

Brief Background

To recap the broad meaning of serverless: serverless is any offering that abstracts infrastructure choices and provisioning away from DevOps and works on a usage-based cost model. What counts as usage is defined by the platform vendor in the context of each service.

Any service that satisfies ALL the conditions below is serverless:

- Does not force a choice from infrastructure options in the architecture

- Does not have a cost model linked to infrastructure

- Supports a declarative service execution model

- Does not force operating system choices
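The four conditions above can be read as a simple all-or-nothing check. The following is a minimal sketch, assuming a hypothetical `ServiceProfile` record whose field names are invented here for illustration; it is not a vendor classification scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceProfile:
    """Hypothetical description of a cloud service, for illustration only."""
    name: str
    forces_infrastructure_choice: bool   # e.g. must pick instance types or cluster sizes
    cost_tied_to_infrastructure: bool    # e.g. billed per provisioned node-hour
    declarative_execution: bool          # configured by declaring what should run
    forces_os_choice: bool               # e.g. must pick and patch an OS image


def is_serverless(service: ServiceProfile) -> bool:
    """Return True only if ALL four conditions from the list hold."""
    return (
        not service.forces_infrastructure_choice
        and not service.cost_tied_to_infrastructure
        and service.declarative_execution
        and not service.forces_os_choice
    )


# Two made-up profiles: a function platform and a self-managed VM cluster.
function_platform = ServiceProfile("function-platform", False, False, True, False)
vm_cluster = ServiceProfile("vm-cluster", True, True, False, True)

print(is_serverless(function_platform))  # True
print(is_serverless(vm_cluster))         # False
```

A service that fails even one condition, for example one that is declarative but still billed per provisioned node, does not qualify under this definition.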

Before FaaS or FPaaS became popular, hyperscaler clouds already provided platform services that had evolved from pure IaaS or IaaS-based offerings. These services were referred to as BaaS or simply platform services. A formal definition of serverless brought these kinds of cloud platform services under the serverless umbrella, and so architectures evolved that were based purely on serverless building blocks. These architectures solve the infrastructure concerns of the cloud, such as:

- provisioning

- maintenance

- scaling

- patching

- monitoring

This forced a new kind of serverless architecture thinking, one that demands first-class focus on things such as declarative cloud services, usage metrics, failure management, running at scale, cost optimization decisions, cold-start issues, and time-outs.

Serverless code execution is seen as the “glue” that integrates the serverless architecture building blocks. A pure serverless architecture is based on serverless services that each provide a solution to a concern and then integrate with other serverless services via:

- serverless code execution (with FaaS or serverless containers)

- platform-provided, pre-designed integrations

- custom code that uses the SDKs

Serverless execution workloads are provided via a FaaS platform, which supplies a code-execution substrate and an execution environment with a certain lifecycle. They are also provided as serverless containers, where Kubernetes service-based containers are managed in response to a request or event.
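To make the idea of an execution environment with a lifecycle more concrete, here is a minimal sketch of the cold-start versus warm-invocation distinction. The `ExecutionEnvironment` class and the `init` and `handler` functions are hypothetical stand-ins written for this example, not any platform's actual API.

```python
import time


class ExecutionEnvironment:
    """Toy stand-in for one FaaS execution environment (not a real platform API)."""

    def __init__(self, handler, init):
        self._handler = handler
        self._init = init
        self._context = None      # populated on the first (cold) invocation
        self.cold_starts = 0

    def invoke(self, event):
        if self._context is None:
            # Cold start: run one-time initialization before the first request.
            self._context = self._init()
            self.cold_starts += 1
        # Warm path: reuse the initialized context for every later request.
        return self._handler(event, self._context)


def init():
    # Hypothetical expensive setup, e.g. loading config or opening connections.
    return {"started_at": time.time(), "greeting": "hello"}


def handler(event, context):
    return f"{context['greeting']}, {event['name']}"


env = ExecutionEnvironment(handler, init)
print(env.invoke({"name": "first"}))   # cold invocation, init() runs once
print(env.invoke({"name": "second"}))  # warm invocation, init() is not re-run
print(env.cold_starts)                 # 1
```

Real platforms also recycle idle environments and run many of them in parallel, which this sketch leaves out; it only shows why initialization cost is paid on some invocations and not others.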

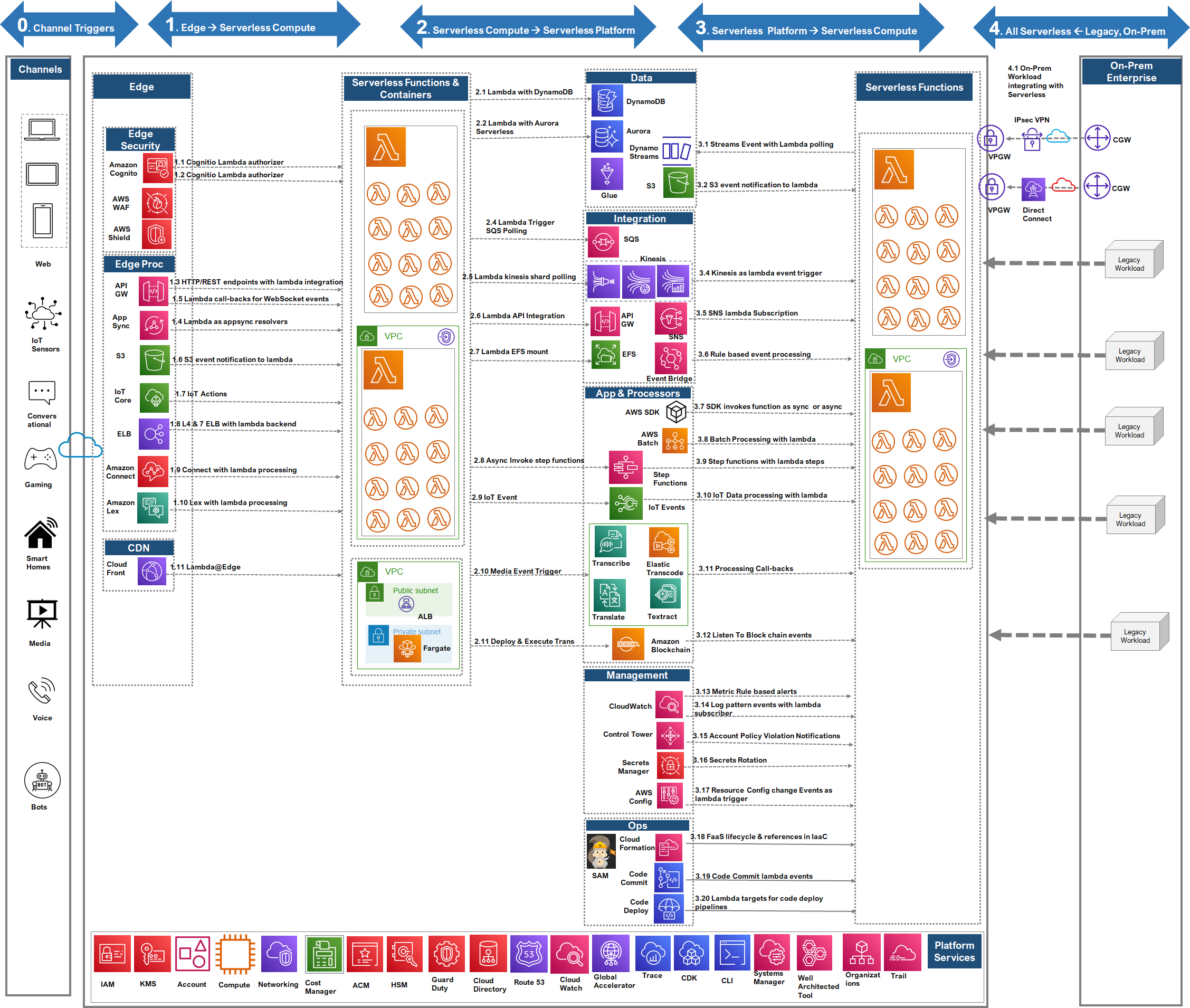

Serverless Reference Architecture (L0)

The L0 serverless reference architecture presents a logical view of application types, solution patterns, and application layers where serverless PaaS (BaaS) and FaaS compute services may be used.

L0 Details

The reference architecture can be used to depict any application architecture in terms of the serverless building blocks used across layers, which gives a quick idea of what the detailed application architecture may look like.

L0 is logically divided into layers that represent common architecture concerns, and it depicts the serverless patterns and choices available in each. These are not hyperscaler-specific and represent a generic set of services.

Of course, the services can also be leveraged by non-serverless architecture components via the SDKs or other frameworks. This results in a hybrid model, where concerns such as scale, resilience, fault tolerance, and cost have to be carefully considered. The hybrid model combines not only the processing patterns but also the cost models and scale models.
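The difference between the two cost models in a hybrid setup can be illustrated with some simple arithmetic. This is a sketch only: the prices, the function names, and the 30-day month below are hypothetical values chosen to keep the numbers easy to follow, not figures from any provider.

```python
def usage_cost(requests: int, price_per_million: float) -> float:
    """Usage-based model: pay only for requests actually served."""
    return requests / 1_000_000 * price_per_million


def provisioned_cost(instances: int, hours: float, price_per_hour: float) -> float:
    """Infrastructure-based model: pay for capacity whether or not it is used."""
    return instances * hours * price_per_hour


def break_even_requests(instances: int, hours: float,
                        price_per_hour: float, price_per_million: float) -> float:
    """Request volume at which both models cost the same."""
    fixed = provisioned_cost(instances, hours, price_per_hour)
    return fixed / price_per_million * 1_000_000


# Hypothetical prices, for illustration only.
PRICE_PER_MILLION = 2.0   # currency units per million requests
PRICE_PER_HOUR = 0.5      # currency units per instance-hour
HOURS = 720               # a 30-day month

print(provisioned_cost(2, HOURS, PRICE_PER_HOUR))                         # 720.0
print(usage_cost(100_000_000, PRICE_PER_MILLION))                         # 200.0
print(break_even_requests(2, HOURS, PRICE_PER_HOUR, PRICE_PER_MILLION))   # 360000000.0
```

Under these assumed prices, the usage-based component is cheaper below 360 million requests a month and more expensive above it, which is the kind of trade-off a hybrid architecture has to evaluate per component.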

App-Types

Serverless can be used to architect most of the application types that can otherwise be implemented with cloud-native or traditional architectures. Some niche types, such as genomics, big data, and scatter-gather apps, are not a fit for a pure serverless architecture. The nature of these applications, and the infrastructure choices needed to justify their costs, make them understandably non-serverless at this point.

Data

Hyperscalers provide serverless databases, both RDBMS and NoSQL. These are, of course, usable with both serverless and traditional workloads, keeping in mind that the usage model and the cost model are different. This layer depicts the common database types available as serverless in at least one cloud platform. Hyperscalers have varying degrees of support for these database types. A few examples:

Document DB: GCP Firestore

Key Value: AWS DynamoDB

Multi-model (including key-value and wide-column): Azure Cosmos DB

RDBMS: AWS Aurora

Object Store: AWS S3

In addition to databases, this layer also represents serverless data management services such as object-store-based data lakes, event-bus-based change data capture, and data archival.

Integration

Serverless is often seen as a strong enabler of event-driven architecture, with event triggers defined by various event sources within the hyperscaler services as well as from channels. Messaging, notification, event stream processing, and REST integration are the serverless integration options. These integrations provide event triggers that invoke FaaS or serverless containers for processing. Refer to L1 for the integration patterns. A given architecture can use one or more of these patterns for its various user journeys.

Processing

This layer presents the general processing choices that are enabled by serverless platform services and their integration with FaaS. The services can be FaaS event producers, event consumers, or both (publishers, subscribers, or both). Apart from traditional HTTP request processing, patterns such as stream data processing, BPM and workflows, transcription, transcoding, IoT event processing, and blockchain processing can be architected via pure serverless services.

Analytics & ML

Analytics is called out separately because it involves specific processing patterns created for data analytics. It is a combination of the data and processing layers.

The ML cases presented here do not cover an entire ML architecture; they show where serverless processing and FaaS processing triggers apply to ML workloads.

Crosscutting

Crosscutting services are available as core platform serverless services for the entire cloud (serverless or otherwise). These services provide platform-level capabilities with the usual FaaS “processing delegates” pattern. The practices and concerns for scaling and costing are like those of other serverless services, and the hyperscalers provide guidelines around their usage.

Serverless Architecture (L1)

L1 serverless architecture depicts architecture layers from an event-driven point of view, i.e., as event generators, event consumers, and event processors. L1 builds on the serverless capabilities depicted in L0 and introduces a layered view of serverless.

Event generation and processing in serverless can be:

- Synchronous, request-response (e.g., API Gateway)

- Asynchronous, request-response (e.g., Webhooks, WebSockets)

- Asynchronous Messaging (e.g., Event Bus, Notifications)

- Asynchronous Polling Messaging (e.g., Message Queue)

- Asynchronous Polling Streaming (e.g., Reactive Streams)
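The five styles in the list above differ mainly in whether the caller waits for a result and whether the platform pulls events on the function's behalf. A minimal sketch of that classification follows; the `TriggerStyle` enum, the source labels, and the helper functions are names invented for this example.

```python
from enum import Enum


class TriggerStyle(Enum):
    SYNC_REQUEST_RESPONSE = "synchronous request-response"
    ASYNC_REQUEST_RESPONSE = "asynchronous request-response"
    ASYNC_MESSAGING = "asynchronous messaging"
    ASYNC_POLLING_MESSAGING = "asynchronous polling messaging"
    ASYNC_POLLING_STREAMING = "asynchronous polling streaming"


# Example sources taken from the list above; the keys are illustrative labels.
SOURCE_STYLES = {
    "api_gateway": TriggerStyle.SYNC_REQUEST_RESPONSE,
    "webhook": TriggerStyle.ASYNC_REQUEST_RESPONSE,
    "websocket": TriggerStyle.ASYNC_REQUEST_RESPONSE,
    "event_bus": TriggerStyle.ASYNC_MESSAGING,
    "notification": TriggerStyle.ASYNC_MESSAGING,
    "message_queue": TriggerStyle.ASYNC_POLLING_MESSAGING,
    "reactive_stream": TriggerStyle.ASYNC_POLLING_STREAMING,
}


def caller_waits_for_result(source: str) -> bool:
    """Only the synchronous style blocks the caller until processing finishes."""
    return SOURCE_STYLES[source] is TriggerStyle.SYNC_REQUEST_RESPONSE


def platform_polls(source: str) -> bool:
    """Polling styles have the platform pull events and hand them to the compute."""
    return SOURCE_STYLES[source] in {
        TriggerStyle.ASYNC_POLLING_MESSAGING,
        TriggerStyle.ASYNC_POLLING_STREAMING,
    }


print(caller_waits_for_result("api_gateway"))  # True
print(platform_polls("message_queue"))         # True
print(platform_polls("event_bus"))             # False
```

The distinction matters in practice because it drives choices such as time-out budgets for synchronous calls and batch sizes for polled sources.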

L1 architecture provides a view of these event triggers and processing with the serverless FaaS & serverless platform services.

Channels, edge services, serverless code execution, serverless platform services for the different architecture concerns, and event triggers from the legacy enterprise are the event sources in this view, which hopefully makes the “glue code” pattern clearer. The platform services in the architecture layers, each providing a specific solution block, are “glued” together by serverless code execution workloads (functions, containers), which are initiated and executed in small run cycles based on a trigger (sync or async). In a way, creating a serverless cloud-native architecture means assembling these blocks to form a business solution. A Lego-block analogy is not misplaced here.
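As a rough illustration of the “glue code” idea, the sketch below wires three platform services together with one short-lived function. `ObjectStore`, `DocumentDb`, and `Notifier` are in-memory stand-ins invented for this example, and the event shape (`{"key": ...}`) is an assumption; a real function would call the provider's SDK instead.

```python
class ObjectStore:
    """In-memory stand-in for a serverless object store."""

    def __init__(self):
        self._objects = {}

    def put(self, key, body):
        self._objects[key] = body

    def get(self, key):
        return self._objects[key]


class DocumentDb:
    """In-memory stand-in for a serverless document database."""

    def __init__(self):
        self.documents = {}

    def save(self, doc_id, document):
        self.documents[doc_id] = document


class Notifier:
    """In-memory stand-in for a serverless notification service."""

    def __init__(self):
        self.messages = []

    def publish(self, message):
        self.messages.append(message)


def make_handler(store, db, notifier):
    """Build the glue function; it holds no business state of its own."""

    def on_object_created(event):
        body = store.get(event["key"])                      # read from the object store
        record = {"key": event["key"], "size": len(body)}   # small unit of processing
        db.save(event["key"], record)                       # persist to the database
        notifier.publish(f"indexed {event['key']}")         # tell downstream consumers
        return record

    return on_object_created


store, db, notifier = ObjectStore(), DocumentDb(), Notifier()
handler = make_handler(store, db, notifier)

store.put("report.txt", "hello serverless")
print(handler({"key": "report.txt"}))  # {'key': 'report.txt', 'size': 16}
print(notifier.messages)               # ['indexed report.txt']
```

The function itself does very little; the value comes from the platform services on either side of it, which is the point of the Lego-block analogy.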

Importantly, while the L1 view presents distinct layers, a real-world architecture may use these patterns in a more complex way, i.e., repeated and forked, until a solution is complete. Real serverless architectures would typically be a meshed subset of this view.

In the L1 view, the arrows detail how events are triggered and consumed for each type of trigger pattern.

Event Triggers

0. Channel Triggers: Across all app types depicted in L0, the channels that are in play are event sources. These may be traditional web channels or bots, sensors, smart homes, etc., that utilize a specific serverless architecture.

1. Edge -> Serverless Compute: Edge serverless services that interface with channels provide specialized processing patterns relevant to edge traffic. These services delegate to serverless compute in many scenarios, as depicted in L1. Depending on the use case, this integration is synchronous for a request-response case or asynchronous otherwise.

2. Serverless Compute -> Serverless Platform: The serverless compute uses an SDK supplied by the cloud provider and can technically invoke any platform service. Some common integration patterns are implemented out of the box by the provider. Then there are platform services that configure FaaS as a “pull” source, for example, via a polling mechanism. In such a case, the serverless compute is closely coupled as a “processing-delegate” of the platform service.
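The “pull” arrangement described above, where the platform polls a source and hands batches to the function, might look roughly like the following. `QueuePoller`, its `batch_size` parameter, and the `{"records": [...]}` event shape are assumptions made for this sketch, not a specific provider's polling API.

```python
from collections import deque


class QueuePoller:
    """Toy poller: the platform side that pulls batches and invokes the function."""

    def __init__(self, queue, handler, batch_size=2):
        self._queue = queue
        self._handler = handler
        self._batch_size = batch_size

    def poll_once(self):
        """Pull up to batch_size messages and hand them to the function as one event."""
        batch = []
        while self._queue and len(batch) < self._batch_size:
            batch.append(self._queue.popleft())
        if not batch:
            return None          # nothing to do; the function is not invoked
        return self._handler({"records": batch})


def handler(event):
    # Processing delegate: the function only sees the batch it was given.
    return [record.upper() for record in event["records"]]


queue = deque(["a", "b", "c"])
poller = QueuePoller(queue, handler)
print(poller.poll_once())  # ['A', 'B']
print(poller.poll_once())  # ['C']
print(poller.poll_once())  # None
```

Note that the function never talks to the queue directly; the polling, batching, and invocation are owned by the platform service, which is what makes the function its processing delegate.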

3. Serverless Platform -> Serverless Compute: Again, as “processing-delegates,” the platform serverless services are configurable to call FaaS. There are specific hooks and processing points that need a compute platform like FaaS. These platform services can invoke FaaS compute synchronously or asynchronously, depending on the service. This layer is divided into the following to provide traceability to the L0 view: Data, Integration, Processing, Management & Ops.

4. Legacy Enterprise -> Serverless: In a hybrid cloud architecture, the on-premises applications use the cloud SDK to leverage serverless services on the cloud. The enterprise and the cloud are integrated via VPN tunnels or dedicated fiber connections.

Not shown in this view is Serverless Platform -> Serverless Platform. In this trigger, serverless platform services invoke each other in synchronous or asynchronous mode. For example, a serverless log aggregation service can stream its logs to a serverless notification service or a serverless stream service. This pattern is omitted to keep the view simple, so the view includes only serverless compute triggers.
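The log aggregation example can be sketched as a direct platform-to-platform subscription with no function in between. `LogAggregator`, `NotificationService`, and the substring-based filter are hypothetical simplifications made for this example; real services typically configure such links declaratively.

```python
class NotificationService:
    """In-memory stand-in for a serverless notification service."""

    def __init__(self):
        self.sent = []

    def publish(self, message):
        self.sent.append(message)


class LogAggregator:
    """In-memory stand-in for a serverless log aggregation service."""

    def __init__(self):
        self._subscriptions = []

    def subscribe(self, pattern, target):
        """Forward every log line containing `pattern` to `target`."""
        self._subscriptions.append((pattern, target))

    def ingest(self, line):
        for pattern, target in self._subscriptions:
            if pattern in line:
                target.publish(line)   # platform service invoking platform service


alerts = NotificationService()
logs = LogAggregator()
logs.subscribe("ERROR", alerts)

logs.ingest("INFO request served")
logs.ingest("ERROR upstream timed out")
print(alerts.sent)  # ['ERROR upstream timed out']
```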

L1 – AWS

The L1 realization with AWS is provided here with the same event triggers, layers, and integrations. AWS may provide only a subset of the patterns depicted in the vanilla L1. The arrows in this view provide AWS-specific detail on events and their consumption.

Clearly, AWS supports most of the serverless patterns, services, and components.

Thank You,

#openupserverless
