Securing RESTful Endpoints

Enhance application security and protect data: learn about authentication, encryption, and testing, and prioritize security for a resilient digital environment.

Welcome back, developers and security enthusiasts! In the previous blog, “Implementing RESTful endpoints: a step-by-step guide,” we covered the essential foundations of API security, including authentication, authorization, and secure communication protocols.

Now, it's time to level up and delve into advanced API security. Building upon our previous discussions, this blog takes us deeper into protecting our applications and data from potential threats.

In this sequel, we will explore advanced techniques such as error handling, appropriate error codes and messages, error logging, and safeguarding against information leakage. Additionally, we'll uncover the intricacies of API security testing, including its importance, different types, recommended tools, and vulnerability assessments.

By expanding our knowledge and implementing these advanced security measures, we can fortify our APIs, maintain data integrity, and defend against unauthorized access.

Let's embark on this journey of advancing API security together. By staying informed and implementing best practices, we can create resilient applications that inspire trust among users and clients.

Importance of Securing RESTful Endpoints

Securing RESTful endpoints is of utmost importance in today's interconnected world. With the increasing reliance on APIs for data exchange and service integration, API security plays a vital role in protecting sensitive information and ensuring the integrity of services. RESTful endpoints face common threats such as unauthorized access, data breaches, injection attacks, and denial-of-service (DoS) attacks. These threats can have severe consequences, including compromised user data, financial loss, reputational damage, and even legal implications. By prioritizing API security, organizations can not only safeguard their systems and data but also build trust with users, meet regulatory requirements, and mitigate potential risks. It is essential to recognize the significance of API security and take proactive measures to establish a robust security framework for RESTful endpoints.

Overview of Security Measures for RESTful Endpoints

To ensure the security of RESTful endpoints, it is important to implement a range of security measures. These measures include:

Authentication: Implementing authentication mechanisms to verify the identity of clients accessing the API. This can include token-based authentication, basic authentication, or OAuth and OAuth2.

Authorization: Establishing access control mechanisms to determine what actions and resources clients are allowed to access. This can involve role-based access control (RBAC) or claims-based access control.

Data Encryption: Encrypting sensitive data transmitted over the network using secure communication protocols such as HTTPS. This helps prevent unauthorized access or eavesdropping.

Input Validation and Sanitization: Validating and sanitizing user input to prevent common vulnerabilities such as SQL injection or cross-site scripting (XSS) attacks.

Rate Limiting and Throttling: Implementing mechanisms to limit the number of requests a client can make within a certain timeframe. This helps prevent abuse, protect against Denial of Service (DoS) attacks, and maintain system performance.

Error Handling and Logging: Implementing robust error handling mechanisms to provide appropriate error messages without revealing sensitive information. Additionally, logging and monitoring errors can help identify and address security issues.

API Security Testing: Regularly conducting security testing, such as unit testing, integration testing, and penetration testing, to identify vulnerabilities and weaknesses in the API.

Staying Updated with Best Practices: Keeping up-to-date with the latest security threats, industry best practices, and guidelines to ensure the API's security posture remains strong.

Authentication Mechanisms

Authentication mechanisms are methods used to verify the identity of clients accessing RESTful endpoints. Common mechanisms include token-based authentication, basic authentication, and OAuth/OAuth2. In token-based authentication, the client exchanges its credentials for a token once and then presents that token with each subsequent request. Basic authentication sends a username and password, base64-encoded, in the Authorization request header. OAuth and OAuth2 are industry-standard protocols that allow delegated access to resources using access tokens. Each mechanism has its own advantages and use cases, offering different levels of security and flexibility for authenticating clients.

Types of Authentication Mechanisms

There are several types of authentication mechanisms commonly used for securing RESTful endpoints. Here are some of them, along with their pros and cons:

Token-Based Authentication:

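- Pros: Stateless, scalable, and suitable for distributed systems. Can be easily integrated with third-party authentication providers. Allows fine-grained control over access (for example, through claims or scopes carried in the token). Supports token expiry, and revocation can be added through short-lived tokens or a server-side denylist.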

- Cons: Requires additional steps for token generation and validation. Token storage and management can be complex. Tokens may need to be secured against theft or misuse.

Token-Based Authentication Example:

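```javascript
// Install required packages: npm install jsonwebtoken
const jwt = require('jsonwebtoken');

// In production, load the signing secret from configuration (e.g., an environment variable), never from source code
const secretKey = process.env.JWT_SECRET || 'your-secret-key';

// Generating a token that expires in one hour
const payload = { userId: 123456 };
const token = jwt.sign(payload, secretKey, { expiresIn: '1h' });

// Verifying and extracting the token payload; jwt.verify throws if the token is invalid or expired
try {
  const decoded = jwt.verify(token, secretKey);
  console.log(decoded); // { userId: 123456, iat: <issued-at>, exp: <expiry> }
} catch (err) {
  console.error('Invalid token:', err.message);
}
```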

Basic Authentication:

- Pros: Simple to implement and widely supported by clients and servers. Does not require session management on the server side.

- Cons: Credentials are only base64-encoded, not encrypted, so they are exposed to eavesdropping unless the connection uses HTTPS. Credentials are sent with every request, and there is no built-in way to revoke or expire them.
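The eavesdropping risk is easy to see in code. This sketch, using hypothetical helper names and only Node's built-in `Buffer`, builds a Basic `Authorization` header the way a client does and then decodes it, which is exactly what anyone who intercepts an unencrypted request can do.

```javascript
// Build an HTTP Basic Authorization header: "Basic " + base64("username:password")
function buildBasicAuthHeader(username, password) {
  const encoded = Buffer.from(`${username}:${password}`, 'utf8').toString('base64');
  return `Basic ${encoded}`;
}

// Reverse the encoding; no key or secret is needed, because base64 is not encryption
function decodeBasicAuthHeader(header) {
  const [scheme, encoded] = header.split(' ');
  if (scheme !== 'Basic' || !encoded) return null;
  const decoded = Buffer.from(encoded, 'base64').toString('utf8');
  const separator = decoded.indexOf(':'); // split on the first ':'; the password may contain ':'
  if (separator === -1) return null;
  return { name: decoded.slice(0, separator), pass: decoded.slice(separator + 1) };
}

const header = buildBasicAuthHeader('username', 'password');
console.log(header); // Basic dXNlcm5hbWU6cGFzc3dvcmQ=
console.log(decodeBasicAuthHeader(header)); // { name: 'username', pass: 'password' }
```

This is why Basic authentication should only ever be used over HTTPS.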

Basic Authentication Example:

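```javascript
// Install required packages: npm install express basic-auth
const express = require('express');
const basicAuth = require('basic-auth');

const app = express();

// Middleware for basic authentication.
// Demo credentials only: in practice, look up the user and compare against a stored password hash.
app.use((req, res, next) => {
  const credentials = basicAuth(req); // parses the Authorization header; undefined if missing or malformed

  if (!credentials || credentials.name !== 'username' || credentials.pass !== 'password') {
    // A 401 for Basic auth should tell the client which scheme to use
    res.set('WWW-Authenticate', 'Basic realm="api"');
    return res.status(401).send('Unauthorized');
  }

  next();
});

// Protected route
app.get('/api/protected', (req, res) => {
  res.send('Authenticated and authorized!');
});

app.listen(3000, () => {
  console.log('Server started on port 3000');
});
```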

OAuth and OAuth2:

- Pros: Delegated authorization allows users to grant limited access to their resources without sharing credentials. Supports single sign-on when combined with OpenID Connect (an identity layer built on OAuth 2.0) and allows integration with various identity providers. Provides fine-grained control over permissions through scopes.

- Cons: More complex to implement and requires understanding of the protocol. Adds overhead due to token exchange and validation. Requires managing client credentials securely.

It's important to consider the specific requirements of your application and choose the authentication mechanism that best aligns with your security needs, scalability requirements, and integration capabilities.

OAuth 2.0 in a Web Application Scenario Example:

Authorization Server Configuration:

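The authorization server is responsible for issuing access tokens and validating authorization requests. Here's an example implementation using express-oauth-server, an Express.js wrapper around the OAuth2 Server (oauth2-server) library for Node.js. The oauth2-server library on its own works with its own request and response objects rather than Express middleware, so the wrapper is used here to expose its handlers as middleware:

```javascript
// Install required packages: npm install express express-oauth-server
const express = require('express');
const OAuthServer = require('express-oauth-server');
const model = require('./oauth-model'); // Your custom model implementation

const app = express();

const oauth = new OAuthServer({
  model: model,
  accessTokenLifetime: 3600, // Token expiration time in seconds
});

// The token endpoint reads form-encoded request bodies (application/x-www-form-urlencoded)
app.use(express.urlencoded({ extended: false }));

// Authorization endpoint
app.get('/oauth/authorize', oauth.authorize());

// Token endpoint
app.post('/oauth/token', oauth.token());

app.listen(3000, () => {
  console.log('Server started on port 3000');
});
```

Protecting API Endpoints: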

Once the authorization server is configured, you can protect your API endpoints by requiring valid access tokens. Here's an example implementation using Express.js:

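```javascript
// Install required packages: npm install express express-oauth-server
const express = require('express');
const OAuthServer = require('express-oauth-server');
const model = require('./oauth-model'); // The same custom model, so issued tokens can be looked up

const app = express();
const oauth = new OAuthServer({ model: model });

// OAuth middleware for protecting routes: requires a valid bearer access token
app.use(oauth.authenticate());

// Protected route
app.get('/api/protected', (req, res) => {
  res.send('Authenticated and authorized!');
});

app.listen(3000, () => {
  console.log('Server started on port 3000');
});
```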

In the above example, the oauth.authenticate() middleware ensures that the request contains a valid access token. If the token is invalid or missing, the middleware responds with a 401 Unauthorized status.

Please note that this is a simplified example, and the actual implementation may vary depending on the OAuth 2.0 library and framework you are using. Additionally, you would need to implement the custom model (oauth-model.js) for managing clients, users, and access tokens according to your application's requirements.
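The authentication middleware hides the details of token validation. As a rough illustration of what such a check involves, here is a simplified sketch in plain JavaScript that reads a bearer token from the `Authorization` header, looks it up, and checks its expiry. The in-memory `tokenStore` and the `checkBearerToken` function are hypothetical stand-ins for the lookup your OAuth model performs; this is not the API of any OAuth library.

```javascript
// Hypothetical in-memory token store standing in for the OAuth model's token lookup
const tokenStore = new Map([
  ['valid-token', { userId: 123456, scope: 'read', expiresAt: Date.now() + 3600 * 1000 }],
]);

// Returns { status, body } so the logic can be exercised without a running server
function checkBearerToken(authorizationHeader, now = Date.now()) {
  // Expect a header of the form "Bearer <token>"
  const match = /^Bearer\s+(\S+)$/.exec(authorizationHeader || '');
  if (!match) {
    return { status: 401, body: { error: 'Missing or malformed bearer token' } };
  }
  const record = tokenStore.get(match[1]);
  if (!record || record.expiresAt <= now) {
    return { status: 401, body: { error: 'Invalid or expired access token' } };
  }
  // Token is valid: downstream handlers can use the user and scope it carries
  return { status: 200, body: { userId: record.userId, scope: record.scope } };
}

console.log(checkBearerToken('Bearer valid-token').status); // 200
console.log(checkBearerToken(undefined).status); // 401
```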

Authorization and Access Control

Authorization and access control are security mechanisms that determine what actions and resources a user or client is allowed to access within a system or application. These mechanisms ensure that only authorized individuals or entities can perform specific operations and access protected resources.

Authorization involves granting or denying permissions to users or clients based on their identity, roles, or other attributes. It establishes rules and policies that define what actions or operations are permitted for a given user or role. Authorization is typically enforced after authentication, once the user's identity has been verified.

Access control focuses on controlling access to specific resources, such as data, files, or functionalities, based on the granted authorization. It ensures that only authorized users can interact with or view particular resources within the system. Access control mechanisms can be role-based, where permissions are assigned to specific roles, or attribute-based, where access is determined by specific attributes or conditions.

Common techniques for implementing authorization and access control include:

- Role-based access control (RBAC): Users are assigned specific roles, and permissions are associated with these roles. Access is granted based on the user's role, regardless of their individual identity.

- Claims-based access control: Access is determined based on the attributes or claims associated with a user. Claims can include user roles, attributes, or any other relevant information used to make access control decisions.

- Access control lists (ACL): A list that specifies the access permissions granted to individual users or groups for specific resources.

- Fine-grained access control: Allows for granular control over individual resources or actions within a system, enabling precise access permissions for different users or roles.
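To make RBAC and claims-based checks concrete, here is a minimal sketch in plain JavaScript: a role-to-permission map (RBAC), a check that also compares a `tenantId` claim on the user against the resource (claims-based), and an Express-style middleware factory. All role names, permission strings, the `tenantId` claim, and the function names are hypothetical, and the middleware assumes an earlier authentication step has set `req.user`.

```javascript
// RBAC: permissions are attached to roles, not to individual users (hypothetical roles)
const rolePermissions = {
  admin: ['orders:read', 'orders:write', 'users:manage'],
  editor: ['orders:read', 'orders:write'],
  viewer: ['orders:read'],
};

// A user is allowed if any of their roles grants the permission
function hasPermission(user, permission) {
  return (user.roles || []).some((role) => (rolePermissions[role] || []).includes(permission));
}

// Claims-based check layered on top: the user's tenantId claim must match the resource
function canAccessOrder(user, order, permission) {
  return hasPermission(user, permission) && user.tenantId === order.tenantId;
}

// Express-style middleware factory; responds 403 when the authenticated user lacks the permission
function requirePermission(permission) {
  return (req, res, next) =>
    hasPermission(req.user || {}, permission) ? next() : res.status(403).send('Forbidden');
}

const alice = { id: 'alice', roles: ['editor'], tenantId: 't1' };
const bob = { id: 'bob', roles: ['viewer'], tenantId: 't2' };
const order = { id: 42, tenantId: 't1' };

console.log(canAccessOrder(alice, order, 'orders:write')); // true
console.log(canAccessOrder(bob, order, 'orders:read')); // false (different tenant)
console.log(hasPermission(bob, 'orders:write')); // false (viewer role lacks it)
```

In an Express app, a route might use it as `app.post('/api/orders', requirePermission('orders:write'), handler)`. Returning 403 rather than 401 reflects that the client is authenticated but not allowed to perform the action.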

Securing an API in Martini

There are times when you must secure your API in order to protect sensitive resources and services.

Create Users and Groups

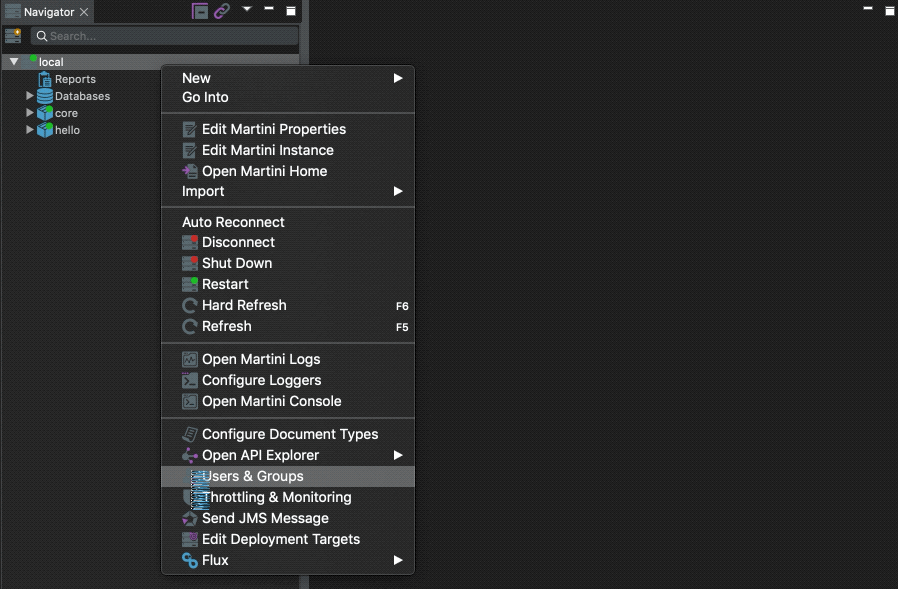

1. Open the Users and Groups dialog by right-clicking on your instance and then selecting Users and Groups from the context menu.

A screenshot in Martini showing how to create users and groups

2. Select the Users tab.

3. Click the green, '+'-labeled button.

4. Provide the new user's details in the form that appears, making sure the user is enabled.

5. Click Save.

6. In Martini Desktop, click the OK button to close the dialog once done.

Groups are added in a similar fashion but in the Groups tab.

Securing Data Transmission

Securing data transmission involves measures to protect data as it is sent over networks or communication channels. This includes using secure protocols like HTTPS, encrypting sensitive data, and using TLS/SSL certificates to authenticate servers. These measures prevent unauthorized access, interception, and tampering, ensuring data confidentiality and integrity in transit. Protecting against man-in-the-middle attacks is crucial; this is achieved through secure protocols, certificate validation, and secure key exchange. Overall, securing data transmission safeguards sensitive information, maintains privacy, and prevents unauthorized access or breaches.
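As a minimal sketch, assuming an Express application that may sit behind a TLS-terminating proxy, the middleware below refuses plain-HTTP requests and adds an HTTP Strict-Transport-Security (HSTS) header to HTTPS responses. The name `enforceHttps` and the one-year `max-age` are illustrative choices, not taken from the original article.

```javascript
// Express-style middleware (hypothetical name) that enforces HTTPS for an API.
// req.secure is true for TLS connections; behind a TLS-terminating proxy, Express
// only sets it correctly when the app is configured with app.set('trust proxy', ...).
function enforceHttps(req, res, next) {
  if (!req.secure) {
    // Reject instead of redirecting: any credentials in this request were already sent in plaintext
    return res.status(403).json({ error: 'HTTPS is required' });
  }
  // HSTS: tell browsers to use only HTTPS for this host for one year, including subdomains
  res.setHeader('Strict-Transport-Security', 'max-age=31536000; includeSubDomains');
  return next();
}

// Usage with Express:
// app.set('trust proxy', 1); // only if exactly one trusted proxy sits in front of the app
// app.use(enforceHttps);
```

Rejecting rather than redirecting is a deliberate choice for APIs: by the time a plain-HTTP request arrives, any token or password it carried has already crossed the network unencrypted, and a silent redirect would hide that mistake from the client developer.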

Overview of Secure Communication Protocols

Secure communication protocols ensure the confidentiality, integrity, and authenticity of data transmitted over networks or communication channels. They provide a secure framework for exchanging information and protect against unauthorized access or tampering.

Here are some commonly used secure communication protocols:

- HTTPS (HTTP Secure): HTTPS is an extension of the HTTP protocol that adds a layer of encryption using TLS (Transport Layer Security) or, in older deployments, its deprecated predecessor SSL (Secure Sockets Layer). It encrypts the data exchanged between the client and server, keeping it confidential and protected from tampering.

- SSL/TLS (Secure Sockets Layer/Transport Layer Security): SSL/TLS protocols establish a secure connection between the client and server. They provide encryption, data integrity, and server authentication, protecting against eavesdropping, tampering, and man-in-the-middle attacks. All versions of SSL are deprecated, as are TLS 1.0 and 1.1; current deployments should use TLS 1.2 or TLS 1.3.

- IPsec (Internet Protocol Security): IPsec is a protocol suite used for securing Internet Protocol (IP) communications. It provides authentication, encryption, and data integrity for IP packets, ensuring secure communication between network devices.

- SSH (Secure Shell): SSH is a cryptographic network protocol used for secure remote login and file transfer. It provides strong authentication, encrypted data transmission, and secure remote management of network devices.

- SFTP (SSH File Transfer Protocol): SFTP is a secure alternative to FTP (File Transfer Protocol). It uses SSH to provide secure file transfers over the network, encrypting data and protecting it from unauthorized access.

These secure communication protocols play a vital role in protecting data during transmission, and safeguarding sensitive information from interception, tampering, or unauthorized access. By utilizing these protocols, organizations can establish secure communication channels and ensure the confidentiality and integrity of their data.

TLS/SSL Certificates and Data Encryption

TLS/SSL Certificates: TLS (Transport Layer Security) and its predecessor SSL (Secure Sockets Layer) are cryptographic protocols that establish secure communication between a client and a server. TLS/SSL certificates play a crucial role in this process. Here's an overview of their purpose and functionality:

- Authentication: TLS/SSL certificates provide authentication by verifying the identity of the server. They are issued by trusted certificate authorities (CAs) and contain information such as the server's domain name, public key, and the CA's digital signature. The client can validate the server's identity and establish a secure connection.

- Encryption: TLS/SSL certificates enable encryption of data during transmission. During the TLS handshake, the client and server negotiate encryption algorithms and establish shared session keys, and the certificate's public key is used to authenticate that exchange. The data sent between them is then encrypted, making it unreadable to unauthorized parties.

Data Encryption: Data encryption is the process of converting plain text or unencrypted data into a format that is unintelligible to anyone without the proper decryption key. Here's a brief overview of data encryption:

- Confidentiality: Encryption ensures the confidentiality of data by scrambling it into ciphertext, which can only be decrypted with the appropriate key. It prevents unauthorized access to sensitive information, even if the data is intercepted or accessed by malicious actors.

- Algorithms and Keys: Encryption algorithms, such as the symmetric AES (Advanced Encryption Standard) or the asymmetric RSA (Rivest-Shamir-Adleman), perform the encryption and decryption operations. Data encryption relies on encryption keys, which must be generated and managed securely to ensure the confidentiality and integrity of the data.

- End-to-End Encryption: End-to-end encryption (E2EE) is a specific form of encryption where data is encrypted on the sender's side, transmitted in an encrypted form, and decrypted by the intended recipient. This ensures that the data remains confidential throughout the entire communication process, even if intermediaries or network nodes are compromised.

Data encryption, combined with TLS/SSL certificates, provides a strong foundation for secure communication. TLS/SSL certificates authenticate the server, while data encryption ensures the confidentiality and integrity of transmitted information, protecting it from unauthorized access and interception.
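TLS protects data only while it is in transit. When sensitive fields also need to be encrypted at the application level (for example, before they are stored), a sketch using AES-256-GCM from Node's built-in `crypto` module looks like this. GCM is an authenticated mode, so decryption also detects tampering. The `encrypt` and `decrypt` helpers are hypothetical names, and the key is generated in-process purely for illustration; a real system would obtain it from a key-management service.

```javascript
const crypto = require('crypto');

// Illustration only: a real key would come from a key-management service, not be generated per process
const encryptionKey = crypto.randomBytes(32); // 256-bit key for AES-256

// Encrypt a UTF-8 string with AES-256-GCM; returns everything needed for decryption
function encrypt(plaintext, key) {
  const iv = crypto.randomBytes(12); // 96-bit nonce; must never be reused with the same key
  const cipher = crypto.createCipheriv('aes-256-gcm', key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  const authTag = cipher.getAuthTag(); // authentication tag used to detect tampering
  return { iv, ciphertext, authTag };
}

// Decrypt and verify; decipher.final() throws if the ciphertext, IV, tag, or key is wrong
function decrypt({ iv, ciphertext, authTag }, key) {
  const decipher = crypto.createDecipheriv('aes-256-gcm', key, iv);
  decipher.setAuthTag(authTag);
  const plaintext = Buffer.concat([decipher.update(ciphertext), decipher.final()]);
  return plaintext.toString('utf8');
}

const sealed = encrypt('account=12345678', encryptionKey);
console.log(decrypt(sealed, encryptionKey)); // account=12345678
```

The IV and authentication tag are not secret and are usually stored alongside the ciphertext; only the key must be protected.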

Input Validation and Sanitization

Input validation and sanitization are techniques used to ensure the integrity and security of user input in an application. They help prevent vulnerabilities and protect against malicious attacks that could exploit the system through manipulated or malicious input. Here's a brief explanation of each:

Input Validation

Input validation is the process of verifying whether user input meets certain criteria, such as format, length, and type, before it is processed or stored by the application. The goal is to ensure that input adheres to expected patterns or constraints, reducing the risk of unexpected behavior or security issues.

Common validation techniques include:

- Data type validation: Checking that the input matches the expected data type (e.g., numeric, string, date).

- Length and format validation: Verifying that the input adheres to specific length or format requirements (e.g., minimum/maximum length, valid email address format).

- Range and boundary validation: Validating that the input falls within acceptable ranges or boundaries (e.g., age, numeric range).

By validating input, developers can detect and handle potential errors or anomalies early in the application flow, reducing the likelihood of security vulnerabilities, data corruption, or system failures.
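Here is a minimal sketch of the three validation techniques above, applied to a hypothetical create-user request body. The field names, limits, and the deliberately simple email pattern are illustrative assumptions rather than recommended values.

```javascript
// Deliberately simple email format check (illustrative, not RFC-complete)
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// Returns a list of validation errors; an empty list means the body is acceptable
function validateCreateUser(body) {
  if (typeof body !== 'object' || body === null) {
    return ['Request body must be a JSON object'];
  }
  const errors = [];

  // Data type and length validation
  if (typeof body.username !== 'string' || body.username.length < 3 || body.username.length > 30) {
    errors.push('username must be a string of 3 to 30 characters');
  } else if (!/^[A-Za-z0-9_]+$/.test(body.username)) {
    // Format validation: allow only letters, digits, and underscores
    errors.push('username may contain only letters, digits, and underscores');
  }

  // Format validation
  if (typeof body.email !== 'string' || !EMAIL_PATTERN.test(body.email)) {
    errors.push('email must be a valid email address');
  }

  // Range and boundary validation
  if (!Number.isInteger(body.age) || body.age < 13 || body.age > 120) {
    errors.push('age must be an integer between 13 and 120');
  }

  return errors;
}

console.log(validateCreateUser({ username: 'jane_doe', email: 'jane@example.com', age: 34 })); // []
console.log(validateCreateUser({ username: 'x', email: 'nope', age: '34' }).length); // 3
```

In an Express handler, a non-empty result would typically be returned as a 400 Bad Request. Checking input against an allow-list of expected shapes like this tends to be more robust than trying to enumerate every kind of bad input.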

Input Sanitization

Input sanitization is the process of cleansing or filtering user input to remove or neutralize potentially harmful or unwanted characters, code, or data. This helps prevent security vulnerabilities, such as code injection attacks (e.g., SQL injection, cross-site scripting), by eliminating or neutralizing malicious input.

Common sanitization techniques include:

- HTML/CSS/JavaScript escaping: Converting special characters into their equivalent HTML entities or codes to prevent injection attacks.

- Input filtering: Removing or rejecting input containing certain characters or patterns known to be associated with security risks.

- Parameterized queries or prepared statements: Using parameterized queries in database interactions to prevent SQL injection attacks.

Input sanitization is an important defense mechanism to protect against various security threats and maintain the integrity and security of an application's data and functionality.
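A short sketch of HTML escaping follows, assuming the value will be placed into an HTML response; `escapeHtml` is a hypothetical helper name. For database access, the closing comment shows the parameterized-query style of the node-postgres (`pg`) driver, which sends user input separately from the SQL text instead of splicing it in.

```javascript
// Characters that are significant in HTML, mapped to their entity equivalents
const HTML_ESCAPES = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' };

// Escape a value before embedding it in HTML element content or a quoted attribute.
// A single regex pass replaces each special character exactly once.
function escapeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

console.log(escapeHtml('<script>alert("x")</script>'));
// &lt;script&gt;alert(&quot;x&quot;)&lt;/script&gt;

// For SQL, prefer parameterized queries over escaping. With node-postgres (pg), for example:
// const result = await client.query('SELECT * FROM users WHERE email = $1', [email]);
```

Escaping is context-specific: this helper suits HTML text and quoted attribute values, while JavaScript, CSS, and URL contexts need their own encoders.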

By combining thorough input validation and effective sanitization techniques, developers can significantly enhance the security and reliability of their applications, minimizing the risk of vulnerabilities and attacks stemming from user input.

Rate Limiting and Throttling

Rate limiting and throttling are techniques used to control and manage the rate or frequency at which clients or users can access resources or make requests to an application or API. They help prevent abuse, protect system resources, and ensure fair and efficient usage. Here's a brief introduction to each:

Rate Limiting

Rate limiting sets limits on the number of requests or operations that a client or user can make within a specific time period. It restricts the rate at which requests can be made to prevent excessive usage and abuse and to keep the system from being overwhelmed. Rate limits are typically defined as a maximum number of requests allowed per second, minute, or hour. When a client exceeds the limit, the API typically responds with HTTP 429 Too Many Requests (often with a Retry-After header) or temporarily blocks further requests. Rate limiting helps ensure system stability, maintain quality of service, and protect against denial-of-service (DoS) attacks.
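A fixed-window counter is one of the simplest ways to implement the per-client limits described above. This sketch keeps counters in memory for a single process; the class and middleware names, the choice of `req.ip` as the client key, and the `Retry-After` value (the full window length, a conservative upper bound) are illustrative assumptions.

```javascript
// In-memory fixed-window rate limiter (single process)
class FixedWindowRateLimiter {
  constructor(limit, windowMs) {
    this.limit = limit;       // maximum requests allowed per window
    this.windowMs = windowMs; // window length in milliseconds
    this.windows = new Map(); // clientKey -> { start, count }
  }

  // Returns true if the request is allowed, false if the client should receive HTTP 429
  allow(clientKey, now = Date.now()) {
    const current = this.windows.get(clientKey);
    if (!current || now - current.start >= this.windowMs) {
      // First request, or the previous window has ended: start a new window
      this.windows.set(clientKey, { start: now, count: 1 });
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false;
  }
}

// Express-style middleware using the limiter (req.ip is provided by Express)
function rateLimit(limiter) {
  return (req, res, next) => {
    if (limiter.allow(req.ip)) return next();
    res.set('Retry-After', String(Math.ceil(limiter.windowMs / 1000)));
    return res.status(429).send('Too Many Requests');
  };
}

// Example: 100 requests per minute per client
const limiter = new FixedWindowRateLimiter(100, 60 * 1000);
// app.use(rateLimit(limiter));
```

Fixed windows let a client send up to twice the limit around a window boundary; sliding-window or token-bucket algorithms smooth this out. The in-memory `Map` also grows with the number of clients and is not shared across instances, so multi-instance deployments commonly keep counters in a shared store such as Redis.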

Throttling

Throttling is similar to rate limiting but focuses on regulating the rate of data flow or processing. It limits the speed or frequency at which data or operations can be processed, preventing overload or congestion in the system. Throttling can be applied to various resources, such as network bandwidth, API calls, or server processing capacity. By imposing limits on the rate of resource consumption, throttling helps maintain system stability, optimize performance, and prevent resource exhaustion.
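One common way to implement throttling is a token bucket: tokens refill at a steady rate up to a fixed capacity, and each operation must consume tokens before it proceeds, so short bursts are allowed but sustained throughput cannot exceed the refill rate. The sketch below is an in-memory, single-process illustration; the class name, capacity, and rates are assumptions, and the clock can be passed in explicitly so the behavior is easy to test.

```javascript
// In-memory token bucket (single process); times are in milliseconds
class TokenBucket {
  constructor(capacity, refillPerSecond, now = Date.now()) {
    this.capacity = capacity;               // largest burst allowed
    this.refillPerSecond = refillPerSecond; // sustained rate once the burst is used up
    this.tokens = capacity;                 // start with a full bucket
    this.lastRefill = now;
  }

  // Add the tokens earned since the last refill, never exceeding capacity
  refill(now) {
    const elapsedSeconds = Math.max(0, now - this.lastRefill) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond);
    this.lastRefill = now;
  }

  // Consume `cost` tokens if available; false means the work should wait or be rejected
  tryConsume(cost = 1, now = Date.now()) {
    this.refill(now);
    if (this.tokens >= cost) {
      this.tokens -= cost;
      return true;
    }
    return false;
  }
}

// Example: allow bursts of up to 5 operations, then at most 2 per second on average
const bucket = new TokenBucket(5, 2);
console.log(bucket.tryConsume()); // true (bucket starts full)
```

The `cost` parameter makes the same structure usable for bandwidth throttling: charge one token per byte (or kilobyte) transferred, and size `refillPerSecond` to the allowed throughput.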

Rate limiting and throttling are effective mechanisms for managing resource usage, protecting against abuse or overload, and ensuring fair access to system resources. They are commonly employed in web applications, APIs, and other systems where controlling the rate of client or user interactions is necessary to maintain system health and optimal performance.

Understanding Rate Limiting and Throttling Use Cases

Rate limiting and throttling have various use cases and benefits, including:

Rate Limiting Use Cases

- API Protection: Rate limiting helps protect APIs from abusive or excessive usage, preventing unauthorized or malicious clients from overwhelming the system with a high volume of requests.

- Preventing DoS Attacks: By setting appropriate rate limits, rate limiting can mitigate the risk of denial-of-service (DoS) attacks where an attacker attempts to exhaust system resources by sending a large number of requests.

- Ensuring Fair Usage: Rate limiting ensures fair access to resources by preventing any single client or user from monopolizing system resources, thereby promoting equitable usage among all users.

- API Monetization: Rate limiting allows organizations to implement tiered pricing or subscription models, where different rate limits are assigned to different user tiers or subscription levels.

Throttling Use Cases

- Network Bandwidth Management: Throttling can be used to control the rate of data transfer over a network, preventing congestion and optimizing bandwidth usage.

- API Performance Optimization: Throttling API calls helps manage server load and resource consumption, ensuring optimal performance and preventing degradation due to excessive requests.

- Preventing Resource Exhaustion: Throttling can limit the rate at which resource-intensive operations, such as file uploads or database queries, are processed, preventing resource exhaustion and maintaining system stability.

- Compliance and Regulatory Requirements: Throttling can be employed to adhere to regulatory requirements or agreements, such as data transfer limits imposed by service providers or compliance standards.

By applying rate limiting and throttling techniques, organizations can protect resources, maintain system stability, optimize performance, and ensure fair and efficient usage of their applications or APIs. These techniques offer control over resource utilization and contribute to a secure and reliable user experience.

Throttling Rules in Martini

Martini uses throttling rules to determine which HTTP endpoints to throttle and how many requests the server will process for them.

Viewing Throttling Rules

To view an instance's rules, open the Throttling and Monitoring Rules dialog: right-click on your instance and click Throttling and Monetization in the context menu. Then select the Throttling Rules tab to view existing throttling rules.

Screenshot in Martini that shows Throttling rules

Creating a New Throttling Rule

To create a new throttling rule using the Throttling and Monitoring Rules dialog:

- Go to the Throttling Rules tab.

- Click the add button.

- Populate the form that appears with the configuration you want for your rule.

- Click Save.

Screenshot in Martini that shows how to create a new throttling rule

Removing a Throttling Rule

To delete an existing throttling rule using the Throttling and Monitoring Rules dialog:

- Go to the Throttling Rules tab.

- Select the rule you want to remove from the list of existing rules.

- Click the delete button located below the edit button.

- Confirm your action.

Screenshot in Martini that shows how to remove a throttling rule

Error Handling and Logging

Error handling and logging are essential aspects of software development and system administration. They play a crucial role in ensuring the stability, reliability, and security of an application or system. Here's a brief introduction to each:

Error Handling

Error handling is the process of detecting, capturing, and appropriately managing errors or exceptions that occur during the execution of an application. It involves identifying potential error scenarios, defining strategies to handle them, and implementing mechanisms to gracefully recover from errors. Effective error handling improves the user experience, prevents application crashes or unexpected behavior, and helps maintain data integrity. It typically involves techniques such as try-catch blocks, exception handling, and error messages that provide meaningful information to users and developers for troubleshooting and debugging.
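The introduction mentions appropriate error codes and messages and safeguarding against information leakage. A minimal sketch of both, assuming an Express application: an error-handling middleware that logs the full error server-side under a random correlation ID and returns only a status code, a safe message, and that ID to the client. `HttpError` and `errorHandler` are hypothetical names; the `status`/`expose` properties follow the convention used by the http-errors package.

```javascript
const crypto = require('crypto');
const http = require('http');

// Hypothetical error type for failures whose message is safe to show to clients (e.g. 400, 404)
class HttpError extends Error {
  constructor(status, publicMessage) {
    super(publicMessage);
    this.status = status;
    this.expose = true;
  }
}

// Express recognizes error-handling middleware by its four parameters; register it after all routes
function errorHandler(err, req, res, next) {
  if (res.headersSent) return next(err); // let Express close a response that has already started

  const errorId = crypto.randomUUID(); // correlation ID shared by the log entry and the response
  const status = Number.isInteger(err.status) && err.status >= 400 && err.status < 600 ? err.status : 500;

  // Full details (message and stack trace) go to the server-side log only
  console.error(JSON.stringify({ errorId, status, method: req.method, path: req.path, message: err.message, stack: err.stack }));

  // Clients see the generic status text unless the error was explicitly marked as safe to expose
  const message = err.expose ? err.message : http.STATUS_CODES[status];
  res.status(status).json({ error: message, errorId });
}

// Usage with Express:
// app.get('/orders/:id', (req, res) => { throw new HttpError(404, 'Order not found'); });
// app.use(errorHandler);
```

A user reporting a problem can quote the `errorId`, which lets support staff find the detailed log entry without the API ever revealing stack traces, SQL, or configuration details.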

Logging

Logging involves recording relevant information about events, actions, and errors that occur within an application or system. It captures data such as timestamps, error messages, stack traces, user interactions, or other relevant details. Logging serves multiple purposes, including debugging, monitoring, auditing, and analysis. It helps developers identify and diagnose issues, track the flow of execution, monitor performance and usage patterns, detect security breaches, and ensure compliance with regulatory requirements. Logging can be configured to store logs locally, send them to centralized log management systems, or integrate with monitoring and alerting tools.
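Logs can themselves leak sensitive data if request bodies or headers are written verbatim. Below is a sketch of structured JSON logging that redacts known-sensitive fields before writing, assuming the list of sensitive key names shown (an illustrative choice to adapt to your data). `logEvent` and `redact` are hypothetical helpers; a production system would typically use a logging library and ship these lines to a central log store.

```javascript
// Key names (compared case-insensitively) whose values must never reach the logs
const SENSITIVE_KEYS = new Set(['password', 'token', 'authorization', 'apikey', 'creditcard']);

function isPlainObject(value) {
  return value !== null && typeof value === 'object' && Object.getPrototypeOf(value) === Object.prototype;
}

// Recursively copy a value, replacing sensitive fields with a placeholder
function redact(value) {
  if (Array.isArray(value)) return value.map(redact);
  if (isPlainObject(value)) {
    return Object.fromEntries(
      Object.entries(value).map(([key, v]) =>
        SENSITIVE_KEYS.has(key.toLowerCase()) ? [key, '[REDACTED]'] : [key, redact(v)]
      )
    );
  }
  return value;
}

// Write one JSON object per line so log tools can parse, search, and alert on fields
function logEvent(level, message, context = {}) {
  const line = JSON.stringify({
    timestamp: new Date().toISOString(),
    level,
    message,
    context: redact(context),
  });
  if (level === 'error') {
    console.error(line);
  } else {
    console.log(line);
  }
  return line;
}

logEvent('warn', 'Failed login attempt', { username: 'jane', password: 'hunter2', ip: '203.0.113.7' });
// {"timestamp":"...","level":"warn","message":"Failed login attempt","context":{"username":"jane","password":"[REDACTED]","ip":"203.0.113.7"}}
```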

By implementing robust error handling and logging practices, developers and system administrators can effectively detect, diagnose, and resolve issues that arise during application execution. These practices contribute to better software quality, faster troubleshooting, improved system reliability, and enhanced security posture.

API Security Testing

API security testing is the process of assessing the security vulnerabilities and weaknesses in an application programming interface (API). It involves evaluating the API's implementation, configuration, and interaction with external systems to identify potential security flaws that could be exploited by attackers. The primary objective of API security testing is to ensure the confidentiality, integrity, and availability of data and resources exposed through the API.

Importance of API Security Testing

API security testing is vital for ensuring the integrity, confidentiality, and availability of APIs. It identifies vulnerabilities, such as injection attacks and data exposure, ensuring they are addressed proactively. By validating access controls, authentication, and authorization mechanisms, it prevents unauthorized access and data breaches. API security testing also assesses secure communication protocols and certificates, protecting data during transmission. Overall, it plays a crucial role in mitigating risks, protecting sensitive data, and maintaining user trust.

Overview of Testing Types and Tools

Here's an overview of different testing types and some popular tools used for API testing:

Unit Testing: Testing individual components or units of code in isolation to ensure they function correctly.

Tools: JUnit, NUnit, pytest, Mocha, Karma

Integration Testing: Testing the integration between multiple components or modules to verify their interactions and functionality as a whole.

Tools: Postman, SoapUI, RestAssured, Karate, JMeter

Functional Testing: Testing the functional requirements of an API, ensuring it behaves as expected and meets the specified criteria.

Tools: Postman, SoapUI, RestAssured, Karate, Jest

Security Testing: Assessing the API's security posture and identifying vulnerabilities or weaknesses that could be exploited by attackers.

Tools: OWASP ZAP, Burp Suite, Nessus, SonarQube

Performance Testing: Evaluating the performance and scalability of the API under expected and peak load conditions.

Tools: JMeter, Gatling, LoadRunner, Artillery, Apache Bench

Load Testing: Testing the API's behavior and performance under a high volume of concurrent requests to assess its capacity and scalability.

Tools: JMeter, Gatling, LoadRunner, Artillery, Apache Bench

Stress Testing: Evaluating the API's stability and resilience by subjecting it to extreme or overload conditions.

Tools: JMeter, Gatling, LoadRunner, Artillery, Apache Bench

Contract Testing: Testing the contracts or agreements between API providers and consumers to ensure compatibility and consistency.

Tools: Pact, Spring Cloud Contract, Postman

Mocking and Virtualization: Creating mock or virtualized API endpoints for testing purposes, simulating responses and behavior.

Tools: WireMock, Mountebank, Hoverfly, Postman Mock Server

Monitoring and Observability: Continuously monitoring API performance, availability, and metrics to identify issues and ensure system health.

Tools: Prometheus, Grafana, Datadog, New Relic, ELK Stack (Elasticsearch, Logstash, Kibana)

Note that these are just some examples of testing types and tools, and the choice of tools may depend on factors such as programming languages, frameworks, and specific testing requirements.

Keeping Up With Security Best Practices

Keeping up with security best practices involves staying updated with the latest security threats and vulnerabilities while following industry guidelines and recommendations. It also involves engaging with the developer community and security experts. Here's a brief explanation of each aspect:

Staying Updated With Security Threats and Following Industry Guidelines

To maintain a secure environment, it is crucial to stay informed about the latest security threats, vulnerabilities, and attack techniques. This can be achieved by regularly monitoring security advisories, subscribing to security mailing lists, and following reputable security blogs or news sources. Additionally, organizations should adhere to industry-specific security guidelines, standards, and best practices, such as OWASP (Open Web Application Security Project) for web applications or CIS (Center for Internet Security) benchmarks for infrastructure.

By staying up to date with security threats and following industry guidelines, organizations can proactively address emerging risks, implement appropriate security controls, and ensure their systems are adequately protected against known vulnerabilities.

Engaging With the Developer Community and Security Experts

Engaging with the developer community and security experts provides valuable opportunities to learn from others, share knowledge, and gain insights into emerging security trends and techniques. Participating in forums, attending conferences, and joining relevant communities or user groups allows developers and security professionals to exchange ideas, discuss challenges, and stay abreast of the evolving security landscape.

Collaborating with security experts can provide additional guidance, validation, and specialized knowledge. Organizations can seek external security assessments, penetration testing, or security consulting services to identify vulnerabilities, validate their security measures, and receive expert recommendations tailored to their specific context.

By actively engaging with the developer community and security experts, organizations can leverage collective knowledge and expertise, enhance their security practices, and continuously improve their ability to mitigate security risks.

Keeping up with security best practices requires a proactive approach of staying informed, adhering to industry guidelines, and fostering collaboration and knowledge sharing within the security community. This helps organizations stay ahead of potential threats, maintain a robust security posture, and protect their systems and data effectively.

Prioritizing Security for Stronger Application and Data Protection

Securing our applications, systems, and data is of utmost importance in today's digital landscape. We have explored various aspects of security, ranging from securing RESTful endpoints and implementing authentication mechanisms to ensuring data transmission integrity and performing API security testing. By following these best practices, we can significantly enhance the security posture of our applications and protect against potential vulnerabilities and attacks.

However, it's important to note that the field of security is constantly evolving. New threats and attack vectors emerge regularly, making it crucial for us to stay vigilant and continuously update our knowledge. By staying informed about the latest security threats, following industry guidelines, and engaging with the developer community and security experts, we can deepen our understanding of security practices and better protect our systems.

We encourage you, our readers, to continue exploring and learning about security best practices. Stay updated with the latest security news, participate in relevant forums and communities, and seek opportunities for professional development. By investing in your security knowledge, you empower yourself to build secure and resilient applications, safeguard sensitive data, and contribute to a safer digital ecosystem.

Remember, security is a shared responsibility. Together, let's continue to prioritize security, learn from each other, and stay one step ahead of potential threats.

Opinions expressed by DZone contributors are their own.
