Secure Terraform Delivery Pipeline – Best Practices

As Terraform is leading the pack in Infrastructure-As-Code solutions, it's important to know why and how to secure your Terraform pipeline.

With the beginning of the cloud era, the need for automation of cloud infrastructure has become essential. Although still very young (version 0.12), Terraform has already become the leading solution in the field of Infrastructure-as-Code. A completely new tool in an emerging area, working in a new programming model – this brings a lot of questions and doubts, especially when handling business-essential cloud infrastructure.

At GFT, we face challenges of delivering Terraform deployments at scale: on top of all major cloud providers, supporting large organizations in the highly regulated environment of financial services, with multiple teams working in environments in multiple regions around the world. Automation of Terraform delivery while ensuring proper security and mitigation of common risks and errors is one of the main topics across our DevOps teams.

Why Is a Secure Terraform Pipeline Needed?

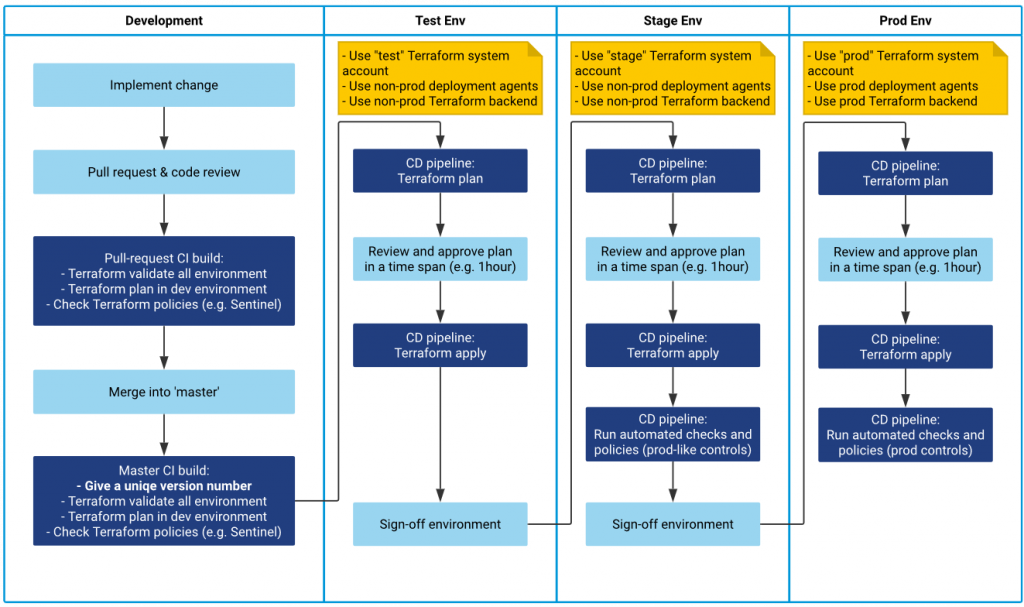

The goal is to create a process that allows a user to introduce changes into a cloud environment without having explicit permissions for manual actions. The process is as follows:

- A change is merged through a pull request only after the required reviewers have approved it. There is no other way to introduce the change.

- The change is deployed to a test environment. Before that, the Terraform plan is reviewed manually and approved.

- The change needs to be tested/approved in a test environment.

- The Terraform plan is approved for the staging environment. The change is exactly the same as in the test environment (e.g. the same revision).

- Terraform changes are applied to staging using a designated Terraform system account. This account cannot be used anywhere other than in this step of the process.

- Follow the same procedure to promote changes from staging to the production environment.

Non-Functional Requirements

Environments

- Environments (dev/uat/stage/prod) have a proper level of separation ensured:

  - Different system accounts are used for Terraform in these environments. Each Terraform system account has permissions only for its own environment.

  - Network connectivity is limited between resources across different environments.

- (Optional) Only a designated agent or set of agents configured in a special virtual network is permitted to modify the infrastructure (i.e. run Terraform) and access sensitive resources (e.g. Terraform backend, key vaults, etc.). It is not possible to release using a non-prod build agent.

- There is a way to ensure that Terraform configuration is as similar as possible between environments (i.e. it should not be possible to omit an entire module in PROD that exists in UAT).

- Terraform backend in higher environments (e.g. UAT) is not accessible from local machines (network + RBAC limitation). It can be accessed only from build machines and optionally from designated bastion hosts.

System Accounts for Terraform

- Terraform runs with a system account rather than a user account when possible.

- Different system accounts are used for:

  - Terraform (a system user that modifies the infrastructure),

  - Kubernetes (a system user that is used by Kubernetes to create required resources, e.g. load balancers, or to download Docker images from the repo),

  - Runtime application components (as compared to build-time or release-time).

- System accounts that are permitted to apply Terraform changes can be used only in designated CD pipelines. It must not be possible to use a production Terraform system account in a newly created pipeline without special permission.

- (Optional) Access to use the Terraform system account is granted "just-in-time" for the release. Alternatively, the system account is granted permissions only for the time of deployment.

- System accounts in higher environments have permissions limited to only what is required in order to perform actions.

  - Limit permissions to only the types of resources that are used.

  - Remove permissions for deleting critical resources (e.g. databases, storage) to avoid automated re-creation of these resources and losing data. When such a deletion is genuinely required, special permissions should be granted "just-in-time."
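As a rough illustration of removing delete permissions, the sketch below attaches an explicit deny to the role used by a Terraform system account. It assumes AWS and the AWS provider; the role name variable and the policy name are hypothetical, and the list of denied actions is only a starting point that you would adapt to the resources you actually use.

```hcl
# Hypothetical input: the IAM role assumed by the Terraform system account.
variable "terraform_role_name" {
  type = string
}

# An explicit "Deny" wins over any "Allow", so even a broad permission set
# cannot delete these stateful resources.
data "aws_iam_policy_document" "deny_destructive" {
  statement {
    sid    = "DenyDeletionOfStatefulResources"
    effect = "Deny"
    actions = [
      "rds:DeleteDBInstance",
      "rds:DeleteDBCluster",
      "s3:DeleteBucket",
      "dynamodb:DeleteTable",
    ]
    resources = ["*"]
  }
}

resource "aws_iam_role_policy" "deny_destructive" {
  name   = "deny-destructive-actions" # assumed name
  role   = var.terraform_role_name
  policy = data.aws_iam_policy_document.deny_destructive.json
}
```

With a policy like this in place, a plan that tries to replace a database fails at apply time instead of silently destroying data. A "just-in-time" exception could then be a separate, temporary change to this policy.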

Process

- A change to a higher environment can be deployed only if it was previously tested in a lower environment. There is a method to ensure that this is exactly the same Git revision tested. The change can only be introduced with a pull request with a required review process.

- Applying Terraform changes is allowed only after a manual review and approval of the Terraform plan in each environment.

Implementing a Secure Terraform Pipeline

Take a look at this external documentation to start setting up Terraform in CI/CD pipelines:

- Running Terraform in Automation

- Terraform Cloud

- Terraform Enterprise

- How to Move from Semi-Automation to Infrastructure as Code

- How to Move from Infrastructure as Code to Collaborative Infrastructure as Code

Terraform Backends

Having a shared Terraform backend is the first step to build a pipeline. A Terraform backend is a key component that handles shared state storage, management, as well as locking, in order to prevent infrastructure modification by multiple Terraform processes.

Some initial documentation:

- Terraform Backend Configuration

- backend providers list

- AWS S3

- GCP cloud storage

- Azure storage account

- Remote backend for Terraform Cloud/Enterprise

Make sure that the backend infrastructure has enough protection. State files will contain all sensitive information that goes through Terraform (keys, secrets, generated passwords etc.).

- This will most likely be AWS S3+DynamoDB, Google Cloud Storage, or Azure Storage Account.

- Separate infrastructure (network + RBAC) of production and non-prod backends.

- Plan to disable access to state files (network access and RBAC) from outside of a designated network.

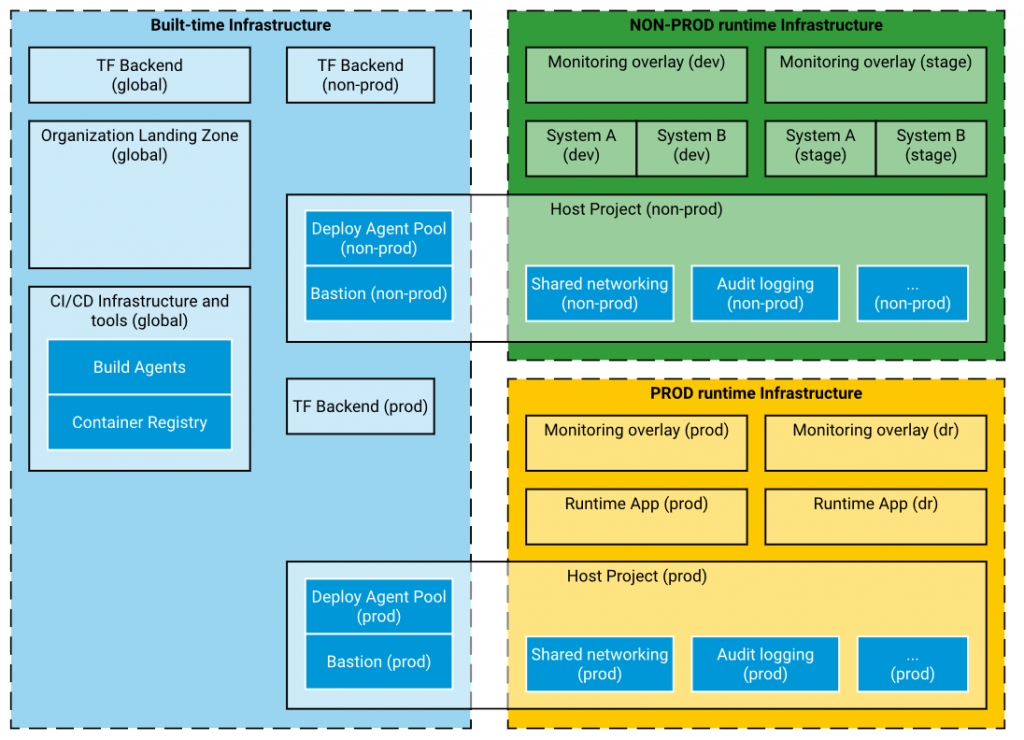

- Do not keep Terraform backend infrastructure with the run-time environment. Use separate account/project/subscription etc.

- Enable object versioning/soft delete options on your Terraform backends to avoid losing changes and state-files, and in order to maintain Terraform state history.
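The points above could look roughly like this in a bootstrap project for an S3+DynamoDB backend. This is a minimal sketch, assuming AWS provider version 4 or later (where versioning is a separate resource); the bucket and table names are placeholders.

```hcl
# State bucket, ideally created in a dedicated account for backends.
resource "aws_s3_bucket" "tfstate" {
  bucket = "example-tfstate-prod" # placeholder name
}

# Keep every version of the state file so history can be recovered.
resource "aws_s3_bucket_versioning" "tfstate" {
  bucket = aws_s3_bucket.tfstate.id

  versioning_configuration {
    status = "Enabled"
  }
}

# State files contain secrets, so block any form of public access.
resource "aws_s3_bucket_public_access_block" "tfstate" {
  bucket                  = aws_s3_bucket.tfstate.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Lock table used by the S3 backend to prevent concurrent runs.
resource "aws_dynamodb_table" "tflock" {
  name         = "example-tflock-prod" # placeholder name
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Network and RBAC restrictions (for example, a bucket policy limited to a designated VPC endpoint) are left out here because they depend heavily on your landing zone.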

In some special cases, manual access to Terraform state files will be required. Things like refactoring, breaking changes or fixing defects will require operations personnel to run Terraform state operations. For such occasions, plan extraordinary controlled access to the Terraform state using a bastion host, VPN, etc.

When using Terraform Cloud/Enterprise with a remote backend, the tool will handle requirements for state storage.

Divide Into Multiple Projects

Naturally, Terraform allows you to divide the structure into modules. However, you should consider dividing your entire infrastructure into separate projects. A "Terraform project" in this description is a single piece of infrastructure that can be introduced in many environments, usually with a single pipeline.

Terraform projects will usually match cloud architectural patterns like Shared VPC, landing zone (Azure and AWS), hub-and-spoke network topology. There are many patterns in AWS Well-Architected Framework, Azure Cloud Adoption Framework, Architecture Center or Google Cloud Solutions.

Here are some samples:

Terraform Bootstrap

This is needed when Terraform remote state-files are stored in the cloud. This is going to be a simple project that will create the infrastructure required for the backends of other projects. In general, avoid stateless projects. But this will be one of them (the old chicken and egg problem).

Landing Zone

Have a separate project (or projects) to set up the presence in the cloud – a network or a VPN connection. Building a landing zone is a separate topic. See, for example, Tranquility Base.

Shared Build-Time Infrastructure

A piece of company infrastructure, usually global, that handles build-time operations. For example:

- Build agent pools

- Container registry (or registries)

- Core key vaults storing company certificates

- Global DNS configurations

Host Runtime Infrastructure

Usually, runtime environments have some prerequisites and pieces of infrastructure that might be shared between prod and non-prod environments, such as bastion hosts, DNS, key vaults. This is also a good place to configure deployment agent pools separate for production and non-prod environments (you may not need separate environments for dev1, dev2, uat1, uat2, etc.).

Runtime Environments

Naturally, this is the infrastructure under the applications and services serving the business. Be sure that there is an environment to test Terraform scripts, not necessarily the same that the application is tested in, in order to avoid interrupting the QA team’s work when applying potentially imperfect Terraform configurations. Moreover, be prepared to divide runtime environments across teams, services, departments. It might be impossible to have a single project with the whole "company production" environment.

Now let’s take a look at some general situations in which it is worth dividing your infrastructure into projects.

Use Different System Accounts for Different Security Levels

Have a separate project when you need to use a different Terraform system account for pieces of infrastructure. Otherwise, you’ll have to give very wide permissions for a single system account. Examples:

- pieces of infrastructure across multiple projects or organizational units,

- build-time infrastructure vs runtime infrastructure,

- separate systems,

- shared infrastructure vs single system/service,

- serving different regions.

Have a Different Set of Environments

When you identify that for one piece of infrastructure you only need "prod" and "non-prod", and for the other part you will have "dev", "uat", "stage" and "prod", then this is a sign that these pieces of infrastructure should be separate.

Build Layers and Overlays

If the Terraform configuration in one project grows too big, it might become challenging to handle it. Constructing Terraform plans will become slower and refactoring might be very risky. It might be a good idea to divide the entire infrastructure into layers. Overlays may initially look like optional modules required only in certain environments. Here are some theoretical examples:

- Shared networking layer – virtual networks, firewalls, VPNs,

- Core infrastructure – compute, storage, Kubernetes,

- Application layer – messaging services, databases, key vaults, log aggregation,

- Monitoring overlay – custom metrics, health checks, alerting rules.

Serve Different Departments/Systems

Pieces of infrastructure that have different change sources or serve different business areas or departments, usually have different security levels – in such cases, access control may be treated separately with different Terraform pipelines. Try to avoid huge monolithic configurations.

Tip: When resources of multiple projects need to interact with each other and rely on each other, you can use Terraform data sources to "reach" resources from different projects. Terraform allows having multiple providers of the same type that, for example, access different projects/accounts/subscriptions. Example: use separate provider and data source to "find" the company’s global VPN gateway subnet for setting up network connectivity for the runtime environment.
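The tip above might be sketched as follows for AWS. The account ID, role name, region, and subnet tag are all assumptions made for illustration; the point is only the combination of an aliased provider with a data source.

```hcl
# Default provider: the runtime environment managed by this project.
provider "aws" {
  region = "eu-west-1"
}

# Second provider of the same type, pointing at the shared network account.
# The role ARN is a placeholder and should grant read-only access.
provider "aws" {
  alias  = "network"
  region = "eu-west-1"

  assume_role {
    role_arn = "arn:aws:iam::111111111111:role/network-read-only"
  }
}

# "Find" the global VPN gateway subnet that another project owns.
data "aws_subnet" "vpn_gateway" {
  provider = aws.network

  tags = {
    Name = "global-vpn-gateway" # assumed tag value
  }
}

# The looked-up value can now be used for routing or firewall rules.
output "vpn_gateway_cidr" {
  value = data.aws_subnet.vpn_gateway.cidr_block
}
```

Looking the subnet up by tag keeps the two projects loosely coupled: this project never reads the other project's state file, it only needs read access to the resource itself.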

Here is an example of how to divide infrastructure into Terraform projects:

Handle Environments Separately

Prepare to handle multiple environments on day 1. This will be a very complicated change later and may require heavy refactoring.

- Make sure that each environment of each project has its own state-file. Don’t keep multiple environments (dev/stage/prod) in a single Terraform state-file.

- Use different Terraform system accounts for environments. Make sure early on that the system accounts have limited permissions and cannot access each other’s infrastructure.

- Lock down access to, for example, the staging state file early. It will force you to think about building an automated and secure pipeline quickly.

- Prepare non-prod and prod deployment agent pools early. Lock down network access to storage, key vaults, Kubernetes API etc. so that they are reachable only from the specific virtual networks of the deployment agent pools.

Keep in mind that Terraform does not allow variables in the backend section, and provider configuration can use only values that are known before the plan runs. A simple approach with multiple ".tfvars" files may be challenging in the long run.

Here are some options for handling environments:

- Use Terraform Workspaces and the terraform init -backend-config option to switch backends and environments. This can be used with or without Terraform Cloud/Enterprise.

- Use the module and directory layout (Terraform main-module approach) that allows the handling of multiple environments by making use of module structure.
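For the first option, a partial backend configuration is one way to work around the lack of variables in the backend block. The sketch below assumes an S3 backend; the file name and all values are placeholders.

```hcl
# main.tf: the backend type is fixed, but its settings are left empty
# and supplied per environment at init time.
terraform {
  backend "s3" {}
}

# backends/uat.hcl (hypothetical file), passed to Terraform with:
#   terraform init -backend-config=backends/uat.hcl
#
# bucket = "example-tfstate-uat"
# key    = "network/terraform.tfstate"
# region = "eu-west-1"
```

Each environment gets its own small backend file, so the pipeline stage decides which state it talks to, and the configuration itself stays identical across environments.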

When building multiple environments, make sure that you handle differences properly. Different environments will have different pricing tiers, VM sizes, or even some resources totally disabled (WAF, DDoS protection).

- Use parameters and "feature flags" to enable/disable optional features, combined with the "count" and "for_each" constructs in Terraform.

- If you use defaults, make sure that the default is the production setting and that you override it for non-prod environments.

  - This way you can easily point out the differences per environment and see how features change across environments.

- Have an explicit prod-like environment to test with 1:1 production settings. Have an explicit test for that in the release procedure.
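A feature flag with a production default could look like the sketch below. It assumes AWS; the variable names, resource names, and topic names are made up for illustration.

```hcl
# Feature flag: the default is the production setting.
variable "enable_ddos_protection" {
  type    = bool
  default = true
}

# Hypothetical input: the load balancer to protect.
variable "load_balancer_arn" {
  type = string
}

variable "alert_topics" {
  type    = set(string)
  default = ["platform-critical", "platform-warning"]
}

# "count" turns the whole resource on or off.
resource "aws_shield_protection" "lb" {
  count        = var.enable_ddos_protection ? 1 : 0
  name         = "lb-protection"
  resource_arn = var.load_balancer_arn
}

# "for_each" creates one instance per element; an empty set creates none.
resource "aws_sns_topic" "alerts" {
  for_each = var.alert_topics
  name     = each.key
}

# dev.tfvars (hypothetical) overrides the production defaults:
# enable_ddos_protection = false
# alert_topics           = []
```

Because the non-prod files list only the overrides, the differences between environments can be read directly from the ".tfvars" files.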

Tip: In the pipeline’s build step, always run "terraform validate" for all environments. Make sure that this step fails if you forget to set a property. Make sure that it is not possible to forget about an entire module in your environment.
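One way to make validation fail on a forgotten property is the main-module approach combined with variables that have no default. The layout and names below are assumptions, not a prescribed structure.

```hcl
# modules/platform/variables.tf (hypothetical shared main module)
variable "environment" {
  type = string
}

# No default: every environment must set this explicitly.
variable "instance_type" {
  type = string
}

# environments/uat/main.tf (hypothetical per-environment root)
# Every environment calls the same main module, so it cannot
# silently skip a part of the infrastructure.
module "platform" {
  source = "../../modules/platform"

  environment   = "uat"
  instance_type = "t3.medium"
}
```

In the build step you would run "terraform init -backend=false" followed by "terraform validate" in each environment directory. If "instance_type" were left out of the module call, validation should report a missing required argument without ever touching the remote backend.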

Organize Into Modules

Terraform modularization is a wide topic with a primary purpose of building a catalogue of reviewed, maintained and reusable infrastructure components. Besides a global, public Terraform Registry, companies build their own module libraries. This can be done either with the use of Terraform Cloud/Enterprise or with the use of Git repositories.

Nevertheless, even if a piece of a Terraform project does not look like a shared module, it might be worth encapsulating it into a submodule. There are major benefits:

- large infrastructure code decomposition,

- focusing on single responsibility per module,

- improved code readability,

- following the same

- tracking the dependencies (variables and outputs) between logical pieces of infrastructure.

Like with any other programming language, modularization brings value even if modules are an integral part of a single repository and are used just once.
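To illustrate dependency tracking through variables and outputs, here is a small sketch with two local modules used once each. The module names, file layout, and CIDR range are hypothetical, and AWS is assumed only to have concrete resources to show.

```hcl
# modules/network/main.tf (hypothetical module)
variable "cidr_block" {
  type = string
}

resource "aws_vpc" "this" {
  cidr_block = var.cidr_block
}

output "vpc_id" {
  value = aws_vpc.this.id
}

# modules/cluster/main.tf (hypothetical module)
variable "vpc_id" {
  type = string
}

resource "aws_security_group" "nodes" {
  name   = "cluster-nodes"
  vpc_id = var.vpc_id
}

# main.tf (root module): the only link between the two modules
# is the explicit output-to-variable wiring below.
module "network" {
  source     = "./modules/network"
  cidr_block = "10.20.0.0/16"
}

module "cluster" {
  source = "./modules/cluster"
  vpc_id = module.network.vpc_id
}
```

Even though neither module is reused, the root module now reads as a list of logical components and the data that flows between them.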

Build a Terraform Pipeline with CI/CD

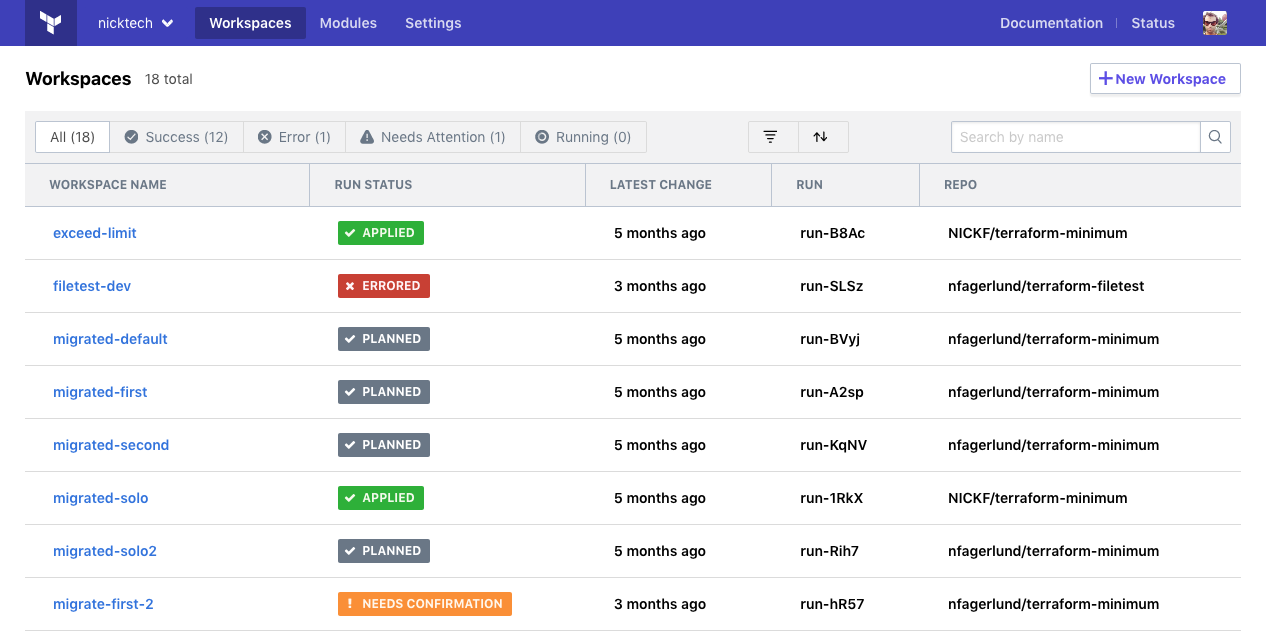

Terraform Cloud/Terraform Enterprise is an opinionated solution addressing some of the aspects of implementing a secure Terraform pipeline like:

- Remote Backend

- Module registry

- Terraform plan review and approval

- Remote Terraform execution

- Secure system accounts credentials handling

Because it is available through its UI as well as API and CLI interfaces, it can be used together with a CI/CD tool. However, Terraform Cloud/Enterprise is not a CI/CD tool itself. How you integrate it will depend a lot on the CI/CD tool that is available. Each CI/CD tool has different features and approaches, some more Terraform-specific than others.

There are two general options:

- Use a built-in mechanism of the CD tool for release control

  - Built-in package versioning

  - Secured pipeline/build artifacts

  - Release pipelines with the environment promotion process

  - Manual checks and approvals by entitled people

  - Release plans, rollback plans

  - Handling system accounts and secrets

- Follow a GitOps approach

  - Rely on Git repositories, branches and tags to control the process

  - Rely on Git access control, permissions and pull request enforcement

  - Use Git webhooks

  - Have a separate "code" repository and "delivery" repository for GitOps control

Here are some general steps to implement a secure Terraform pipeline:

- Protect the "Master" branch

Configure your Git repository so that direct pushes to the "master" branch are forbidden and changes are allowed only through a pull request (or merge request). Set the required steps (e.g. Terraform "build" must pass) and the required number of approvers from a list.

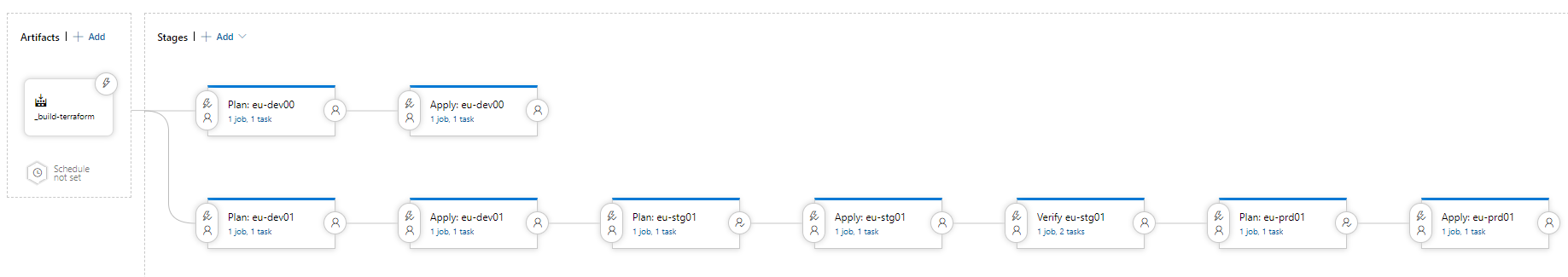

Ask: Can I put an arbitrary change into a branch that is the source of a release?

- Build a Multi-Stage Pipeline

If possible, build a pipeline that will visualize that a certain Terraform configuration version is promoted from one environment to another.

Ask: Can I put something in production without testing in staging? Can I deploy something from an arbitrary branch other than "master"?

- Rely on Versions Rather than Branches

When you are building your pipeline, make sure that you promote an immutable snapshot of your Terraform configuration. Avoid using branches for this. Promote a build artifact or a Git tag instead.

Ask: Am I 100% sure that I’m deploying exactly the same version as tested before?

- Have a Terraform "Build" Step

It is not obvious that the Terraform code can be built. However, at least validating Terraform against configurations of all environments and running a plan on a designated environment will allow catching obvious errors. The Terraform validate action can be executed without connecting to a remote backend. It is worth doing it on the master branch as well as on a pull request. This can also allow setting a unique version number or a tag on the master branch version.

Ask: How do I ensure that the Terraform code has at least proper syntax and complete configuration?

- Have a Manual Approval Step of Terraform Plan

This will be one of the hardest requirements to implement in most tools. Start from here. This is to ensure that the plan is reviewed and approved by a person and exactly this plan is applied.

Ask: How do I review what is going to change in each environment?

- Ensure that There Is only One Pending Plan per Environment

When a plan is waiting for approval and some other plan is applied, the first plan is no longer valid. Prevent concurrent plans pending on a single environment.

Ask: How do I ensure that no other changes are applied between "plan" and "apply" steps?

- Protect Access to Separate System Accounts per Environment

Multiple system accounts need to be prepared for different environments. The goal of the pipeline is to ensure that the access to the system account is provided securely (i.e. credentials/keys are hidden/secret, write-only, and are not logged in the console). Only an approved pipeline should be able to use this system account.

Ask: Am I able to use the production Terraform system account in a non-prod pipeline or non-prod stage?

- Protect Access to Separate Agent Pools per Environment

Agent pools for non-prod and prod deployments should be separate. Network access to services like the Kubernetes API, key vaults, sensitive storage etc. should be restricted to a limited set of sources that includes the deployment agents. However, non-prod build agents must not have access to the production runtime environment. Only approved pipelines should be able to execute on the production deployment agent pool.

Ask: Is it possible to run an arbitrary pipeline on production deployment agent pools?

- Have a Rollback Plan

Rolling changes back usually means running a release for a previous version of the Terraform configuration.

Ask: How do I run a previous version?

- Control User Permissions to Environments

Make sure that only certain people can deploy changes to certain environments. Implement a four-eye-check (approval by at least 2 people) for production releases. Maintain control over initializing a release and Terraform plan approval.

Ask: Can I approve the Terraform plan in production if I am not permitted?

- Have Just-In-Time Access Control for Terraform

Introduce checks into the process to ensure that the production Terraform system account will be available only during the time of a planned release. Alternatively, it can be granted production-level RBAC permissions only for the time of the release. This is to make sure that proper personnel have access to both the CI/CD pipeline as well as to the cloud provider during the release. This is another method of a four-eye-check. Think multi-factor authentication for CI/CD.

Ask: Can I perform production release with access to CI/CD pipeline only?

- Run Tests as Part of The Pipeline

Like any other piece of software, virtual infrastructure can and should be tested. Below is the Continuous Compliance section with some testing guidelines. Make sure that after deployment to at least prod-like and prod environments, there is a step to verify compliance, security policies and run some tests, even smoke tests. The purpose is to have quick feedback.

Ask: Will I immediately know if my changed infrastructure is not compliant with non-functional requirements and policies?

Example of A Release Pipeline with The Use of Azure DevOps

- Code version is stored as an artifact and promoted from environment to environment

- All "Plan" and "Apply" stages are controlled by pre-stage and post-stage approvals.

- The tool maintains the system credentials per environment and access to deployment agents.

Example GitOps Process

- There is a "delivery" repository (or repositories) to control the actual deployment to environments,

- The "delivery" repository will import modules from "code" repositories or module registry,

- The release procedure starts with a pull request with a change to a given repository,

- An automated tool will prepare a Terraform Plan,

- The approval of the plan is implemented as pull request approval, the merge will trigger actual release.
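In a "delivery" repository, importing a module from a "code" repository at a fixed Git tag is one way to promote an immutable version rather than a branch. The repository URL, tag, and input name below are placeholders.

```hcl
# environments/staging/main.tf in the "delivery" repository (hypothetical path)
module "network" {
  # "ref" pins the module to a Git tag, so staging and production
  # can be shown to run exactly the same revision.
  source = "git::https://git.example.com/platform/terraform-network.git?ref=v1.4.0"

  environment = "staging" # assumed module input
}
```

Promoting a change to the next environment then becomes a pull request that bumps the "ref" value, and a rollback is a pull request that sets it back to the previous tag.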

The actual pipeline delivery process will depend a lot on the tools you select. Treat the guidelines above as a checklist for your process.

Also, check the second part of this guide that covers testing the infrastructure and compliance as code.

Published at DZone with permission of Piotr Gwiazda. See the original article here.
