SeaweedFS vs. JuiceFS Design and Features

This article compares the architectures, metadata storage, data storage, access protocols, and extended features of SeaweedFS and JuiceFS.


SeaweedFS is an efficient distributed file storage system inspired by Facebook's Haystack. It excels at fast reads and writes of small data blocks. JuiceFS is a cloud-native distributed file system that delivers massive, elastic, and high-performance storage at low cost.

This article compares the design and features of SeaweedFS and JuiceFS to help users make informed decisions when selecting a file storage system. It focuses on their architectures, metadata storage, data storage, access protocols, and extended features, and ends with a summary table.

Architecture Comparison

SeaweedFS Architecture

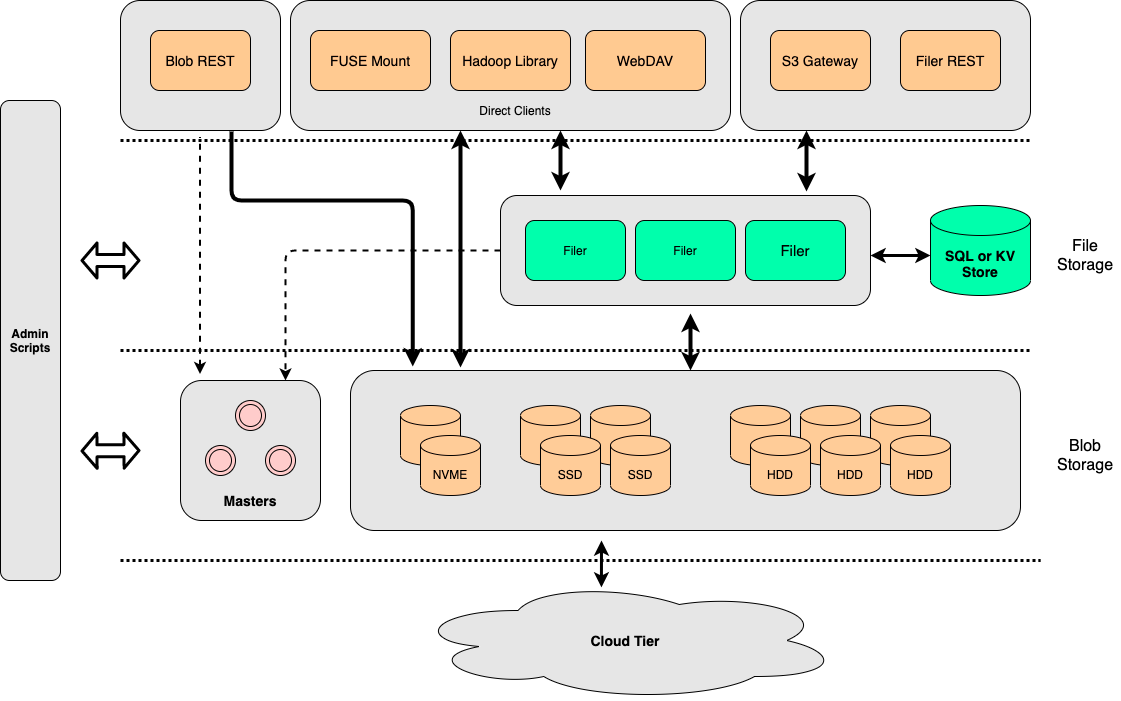

SeaweedFS consists of three parts:

- The volume servers store files in the underlying layer.

- The master servers manage clusters.

- An optional filer component, which provides more features upwards.

SeaweedFS Architecture

Volume Servers and Master Servers

In operation, the volume servers and master servers work together to provide file storage:

- The volume server focuses on data read and write operations.

- The master server primarily functions as a management service for clusters and volumes.

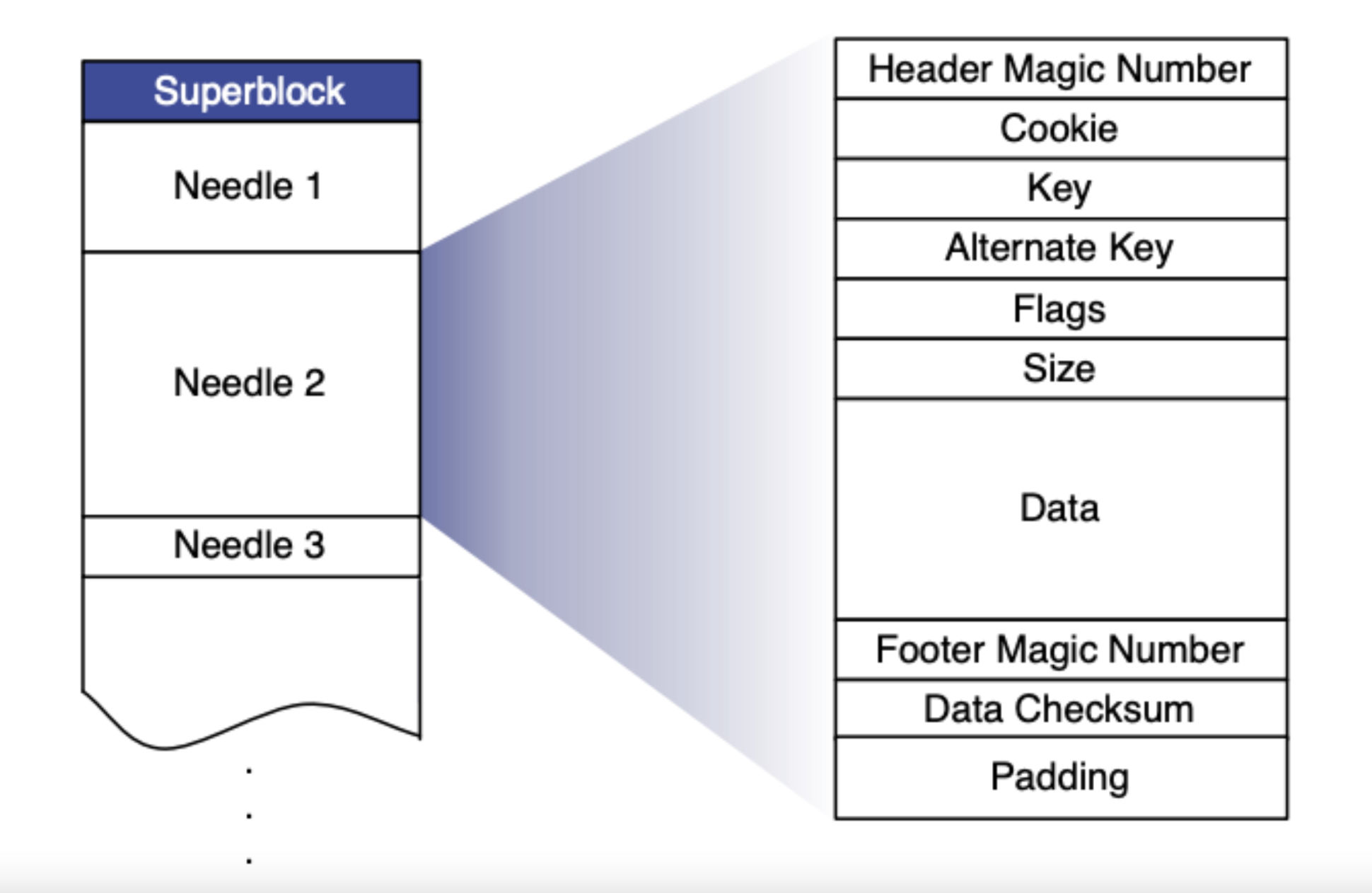

In terms of data access, SeaweedFS implements a similar approach to Haystack. A user-created volume in SeaweedFS corresponds to a large disk file (Superblock in the diagram below). Within this volume, all files written by the user (Needles in the diagram) are merged into the large disk file.

Data write and read process in SeaweedFS:

- Before a write operation, the caller requests a write allocation from SeaweedFS (master server).

- SeaweedFS returns a File ID (composed of Volume ID and offset) based on the current data volume. During the writing process, basic metadata information such as file length and chunk details is also written together with the data.

- After the write is completed, the caller needs to associate the file with the returned File ID and store this mapping in an external system such as MySQL.

- When reading data, since the File ID already contains all the necessary information to compute the file's location (offset), the file content can be efficiently retrieved.

The volume server
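The Haystack-style layout described above can be illustrated with a small in-memory model. This is a hedged sketch, not SeaweedFS code: `volume`, `needleLoc`, and the `"<volumeID>,<hexKey>"` file ID format are hypothetical simplifications (real SeaweedFS file IDs carry additional fields), and a byte slice stands in for the on-disk volume file. It shows the key idea: needles are appended to one large file, an in-memory index maps each file key to its offset, and the caller keeps its own path-to-File-ID mapping.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
	"strings"
)

// needleLoc records where one needle's bytes live inside the volume.
type needleLoc struct {
	offset int
	size   int
}

// volume is a toy stand-in for one large volume file: every needle is appended
// to a single byte slice, and an in-memory index maps file keys to locations.
type volume struct {
	id    uint32
	data  []byte
	index map[uint64]needleLoc
	next  uint64 // next file key to hand out
}

func newVolume(id uint32) *volume {
	return &volume{id: id, index: make(map[uint64]needleLoc), next: 1}
}

// write appends the payload as a new needle and returns a simplified file ID
// of the hypothetical form "<volumeID>,<fileKey in hex>".
func (v *volume) write(payload []byte) string {
	key := v.next
	v.next++
	v.index[key] = needleLoc{offset: len(v.data), size: len(payload)}
	v.data = append(v.data, payload...)
	return fmt.Sprintf("%d,%x", v.id, key)
}

// read parses the file ID, resolves the file key through the in-memory index,
// and returns the needle with a single slice operation (one "disk read").
func (v *volume) read(fid string) ([]byte, error) {
	vidStr, keyStr, ok := strings.Cut(fid, ",")
	if !ok {
		return nil, errors.New("malformed file ID")
	}
	vid, err := strconv.ParseUint(vidStr, 10, 32)
	if err != nil {
		return nil, err
	}
	if uint32(vid) != v.id {
		return nil, fmt.Errorf("file ID belongs to volume %d, not %d", vid, v.id)
	}
	key, err := strconv.ParseUint(keyStr, 16, 64)
	if err != nil {
		return nil, err
	}
	loc, found := v.index[key]
	if !found {
		return nil, errors.New("needle not found")
	}
	return v.data[loc.offset : loc.offset+loc.size], nil
}

func main() {
	vol := newVolume(7)
	// The caller keeps its own path -> file ID mapping (MySQL in the article's example).
	catalog := map[string]string{}
	catalog["/photos/a.jpg"] = vol.write([]byte("AAAA"))
	catalog["/photos/b.jpg"] = vol.write([]byte("BBBBBB"))

	b, err := vol.read(catalog["/photos/b.jpg"])
	fmt.Println(catalog["/photos/b.jpg"], string(b), err) // 7,2 BBBBBB <nil>
}
```

Because the index lives in memory, a read needs only one lookup plus one contiguous read, which is why this design suits large numbers of small files.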

Filer

On top of these underlying storage units, SeaweedFS provides a component called the filer. Working with the volume servers and master servers, it exposes a wide range of features, such as POSIX support, WebDAV, and S3 interfaces. Like JuiceFS, the filer relies on an external database to store metadata.

Note that in the rest of this article, "SeaweedFS" refers to the system including the filer component.

JuiceFS Architecture

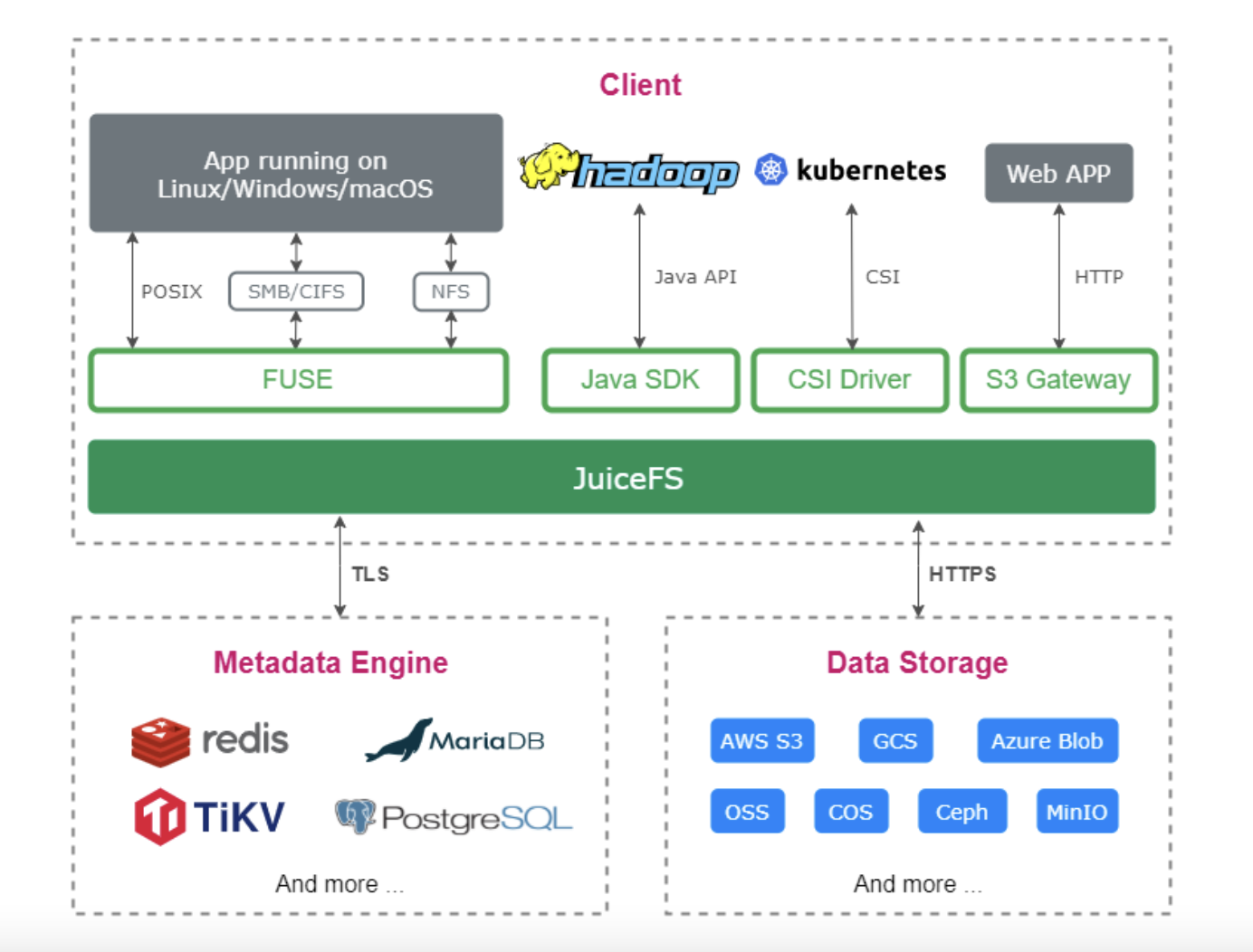

The following figure shows the architecture of JuiceFS in the community edition:

JuiceFS Architecture

JuiceFS consists of three components:

- The JuiceFS client, where all file I/O happens.

- The data storage, which stores file data.

- The metadata engine, which stores file metadata.

JuiceFS adopts an architecture that separates data and metadata storage:

- The file data is split and stored in object storage systems such as Amazon S3.

- Metadata is stored in a user-selected database such as Redis or MySQL.

Because all clients share the same metadata database and object storage, JuiceFS provides a strongly consistent distributed file system, along with features such as full POSIX compatibility and high performance.
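The data/metadata separation can be sketched with two shared in-memory stores and two clients. This is an illustrative model under stated assumptions, not JuiceFS's implementation: `objectStore`, `metaStore`, `client`, and the `blk-N` object naming are all hypothetical. The sketch uploads data objects first and publishes the metadata last, so a second client that shares the same stores sees either the complete file or nothing.

```go
package main

import (
	"fmt"
	"sync"
)

// objectStore is an in-memory stand-in for object storage such as Amazon S3.
type objectStore struct {
	mu   sync.Mutex
	next int
	objs map[string][]byte
}

func (s *objectStore) put(data []byte) string {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.next++
	key := fmt.Sprintf("blk-%d", s.next) // hypothetical object naming
	s.objs[key] = append([]byte(nil), data...)
	return key
}

func (s *objectStore) get(key string) []byte {
	s.mu.Lock()
	defer s.mu.Unlock()
	return s.objs[key]
}

// metaStore is an in-memory stand-in for the metadata engine (e.g. Redis or MySQL):
// it maps a path to the ordered object keys that hold the file's data.
type metaStore struct {
	mu    sync.Mutex
	files map[string][]string
}

func (m *metaStore) set(path string, keys []string) {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.files[path] = keys
}

func (m *metaStore) lookup(path string) ([]string, bool) {
	m.mu.Lock()
	defer m.mu.Unlock()
	keys, ok := m.files[path]
	return keys, ok
}

// client shares the same metadata and data stores with every other client.
type client struct {
	data *objectStore
	meta *metaStore
}

// write uploads the data objects first and publishes metadata last, so readers
// never see a path whose objects are missing.
func (c *client) write(path string, content []byte, blockSize int) {
	var keys []string
	for i := 0; i < len(content); i += blockSize {
		end := i + blockSize
		if end > len(content) {
			end = len(content)
		}
		keys = append(keys, c.data.put(content[i:end]))
	}
	c.meta.set(path, keys)
}

func (c *client) read(path string) ([]byte, bool) {
	keys, ok := c.meta.lookup(path)
	if !ok {
		return nil, false
	}
	var out []byte
	for _, k := range keys {
		out = append(out, c.data.get(k)...)
	}
	return out, true
}

func main() {
	data := &objectStore{objs: map[string][]byte{}}
	meta := &metaStore{files: map[string][]string{}}
	writer := &client{data: data, meta: meta}
	reader := &client{data: data, meta: meta} // a second client, e.g. on another host

	writer.write("/logs/app.txt", []byte("hello, juicefs"), 4)
	got, ok := reader.read("/logs/app.txt")
	fmt.Println(string(got), ok) // hello, juicefs true
}
```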

Metadata Storage Comparison

For metadata storage, both SeaweedFS and JuiceFS can use external databases to store file system metadata. SeaweedFS supports up to 24 different databases. JuiceFS places high demands on database transaction support and currently supports 10 transactional databases across three categories.

Atomic Operations

To guarantee the atomicity of all metadata operations, JuiceFS relies on databases with transaction support at the implementation level. SeaweedFS has lower requirements for transaction capabilities: it uses transactions only during Rename operations, and only on some databases (SQL, ArangoDB, and TiKV). Additionally, during a Rename, SeaweedFS does not lock the source directory or file while copying its metadata, so updates made to them during that window may be lost.
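The risk of a non-locking rename can be reproduced deterministically with a toy metadata table. This is a simplified model, not code from either project: `metadata`, `renameAtomic`, and `renameCopyThenDelete` are hypothetical names, and a mutex held for the whole operation stands in for a database transaction. The `between` callback simulates another client updating the source entry after it was copied but before it was deleted.

```go
package main

import (
	"fmt"
	"sync"
)

// metadata is a toy metadata table mapping paths to a file's attributes/content.
type metadata struct {
	mu      sync.Mutex
	entries map[string]string
}

// renameAtomic holds the lock for the whole move, which is what a database
// transaction provides: no update can slip in between the copy and the delete.
func (m *metadata) renameAtomic(src, dst string) {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.entries[dst] = m.entries[src]
	delete(m.entries, src)
}

// renameCopyThenDelete copies the entry and deletes the source in two separate
// steps without locking the source in between.
func (m *metadata) renameCopyThenDelete(src, dst string, between func()) {
	m.mu.Lock()
	m.entries[dst] = m.entries[src] // step 1: copy metadata to the new path
	m.mu.Unlock()

	between() // a concurrent client may modify src here

	m.mu.Lock()
	delete(m.entries, src) // step 2: delete the source, discarding that update
	m.mu.Unlock()
}

func (m *metadata) update(path, value string) {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.entries[path] = value
}

func main() {
	m := &metadata{entries: map[string]string{"/a": "v1"}}
	// A concurrent writer updates /a after it was copied but before it was deleted.
	m.renameCopyThenDelete("/a", "/b", func() { m.update("/a", "v2") })
	fmt.Println(m.entries) // map[/b:v1]
}
```

The printed map shows that the `v2` update disappeared; with `renameAtomic`, the same update could only land entirely before or entirely after the rename.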

Changelogs

SeaweedFS generates changelogs for all metadata operations, which can be further utilized for functions like data replication and operation auditing. However, JuiceFS has not yet implemented this feature.
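A metadata changelog can be sketched as an append-only list of sequenced events that another consumer replays in order. The `event` fields, the three `opKind` values, and the `namespace` type below are hypothetical and much simpler than SeaweedFS's real event format; the sketch only shows why logging every mutation enables replication and auditing.

```go
package main

import "fmt"

// opKind enumerates the metadata operations recorded in the changelog (hypothetical set).
type opKind int

const (
	opCreate opKind = iota
	opDelete
	opRename
)

// event is one changelog record.
type event struct {
	Seq  int
	Kind opKind
	Path string
	To   string // only used by opRename
}

// namespace is a toy metadata store that can log its mutations.
type namespace struct {
	files map[string]bool
	log   []event
}

func newNamespace() *namespace { return &namespace{files: map[string]bool{}} }

// apply executes an event against the namespace without logging it.
func (n *namespace) apply(e event) {
	switch e.Kind {
	case opCreate:
		n.files[e.Path] = true
	case opDelete:
		delete(n.files, e.Path)
	case opRename:
		if n.files[e.Path] {
			delete(n.files, e.Path)
			n.files[e.To] = true
		}
	}
}

// do applies an operation locally and appends it, with a sequence number, to the changelog.
func (n *namespace) do(e event) {
	n.apply(e)
	e.Seq = len(n.log) + 1
	n.log = append(n.log, e)
}

func main() {
	primary := newNamespace()
	primary.do(event{Kind: opCreate, Path: "/a"})
	primary.do(event{Kind: opCreate, Path: "/b"})
	primary.do(event{Kind: opRename, Path: "/a", To: "/c"})
	primary.do(event{Kind: opDelete, Path: "/b"})

	// A replica (or an audit tool) consumes the changelog in sequence order.
	replica := newNamespace()
	for _, e := range primary.log {
		replica.apply(e)
	}
	fmt.Println(len(primary.log), replica.files) // 4 map[/c:true]
}
```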

Storage Comparison

As mentioned earlier, SeaweedFS implements data storage itself through volume servers and master servers, supporting features such as combined storage of small data blocks and erasure coding.

On the other hand, JuiceFS relies on object storage services for data storage, and related features are provided by the user's chosen object storage.

File splitting

Both SeaweedFS and JuiceFS split files into smaller chunks before persisting them in the underlying data systems. SeaweedFS splits files into 8 MB blocks. For very large files (over 8 GB), it also saves chunk indexes to the underlying data system.

JuiceFS first splits files into 64 MB chunks, which are further divided into 4 MB blocks stored as objects. Through an internal concept called a slice, it optimizes performance for random writes, sequential reads, and repeated writes to the same region. For details, see Data Processing Workflow.
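The arithmetic behind the two splitting schemes can be checked with a few lines of Go. This sketch assumes the article's "MB" means MiB (2^20 bytes) and models only a simple sequential layout; it ignores JuiceFS slices (which handle overlapping writes) and SeaweedFS chunk manifests. The function names are hypothetical.

```go
package main

import "fmt"

const (
	mib = 1 << 20
	// Sizes as stated in the article, interpreted as MiB (assumption).
	seaweedChunk = 8 * mib
	juiceChunk   = 64 * mib
	juiceBlock   = 4 * mib
)

// seaweedChunks returns how many fixed-size chunks a file of the given size needs.
func seaweedChunks(size int64) int64 {
	return (size + seaweedChunk - 1) / seaweedChunk
}

// juiceLocate maps a file offset to (chunk index, block index within the chunk,
// offset within the block), assuming a plain sequential write with no overlapping slices.
func juiceLocate(off int64) (chunk, block, inBlock int64) {
	chunk = off / juiceChunk
	within := off % juiceChunk
	return chunk, within / juiceBlock, within % juiceBlock
}

func main() {
	fmt.Println(seaweedChunks(100 * mib)) // 13
	c, b, o := juiceLocate(68*mib + 5)
	fmt.Println(c, b, o) // 1 1 5
}
```

Because 64 is a multiple of 4, each full JuiceFS chunk maps to exactly 16 blocks under this simplified layout.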

Tiered Storage

For newly created volumes, SeaweedFS stores data locally. For older volumes, it supports uploading them to the cloud to achieve separation of hot and cold data. In this aspect, JuiceFS relies on external services.

Data Compression

JuiceFS can compress all written data with LZ4 or Zstandard, whereas SeaweedFS decides whether to compress data based on factors such as the file extension and file type.

Storage Encryption

JuiceFS supports encryption in transit and encryption at rest. When encryption at rest is enabled, users need to provide a self-managed key, and all written data is encrypted using this key. For more details, see Data Encryption.

SeaweedFS also supports encryption in transit and encryption at rest. When data encryption is enabled, all data written to the volume server is encrypted using random keys. The corresponding key information is managed by the filer that maintains the metadata.
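The SeaweedFS-style scheme, where each piece of data is sealed with a fresh random key and the key is kept in the metadata, can be sketched with Go's standard AES-GCM. The `chunkMeta` structure and the `"7,2"` file ID are hypothetical, and the real on-disk format is not shown here. Only the sealed bytes would go to the volume server; decryption requires the key held on the metadata side.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
)

// chunkMeta is what the metadata side (the filer, in SeaweedFS's case) would keep:
// the data's location plus the random key needed to decrypt it. Hypothetical structure.
type chunkMeta struct {
	FileID string
	Key    []byte
}

// encryptChunk seals data with AES-256-GCM under a fresh random key.
// The random nonce is prepended to the ciphertext.
func encryptChunk(plain []byte) (sealed, key []byte, err error) {
	key = make([]byte, 32)
	if _, err = rand.Read(key); err != nil {
		return nil, nil, err
	}
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err = rand.Read(nonce); err != nil {
		return nil, nil, err
	}
	return gcm.Seal(nonce, nonce, plain, nil), key, nil
}

// decryptChunk splits off the nonce and authenticates/decrypts the rest.
func decryptChunk(sealed, key []byte) ([]byte, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return nil, err
	}
	ns := gcm.NonceSize()
	if len(sealed) < ns {
		return nil, errors.New("ciphertext too short")
	}
	return gcm.Open(nil, sealed[:ns], sealed[ns:], nil)
}

func main() {
	sealed, key, err := encryptChunk([]byte("secret payload"))
	if err != nil {
		panic(err)
	}
	// The volume server would store only `sealed`; the metadata keeps the key.
	meta := chunkMeta{FileID: "7,2", Key: key}

	plain, err := decryptChunk(sealed, meta.Key)
	fmt.Println(string(plain), err, len(meta.Key)) // secret payload <nil> 32
}
```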

Access Protocol Comparison

POSIX Compatibility

JuiceFS is fully compatible with POSIX, while SeaweedFS supports basic compatibility with POSIX (see Issue 1558 and Wiki), which is still being improved.

S3 Protocol

JuiceFS provides S3 gateway functionality built on the MinIO S3 gateway. The gateway exposes an S3-compatible RESTful API, allowing users to manage files stored in JuiceFS with tools such as s3cmd, AWS CLI, and MinIO Client (mc) when mounting the file system directly is not convenient.

SeaweedFS supports approximately 20 S3 APIs, covering common operations such as read, write, search, and delete requests. It also extends some requests, such as reads, with additional functionality. For details, see Amazon-S3-API.

WebDAV Protocol

Both JuiceFS and SeaweedFS support the WebDAV protocol.

HDFS Compatibility

JuiceFS provides full compatibility with the HDFS API. It supports Hadoop 2.x and Hadoop 3.x, as well as various components within the Hadoop ecosystem.

SeaweedFS offers basic compatibility with the HDFS API. It lacks support for certain operations, such as truncate, concat, checksum, and extended attributes.

CSI Driver

Both JuiceFS and SeaweedFS provide Kubernetes CSI drivers so that users can use the corresponding file systems within the Kubernetes ecosystem.

Extended Feature Comparison

Client Cache

JuiceFS offers various client caching strategies, covering both metadata and data, so users can tune caching for their application scenarios. SeaweedFS, however, does not provide client caching.
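To illustrate what a client-side data cache buys, here is a generic LRU block cache. It is not JuiceFS's cache implementation: `blockCache`, its capacity in blocks, and the `fetch` callback (standing in for an object storage read) are hypothetical. Repeated reads of a hot block are served locally, and only misses reach the backing store.

```go
package main

import (
	"container/list"
	"fmt"
)

// entry is one cached block.
type entry struct {
	key  string
	data []byte
}

// blockCache is a small LRU cache of data blocks keyed by object name.
type blockCache struct {
	capacity int                      // maximum number of cached blocks
	order    *list.List               // front = most recently used
	items    map[string]*list.Element // key -> list element holding *entry
	fetches  int                      // number of misses sent to the backing store
	fetch    func(key string) []byte  // loads a block from object storage on a miss
}

func newBlockCache(capacity int, fetch func(string) []byte) *blockCache {
	return &blockCache{capacity: capacity, order: list.New(), items: map[string]*list.Element{}, fetch: fetch}
}

// get returns a block, serving hits locally and evicting the least recently used block when full.
func (c *blockCache) get(key string) []byte {
	if el, ok := c.items[key]; ok {
		c.order.MoveToFront(el)
		return el.Value.(*entry).data
	}
	c.fetches++
	data := c.fetch(key)
	c.items[key] = c.order.PushFront(&entry{key: key, data: data})
	if c.order.Len() > c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
	return data
}

func main() {
	c := newBlockCache(2, func(k string) []byte { return []byte("data-of-" + k) })
	for _, k := range []string{"a", "b", "a", "c", "b"} {
		c.get(k)
	}
	fmt.Println(c.fetches) // 4
}
```

In the usage example, the second read of `a` is a hit, while `c` evicts `b`, so `b` must be fetched again.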

Cluster Data Replication

SeaweedFS supports both Active-Active and Active-Passive asynchronous replication modes for replicating data between clusters. Both modes keep cluster data consistent by applying changelogs, and each change carries a signature to prevent it from being applied repeatedly. In Active-Active mode with more than two clusters, SeaweedFS imposes certain limitations on operations such as directory renaming.
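The role of the signature in Active-Active replication can be sketched as loop prevention. This is a simplified model, not SeaweedFS's replication code: `change`, `cluster`, and the integer signatures are hypothetical. Each cluster appends its own signature when it applies a change and drops any change that already carries its signature, so an update bounces between two clusters at most once.

```go
package main

import "fmt"

// change is a replicated metadata event carrying the signatures of every
// cluster that has already applied it (hypothetical structure).
type change struct {
	Path       string
	Signatures []int32
}

type cluster struct {
	sig     int32
	files   map[string]bool
	applied int
}

func (c *cluster) hasSigned(ch change) bool {
	for _, s := range ch.Signatures {
		if s == c.sig {
			return true
		}
	}
	return false
}

// receive applies a change unless this cluster already signed it, and returns
// the change with this cluster's signature appended so it can be forwarded.
func (c *cluster) receive(ch change) (change, bool) {
	if c.hasSigned(ch) {
		return ch, false // already applied here: drop it to break the loop
	}
	c.files[ch.Path] = true
	c.applied++
	sigs := append(append([]int32(nil), ch.Signatures...), c.sig)
	return change{Path: ch.Path, Signatures: sigs}, true
}

func main() {
	a := &cluster{sig: 1, files: map[string]bool{}}
	b := &cluster{sig: 2, files: map[string]bool{}}
	ch, _ := a.receive(change{Path: "/x"}) // a local write on cluster A

	// Active-Active: forward A -> B -> A -> ... until a cluster sees its own signature.
	hops := 0
	for cur, next := b, a; ; cur, next = next, cur {
		var forwarded bool
		ch, forwarded = cur.receive(ch)
		if !forwarded {
			break
		}
		hops++
	}
	fmt.Println(hops, a.applied, b.applied) // 1 1 1
}
```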

JuiceFS does not natively support data replication between clusters. It relies on the metadata engine and the data replication capabilities of the object storage itself.

Cloud-Based Data Caching

SeaweedFS can be used as a cache for cloud-based object storage and supports manual cache warmup through commands. Modified cache data is asynchronously synchronized back to the object storage.
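The caching behavior described above (manual warm-up, local reads, and asynchronous write-back of modified data) can be modeled with a small write-back cache. This is a hypothetical sketch: `remoteStore` stands in for cloud object storage, and `warm`, `write`, and `flushAsync` are illustrative names, not SeaweedFS commands or APIs. For simplicity, the dirty set is cleared before the upload finishes, so a failed upload would not be retried.

```go
package main

import (
	"fmt"
	"sync"
)

// remoteStore stands in for the cloud object storage being cached.
type remoteStore struct {
	mu   sync.Mutex
	objs map[string]string
}

// writeBackCache serves reads locally, marks writes dirty, and pushes dirty
// entries back to the remote store asynchronously.
type writeBackCache struct {
	mu     sync.Mutex
	local  map[string]string
	dirty  map[string]bool
	remote *remoteStore
}

// warm copies the given keys from the remote store into the local cache (manual warm-up).
func (c *writeBackCache) warm(keys ...string) {
	c.remote.mu.Lock()
	defer c.remote.mu.Unlock()
	c.mu.Lock()
	defer c.mu.Unlock()
	for _, k := range keys {
		if v, ok := c.remote.objs[k]; ok {
			c.local[k] = v
		}
	}
}

func (c *writeBackCache) read(key string) (string, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	v, ok := c.local[key]
	return v, ok
}

// write updates only the local copy and marks it dirty.
func (c *writeBackCache) write(key, val string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.local[key] = val
	c.dirty[key] = true
}

// flushAsync uploads a snapshot of dirty entries in a background goroutine and
// returns a WaitGroup so callers can wait for the upload to finish.
func (c *writeBackCache) flushAsync() *sync.WaitGroup {
	c.mu.Lock()
	batch := make(map[string]string, len(c.dirty))
	for k := range c.dirty {
		batch[k] = c.local[k]
	}
	c.dirty = map[string]bool{}
	c.mu.Unlock()

	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		defer wg.Done()
		c.remote.mu.Lock()
		defer c.remote.mu.Unlock()
		for k, v := range batch {
			c.remote.objs[k] = v
		}
	}()
	return &wg
}

func main() {
	remote := &remoteStore{objs: map[string]string{"a.csv": "v1"}}
	c := &writeBackCache{local: map[string]string{}, dirty: map[string]bool{}, remote: remote}

	c.warm("a.csv")
	v, _ := c.read("a.csv")
	fmt.Println(v) // v1

	c.write("a.csv", "v2")
	fmt.Println(remote.objs["a.csv"]) // v1 (not yet synchronized)
	c.flushAsync().Wait()
	fmt.Println(remote.objs["a.csv"]) // v2
}
```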

JuiceFS stores files in the object storage in its own chunked format, so it does not provide caching acceleration for data that already exists in the object storage.

Trash

By default, JuiceFS enables the Trash feature, which automatically moves deleted files to the ".trash" directory under the JuiceFS root directory and retains them for a specified period before permanently deleting them.

However, SeaweedFS does not support this feature.

Operations and Maintenance Tools

JuiceFS provides juicefs stats and juicefs profile sub-commands, which allow users to view real-time performance metrics and replay performance metrics for a specific time period. Additionally, JuiceFS offers a metrics interface for easy integration of monitoring data with Prometheus and Grafana.

SeaweedFS implements both Push and Pull approaches to integrate with Prometheus and Grafana. It also provides the interactive tool weed shell for performing various maintenance tasks, such as checking the current cluster status and listing file directories.

Other Comparisons

- SeaweedFS was released in April 2015 and has accumulated 17.4k GitHub stars, while JuiceFS released its open-source version in January 2021 and has accumulated 8.1k stars at the time of writing.

- Both JuiceFS and SeaweedFS are licensed under Apache License 2.0, which is friendly to commercial use. SeaweedFS is maintained primarily by an individual, Chris Lu, while JuiceFS is maintained mainly by the company Juicedata.

- JuiceFS and SeaweedFS are both implemented using the Go programming language.

A Comparison Table as the Conclusion

| Feature | SeaweedFS | JuiceFS |
| --- | --- | --- |
| Metadata engine | Multi-engine | Multi-engine |
| Atomicity of metadata operations | Not guaranteed | Guaranteed through database transactions |
| Changelogs | Yes | No |
| Data storage | Included | Relies on external services |
| Erasure coding | Supported | Relies on external services |
| Combined storage of small data blocks | Supported | Relies on external services |
| File splitting | 8 MB | 64 MB chunks + 4 MB blocks |
| Tiered storage | Supported | Relies on external services |
| Data compression | Supported (extension-based) | Supported (global settings) |
| Storage encryption | Supported | Supported |
| POSIX compatibility | Basic | Full |
| S3 protocol | Basic | Basic |
| WebDAV protocol | Supported | Supported |
| HDFS compatibility | Basic | Full |
| CSI driver | Supported | Supported |
| Client cache | Not supported | Supported |
| Cluster data replication | Bidirectional asynchronous, multi-mode | Not supported |
| Cloud-based data caching | Supported (manual synchronization) | Not supported |
| Trash | Not supported | Supported |
| Operation and maintenance tools | Provided | Provided |
| Release date | April 2015 | January 2021 |
| Maintainer | Individual (Chris Lu) | Company (Juicedata) |
| Language | Go | Go |
| Open-source license | Apache License 2.0 | Apache License 2.0 |

