Running Hadoop MapReduce Application from Eclipse Kepler

It's very important to learn Hadoop by practice.

One of the first hurdles is writing your first MapReduce app and debugging it in your favorite IDE, Eclipse. Do we need any Eclipse plugins? No, we do not. We can do Hadoop development without MapReduce plugins.

This tutorial shows you how to set up Eclipse and run your MapReduce project and job right from your IDE. Before you read further, you should have set up a Hadoop single-node cluster on your machine.

You can download the Eclipse project from GitHub.

Use case:

We will explore the weather data from Chapter 2 of Tom White's book Hadoop: The Definitive Guide (3rd edition) to find the maximum temperature, and run the job using ToolRunner.

I am using Linux Mint 15 on a VirtualBox VM instance.

In addition, you should have:

- Hadoop (MRv1; I am using 1.2.1) single-node cluster installed and running. If you have not done so, I strongly recommend you do it from here

- Eclipse IDE downloaded. As of this writing, the latest version of Eclipse is Kepler

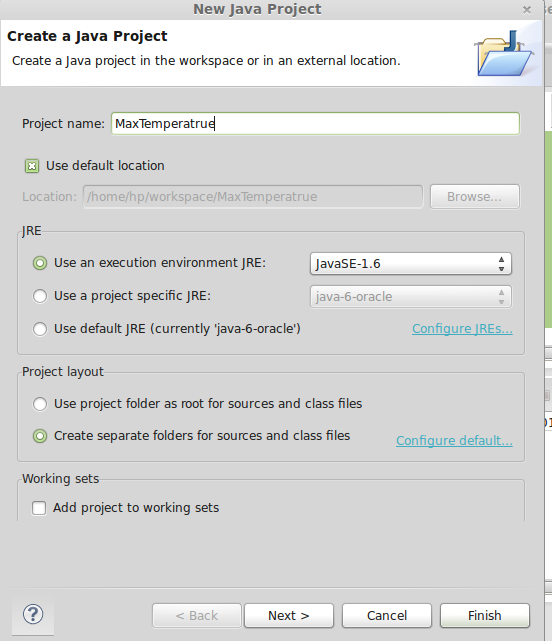

1. Create a new Java project

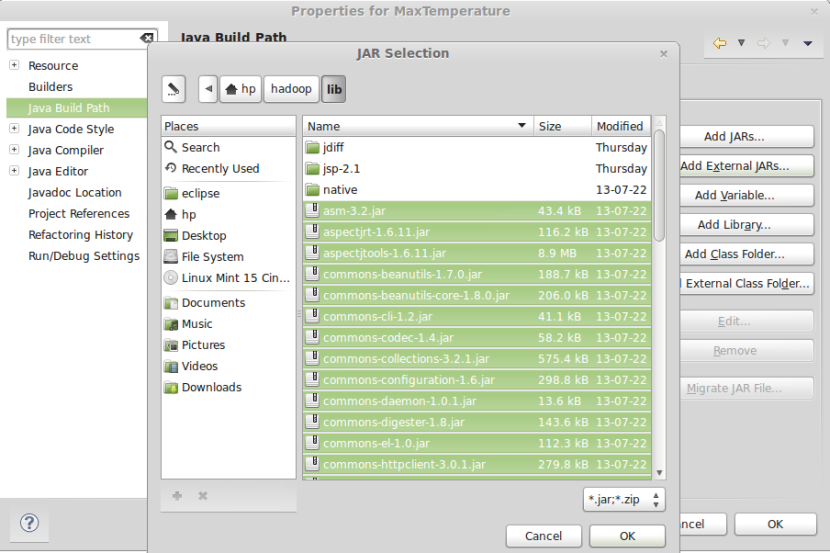

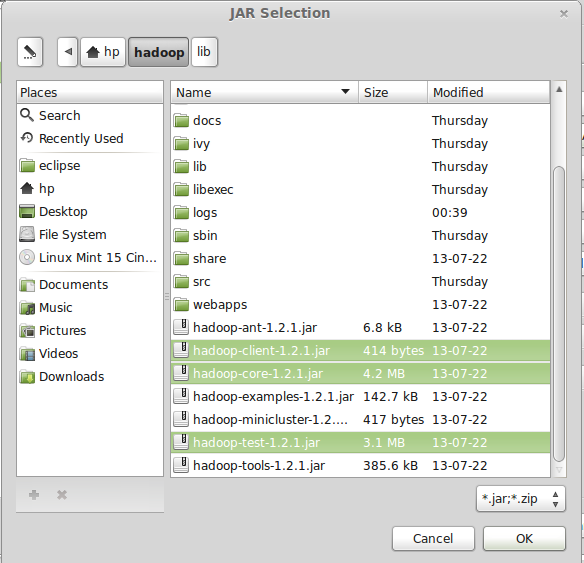

2. Add dependency JARs

Right-click the project, open Properties, and select Java Build Path.

Add all JARs from $HADOOP_HOME/lib and $HADOOP_HOME (where the Hadoop core and tools JARs live).

3. Create mapper

    package com.letsdobigdata;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class MaxTemperatureMapper extends
            Mapper<LongWritable, Text, Text, IntWritable> {

        // Sentinel value the data uses for a missing temperature reading
        private static final int MISSING = 9999;

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            // The year and temperature sit at fixed column offsets in each record
            String year = line.substring(15, 19);
            int airTemperature;
            if (line.charAt(87) == '+') { // parseInt doesn't like leading plus signs
                airTemperature = Integer.parseInt(line.substring(88, 92));
            } else {
                airTemperature = Integer.parseInt(line.substring(87, 92));
            }
            String quality = line.substring(92, 93);
            // Emit (year, temperature) only for valid readings
            if (airTemperature != MISSING && quality.matches("[01459]")) {
                context.write(new Text(year), new IntWritable(airTemperature));
            }
        }
    }

4. Create reducer

    package com.letsdobigdata;

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class MaxTemperatureReducer
            extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        public void reduce(Text key, Iterable<IntWritable> values,
                Context context)
                throws IOException, InterruptedException {
            // Scan all temperatures emitted for this year and keep the largest
            int maxValue = Integer.MIN_VALUE;
            for (IntWritable value : values) {
                maxValue = Math.max(maxValue, value.get());
            }
            context.write(key, new IntWritable(maxValue));
        }
    }

5. Create driver for MapReduce job

The MapReduce job is launched through ToolRunner, a useful Hadoop utility class.

package com.letsdobigdata;

import org.apache.hadoop.conf.configured;

import org.apache.hadoop.fs.path;

import org.apache.hadoop.io.intwritable;

import org.apache.hadoop.io.text;

import org.apache.hadoop.mapreduce.job;

import org.apache.hadoop.mapreduce.lib.input.fileinputformat;

import org.apache.hadoop.mapreduce.lib.output.fileoutputformat;

import org.apache.hadoop.util.tool;

import org.apache.hadoop.util.toolrunner;

/*this class is responsible for running map reduce job*/

public class maxtemperaturedriver extends configured implements tool{

public int run(string[] args) throws exception

{

if(args.length !=2) {

system.err.println("usage: maxtemperaturedriver <input path> <outputpath>");

system.exit(-1);

}

job job = new job();

job.setjarbyclass(maxtemperaturedriver.class);

job.setjobname("max temperature");

fileinputformat.addinputpath(job, new path(args[0]));

fileoutputformat.setoutputpath(job,new path(args[1]));

job.setmapperclass(maxtemperaturemapper.class);

job.setreducerclass(maxtemperaturereducer.class);

job.setoutputkeyclass(text.class);

job.setoutputvalueclass(intwritable.class);

system.exit(job.waitforcompletion(true) ? 0:1);

boolean success = job.waitforcompletion(true);

return success ? 0 : 1;

}

public static void main(string[] args) throws exception {

maxtemperaturedriver driver = new maxtemperaturedriver();

int exitcode = toolrunner.run(driver, args);

system.exit(exitcode);

}

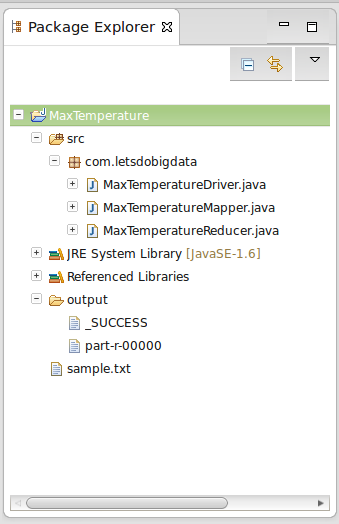

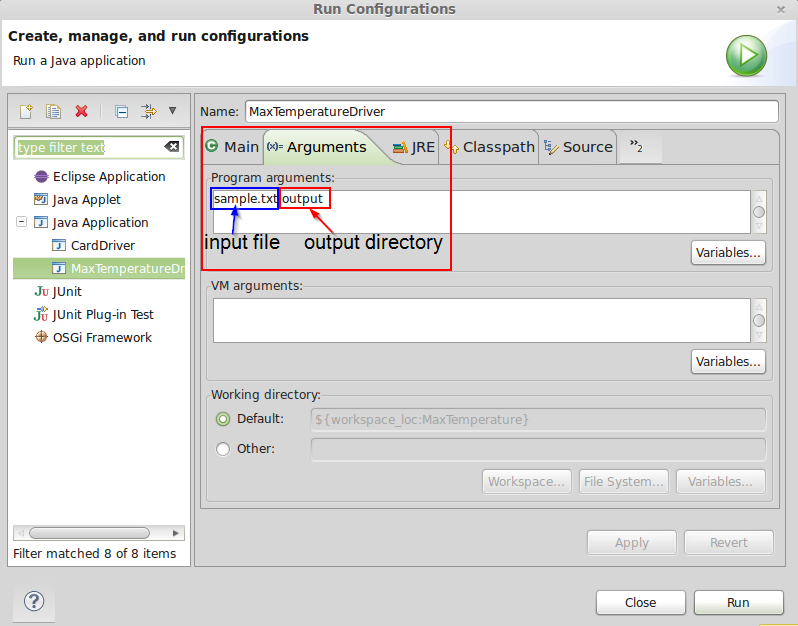

}6. supply input and output

We need to supply an input file to be used during the map phase; the reduce task writes the final output to the output directory. Edit the run configuration and supply both paths as command-line arguments. sample.txt resides in the project root. Your Project Explorer should contain the following:

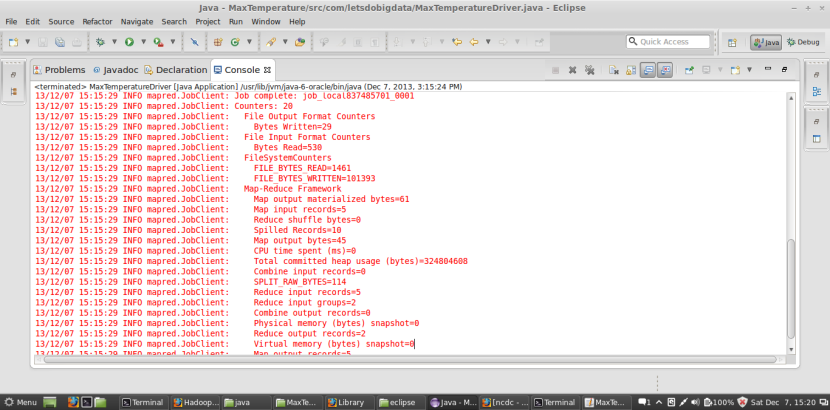

7. MapReduce job execution

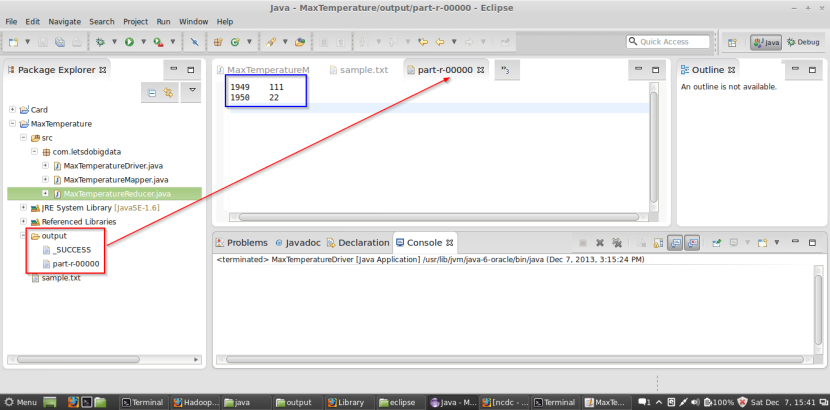

8. Final output

If you managed to come this far: once the job is complete, it creates the output directory containing _SUCCESS and part-r-nnnnn files. Double-click the part file to view it in the Eclipse editor. We supplied 5 rows of weather data (downloaded from NCDC weather) and wanted to find the maximum temperature for each year in the input file, so the output contains 2 rows, one per supplied year, with the maximum temperature in tenths of a degree Celsius:

1949 111 (11.1 °C)

1950 22 (2.2 °C)
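To sanity-check this result without starting a job, the parse-and-max logic of the mapper and reducer can be sketched in plain Java with no Hadoop dependency. This is only an illustration: the class `MaxTemperatureSketch`, its helper methods, and the synthetic records are assumptions made for this example. The records are not real NCDC lines; they are zero-filled strings with the year, temperature, and quality code placed at the offsets the mapper reads, using values chosen so that the result matches the two rows above.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (no Hadoop) of the mapper's parsing and the reducer's max.
class MaxTemperatureSketch {

    private static final int MISSING = 9999;

    // Builds a synthetic 93-character record (NOT a real NCDC line): filler
    // zeros with the three fields at the offsets the mapper expects.
    static String syntheticRecord(String year, int tenthsOfDegree, char quality) {
        char[] chars = new char[93];
        Arrays.fill(chars, '0');
        year.getChars(0, 4, chars, 15);                       // offsets 15-18: year
        String temp = String.format(Locale.ROOT, "%+05d", tenthsOfDegree);
        temp.getChars(0, 5, chars, 87);                       // offsets 87-91: signed temperature
        chars[92] = quality;                                  // offset 92: quality code
        return new String(chars);
    }

    // Returns the temperature in tenths of a degree, or null when the reading
    // is missing or fails the quality check (same rule as the mapper).
    static Integer parseTemperature(String line) {
        int start = line.charAt(87) == '+' ? 88 : 87;         // skip a leading plus sign
        int airTemperature = Integer.parseInt(line.substring(start, 92));
        String quality = line.substring(92, 93);
        if (airTemperature != MISSING && quality.matches("[01459]")) {
            return airTemperature;
        }
        return null;
    }

    // Groups valid readings by year and keeps the maximum, like the reducer.
    static Map<String, Integer> maxByYear(Iterable<String> lines) {
        Map<String, Integer> max = new TreeMap<>();
        for (String line : lines) {
            Integer temperature = parseTemperature(line);
            if (temperature != null) {
                max.merge(line.substring(15, 19), temperature, Integer::max);
            }
        }
        return max;
    }

    // Converts tenths of a degree to degrees Celsius.
    static double toCelsius(int tenthsOfDegree) {
        return tenthsOfDegree / 10.0;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                syntheticRecord("1950", 0, '1'),
                syntheticRecord("1950", 22, '1'),
                syntheticRecord("1950", -11, '1'),
                syntheticRecord("1949", 111, '1'),
                syntheticRecord("1949", 78, '1'));
        for (Map.Entry<String, Integer> entry : maxByYear(lines).entrySet()) {
            System.out.println(entry.getKey() + "\t" + entry.getValue()
                    + "\t" + toCelsius(entry.getValue()));
        }
    }
}
```

Keeping the parsing in a small static method like this also makes it easy to step through in the Eclipse debugger before wiring it into a real Mapper.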

Make sure you delete the output directory before running your application again; otherwise Hadoop will fail with an error saying the directory already exists.

Happy Hadooping!
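If deleting the directory by hand gets tedious, the cleanup can be scripted. The following is a minimal sketch, assuming the job runs from Eclipse against the local file system and that the output directory is a local folder named `output` in the project root (both are assumptions, as are the class and method names). It uses only `java.nio.file` from the JDK, not the Hadoop FileSystem API.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: removes a local output directory left by a previous run.
class OutputDirCleaner {

    // Recursively deletes the directory if it exists.
    // Returns true if something was deleted, false if there was nothing to do.
    static boolean deleteIfExists(Path dir) throws IOException {
        if (!Files.exists(dir)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order puts children before their parent directories.
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path path : paths) {
            Files.delete(path);
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        // "output" is an assumed default; pass the real path as the first argument.
        Path dir = Paths.get(args.length > 0 ? args[0] : "output");
        System.out.println(deleteIfExists(dir) ? "Deleted " + dir : "Nothing to delete at " + dir);
    }
}
```

Run it before the job, or call `deleteIfExists` at the start of your own launcher. Be careful with the path you pass in, since the delete is recursive.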

Published at DZone with permission of Hardik Pandya, DZone MVB. See the original article here.
