Revamped ShardingSphere-On-Cloud: What’s New in Version 0.2.0 with CRD ComputeNode

This article discusses Apache ShardingSphere-On-Cloud version 0.2.0, which includes a new CRD, ComputeNode, for ShardingSphere Operator.


The latest version of Apache ShardingSphere-On-Cloud, version 0.2.0, introduces a new CRD, ComputeNode, for ShardingSphere Operator. This addition lets users define computing nodes entirely within the ShardingSphere structure.

Introduction to ComputeNode

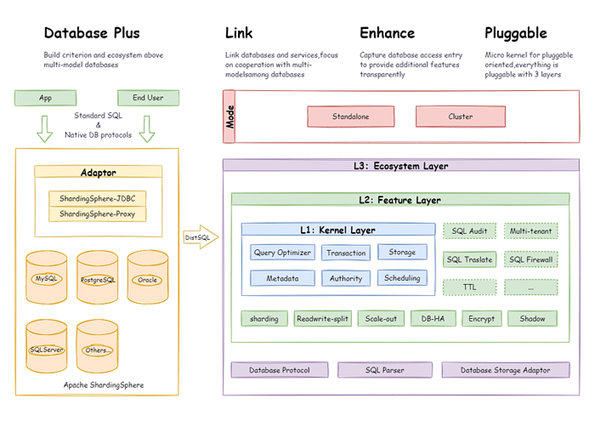

The primary building blocks of Apache ShardingSphere's traditional architecture are computing nodes, storage nodes, and governance nodes. ShardingSphere Proxy acts as the computing node and serves as the entry point for all data traffic; it is also responsible for data governance functions such as distribution and balancing. The storage nodes are the underlying databases that hold the actual data, while the governance node stores ShardingSphere metadata, including sharding rules, encryption rules, and read-write splitting rules. The governance node is built on components such as ZooKeeper and Etcd.

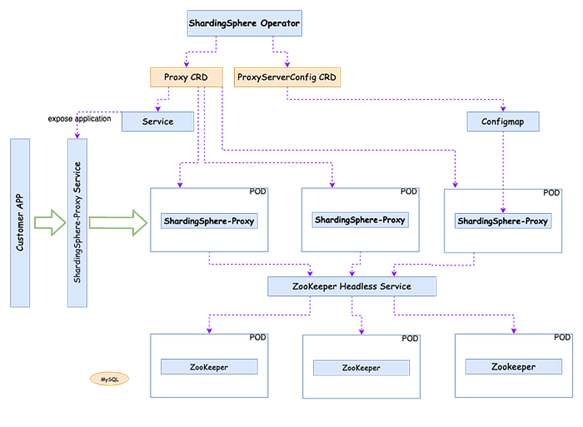

In version 0.1.x of ShardingSphere Operator, two CRD components, Proxy and ProxyServerConfig, were introduced to describe the deployment and configuration of ShardingSphere Proxy, as shown in the figure below.

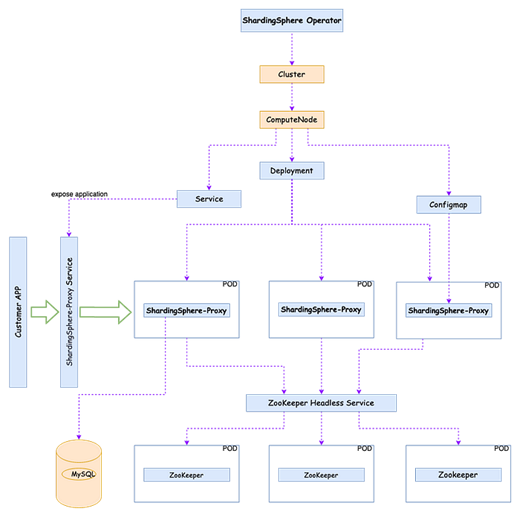

These components provide basic deployment and maintenance capabilities for ShardingSphere Proxy that are sufficient for Proof of Concept (PoC) environments. However, for the Operator to be useful in production, it must handle a much wider range of scenarios, including cross-version upgrades, graceful session termination, horizontal elastic scaling based on multiple metrics, location-aware traffic scheduling, configuration security, and cluster-level high availability. To provide these management capabilities, ShardingSphere-On-Cloud introduces a new group of objects. The first object in this group is ComputeNode, illustrated in the figure below:

Compared with Proxy and ProxyServerConfig, ComputeNode adds support for cross-version upgrades, horizontal elastic scaling, and configuration security. ComputeNode is still at the v1alpha1 stage and must be enabled through a feature gate.
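Kubernetes components conventionally accept feature gates as a comma-separated list of `Name=true|false` pairs (for example, `--feature-gates=ComputeNode=true`). The sketch below shows how such a value can be parsed and checked, with alpha features treated as disabled unless explicitly turned on. The gate name `ComputeNode` and the flag format are assumptions based on that Kubernetes convention, not taken from the operator's documentation; check the operator's command-line flags or Helm chart values for the exact way to enable it.

```python
"""Minimal sketch of Kubernetes-style feature-gate parsing.

Assumption: gates are passed as "Name=bool,Name=bool" (the Kubernetes
convention). The gate name "ComputeNode" is used here only for illustration.
"""


def parse_feature_gates(value: str) -> dict[str, bool]:
    """Parse "A=true,B=false" into {"A": True, "B": False}."""
    gates: dict[str, bool] = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate empty segments such as a trailing comma
        name, sep, raw = item.partition("=")
        if not sep:
            raise ValueError(f"missing '=' in feature gate {item!r}")
        raw = raw.strip().lower()
        if raw not in ("true", "false"):
            raise ValueError(f"invalid boolean {raw!r} for gate {name!r}")
        gates[name.strip()] = raw == "true"
    return gates


def is_enabled(gates: dict[str, bool], name: str, default: bool = False) -> bool:
    """Alpha features stay off unless explicitly enabled."""
    return gates.get(name, default)


if __name__ == "__main__":
    gates = parse_feature_gates("ComputeNode=true")
    print(is_enabled(gates, "ComputeNode"))  # True
```

Defaulting unknown gates to `False` mirrors the "must be enabled" behavior described above: omitting the gate leaves the alpha feature switched off.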

ComputeNode Practice

Quick Installation of ShardingSphere Operator

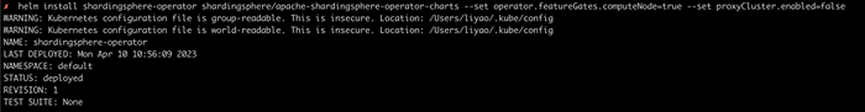

To quickly set up a ShardingSphere Proxy cluster using ComputeNode, execute the following helm commands:

```shell
helm repo add shardingsphere-on-cloud https://charts.shardingsphere.io
helm install shardingsphere-on-cloud/shardingsphere-operator --version 0.2.0 --generate-name
```

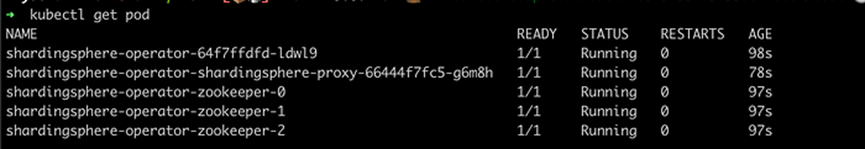

The deployment status of the ShardingSphere Proxy cluster can be checked with:

```shell
kubectl get pod
```

Now, a complete cluster managed by ShardingSphere Operator has been deployed.

Checking the ShardingSphere Proxy Cluster Status Using kubectl get

Besides `kubectl get pod`, the status of the ShardingSphere Proxy cluster can be checked through its ComputeNode:

```shell
kubectl get computenode
```

The output includes the following columns:

- READYINSTANCES: the number of ShardingSphere Pods in the Ready state
- PHASE: the current status of the cluster
- CLUSTER-IP: the ClusterIP of the cluster's Service
- SERVICEPORTS: the list of ports exposed by the cluster's Service
- AGE: how long ago the cluster was created
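When scripting a deployment, READYINSTANCES is the column to watch: the cluster can be treated as fully available once it reaches the requested replica count. The sketch below polls a caller-supplied function until that happens or a timeout expires. `fetch_ready_instances` is a hypothetical stand-in for whatever reads the value (for instance, a wrapper around `kubectl get computenode`); it is not an API of ShardingSphere Operator.

```python
"""Sketch: wait until a ComputeNode reports all replicas ready.

fetch_ready_instances is a hypothetical callable standing in for a real
status query; it is not part of ShardingSphere Operator.
"""
import time
from typing import Callable


def wait_until_ready(
    fetch_ready_instances: Callable[[], int],
    replicas: int,
    timeout_s: float = 300.0,
    interval_s: float = 5.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Return True once ready instances >= replicas, False on timeout."""
    deadline = clock() + timeout_s
    while True:
        # Compare the READYINSTANCES value with the desired replica count.
        if fetch_ready_instances() >= replicas:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

The clock and sleep functions are injectable so the loop can be exercised without real waiting; in normal use the defaults (`time.monotonic` and `time.sleep`) apply.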

Customizing ComputeNode Configuration

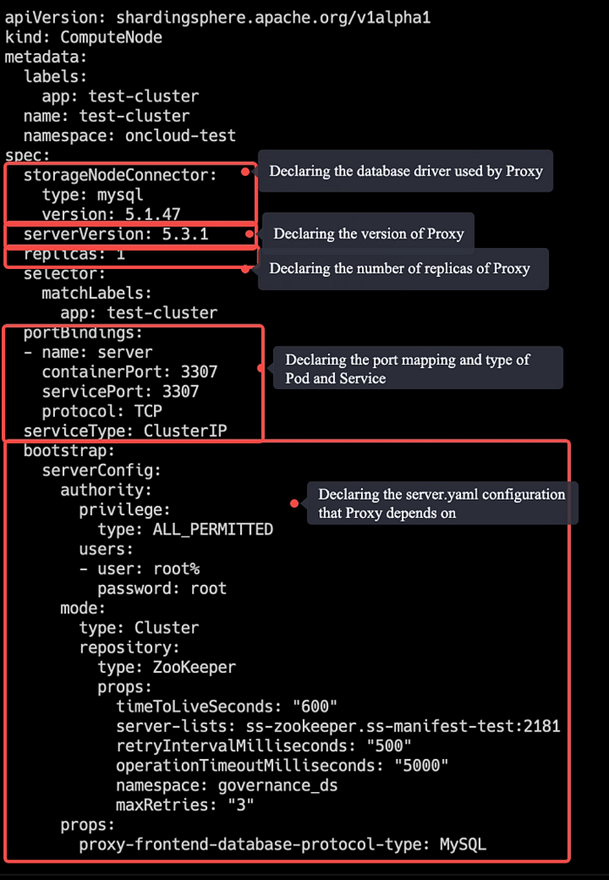

If the ComputeNode installed by the operator's default charts does not meet your requirements, you can write a ComputeNode YAML file manually and submit it to Kubernetes for deployment:

```yaml
apiVersion: shardingsphere.apache.org/v1alpha1
kind: ComputeNode
metadata:
  labels:
    app: foo
  name: foo
spec:
  storageNodeConnector:
    type: mysql
    version: 5.1.47
  serverVersion: 5.3.1
  replicas: 1
  selector:
    matchLabels:
      app: foo
  portBindings:
  - name: server
    containerPort: 3307
    servicePort: 3307
    protocol: TCP
  serviceType: ClusterIP
  bootstrap:
    serverConfig:
      authority:
        privilege:
          type: ALL_PERMITTED
        users:
        - user: root@%
          password: root
      mode:
        type: Cluster
        repository:
          type: ZooKeeper
          props:
            timeToLiveSeconds: "600"
            server-lists: shardingsphere-operator-zookeeper.default:2181
            retryIntervalMilliseconds: "500"
            operationTimeoutMilliseconds: "5000"
            namespace: governance_ds
            maxRetries: "3"
      props:
        proxy-frontend-database-protocol-type: MySQL
```

Save the above configuration as foo.yml and execute the following command to create it:

```shell
kubectl apply -f foo.yml
```

The example above can also be found in our GitHub repository.
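If manifests are generated by a script rather than written by hand, the same resource can be built as a plain data structure and serialized. `kubectl apply -f` accepts JSON as well as YAML, so the sketch below emits JSON using only the standard library. The helper `compute_node_manifest` and its parameters are hypothetical; the field layout simply mirrors the foo.yml example, and the ZooKeeper address is assembled from the in-cluster `<service>.<namespace>:<port>` form used in `server-lists`.

```python
"""Sketch: build the foo ComputeNode manifest in code and print it as JSON.

compute_node_manifest is a hypothetical helper; field names mirror the
foo.yml example in this article. kubectl apply -f accepts JSON files.
"""
import json


def compute_node_manifest(
    name: str,
    replicas: int = 1,
    server_version: str = "5.3.1",
    port: int = 3307,
    zk_service: str = "shardingsphere-operator-zookeeper",
    zk_namespace: str = "default",
    zk_port: int = 2181,
) -> dict:
    labels = {"app": name}  # foo.yml uses the same label for metadata and selector
    return {
        "apiVersion": "shardingsphere.apache.org/v1alpha1",
        "kind": "ComputeNode",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "storageNodeConnector": {"type": "mysql", "version": "5.1.47"},
            "serverVersion": server_version,
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "portBindings": [{
                "name": "server",
                "containerPort": port,
                "servicePort": port,
                "protocol": "TCP",
            }],
            "serviceType": "ClusterIP",
            "bootstrap": {"serverConfig": {
                "authority": {
                    "privilege": {"type": "ALL_PERMITTED"},
                    # Example credentials copied from foo.yml; change them for real use.
                    "users": [{"user": "root@%", "password": "root"}],
                },
                "mode": {"type": "Cluster", "repository": {
                    "type": "ZooKeeper",
                    "props": {
                        # <service>.<namespace>:<port> resolves inside the cluster.
                        "server-lists": f"{zk_service}.{zk_namespace}:{zk_port}",
                        "namespace": "governance_ds",
                        "timeToLiveSeconds": "600",
                        "retryIntervalMilliseconds": "500",
                        "operationTimeoutMilliseconds": "5000",
                        "maxRetries": "3",
                    },
                }},
                "props": {"proxy-frontend-database-protocol-type": "MySQL"},
            }},
        },
    }


if __name__ == "__main__":
    print(json.dumps(compute_node_manifest("foo"), indent=2))
```

Redirecting the output to a file (for example, `python foo_manifest.py > foo.json`, where the script name is hypothetical) produces a manifest that can be applied with `kubectl apply -f foo.json`.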

Other Improvements

Version 0.2.0 of Apache ShardingSphere-On-Cloud also includes other enhancements, such as support for rolling upgrade parameters in the annotations of the ShardingSphereProxy CRD and fixes for readyNodes and Conditions issues in the ShardingSphereProxy Status field in certain scenarios. Further changes include:

- Introduced the scale subresource to ComputeNode to support kubectl scale #189

- Separated the construction and update logic of ComputeNode and ShardingSphereProxy #182

- Wrote NodePort back to ComputeNode definition #187

- Fixed NullPointerException caused by non-MySQL configurations #179

- Refactored Manager configuration logic and separated command line configuration #192

- Fixed Docker build process in CI #173
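With the scale subresource in place, a ComputeNode can be resized the same way as a Deployment, for example with `kubectl scale computenode foo --replicas=3`. A CRD scale subresource presents the custom resource as an `autoscaling/v1` Scale object: the CRD declares which field holds the desired replica count (`specReplicasPath`) and which reports the observed count (`statusReplicasPath`). The sketch below mimics that mapping on a plain dict to show what a scale request changes. Both paths are assumptions here (`.spec.replicas` matches the field in foo.yml; `.status.readyInstances` is hypothetical), so check the actual ComputeNode CRD before relying on them.

```python
"""Sketch of how a CRD scale subresource maps a custom resource to a Scale view.

Assumptions: specReplicasPath is ".spec.replicas" and statusReplicasPath is
".status.readyInstances" (hypothetical). Only a subset of Scale is modeled.
"""
import copy

SPEC_REPLICAS_PATH = ".spec.replicas"
STATUS_REPLICAS_PATH = ".status.readyInstances"  # hypothetical path


def _get(obj: dict, path: str, default=None):
    """Resolve a simple dotted path such as ".spec.replicas"."""
    cur = obj
    for key in path.lstrip(".").split("."):
        if not isinstance(cur, dict) or key not in cur:
            return default
        cur = cur[key]
    return cur


def _set(obj: dict, path: str, value) -> None:
    """Set a simple dotted path, creating intermediate dicts as needed."""
    keys = path.lstrip(".").split(".")
    cur = obj
    for key in keys[:-1]:
        cur = cur.setdefault(key, {})
    cur[keys[-1]] = value


def scale_view(cr: dict) -> dict:
    """Project a custom resource onto an autoscaling/v1 Scale-shaped dict."""
    return {
        "apiVersion": "autoscaling/v1",
        "kind": "Scale",
        "metadata": {"name": _get(cr, ".metadata.name")},
        "spec": {"replicas": _get(cr, SPEC_REPLICAS_PATH, 0)},
        "status": {"replicas": _get(cr, STATUS_REPLICAS_PATH, 0)},
    }


def apply_scale(cr: dict, replicas: int) -> dict:
    """Return a copy of cr with the desired replica field updated."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    updated = copy.deepcopy(cr)
    _set(updated, SPEC_REPLICAS_PATH, replicas)
    return updated
```

A scale request only rewrites the desired count; the observed count changes later, once the operator has reconciled the new Pods.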

Wrap Up

To sum up, the latest version, 0.2.0, of Apache ShardingSphere-On-Cloud has introduced the new CRD ComputeNode for ShardingSphere Operator, which delivers a wide range of management abilities that are crucial in production environments. With ComputeNode, users can define computing nodes completely within the ShardingSphere structure and address various scenarios and challenges, such as cross-version upgrades, graceful session terminations, horizontal elastic scaling, location-aware traffic scheduling, configuration security, and cluster-level high availability.

Community Contribution

This ShardingSphere-On-Cloud 0.2.0 release is the result of 22 merged PRs from two contributors. Thank you for your love and passion for open source!

GitHub IDs:

- mlycore

- xuanyuan300


Published at DZone with permission of Tony Zhu.
