What You Need to Know to Test Kafka

Kafka and distributed streams can come in handy when working with microservices. Read on for an introduction to testing Kafka and make sure you're on the right track.

1. Introduction

In this article, we will briefly explore some fundamental concepts of Kafka distributed streams and learn how to test an application built with Kafka.

This will give us some insight into what we can test in a microservices architecture involving Kafka.

For more details about automated testing of Kafka applications, with running examples on GitHub, please visit A Quick and Practical Example of Kafka Testing on DZone.

1.1. How Kafka is Different from Other MQs

Message queues (MQs) such as Kafka, ActiveMQ, and RabbitMQ are messaging technologies used for asynchronous communication. They all decouple the server from the client when sending and receiving messages.

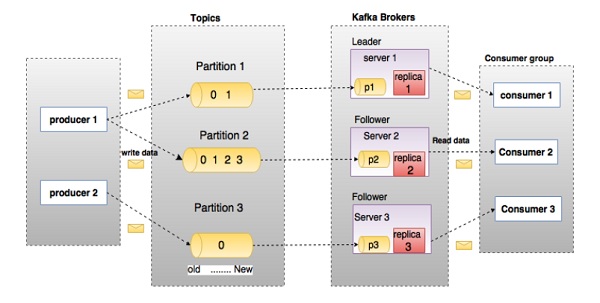

Kafka, however, is a distributed, partitioned, replicated messaging service built for parallel processing. Although it provides the functionality of a simple messaging system, it was designed specifically for high-throughput distributed messaging.

Image credit: tutorial-point

2. Things We Need To Know

Kafka is a distributed messaging system. Being distributed solves many real-world problems, but it also introduces testing scenarios and challenges that we need to address.

When working with Kafka applications, we need to know at least the following concepts:

- Broker

- Topic

- Partitions

- Record

- Produce

- Consume

We need to know which brokers a topic resides on, what type of messages (a.k.a. records) are produced to the topic, and what happens when those messages are consumed by the listeners.

Once we know these basics, we are well placed to test most Kafka applications.

For more details about Kafka, please visit Kafka Architecture and More.

2.1. What Does a Broker Do?

A broker is a Kafka server. A broker allows the producers to send messages, and consumers to fetch messages by topic, partition, and offset.
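To make "fetch by topic, partition, and offset" concrete, here is a minimal in-memory sketch. `InMemoryBroker`, `append`, and `fetch` are hypothetical names, not Kafka's API; the sketch only assumes what the paragraph above states: each topic partition is an append-only log, and a record's offset is its position in that log.

```python
from collections import defaultdict


class InMemoryBroker:
    """Hypothetical toy broker: NOT the Kafka API, just the storage idea.

    Each (topic, partition) pair is an append-only log, and a record's
    offset is simply its index in that log.
    """

    def __init__(self):
        self._logs = defaultdict(list)  # (topic, partition) -> [(key, value), ...]

    def append(self, topic, partition, key, value):
        # A producer write: append to the partition log, return the new offset.
        log = self._logs[(topic, partition)]
        log.append((key, value))
        return len(log) - 1

    def fetch(self, topic, partition, offset, max_records=10):
        # A consumer read: return (offset, key, value) tuples from `offset` on.
        log = self._logs[(topic, partition)]
        end = min(len(log), offset + max_records)
        return [(i, *log[i]) for i in range(offset, end)]


broker = InMemoryBroker()
broker.append("greetings", 0, "1234", "Hello World")  # stored at offset 0
broker.append("greetings", 0, "5678", "Hi again")     # stored at offset 1
print(broker.fetch("greetings", 0, offset=1))
```

Because a fetch starts from a given offset, a consumer can re-read or skip records simply by choosing where to start, which is also what makes offsets a useful thing to assert on in tests.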

2.2. What Are Topics and Partitions?

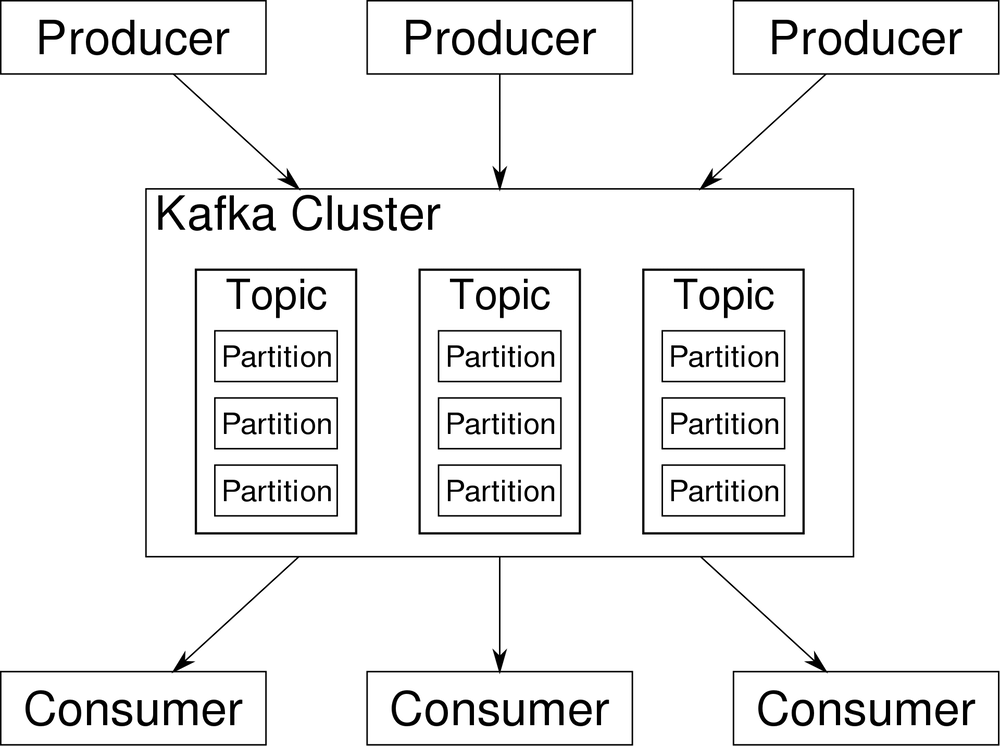

According to Kevin Sookocheff, "Kafka topics are divided into a number of partitions. Partitions allow you to parallelize a topic by splitting the data in a particular topic across multiple brokers — each partition can be placed on a separate machine to allow multiple consumers to read from a topic in parallel."
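For records that have a key, a common way to pick the partition is to hash the key and take the result modulo the number of partitions, so the same key always lands in the same partition. The sketch below assumes that scheme. Note that Kafka's default partitioner uses the murmur2 hash; `zlib.crc32` is swapped in here only to keep the example stdlib-only, so the partition numbers will not match a real cluster.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    # Stand-in hash: Kafka's default partitioner uses murmur2 on the
    # serialized key; CRC32 is used here purely for illustration.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


NUM_PARTITIONS = 3
for key in ["1234", "5678", "1234"]:
    # "1234" maps to the same partition both times.
    print(key, "->", choose_partition(key, NUM_PARTITIONS))
```

This key-to-partition stability is what lets a test assert that a given record was produced to a particular partition.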

2.3. Knowing the Record Format

A record is a message that can be written to or fetched from a topic. Records can come in various formats, e.g. RAW, JSON, CSV, AVRO, and many others.

Records are represented as key-value pairs, e.g. "key": "1234", "value": "Hello World". A Kafka record can also carry a timestamp and optional headers.
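Kafka stores keys and values as bytes, and the key and value are serialized independently. The sketch below assumes a plain UTF-8 key and a JSON-encoded value; the `Record` class and the `serialize`/`deserialize` helpers are hypothetical, chosen only to show why a test should care about the record format.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Record:
    """Hypothetical key-value record model (not a Kafka class)."""
    key: str
    value: str


def serialize(record: Record) -> Tuple[bytes, bytes]:
    # Key and value are encoded separately: key as UTF-8, value as JSON.
    key_bytes = record.key.encode("utf-8")
    value_bytes = json.dumps(record.value).encode("utf-8")
    return key_bytes, value_bytes


def deserialize(key_bytes: bytes, value_bytes: bytes) -> Record:
    return Record(key=key_bytes.decode("utf-8"),
                  value=json.loads(value_bytes.decode("utf-8")))


original = Record(key="1234", value="Hello World")
key_bytes, value_bytes = serialize(original)
print(key_bytes, value_bytes)  # b'1234' b'"Hello World"'
assert deserialize(key_bytes, value_bytes) == original  # lossless round trip
```

A round-trip assertion like the last line is a cheap check that producer and consumer agree on the format before any broker is involved.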

2.4. Producer Testing

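'Produce' simply means writing one or more records (key-value pairs) to a topic. For example, we produce a record with "key": "1234", "value": "Hello World". A producer test then asserts that the write succeeded, for instance by checking the topic, partition, and offset reported back for the record.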
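A producer test can be sketched without a running cluster. The example below uses a hypothetical in-memory `FakeProducer` and `RecordMetadata` (not Kafka classes), assuming that a successful send reports back the topic, partition, and offset of the written record. For Java projects, the Kafka client library ships its own `MockProducer` for the same purpose; this Python version just illustrates the shape of the test.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RecordMetadata:
    # Hypothetical: models the topic/partition/offset acknowledgement.
    topic: str
    partition: int
    offset: int


class FakeProducer:
    """Hypothetical in-memory producer, for illustration only."""

    def __init__(self, num_partitions: int = 1):
        self.num_partitions = num_partitions
        self.logs = defaultdict(list)  # (topic, partition) -> [(key, value), ...]

    def send(self, topic: str, key: str, value: str,
             partition: Optional[int] = None) -> RecordMetadata:
        if partition is None:
            partition = 0  # simplification: no real partitioner here
        if not 0 <= partition < self.num_partitions:
            raise ValueError(f"partition {partition} does not exist")
        log = self.logs[(topic, partition)]
        log.append((key, value))
        return RecordMetadata(topic, partition, len(log) - 1)


def test_produce_hello_world():
    producer = FakeProducer(num_partitions=3)
    metadata = producer.send("greetings", "1234", "Hello World", partition=2)
    # The write is acknowledged with the expected topic, partition and offset...
    assert metadata == RecordMetadata("greetings", 2, 0)
    # ...and the record actually landed in that partition's log.
    assert producer.logs[("greetings", 2)] == [("1234", "Hello World")]


test_produce_hello_world()
print("producer test passed")
```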

2.5. Consumer Testing

'Consume' simply means fetching one or more records (key-value pairs) from one or more topics.

A consumer test then asserts that the record we produced, i.e. "key": "1234", "value": "Hello World", is present among the fetched records. We assert presence rather than an exact match on the whole response because we might consume more than one record if others were produced to the same topic before we started consuming from it.
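The difference between asserting presence and asserting an exact match can be shown with a plain list standing in for a topic. The `consume` helper and the stale record below are hypothetical; the sketch only assumes that a consumer reading from an early offset sees everything already in the topic.

```python
def consume(topic_log, from_offset=0):
    """Hypothetical consumer: return every record from `from_offset` onward."""
    return topic_log[from_offset:]


# Another test (or an earlier run) already wrote to the same topic.
topic_log = [("9999", "Stale message")]
# Our test then produces its own record.
topic_log.append(("1234", "Hello World"))

fetched = consume(topic_log, from_offset=0)
expected = ("1234", "Hello World")

# An exact match on the whole batch fails because of the stale record...
assert fetched != [expected]
# ...while a membership check is robust to records we did not produce.
assert expected in fetched
print(f"found {expected} among {len(fetched)} fetched records")
```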

3. Things We Can Test in Kafka Applications

Put simply, we can assert that we successfully produced (wrote) a particular record or stream of records to a topic. We can also assert on the record or stream of records consumed (fetched) from one or more topics.

Beyond that, we can dig deeper and assert at a more granular level, for instance:

- Whether we have produced the record to a particular partition.

- The type of record we are able to produce or consume.

- The number of records written to a topic or fetched from a topic.

- The offset of the record.

- Sending and receiving AVRO or JSON records and asserting the outcome.

- The record(s) in a DLQ (dead letter queue) and their record metadata.

- Records validated against AVRO schemas held in a Schema Registry.

- The results of KSQL queries, which query streaming data in a SQL fashion.
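Several of these granular checks (record count, offsets, partition, and DLQ contents) can be combined in one test. The sketch below assumes a hypothetical consumer that forwards valid JSON to an `orders` topic and routes anything unparseable to a dead letter queue named `orders.DLQ`; the topic names, the routing rule, and the helper functions are all illustrative assumptions, not part of any Kafka API.

```python
import json
from collections import defaultdict

# Hypothetical in-memory topics: name -> [(partition, offset, key, value), ...]
topics = defaultdict(list)
next_offset = defaultdict(int)  # (topic, partition) -> next offset to assign


def produce(topic, partition, key, value):
    offset = next_offset[(topic, partition)]
    next_offset[(topic, partition)] += 1
    topics[topic].append((partition, offset, key, value))
    return partition, offset


def process(key, raw_value):
    # Hypothetical routing: valid JSON goes to "orders",
    # unparseable payloads go to the dead letter queue "orders.DLQ".
    try:
        json.loads(raw_value)
    except json.JSONDecodeError:
        return produce("orders.DLQ", 0, key, raw_value)
    return produce("orders", 0, key, raw_value)


process("1", '{"item": "book"}')
process("2", "not-json")
process("3", '{"item": "pen"}')

assert len(topics["orders"]) == 2                         # record count
assert [r[1] for r in topics["orders"]] == [0, 1]         # offsets
assert all(r[0] == 0 for r in topics["orders"])           # partition
assert topics["orders.DLQ"] == [(0, 0, "2", "not-json")]  # DLQ record
```

In a real setup, the same assertions would run against records fetched from the broker rather than an in-memory dictionary.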

4. Conclusion

In this short tutorial, we learned some fundamental Kafka concepts and the minimum we need to know before starting on Kafka testing. We also looked at what we can cover in our tests.

For more practical examples of Kafka testing, please visit A Quick and Practical Example of Kafka Testing on DZone.

Opinions expressed by DZone contributors are their own.