Analyze Massive Data Graphs On Your PC With This Device

Here's a cool new device dedicated solely to sort/reduce massive data graphs. It's fast, cheap, and uses far less power.

Join the DZone community and get the full member experience.

Join For FreeWe like our computers to be general-purpose, but specialty hardware has been creeping in since the beginning. Remember when Intel introduced the 287 math coprocessor, a dedicated chip that only did math? It was faster, more efficient, and allowed logic operations to be done concurrently. Today, lots of high-level functionality is done in auxiliary devices. Here's a cool new device dedicated solely to sort/reduce massive data graphs: Fast, cheap, and uses far less power.

Everyone is aware of the mounting problem we're having with the astronomical power consumption of our new High-Performance Computing (HPC). All of the astonishing machine learning were doing these days continually spotlights the situation. In fact power consumption is so great that the heat dissipation is a major consideration for locating new computational centers. Google tries to build them next to rivers just to take advantage of the water as a heat sink. Microsoft is building HPC computer arrays that are sealed in watertight containers and placed on the sea floor (where the water is very cold). These are obviously extreme solutions.Researchers at MIT CSAIL Found a smarter way to do Graph Analysis algorithms in dedicated hardware. We're all familiar with the Nvidia GPGPUs, and Google has devised its specialized hardware to do tensor (matrix) manipulations called Tensor Processing Units (TPUs).

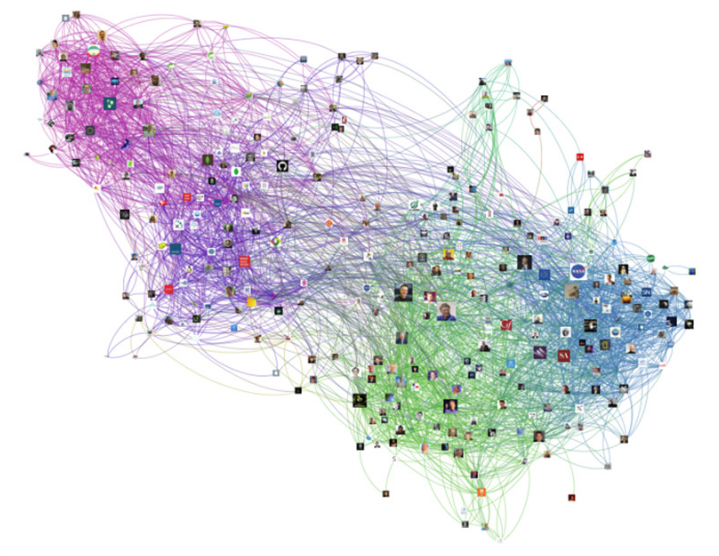

The CSAIL researchers tackled the problem of analyzing graphs. Conceptually, graphs are just a collection of nodes with connections drawn as lines between them (mathematicians call them "edges"). Visualize a large website. Imagine each page as a node and all of the links to other pages as lines drawn between the nodes. It can be a very large graph, but lots of other things are huge graphs:

The CSAIL researchers tackled the problem of analyzing graphs. Conceptually, graphs are just a collection of nodes with connections drawn as lines between them (mathematicians call them "edges"). Visualize a large website. Imagine each page as a node and all of the links to other pages as lines drawn between the nodes. It can be a very large graph, but lots of other things are huge graphs:

the huge social graph represented by Facebook friends

the gigantic graph represented by neurons in the brain

In order for it to be practical to navigate through such graphs, examining nodes of interest and exploring where they lead the graph must be in computer RAM. Why in RAM? The connections between nodes are simply memory pointers to other nodes. While the graph seems structured as a concept, it is actually implemented as random jumps all over the data space. Linked nodes are rarely near each other in memory. The graphs we manipulate today consist of billions of nodes and many billions of connections between those nodes. So, it's not surprising that machines used for graph manipulation typically have 128 GB of DRAM. Even at today's memory prices, that much memory is expensive. However, even with all that memory available, there is the power issue. Power usage goes up as a direct function of memory access. Viewing data at a specific location and/or altering that data is a direct determinant of how much power is consumed, and when one is ferociously traversing the graph, most of the work being done is memory access as opposed to CPU computation. When the graph gets larger than a single computer can hold, then the problems become worse because data must be shuttled between servers, which is orders of magnitude more power-hungry (and slower).

Initial attempts to combat the power-hungry DRAM were to replace it with flash memory. Flash uses much less power and is physically much smaller, but in a random access application, it is orders of magnitude slower (flash memory access speeds are somewhere between hard drives and RAM). Something more was needed, and in this case, they added searching algorithm special sauce. The idea was to sort the incoming stream of graph node address requests into something resembling more efficient walks through the tree picking up nodes as they went. Then, of course, the resulting nodes had to be merged with their original node address requests. Essentially, the hardware device implements a complete sort merge process using a small amount of DRAM and a large amount of flash RAM; but more on this a little later.

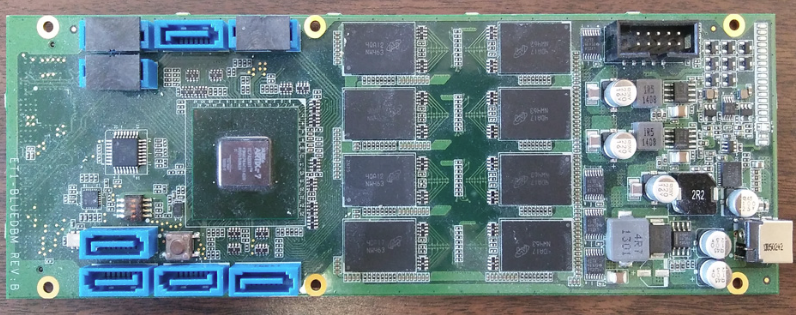

The research team tested their system against traditional hardware using the Web Data Commons Hyperlink Graph (3.5 billion nodes and 128 billion edges). The 128 gigabytes DRAM conventional server hardware cost about $10,000 USD. The device the team created was based around 1 GB of DRAM and 1 TB of inexpensive flash memory. It was designed to plug into a conventional PC. The device was also architected to be modular so multiple devices could be plugged together. In their tests, they demonstrated that two units plugged into a conventional PC ran at the same speed as the high-end, memory laden server. Again, because they are modular, the team could plug in several of these accelerator boards and process data graphs far larger than the high-end server was even capable of. Massive graphs containing up to 4 billion nodes and up to 128 billion edges could be processed in a PC box tucked away under your desk.

If the marketplace finds a demand for these types of devices, it's clear that an entire new world of research based on graph analytics will emerge. Kids in high school will be able to do science fair projects based on computational genetics that would've seemed undoable by the pioneers of genetic researchers just a decade ago. The cooling and power savings for large data centers will be significant. I'm always attracted to higher performance, but if it gets cheaper and at the same time it gets greener, what's not to like?

Sang Woo Jun is a CSAIL graduate student team member and first author of the introductory paper to be presented at the International Symposium on Computer Architecture (ISCA).

[GraFBoost: Using accelerated flash storage for external graph analytics - Sang-Woo Jun (MIT), Andy Wright (MIT), Sizhuo Zhang (MIT), Shuotao Xu (MIT), Arvind (MIT) ]

Woo Jun said, “The bottom line is that we can maintain performance with much smaller, fewer, and cooler — as in temperature and power consumption — machines.”

Analyzing graphs is a universal problem in today's big data computational environment. One of the co-authors on the paper is Arvind (Johnson Professor in Computer Science Engineering) pointed this out: “Graph processing is such a general idea. What does page ranking have in common with gene detection? For us, it’s the same computation problem — just different graphs with different meanings. The type of application someone develops will determine the impact it has on society.”

Some Background

Real-world graphs are usually very sparse structures. In order to navigate them, the algorithms need to follow links (pointers), which are usually a single word (eight bytes in a 64-bit architecture), in order to hop to the next connected node. This next node is potentially anywhere in the data space where the graph resides. As a result, many reads of word size chunks of data are required. This works fast when the entire graph is in the RAM, but as you recall, the RAM is expensive, and a commercially or scientifically interesting graph will require dozens of gigabytes (or even terabytes) of memory. Flash memory is cheap and very low power (the last 256 GB flash drive I bought cost less than $40 USD). It also has the advantage of retaining data with no power at all! The only disadvantage to flash is that it accesses the storage in blocks similar to the way disk drives read data in sectors. The block sizes are dependent on the particular flash device, but they usually range between 4K and 16 K in size. So, much like reading from a hard drive, you must read the entire block into RAM and then find the specific word within that block. The same thing happens when you write data to a flash drive. So, reading one 8 byte word from a flash device that uses 8K blocks is inherently 1000 times slower. On average, it probably uses more power than the be RAM because it's forced to access 1000 times as much data. Jun sums it up by saying, “If you need to access the entire 8 kilobytes, and use only 8 bytes and toss the rest, you end up throwing 1,000 times performance away.”

Enter, Sort, and Reduce

This new device is programmed to implement a “sort-reduce” algorithm. It optimizes the way the blocks are accessed so that more requested data can be extracted from each block read from the flash device. In essence, all of the individual word read requests are collected, buffered, and then sorted into groups that reside in the same flash block. So, for the cost of one flash block read, many individual word address requests can be fulfilled, which dramatically improves the throughput efficiency.

The algorithm also does a merge operation, which merges duplicate requests to read the same memory location. After duplicates are culled, the final operation sorts the remaining memory locations in order to simplify and speed up the unloading of the individual words from the retrieved flash data block. The researchers claim that using this sort-reduce tactic on two very large graphs saved about 90% of the flash memory access.

Custom Onboard Acceleration

Also, since all this sorting and reducing is a computational heavy lift, the research team added a custom processor on board the device. This custom accelerator handles all of the sorting and reducing required for the speed up and presents the data to the host PC as "already sorted and reduced." The PCs load is relatively light and relegated to mostly managerial tasks. As Arvind put it, “Accelerators are supposed to help the host compute, but we’ve come so far [with the computations] that the host becomes unimportant.”

Keshav Pingali, a professor of computer science at the University of Texas at Austin, added, “The MIT work shows a new way to perform analytics on very large graphs. Their work exploits flash memory to store the graphs and exploits ‘field-programmable gate arrays’ [custom integrated circuits] in an ingenious way to perform both the analytics and the data processing required to use flash memory effectively. In the long run, this may lead to systems that can process large amounts of data efficiently on laptops or desktops, which will revolutionize how we do big-data processing.”

Because the host can be so low-powered, Jun says, a long-term goal is to create a general-purpose platform and software library for consumers to develop their own algorithms for applications beyond graph analytics. “You could plug this platform into a laptop, download [the software], and write simple programs to get server-class performance on your laptop,” he says.

It seems like someday soon that there will be no network that is too big to be analyzed, and that's a net positive!

Opinions expressed by DZone contributors are their own.

Comments