Modern Cloud-Native Jakarta EE Frameworks: Tips, Challenges, and Trends.

Changes brought by cloud-native architecture impact applications in ways that weren't critical before.

Join the DZone community and get the full member experience.

Join For FreeJava has been evolving a lot over its 25 years, mainly in performance, integration with containers, and also since it is always updating every six months. But, how are Java frameworks going in this new era, where new demands for software architecture appear, such as the current cloud-native? What are the challenges they will face to keep Java alive for another 25 years? The purpose of this article is to talk a little about the history and challenges for new generations and the trends of the frameworks that, as Java developers, we will use in our day today. It is vital to understand a little of both the history of computing and software architecture. Let's talk about the relationship between man and machine, an essential point where we can divide it into three stages:

A machine for many people.

One machine for one person.

Many machines for many people.

The abundance of machines also had an impact on software architecture, mainly on the number of physical layers:

A single-tier: Related to the mainframe, where the computer is responsible for both processing and the database, and the interaction is on the computer itself.

Three-tier: The most used model today. It is very similar to the classic MVC architecture, but for physical layers. In this model, we have a server for the database, one for the application and the client accesses everything on another device, be it a computer, cell phone, tablet or smartwatch.

The era of microservices: The low price of computers helped to increase the number of physical layers. It is very common for a single request to handle multiple servers, be it an orchestration with microservices, or with servers using load balancers.

An important point is that the cloud environment, together with automation, accelerated several processes, from the number of physical layers to the number of deploys. For example, there was a time in history when the normal was one deployment per year. With the Agile methodology, the culture of integrating the development sector with that of operations and many other techniques, made this frequency increase to once a month, per day to even instant deploys, including Friday at 6pm.

What's Wrong With Java?

With the evolution of architectures to an environment focused on the cloud, constant deploys and automations such as CI/CD, several requirements have changed and consequently came the various complaints of Java, including:

Startup: Certainly the biggest complaint. Comparing with other languages the startup time is very high;

Cold start: JIT is a wonderful resource that allows several improvements to the code at runtime, however, what about the application that performs constant deploys? JIT is not as critical as a better boot.

Memory: Certainly, the JVM is still one of the few languages that can enjoy a native thread, however, this has a big problem when compared to Green Thread, which is related to memory consumption. This is one of the reasons why we use Thread Pools. However, do the features of the native thread make sense in a reactive programming environment? Another point that requires a lot of memory resources are the JIT (Just in time compiler) and GC (Garbage Collector) that also work in several threads but do we need this resource when we work with serverless? After all, it tends to run only once, and later the resource will be returned to the operating system. As a matter of curiosity, Java is working on the concept of Virtual Thread.

Despite this, Java is still a popular language, especially when it comes to performance and performance. It is common to see technology companies that move out of other languages and migrate to Java-like Spotify, which left Python aside and migrated to Java, precisely to have more performance in their applications. Another important point is in Big Data applications, where most of them are developed in Java as Hadoop, the NoSQL Cassandra database, and the Lucene text index.

Looking more closely at the codes of the corporate applications, on average, 90% of the code of the projects belong to third parties; that is, frameworks make almost all the code of a Java application.

Metadata in the Java World

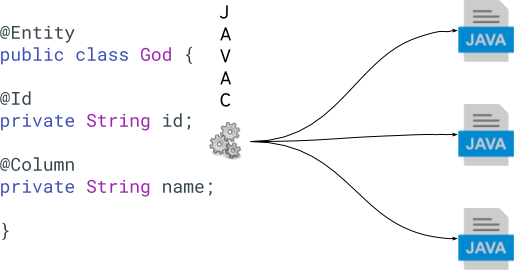

But why do we use so many frameworks? A simple answer would be, the vast majority of these tools facilitate the process in some way, especially in a conversion or a mapper. For example, when converting Java entities to XML files or databases.

The goal is to try to decrease the impedance between the paradigms. For example, reducing the distance from relational databases and Java objects, not to mention the good practices where Java works with camelCase and relational databases work with snake_case. One of the strategies for doing this service is using the creation of metadata. It is these metadata that ensure that we make the relationship between the database with a Java class. For example, it is very common in the first specifications of the Java world to work with this type of approach like the orm.xml performed by JPA.

For example, in creating an entity that represents the gods of Greek mythology, the class would normally look like this:

xxxxxxxxxx

public class God {

private String id;

private String name;

private Integer age;

//getter and setter

}

The next step would be to create an XML file to make the relationship between the Java class and the database mapping statements. This file will be read at run time to generate metadata at the same time.

xxxxxxxxxx

<entity class="entity.God" name="God">

<table name="God"/>

<attributes>

<id name="id"/>

<basic name="name">

<column name="NAME" length="100"/>

</basic>

<basic name="age"/>

</attributes>

</entity>

This metadata is read and generated at run time and it is this data that will make the most dynamic part of the language. However, this data generation would be useless if there is no resource that allows changes to be made at run time. All of this is possible thanks to a computer science resource that gives the ability to process, examine and perform introspection of the types of data structures. We are talking about Reflection.

The Reflection feature, unlike what many Java developers think, is not an exclusive feature within this language, it also exists in the languages GO, C #, PHP and Python. In the Java world, the package for realizing the Reflection APIs has existed since version 1.1. This feature allows the creation of generic tools or frameworks, which increase productivity. However, many people have had problems with this approach.

With the evolution of the language and of the entire Java ecosystem, the developers noticed that making this generated metadata very distant from the code (as it was until then done with XML files) was often not intuitive, not to mention increasing the difficulty in time to perform maintenance, as it was necessary to change it in two places, for example, when it was necessary to update a field, it was necessary to change the Java class and the database.

In order to make this even easier, one of the improvements that took place within Java 5 in mid-2004 was the Metadata facility for Java JSR 175, known affectionately to insiders as Java notations. For example, going back to the previous entity, a second file for the configuration would no longer be needed. All the information would be put together in a single file.

xxxxxxxxxx

public class God {

private String id;

private String name;

private Integer age;

}

Certainly, the combination of reflection and notations has brought several benefits to the world of Java tools over the years. There are several advantages from which we can highlight connectivity, that is since all validation takes place at the time of execution, it is possible to add libraries that will work in the “plug and play” style. After all, who has never been amazed by ServiceLoader and all the "magic" that can be done with it?

Reflection also guarantees a more dynamic side to the language. Thanks to this feature, it is possible to make a very strong encapsulation, such as creating attributes without getter and setter that in execution all this will be solved with the setAccessible method.

xxxxxxxxxx

Class<Good> type = ...;

Object value =...;

Entity annotation = type.getAnnotation(Entity.class);

Constructor<?>[] constructors = type.getConstructors();

T instance = (T) constructors[0].newInstance();

for (Field field : type.getDeclaredFields()) {

field.setAccessible(true);

field.set(instance, value);

}

Even Reflection Has Problems

Como toda decisão e escolha de arquitetura, há impactos negativos. O importante neste caso é que mesmo o uso do Reflection traz alguns problemas para as aplicações que utilizarão esses frameworks.

The first point is that its greatest benefit comes in conjunction with the harms of runtime processing. Thus, the first step that an application needs to do is precisely to execute the metadata information, so that the application will only be functional when the whole process is finished. Imagine a dependency injection container, which, like CDI, will need to scan the classes to check their contexts and dependencies. This time tends to increase a lot with the popularization of the CDI ecosystem thanks to the CDI Extension.

In addition to the time to start an application, thanks to runtime processing, there is also the problem of memory consumption. It is very natural that frameworks, in order to avoid constant access to the reflections APIs, create their own memory structure. However, in addition to this memory consumption when using any resource that needs reflection within a class instance, it will load all information at once into the ReflectionData class as software reference. If you are not familiar with the references in Java, it is important to say that the SoftwareReference will only be eligible for the GC when it does not have enough memory inside the Heap.

That is, a simple getSimpleName will need to load all the information from the class.

xxxxxxxxxx

//java.lang.Class

public String getSimpleName() {

ReflectionData<T> rd = reflectionData();

String simpleName = rd.simpleName;

if (simpleName == null) {

rd.simpleName = simpleName = getSimpleName0();

}

return simpleName;

}

Based on this information, when using the combination of Reflection and notations at run times, we will have both a problem in processing and also when starting an application when we analyze the memory consumption. Each framework has its purpose and, as working with these instances in Java, the CDI searches for beans to define the scopes and the JPA to perform the mapping between Java and the relational database, it is natural that each performs its own processing and has the metadata according to your purpose.

This type of design is not a problem until the present moment, however, as we already mentioned, the way we are making software has been changing quite drastically from programming to deploy. It was very common to deploy in a process window where a system idle period was created, usually during the night, to the point that the “engine heating” process was never a problem. However, with several deployments throughout the day, the horizontal scalability automatically, and other changes in the software development process, made the start-up time for some applications a minimum requirement.

Another point worth mentioning is certainly related to the cloud era.

Currently, the number of services that the cloud provides has grown dramatically. Among them is serverless, since the current approach does not make sense in a process that will be executed only once and then be destroyed. Graeme Rocher talks about these challenges in the world of the Java framework in one of his most famous lectures to introduce Micronaut.

Furthermore, to the problems of delay loading everything into memory and latency at startup concerning Reflection, there is also another problem, the speed and performance of execution compared to native code, in addition to using Reflection to make the solution slower than a generated code. As much as there are several optimizations within the JIT world, working with it makes it even better, but these optimizations are still very timid when compared to native code.

An option to improve the optimizations and guarantee the dynamic validations within the frameworks and the generation of classes that do this type of manipulation at the time of execution, would be to read the information of the classes via Reflection, but instead of manipulating the information through it, the objective would be to create classes at execution time so that they do this task. In other words, during the runtime read the information from the notations and create classes that perform such manipulations.

This type of manipulation is possible thanks to JSR 199, making these manipulation classes give a stronger optimization to JIT.

xxxxxxxxxx

JavaSource<Entity> source = new JavaSource() {

public String getName() {

return fullClassName;

}

public String getJavaSource() {

return source;

}

};

JavaFileObject fileObject = new JavaFileObject(...);

compiler.getStandardFileManager(diagnosticCollector, ...);

However, the big point is that within the process, in addition to reading the class information, the text interpolation to generate a class in addition to the class compilation will be included. That is, memory consumption tends to increase dramatically along with the time and computational power to start an application.

In an analysis comparing direct access, reflection and combined with the compilation API, Geoffrey De Smet mentions in the article that when using the compile approach at runtime the code is about 5% slower when compared to the code native, that is, it is 104% slower than only with reflection. However, there is a very high cost when starting the application.

The Cold Start Solution in Java

As we mentioned in the history of applications within the Java world, Reflection has been used extensively, mainly for its connectivity, however, this has brought the application startup time beyond high memory consumption as a major challenge. A possible solution is that to generate these metadata at runtime, they were made within the scope of compilation. This approach would have some advantages:

Cold-start: The whole process would take place at compilation time, that is, when starting the application, the metadata would already be generated and ready for use.

Memory: The processed data is not necessary, for example to access the classes' ReflectionData information, saving memory.

Speed: As already mentioned, access via Reflection tends not to be as fast as the compiled code, in addition to being subject to JIT optimizations.

![JIT optimization]()

Fortunately, this solution already exists in the Java world thanks to the JSR 269 Pluggable Annotation Processing API. In addition to the advantages we mentioned above, there is also a feature that emerged in Java 9, the JPE 295 Ahead of Time Compilation that among its advantages is the possibility of compiling a class for native code, very interesting for applications that want a startup fast. However, it fails in some points: The plug and play effect, since all validations are made during compilation, a new library will have to be explicitly used. Another point is in the package. Thinking of a database mapper, it is important to leave either the public attribute or the getter and setter visible in some way, even if it does not make sense for the business, as when working with an auto-generated id, since the framework will provide this ID, a setter of this attribute tends to be an encapsulation failure.

("org.soujava.medatadata.api.Entity")

public class EntityProcessor extends AbstractProcessor {

public boolean process(Set<? extends TypeElement> annotations,

RoundEnvironment roundEnv) {

//....

}

}

The Use of Native code

One of the great features of serverless is that it works as a backend as a service, that is, we pay for the execution of the process. Following this line of reasoning, the use of a JVM at many times is not necessary since it has features that do not make sense for a single execution. Among them we can mention the GC and also the JIT. One of the strategies would be to use GraalVM to create native images. This approach makes the JVM not necessary at the time of execution. Based on Andrew S. Tanenbaum's book Structured Computer Organization, we would have the following layers with and without the JVM.

Very useful for a single execution, however, it is important to note that when we have many executions, there are cases where the performance can be superior with the JVM instead of using only the native code.

Conclusion

The number of frameworks in the Java world tends to increase, especially when we take into account the diverse requirements and styles that applications tend to have, either focusing on a could-start, or focusing on connectivity. The direction that the architectures will take is still uncertain, whether in microservices, macroservices, or simply, the return to the monolith. However, it is very likely that there will be several options even more in an era where the adoption of the cloud is getting stronger, not to mention the growth and styles of services existing in this path. And it will be up to institutions like the Eclipse Foundation with Jakarta EE to understand all these paths and work on the specifications that will support these styles.

Source sample: https://github.com/soujava/annotation-processor

Opinions expressed by DZone contributors are their own.

Comments