Oracle vs. Snowflake

Take a look at Oracle vs. Snowflake from someone who has worked for both.

Join the DZone community and get the full member experience.

Join For Free Source: Pexels.com

Source: Pexels.com

Why I Abandoned Oracle After 30 years

I first started working with Oracle in 1987 on Oracle Version 5. That was not only before PL/SQL and row-level locking, but The Cloud and even The Internet itself had yet to be invented.

Back then, Oracle was an amazing product. The competition was hierarchical and network databases or Index Sequential (ISAM) files on big iron IBM mainframes. Using Oracle on a DEC VAX was like going from a model T-Ford to a Porsche 911 at half the cost.

Fast forward 30 years, and Oracle is barely clinging to the top spot on the database ranking table, at a mere 5% ahead of the MySQL database, while MongoDB and PostgreSQL both grew by over 60% in the past year.

Maybe it was because of the aggressive sales tactics or because of a selfish and arrogant attitude where customers are always put last.

The massive growth in popularity of Hadoop and open source alternatives including MySQL and PostgreSQL have been eating into the Oracle market, and niche database alternatives from NoSQL vendors including MongoDB, Elasticsearch, and Redis have become increasingly popular as businesses deal with web-scale data volumes and need millisecond performance.

Finally, as I have indicated before, Oracle is no longer a really sought-after skill. It doesn’t even make it in the top 10 most sought-after database skills in 2019, as illustrated below.

But Enterprise-grade products like Oracle don’t just fall out of fashion. They either fail to innovate or like the dinosaurs, they die because they refuse to accept, let alone adapt to a rapidly changing world. One in which The Cloud is already dominating the IT landscape.

Where Did It All Go Wrong?

As early as 2005, the Turing award winner, professor Michael Stonebraker of MIT, was predicting the demise of the dominance of Oracle, Microsoft, and IBM in his seminal paper “One Size Fits All” – An idea who’s time has come and Gone. In this paper, he accurately predicted that the database market would fragment and that the data warehouse market would be dominated by new players with the emergence of column-oriented database solutions.

Three years later, he published OLTP through the looking glass, which demonstrated the H-Store database, a stripped back open source database with a pure OLTP focus. This was an astonishing achievement, as the TCP-C world record was around 1,000 transactions per second per CPU core, and yet he managed over 35,000 on an Intel 2.8GHz desktop.

In total, the prototype delivered an incredible 70,000 transactions per second, and he went on to build a highly successful commercial venture VoltDB, which recent benchmarks demonstrate single-digit millisecond latency while maintaining a serializable isolation level and complete transactional consistency that is simply not possible on Oracle.

“The architecture of most DBMSs is essentially identical to that of System R”. – Dr Michael Stonebraker. MIT.

The underlying reason the team at MIT was able to achieve such an astonishing result is illustrated in the chart below and highlights the fact that the Oracle architecture is based on a design conceived in the 1970s for the “System R” database.

In summary, they found that every commercial database product (ie. Oracle, SQL Server, and DB2), spent around 93% of the time on overhead tasks and just 7% doing any useful work. They discovered the database spent the majority of the time coordinating the in-memory buffer cache, writing to log files, and in-memory latching and locking.

Essentially, the architecture was formulated at a time when memory was about 13 million times more expensive than today, and the entire architecture was based around the careful management of a historically expensive resource.

Even the release of Oracle in Memory, which I personally demonstrated a 27 times performance improvement, is still a sticking plaster on the problem, and I was unable to deploy this for a customer because of the massive license cost per CPU core.

Data Warehouse Alternative?

Firstly, any modern data warehouse needs to be cloud-based to gain the huge benefits of agility, scalability, elasticity, and end-to-end security. Snowflake stands out as the only data warehouse designed specifically for the cloud. So how does it compare to Oracle on-premise?

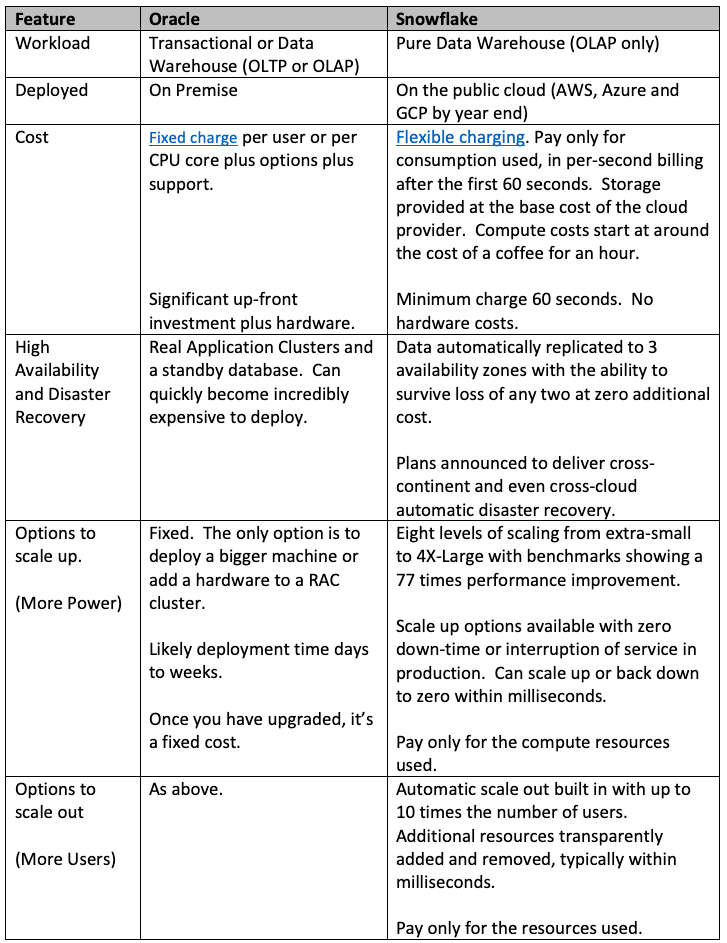

Oracle vs. Snowflake: Primary Differences

Technical Differences

Overall Differences

In discussing the differences between Snowflake and Oracle, rather than criticizing Oracle, let’s consider what you don’t need with Snowflake:

- Installation: There is no hardware to install and no operating system or database software to deploy. There are no patches to apply or database upgrades to schedule. In fact, switching to Snowflake may well be the last upgrade you ever need to do, and you can run with zero downtime.

- Be Locked In: Snowflake currently runs on Amazon AWS and Microsoft Azure platform and was recently announced on the Google Cloud Platform.

- Database Management: There are no indexes to manage, no physical data partitioning needed, no statistics to capture or fear of a query performance cliff-edge if they are not correctly captured. In fact, Snowflake demonstrates near-zero database administration effort.

- Tug of War: Snowflake has abolished the tug of war for machine resources using workload isolation. Instead of a single, massive multi-core database server, you can deploy an unlimited number of completely independent virtual warehouses. This means you can be loading terabytes of data on one warehouse while transforming the data on another, and analyzing the results on yet another.

- Disk Upgrades: Never run out of disk space ever again. Snowflake supports literally unlimited data volumes on both Amazon AWS, Microsoft Azure and soon Google Cloud Platform. On Snowflake, some customers hold as much as a petabyte in a single table.

- Data Compression: There is no need to pay the license cost of the OLTP option or carefully load data to maximize data compression using insert append on Oracle. With Snowflake, all your data is automatically compressed using columnar compression, often to a factor of between 3 and 6 times.

- Migrate to a Bigger Server: As the chart below illustrates, Snowflake is incrementally scalable, with a simple set of T-Shirt sizes and can be increased from an extra-small to a 4X-Large server within milliseconds. The chart demonstrates the reduction in an elapsed time of a 256Gb table join as the server size is increased.

- Deploy for High Availability: There is no requirement to deploy an expensive hot-standby data center, with data replication and fail-over for high availability. Snowflake transparently writes data to three Availability Zones within a region and can automatically survive the loss of any two. Indeed, they recently announced the launch of cross-region and cross-cloud replication and automatic fail-over. This means a customer in the US West Coast will be able to provide an automatic fail-over to the East Coast, and even a fail-over from Amazon AWS to Microsoft Azure or Google Cloud.

- Spend hours producing backups: Snowflake provides up to 90 days of time travel, including the ability to undrop a table, schema, or even an entire database within seconds. This means you can query the data as it was up to 90 days ago, and with zero copy clones, take a transactionally consistent backup of a terabyte of data within five seconds, with recovery equally fast.

- Worry about Security: Snowflake includes end-to-end encryption with automatic key rotation, Multi-Factor Authentication, and even the option of dedicated cloud hardware with the Virtual Private Snowflake option.

Impact of Cloud Computing

"What the Hell is Cloud Computing?...I’ve no idea what everyone’s talking about." Larry Ellison. Oracle CEO.

Of course, despite the protests of Larry Ellison back in 2008 that cloud computing was “complete gibberish”, it now seems Oracle has completely embraced a future in the cloud, which is just as well, since according to a RightScale survey, cloud services are currently witnessing growth rates of up to 50% per year.

However, as the above chart illustrates, Oracle is way behind in the race to the cloud. It shows Enterprise survey respondents cloud plans, with 83-86% using or planning to use AWS and/or Microsoft Azure with Oracle in fifth place at just 37%.

In my next article, I’ll review the state of Oracle cloud services.

Conclusion

In conclusion, while the rest of the world was looking towards Hadoop to resolve the challenges of “Big Data”, Snowflake quietly redefined what’s possible. The solution can be deployed within minutes, with nothing more complex than a credit card and an extra-small server running for an hour costs less than a cup of coffee. As a platform, it’s remarkably flexible and will scale up performance to execute massive terabyte-sized queries, reducing the elapsed time from 5.5 hours down to just two minutes. Equally, as the UK-based food delivery service Deliveroo found, it can automatically scale out to cope with a huge number of concurrent users and then silently scale back when not needed.

Unlike Oracle, it is incredibly simple to manage with no statistics to capture, no indexes to manage, and data partitioning, compression, and encryption, which are automatic and completely transparent.

If you’d like to try Snowflake for yourself, you can start a free trial with $400 of credit. There’s no hardware to configure or software to install, and the online documentation is equally easy to follow with lots of getting-started videos.

Disclaimer: The opinions expressed in my articles are my own, and will not necessarily reflect those of my employer (past or present).

Note: This article was first published on my personal blog site as: Oracle vs Snowflake — Why I abandoned Oracle after 30 Years.

Published at DZone with permission of John Ryan, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments