Optimizing Serverless Functions Through AI Integration

Serverless computing enhances scalability and cost efficiency; AI optimizes resources, improving performance and security in serverless environments.

Overview of Serverless Computing: Benefits and Challenges

- Event-driven architecture: Serverless computing is typically built on an event-driven architecture, in which functions or applications are triggered by specific events or requests.

- Automatic scalability: Serverless platforms automatically scale resources up or down based on demand, ensuring optimal performance without the need for manual intervention.

- Pay-per-use model: Users are charged based on the actual resources consumed and the execution time of functions, leading to cost efficiency.
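The pay-per-use bullet above can be made concrete with a little arithmetic. The following is a minimal sketch, assuming a billing model in which compute is charged per GB-second plus a flat fee per request; the function names and all prices are placeholders for illustration, not any provider's actual rates.

```python
def invocation_cost(memory_mb, duration_ms, price_per_gb_second, price_per_request):
    """Estimate the cost of a single invocation under pay-per-use billing."""
    # Compute is billed as allocated memory (GB) multiplied by run time (seconds).
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * price_per_gb_second + price_per_request


def monthly_cost(invocations, memory_mb, avg_duration_ms,
                 price_per_gb_second, price_per_request):
    """Scale the per-invocation estimate to a month of traffic."""
    per_call = invocation_cost(memory_mb, avg_duration_ms,
                               price_per_gb_second, price_per_request)
    return invocations * per_call
```

Because the bill scales with both memory and duration, halving either one roughly halves the compute portion of the cost, which is why the tuning discussed later in the article matters.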

Benefits

- Cost-effective: Serverless computing removes the need to provision and maintain servers, lowering infrastructure costs and allowing users to pay only for the resources they use.

- Increased productivity: Developers can focus on writing code and building applications without worrying about server management, allowing for faster development cycles.

- Reduced complexity: Serverless architectures abstract away the underlying infrastructure, making it easier to deploy and manage applications.

- Automatic maintenance: Cloud providers take care of system maintenance, updates, and security patches, reducing the burden on IT teams.

Challenges

- Cold start delays: Serverless functions may experience a delay on initial invocation (a cold start) because of the time needed to initialize resources.

- Vendor lock-in: Adopting serverless solutions from a particular cloud provider can result in vendor lock-in, potentially limiting flexibility in the future.

- Limited execution time: Serverless functions typically have execution time limits imposed by cloud providers, which may constrain certain workloads.

- Monitoring and debugging: Monitoring and debugging serverless applications can be harder than with traditional server-based architectures.

- Security concerns: Serverless environments may introduce security risks such as data leaks, unauthorized access, and misconfigurations that need to be carefully managed.

Importance of Optimizing Serverless Functions

Here are some key reasons for optimizing serverless functions:

- Cost efficiency: Optimizing serverless functions can help reduce costs by minimizing resource usage and improving runtime performance. By ensuring that functions are efficient and well-tuned, users can avoid unnecessary charges for unused resources.

- Improved performance: Optimized serverless functions lead to faster execution times, lower latency, and improved responsiveness, enhancing the overall performance of applications. Efficient code and proper resource allocation help achieve the best response times for end users.

- Enhanced user experience: Optimized serverless functions contribute to a better user experience by delivering faster response times, lower latency, and improved reliability. A well-optimized serverless architecture improves user satisfaction and engagement with the application.

Role of AI in Enhancing Serverless Architecture

AI plays a significant role in enhancing serverless architecture by enabling intelligent automation, optimizing resource allocation, improving scalability, and raising the overall performance of serverless applications. Here are some key ways AI enhances serverless architecture:

- Fault detection and recovery: AI can proactively detect potential issues, anomalies, or performance bottlenecks within a serverless architecture. By leveraging AI-based monitoring and predictive analytics, serverless systems can quickly detect failures, initiate recovery procedures, and maintain high availability of applications.

- Optimized function placement: AI-driven serverless platforms can intelligently determine where to place functions based on factors such as proximity to data sources, network latency, and resource availability. This optimization improves overall performance and reduces latency for end users.

- Enhanced security: AI can bolster security in serverless architectures by detecting and mitigating potential threats, identifying vulnerabilities, and enforcing proactive security measures. AI-powered security features help protect serverless applications from cyber threats and keep data private.
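As an illustration of the fault detection bullet above, the sketch below flags latency samples that deviate sharply from recent history. It uses a rolling z-score, a simple statistical stand-in for the AI-based monitoring the article describes; the function name, window size, and threshold are assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_anomalies(latencies_ms, window=5, threshold=3.0):
    """Return indices of samples far outside the trailing window's range."""
    anomalies = []
    for i in range(window, len(latencies_ms)):
        history = latencies_ms[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if latencies_ms[i] != mu:
                anomalies.append(i)
            continue
        # z-score: distance from the recent mean in standard deviations.
        if abs(latencies_ms[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

A production system would feed the flagged indices into an alerting or recovery workflow; here the function only reports them.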

The Need for AI in Serverless Environments

Without AI, there are several challenges related to dynamic resource allocation in serverless environments:

Static Resource Provisioning

In the absence of AI, serverless systems may depend on static resource provisioning, in which resources are allocated based on predefined thresholds or rules. This approach can cause underutilization or over-provisioning of resources, resulting in inefficiency and increased costs.

Manual Scaling

Without AI-driven automation, scaling resources in response to fluctuating workloads relies on manual intervention. This manual process can be time-consuming and error-prone, and it may not respond quickly enough to unexpected spikes in demand, hurting the application's overall performance.

Lack of Real-Time Optimization

In the absence of AI, dynamic resource allocation in serverless environments may be unable to optimize resources in real time based on changing workload characteristics. This limitation can result in underperforming applications or wasted resources during periods of low demand.

Performance Bottlenecks

In the absence of AI-driven optimization, serverless environments may experience performance bottlenecks because of suboptimal resource allocation. This can lead to latency problems and reduced responsiveness, and it negatively affects users.

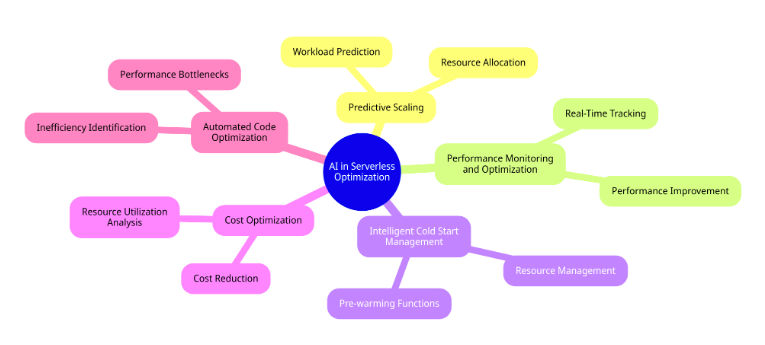

Core Areas of AI Integration

Here are a few core areas in which AI integration can play a key role in optimizing serverless functions:

Predictive Scaling

Use AI algorithms to predict workload patterns and automatically scale serverless functions in anticipation of demand spikes. By analyzing historical data and real-time metrics, AI can optimize resource allocation to ensure optimal performance during peak loads.
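The idea of forecasting demand and scaling ahead of it can be sketched as follows. This is a minimal illustration, assuming simple exponential smoothing as the forecaster (a much simpler model than the AI algorithms discussed here) and Little's law to convert a request rate into required concurrency; the function names and the `headroom` parameter are hypothetical.

```python
import math


def forecast_next(requests_per_interval, alpha=0.5):
    """One-step-ahead forecast using simple exponential smoothing."""
    if not requests_per_interval:
        raise ValueError("need at least one observation")
    level = requests_per_interval[0]
    for observed in requests_per_interval[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observed + (1 - alpha) * level
    return level


def instances_needed(requests_per_second, avg_duration_s, headroom=1.2):
    """Little's law: concurrency = arrival rate x service time, plus headroom."""
    return math.ceil(requests_per_second * avg_duration_s * headroom)
```

A scheduler could call `forecast_next` on recent traffic, pass the result to `instances_needed`, and provision that many warm instances before the next interval begins.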

Performance Monitoring and Optimization

AI-powered monitoring tools can track the performance of serverless functions in real time, identifying areas for optimization and improvement. By analyzing performance metrics and code execution, AI can recommend optimizations to improve the efficiency and responsiveness of serverless functions.
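Before any model can recommend optimizations, the monitoring layer has to reduce raw measurements to metrics. The sketch below shows one plausible first step, assuming latency samples are already collected per function: it computes a nearest-rank percentile and lists the functions whose 95th-percentile latency exceeds a budget. The function names and the budget are illustrative assumptions.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def slow_functions(samples_by_function, p95_budget_ms):
    """Names of functions whose p95 latency exceeds the budget, sorted."""
    return sorted(
        name
        for name, samples in samples_by_function.items()
        if percentile(samples, 95) > p95_budget_ms
    )
```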

Intelligent Cold Start Management

AI can predict and manage cold starts by pre-warming functions based on usage patterns and anticipated demand. By proactively addressing cold start delays through intelligent scheduling and resource management, AI integration can improve the overall user experience.
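One simple way to turn usage patterns into a pre-warming schedule is sketched below. It assumes a historical profile of average invocations for each hour of the day and selects the hours where traffic ramps up after a quiet hour, since those are the moments a function is most likely to be cold. The function name and threshold are hypothetical, and a learned model would replace this fixed rule in an AI-driven setup.

```python
def prewarm_hours(avg_invocations_by_hour, busy_threshold=10):
    """Hours (0-23) where traffic historically picks up after a quiet hour."""
    if len(avg_invocations_by_hour) != 24:
        raise ValueError("expected one value per hour of the day")
    hours = []
    for hour in range(24):
        # Index -1 wraps around, so hour 0 is compared with hour 23.
        previous = avg_invocations_by_hour[hour - 1]
        current = avg_invocations_by_hour[hour]
        if previous < busy_threshold <= current:
            hours.append(hour)
    return hours
```

The returned hours could drive a scheduled warm-up invocation shortly before each ramp.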

Cost Optimization

AI algorithms can analyze resource usage, workload patterns, and cost metrics to optimize resource allocation and minimize expenses. By intelligently managing resources and scaling functions based on cost-performance metrics, AI integration can help organizations reduce their cloud computing costs.
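A cost-performance trade-off of this kind can be illustrated with a small search over memory settings. The sketch assumes you have already measured the average duration of a function at several memory sizes and that compute is billed per GB-second; the function name, the measurements, and the price are placeholders.

```python
def cheapest_memory_setting(measured_duration_ms, price_per_gb_second, max_duration_ms):
    """Pick the memory size with the lowest cost that meets the latency target.

    measured_duration_ms maps memory size (MB) to average duration (ms).
    Returns (memory_mb, cost_per_invocation), or None if nothing qualifies.
    """
    best = None
    for memory_mb, duration_ms in measured_duration_ms.items():
        if duration_ms > max_duration_ms:
            continue  # too slow for the latency target
        cost = (memory_mb / 1024) * (duration_ms / 1000) * price_per_gb_second
        if best is None or cost < best[1]:
            best = (memory_mb, cost)
    return best
```

More memory often shortens execution, so the cheapest setting is not always the smallest one; the search makes that trade-off explicit.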

Automated Code Optimization

AI tools can analyze serverless function code to identify inefficiencies, performance bottlenecks, and areas for optimization.

Techniques and Tools

Overview of AI Models and Algorithms Used for Serverless Optimization

- Neural networks: They can predict and manage the scaling behavior of serverless functions based on incoming request patterns. By forecasting scheduling needs, neural networks allow resources to be scaled ahead of demand, thus reducing latency.

- Regression models: They are fitted to historical measurements of how long different functions take to execute, and they help estimate when resources should be provisioned and how much is required.
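To illustrate the regression bullet above, here is a minimal sketch of ordinary least squares with a single feature. It assumes, purely for the example, that execution time depends linearly on payload size; the function names and the choice of feature are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx  # requires at least two distinct x values
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_duration_ms(payload_kb, slope, intercept):
    """Predicted execution time for a payload of the given size."""
    return slope * payload_kb + intercept
```

The predicted duration can then feed the provisioning decision, for example by multiplying it by the expected request rate to estimate required concurrency.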

Decision trees and ensemble methods can also be applied to real-time data to decide whether to keep a Lambda function alive or deactivate it, given the current volume of incoming requests.

Tools and Platforms That Support AI Integration With Serverless Functions

AWS Lambda With AI Layer

AWS Lambda lets you add AI capabilities directly to serverless applications through Lambda layers. A layer can package pre-built machine learning models and their libraries, provided they fit within Lambda's deployment size limits, and the Lambda function can then load and execute them. Examples include deploying TensorFlow or PyTorch models as a layer to perform image recognition or real-time forecasting directly inside the Lambda function.

Azure Machine Learning

Azure Machine Learning enables AI-driven predictions and processing in Azure’s serverless compute options. Developers can deploy machine learning models alongside Azure Functions, making real-time data analytics and decision-making possible. The hosted models can be called from Azure Functions at scale, on demand. For example, a function can call a model in Azure Machine Learning for fraud detection or demand forecasting.

Google AI Platform

Google AI Platform can be linked with Google Cloud Functions to enhance serverless applications with AI capabilities. This setup allows developers to deploy machine learning models that interact with serverless functions, enabling scalable and efficient AI processing. A typical use case is deploying a model on AI Platform to analyze incoming data and using Cloud Functions to handle the data flow and the response actions based on the model's output.

Challenges and Considerations

Data Privacy and Security Concerns With AI in Serverless Environments

Serverless environments raise serious data privacy and security issues when AI is involved. Many AI models rely on data that is spread across different geographies and hosted in many locations. It is the business's responsibility to put robust security measures in place, even though the decentralized and ephemeral nature of serverless computing makes it difficult to establish a strong security perimeter. Furthermore, compliance with relevant data protection laws and regulations such as the GDPR and HIPAA should be a primary concern. Compliance helps the business avoid legal exposure and builds user trust, because it assures users that their private data is handled responsibly and securely throughout the lifecycle of the AI implementation.

The Complexity of Integrating AI With Existing Serverless Architectures

Integrating AI into an existing serverless architecture is challenging. The technical complexity arises from the difficulty of embedding advanced AI models in lightweight, ephemeral serverless functions built for simple, stateless operations. Such integration generally requires substantial architectural changes to handle the compute and memory requirements of AI workloads, which often exceed what serverless environments designed for small-footprint tasks can provide.

Another complexity is dependency management. Serverless platforms typically keep dependencies isolated and minimal to speed up startup and reduce resource usage. Integrating AI, however, means ensuring that the AI libraries in use are compatible with the serverless platform. This calls for a disciplined process for managing library versions and dependencies, and the libraries deployed to the cloud need to be optimized for the runtime, so that no errors surface at run time.
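One lightweight safeguard for the dependency problem described above is to verify pinned versions when the function starts. The sketch below uses the standard library's `importlib.metadata` to compare installed versions against a pin list; the function name, the package names, and the idea of running the check at startup are assumptions made for illustration.

```python
from importlib import metadata


def version_mismatches(pinned_versions, lookup=metadata.version):
    """Compare installed package versions against the expected pins.

    Returns {package: (expected, actual)} for every mismatch; actual is
    None when the package is not installed at all.
    """
    problems = {}
    for package, expected in pinned_versions.items():
        try:
            actual = lookup(package)
        except metadata.PackageNotFoundError:
            actual = None
        if actual != expected:
            problems[package] = (expected, actual)
    return problems
```

The `lookup` parameter exists only so the check can be exercised without installing anything; in normal use the default `metadata.version` reads the packages actually present in the deployment.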

Conclusion

In conclusion, the discussion above underscores the transformative potential of merging AI with serverless computing. This integration is not just a passing trend but a significant shift toward more efficient and adaptive cloud services. While the path is strewn with challenges, particularly in terms of complexity and security, continued advances in AI capabilities are set to change how serverless applications are deployed and managed.

Opinions expressed by DZone contributors are their own.
