Open Source Datasets for Computer Vision

In this article, we discuss some of the most popular and effective datasets used in the domain of Deep Learning (DL) to train state-of-the-art ML systems for CV tasks.

Join the DZone community and get the full member experience.

Join For FreeComputer Vision Models Trained With Open Source Datasets

Computer Vision (CV) is one of the most exciting subfields within the Artificial Intelligence (AI) and Machine Learning (ML) domain. It is a major component for many modern AI/ML pipelines and It is transforming almost every industry, enabling organizations to revolutionize the way machines and business systems work.

Academically, CV has been a well-established area of computer science for many decades, and over the years, a lot of research work has gone into this field to make it better. However, the use of deep neural networks has recently revolutionized the field and given it new fuel for accelerated growth.

There is a diverse array of application areas for computer vision such as:

- Autonomous driving.

- Medical imaging analysis and diagnostics.

- Scene detection and understanding.

- Automatic image caption generation.

- Photo/face tagging on social media.

- Home security.

- Defect identification in manufacturing industries and quality control.

In this article, we discuss some of the most popular and effective datasets used in the domain of Deep Learning (DL) to train state-of-the-art ML systems for CV tasks.

Choose the Right Open-Source Datasets Carefully

Training machines on image and video files is a serious data-intensive operation. A singular image file is a multi-dimensional, multi-megabytes digital entity containing only a tiny fraction of 'insight' in the context of the whole 'intelligent image analysis' task.

In contrast, a similar-sized retail sales data table can lend much more insight into the ML algorithm with the same expenditure on computational hardware. This fact is worth remembering while talking about the scale of data and computing required for modern CV pipelines.

Consequently, in almost all cases, hundreds (or even thousands) of images are not enough to train a high-quality ML model for CV tasks. Almost all modern CV systems use complex DL model architectures and they will remain under-fitted if not supplied with a sufficient number of carefully selected training examples, i.e., labeled images. Therefore, it is becoming a highly common trend that robust, generalizable, production-quality DL systems often require millions of carefully chosen images to train on.

Also, for video analytics, the task of choosing and compiling a training dataset can be more complicated given the dynamic nature of the video files or frames obtained from a multitude of video streams.

Here, we list some of the most popular ones (consisting of both static images and video clips).

Popular Open Source Datasets for Computer Vision Models

Not all datasets are equally suitable for all kinds of CV tasks. Common CV tasks include:

- Image classification.

- Object detection.

- Object segmentation.

- Multi-object annotation.

- Image captioning.

- Human pose estimation.

- Video frame analytics.

We show a list of popular, open-source datasets which cover most of these categories.

ImageNet (Most Well-Known)

ImageNet is an ongoing research effort to provide researchers around the world with an easily accessible image database. It is, perhaps, the most well-known image dataset out there and is quoted as the gold standard by researchers and learners alike.

This project was inspired by an ever-growing sentiment in the image and vision research field – the need for more data. It is organized according to the WordNet hierarchy. Each meaningful concept in WordNet, possibly described by multiple words or word phrases, is called a "synonym set" or "synset". There are more than 100,000 synsets in WordNet. Similarly, ImageNet aims to provide on average 1000 images to illustrate each synset.

The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is a global annual competition that evaluates algorithms (submitted by teams from university or corporate research groups) for object detection and image classification at a large scale. One high-level motivation is to allow researchers to compare progress in detection across a wider variety of objects — taking advantage of the quite expensive labeling effort. Another motivation is to measure the progress of computer vision for large-scale image indexing for retrieval and annotation. This is one of the most talked-about annual competitions in the entire field of machine learning.

CIFAR-10 (For Beginners)

This is a collection of images that are commonly used to train machine learning and computer vision algorithms by beginners in the field. It is also one of the most popular datasets for machine learning research for quick comparison of algorithms as it captures the weakness and strength of a particular architecture without placing an unreasonable computational burden on the training and hyperparameter tuning process.

It contains 60,000, 32×32 color images in 10 different classes. The classes represent airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

MegaFace and LFW (Face Recognition)

Labeled Faces in the Wild (LFW) is a database of face photographs designed for studying the problem of unconstrained face recognition. It contains 13,233 images of 5,749 people, scraped and detected from the web. As an additional challenge, ML researchers can use pictures for 1,680 people who have two or more distinct photos in the dataset. Consequently, it is a public benchmark for face verification, also known as pair matching (requiring at least two images of the same person).

MegaFace is a large-scale open-source face recognition training dataset that serves as one of the most important benchmarks for commercial face recognition problems. It includes 4,753,320 faces of 672,057 identities and is highly suitable for large DL architecture training. All images are obtained from Flickr (Yahoo's dataset) and licensed under Creative Commons.

IMDB-Wiki (Gender and Age Identification)

It is one of the largest and open-sourced datasets of face images with gender and age labels for training. In total, there are 523,051 face images in this dataset where 460,723 face images are obtained from 20,284 celebrities from IMDB and 62,328 from Wikipedia.

MS Coco (Object Detection and Segmentation)

COCO or Common Objects in COntext is large-scale object detection, segmentation, and captioning dataset. The dataset contains photos of 91 object types which is easily recognizable and has a total of 2.5 million labeled instances in 328k images. Furthermore, it provides resources for more complex CV tasks such as multi-object labeling, segmentation mask annotations, image captioning, and key-point detection. It is well-supported by an intuitive API that assists in loading, parsing, and visualizing annotations in COCO. The API supports multiple annotation formats.

MPII Human Pose (Pose Estimation)

This dataset is used for the evaluation of articulated human pose estimation. It includes around 25K images containing over 40K people with annotated body joints. Here, each image is extracted from a YouTube video and provided with preceding and following un-annotated frames. Overall the dataset covers 410 human activities and each image is provided with an activity label.

Flickr-30k (Image Captioning)

It is an image caption corpus consisting of 158,915 crowd-sourced captions describing 31,783 images. This is an extension of the previous Flickr 8k Dataset. The new images and captions focus on people involved in everyday activities and events.

20BN-SOMETHING-SOMETHING (Video Clips of Human Action)

This dataset is a large collection of densely-labeled video clips that show humans performing pre-defined basic actions with everyday objects. It was created by a large number of crowd workers which allows ML models to develop a fine-grained understanding of basic actions that occur in the physical world.

Here is a subset of common human activities that are captured in this dataset,

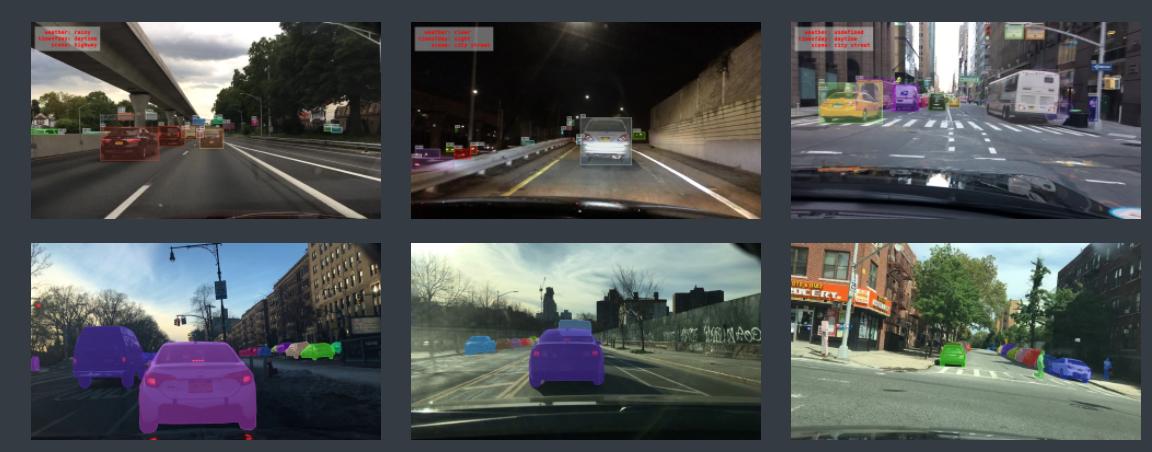

Barkley DeepDrive (For Autonomous Vehicle Training)

The Berkeley DeepDrive dataset by UC Berkeley comprises over 100K video sequences with diverse kinds of annotations including object bounding boxes, drivable areas, image-level tagging, lane markings, and full-frame instance segmentation. Furthermore, the dataset features wide diversity in representing various geographic, environmental, and weather conditions.

This is highly useful for training robust models for autonomous vehicles so that they are less likely to be surprised by ever-changing road and driving conditions.

The Right Hardware and Benchmarking for These Datasets

Needless to say, having only these datasets is not enough to build a high-quality ML system or business solution. A mix of the right choice of dataset, training hardware, and clever tuning and benchmarking strategy is required to obtain the optimal solution for any academic or business problem.

That's why high-performance GPUs are almost always paired with these datasets to deliver the desired performance.

GPUs were developed (primarily catering to the video gaming industry) to handle a massive degree of parallel computations using thousands of tiny computing cores. They also feature large memory bandwidth to deal with the rapid dataflow (processing unit to cache to the slower main memory and back), needed for these computations when the neural network is training through hundreds of epochs. This makes them the ideal commodity hardware to deal with the computation load of computer vision tasks.

However, there are many choices for GPUs on the market and that can certainly overwhelm the average user. There are some good benchmarking strategies that have been published over the years to guide a prospective buyer in this regard. A good benchmarking exercise must consider multiple varieties of (a) deep neural network (DNN) architecture, (b) GPU, and (c) widely used datasets (like the ones we discussed in the previous section).

For example, this excellent article here considers the following,

- Architecture: ResNet-152, ResNet-101, ResNet-50, and ResNet-18.

- GPUs: EVGA (non-blower) RTX 2080 ti, GIGABYTE (blower) RTX 2080 ti, and NVIDIA TITAN RTX.

- Datasets: ImageNet, CIFAR-100, and CIFAR-10.

Also, multiple dimensions of performance must be considered for a good benchmark.

Performance Dimensions to Consider

There are three primary indices:

- SECOND-BATCH-TIME: Time to finish the second training batch. This number measures the performance before the GPU has run long enough to heat up. Effectively, no thermal throttling.

- AVERAGE-BATCH-TIME: Average batch time after 1 epoch in ImageNet or 15 epochs in CIFAR. This measure takes into account thermal throttling.

- SIMULTANEOUS-AVERAGE-BATCH-TIME: Average batch time after 1 epoch in ImageNet or 15 epochs in CIFAR with all GPUs running simultaneously. This measures the effect of thermal throttling in the system due to the combined heat given off by all GPUs.

Which Open-Source Datasets are Best for Your Computer Vision Models?

In this article, we discussed the need for having access to high-quality, noise-free, large-scale datasets for training complex DNN models which are gradually becoming ubiquitous in computer vision applications.

We gave examples of multiple open-source datasets that are widely used for diverse types of CV tasks — image classification, pose estimation, image captioning, autonomous driving, object segmentation, etc.

Finally, we also touched upon the need of pairing these datasets with proper hardware and benchmarking strategies to ensure their optimal use in commercial and R&D space.

Published at DZone with permission of Kevin Vu. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments