Not Only Cars: The Six Levels of Autonomous Testing

What do autonomous cars have in common with autonomous testing? How close are we to completely automated tests? Read on for the answers.

There is something similar about driving and testing. While testing is an exercise in creativity, parts of it are boring, just like driving. Regression testing is tedious: you run the same tests over and over again, every time a release is created, just like your daily commute. And just as on your daily commute, doing something repetitively is a recipe for mistakes. Repetitive testing, like driving, is a dangerous activity, as the crash sites strewn along our commuter highways attest. So do the bugs that slipped past us during our regression testing.

Which is why we automate testing. We write code that runs our tests, and we run it whenever we want. But even that gets repetitive. Another day, another form field to check. Another form, another page. One gets the feeling that writing those tests is a repetitive process, and that it, too, can be automated.

Can AI automate this process? Can we make some or all of our testing, including the writing of the tests, autonomous? Can AI do to writing tests what it is doing to driving?

Autonomous Driving

On May 1, 2012, in Las Vegas, Google passed Nevada’s self-driving test. This can be thought of as the opening shot in an all-out war for domination over what appears to be the future of automobiles, a war that includes high-tech giants such as Google, Apple, and Intel, but also automotive giants like Ford, Mercedes, and Volvo.

Credit: Google.

What’s the big deal? Why is everybody rushing to be a player in this market, and why are self-driving cars perceived to be the future of cars? Because everybody “knows” that, in the long term, nobody is going to be driving their own car. Because, as we said, driving is boring. Everybody would rather teleport to the office than spend an hour driving there. And if teleporting is not an option (yet!), then a driver who drives you there is almost as good. While they drive, you can get work done, catch up with friends, or take a nap. And if the boredom is taken out of the equation, so are the accidents that come with it.

Because of consumer appeal, the potential to save billions in insurance money, and the possibility that self-driving cars will solve today’s traffic jams, car manufacturers and high-tech companies are scrambling to implement this technology.

But what exactly defines a self-driving car? Are all autonomous cars alike? How is Tesla’s driver assistance similar to Volkswagen’s automatic braking system? And how are both related to Google’s cute self-driving car that doesn’t even have a steering wheel? To answer these questions, SAE International published a classification system for autonomous cars in 2014. The classification was adopted by the National Highway Traffic Safety Administration (NHTSA) and has become the standard by which autonomous cars are classified.

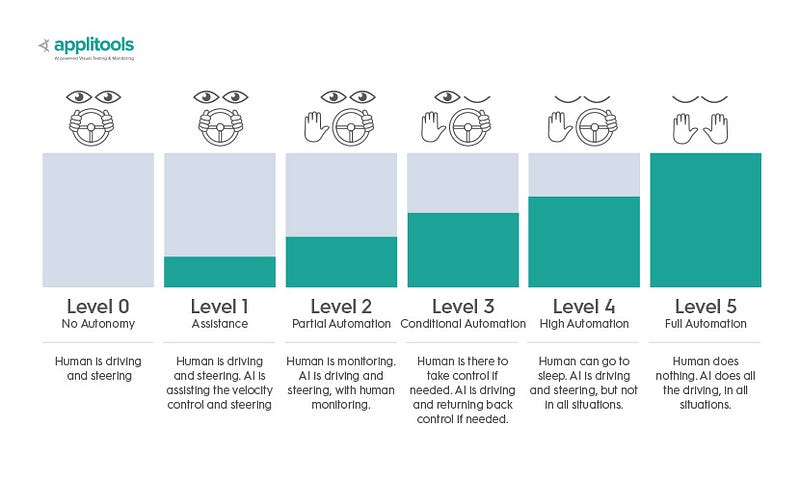

Levels of Driving Automation

Credit: Applitools.

The classification defines six levels of driving automation, and a car is assigned a level depending on the amount of autonomy it exhibits.

The first level of driving automation is Level 0: no automation whatsoever. Congratulations! You are already the proud owner of a Level 0 autonomous car. Level 1 is where some cars on the road are today. It is named “Driver Assistance”, and it covers features such as adaptive cruise control and automatic braking systems. In this mode, the human is still in control; the AI only assists while the human drives.

Level 2 is “Partial Automation.” This is the level where Tesla’s Autopilot and GM’s Super Cruise reside. At this level, the human is still in charge, but the AI takes care of the boring details of acceleration, deceleration, and steering, on the assumption that the human will take over at the first sign of trouble. We’re talking mostly about highway driving here, not city driving.

Level 3 is “Conditional Automation.” This is the first level where the car drives itself, but only under certain conditions, and always on the assumption that the human can take over when the AI signals that it cannot handle a situation.

Level 4 is “High Automation.” At this level, the AI takes over all driving and does not assume that a human can take over. In other words, this car can drive without a steering wheel. Google’s cars are at this level. The caveat is that there are times and places where the car cannot drive autonomously, such as in a snowstorm or in fog.

Level 5 is the highest level — “Full Automation.” The car can be autonomous anytime and anywhere. We have yet to reach that level of automation, but we’re getting there.

Level 6 is “Skynet Automation.” No human is even in the car, because the robots have killed all of them! (Just joking: this level doesn’t really exist.)

Autonomous Testing

So, back to autonomous testing. My company, Applitools, has just hired a new guy for our algorithm and AI team. Over lunch, we started talking about the similarities between driving and testing, and brainstorming the idea of “levels of autonomous testing.” These are our thoughts.

But caveat emptor! There is a saying in Hebrew that since the destruction of the Second Temple, prophecy has been given only to fools and children. So please take these levels with a grain of salt. On the other hand, while these thoughts reflect our fantasies, they also reflect where we think the testing world is heading in its use of AI.

Are you worried that AI will soon replace all testers? If so, skip to the last section to get your answer. But if you like your rides with suspense, then fasten your seat belts, because we’re going on a ride through the six levels of autonomous testing.

The Six Levels of Testing Autonomy.

Level 0 — “No Autonomy”

Congratulations! You’re already there. You write code that tests the application, and you’re very happy because you can run the tests on every release instead of tediously repeating them by hand. This is great, because now you can concentrate on the most important aspect of testing: thinking.

But nobody is helping you write that automation code, and writing the code itself is repetitive. Every field added to a form means adding a test. Every form added to a page means adding a test that checks all of its fields. And every page added means checking all the components and forms on that page.
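To make that repetition concrete, here is a minimal Python sketch of Level 0 style checks. It assumes a hypothetical page model: a plain dict of form fields standing in for what a browser automation driver would return. The field names, the `check_*` functions, and `run_checks` are illustrative only.

```python
# Hypothetical rendered page: a dict of form fields. In a real suite this
# would come from a browser automation driver; it is hard-coded here so the
# sketch runs on its own.
rendered_form = {
    "first_name": {"label": "First name", "visible": True},
    "last_name": {"label": "Last name", "visible": True},
    "email": {"label": "Email", "visible": True},
}


def check_first_name(form):
    assert form["first_name"]["label"] == "First name"
    assert form["first_name"]["visible"]


def check_last_name(form):
    assert form["last_name"]["label"] == "Last name"
    assert form["last_name"]["visible"]


def check_email(form):
    assert form["email"]["label"] == "Email"
    assert form["email"]["visible"]


# One hand-written check per field: adding a "phone" field to the form means
# writing (and later maintaining) yet another function like the ones above.
ALL_CHECKS = [check_first_name, check_last_name, check_email]


def run_checks(form):
    """Run every hand-written check and return the names of those that fail."""
    failures = []
    for check in ALL_CHECKS:
        try:
            check(form)
        except (AssertionError, KeyError):  # wrong value, or field missing
            failures.append(check.__name__)
    return failures


print(run_checks(rendered_form))
```

The code grows linearly with the number of fields, and a single sweeping change (say, renaming every label) fails many of these checks at once, each of which someone then has to inspect.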

Moreover, the more tests you have, the more of them fail when there is a sweeping change in the application. Each failed test needs to be checked to determine whether it reveals a real bug or just a new baseline.

Level 1 — “Drive Assistance”

An interesting thing we noticed when researching autonomous driving is that the better the car’s vision system, the more autonomous the car can be. This is why we believe that the better the AI can see the app, the more autonomous it can be. This means that the AI should be able to see not only a snapshot of the page’s DOM, but also a visual picture of the page. The DOM is only half the picture: the fact that there is an element with text in it does not mean that the user can see it. It may be obscured by other elements, or be in the wrong position. The visuals let you concentrate on the data that the user actually sees.

Once the testing framework can see the page and look at it holistically, whether through the DOM or through a screenshot, it can help you write the checks you currently write by hand.

If we take a screenshot of the page, we can check all the form fields and texts on the page in one fell swoop. Instead of writing code that checks each and every field, we can test all of them at once against a baseline captured in a previous run.

Checking the whole form in one fell swoop.
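A minimal sketch of this Level 1 idea, assuming the page can be captured as a flat mapping from element IDs to rendered text. This is a deliberate simplification: a visual testing tool works on screenshots and DOM snapshots, and `diff_against_baseline` is a hypothetical helper, not any tool’s actual API.

```python
# Level 1 sketch: instead of one assertion per field, capture the whole page
# state and diff it against a stored baseline in a single call.
# Page model (an assumption): {element_id: rendered_text}.

def diff_against_baseline(current, baseline):
    """Return a sorted list of (element_id, baseline_value, current_value)
    for every element that was added, removed, or changed."""
    diffs = []
    for element_id in sorted(set(current) | set(baseline)):
        before = baseline.get(element_id)  # None means "not in baseline"
        after = current.get(element_id)    # None means "no longer on page"
        if before != after:
            diffs.append((element_id, before, after))
    return diffs


baseline = {"title": "Sign up", "first_name": "First name", "email": "Email"}
current = {"title": "Sign up", "first_name": "First name", "email": "E-mail",
           "phone": "Phone"}

for element_id, before, after in diff_against_baseline(current, baseline):
    print(f"{element_id}: {before!r} -> {after!r}")
```

The same one-line call covers a form with three fields or thirty; a newly added field shows up as a difference automatically, with no new check code.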

This level exists today in testing tools like Applitools Eyes. Earlier tools that tried this approach failed because comparing images pixel by pixel doesn’t work in practice: tiny rendering differences that the user cannot see show up as failures. For it to work, the tool needs AI algorithms to figure out which differences are not really changes and which ones are real.
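To illustrate why exact pixel comparison is brittle, here is a toy sketch on tiny grayscale “images” (2-D lists of 0-255 values, assumed to be the same size). The tolerant comparison is a simple per-pixel threshold, not the AI-based algorithms mentioned above; it only shows the goal of ignoring differences a user would not notice while still catching real ones.

```python
# Toy comparison of exact vs. tolerant image diffs. Images are 2-D lists of
# grayscale values (0-255) with identical dimensions. The threshold approach
# is a stand-in heuristic, NOT an AI-based comparison.

def exact_diff(a, b):
    """Count pixels that differ at all."""
    return sum(
        pa != pb
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


def tolerant_diff(a, b, per_pixel_tolerance=16):
    """Count pixels whose values differ by more than per_pixel_tolerance."""
    return sum(
        abs(pa - pb) > per_pixel_tolerance
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


baseline = [[255, 255, 255], [0, 0, 0], [255, 255, 255]]            # a black line
anti_aliased = [[254, 255, 253], [3, 0, 1], [255, 252, 255]]        # same line, re-rendered
line_removed = [[255, 255, 255], [255, 255, 255], [255, 255, 255]]  # a real change

print(exact_diff(baseline, anti_aliased), tolerant_diff(baseline, anti_aliased))  # 5 0
print(exact_diff(baseline, line_removed), tolerant_diff(baseline, line_removed))  # 3 3
```

The exact comparison reports five “changed” pixels for a re-render nobody could see; the tolerant one reports none, yet both catch the removed line. Real tools need far more than a fixed threshold, which is exactly where the AI comes in.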

This is how AI assists you in writing test code today: by writing many of your checks for you. The human is still driving the tests, but those checks are performed automatically by the AI.

Also, the AI can verify that a test passes, but when the test fails, it still needs to notify a human to determine whether the failure is real or was caused by an intended change in the software. The human then has to either accept the change as good or reject it as a bug.
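The accept/reject loop can be sketched as follows, again assuming a flat element-ID-to-text page model. `ReviewSession` is a hypothetical class for illustration only: accepting a difference promotes it to the new baseline, while rejecting it records a bug and leaves the baseline untouched.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewSession:
    """Hypothetical review state: the current baseline plus reported bugs."""
    baseline: dict
    bugs: list = field(default_factory=list)

    def review(self, element_id, new_value, accept):
        """Apply one human decision to one flagged difference."""
        if accept:
            # Intended change: the new rendering becomes the baseline.
            self.baseline[element_id] = new_value
        else:
            # Unintended change: keep the old baseline and report a bug.
            self.bugs.append((element_id, self.baseline.get(element_id), new_value))


session = ReviewSession(baseline={"title": "Sign up", "email": "Email"})
session.review("title", "Create account", accept=True)  # approved redesign
session.review("email", "", accept=False)               # label vanished: a bug
print(session.baseline)
print(session.bugs)
```

Note that every flagged difference still costs one human decision, which is the bottleneck the next level addresses.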

And having the AI “see” the application means that it can check the visual aspects of an application against a baseline, something that until now could only be covered by manual testing.

But a human still needs to vet every change.

Level 2 — “Partial Automation”

With Level 1 autonomy, the tester can hand off the tedious work of writing checks for every field on a page to the AI, which tests the page against a baseline. The AI can also test the visual aspects of the page.

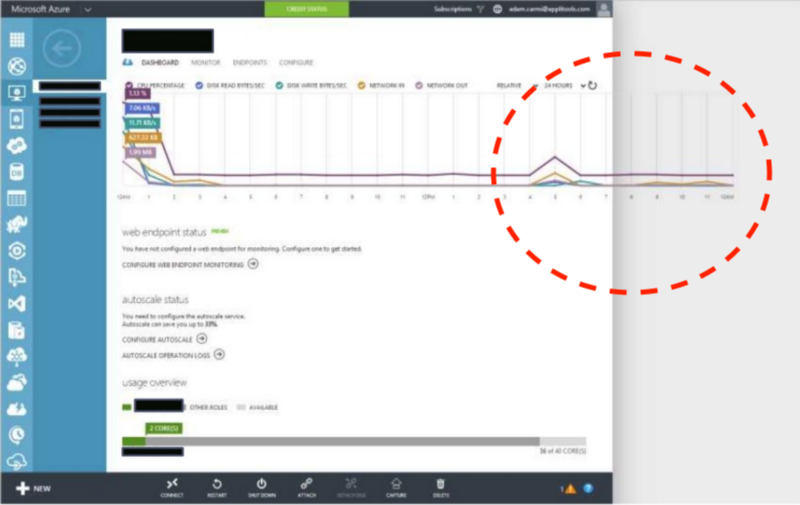

But checking every test failure to verify whether it is a “good” failure or a real bug can be tedious, especially if one change is reflected in many test failures. A Level 2 AI needs to understand the difference not in terms of bitmap differences, but in terms a human, and specifically the application’s user, can understand. Thus, a Level 2 AI will be able to group changes across many pages, because it understands that semantically they are the same “change.” We’re starting to see tools like Applitools Eyes use AI techniques to do just that.

A Level 2 AI will be able to group these changes, tell the human that they are the “same” change, and ask them to confirm or reject all of them as a group.

Grouping similar changes in Applitools Eyes.

In other words, a Level 2 AI will assist the human in checking changes against the baseline, turning what used to be a tedious effort into a simple one.
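Grouping can be sketched in a few lines, under a strong simplifying assumption: two differences count as “the same change” when they have the same old and new text. A Level 2 AI would use a much richer, learned notion of semantic similarity; `group_changes` and the sample data are hypothetical.

```python
from collections import defaultdict


def group_changes(diffs):
    """diffs: iterable of (page, element_id, old_text, new_text).
    Returns {(old_text, new_text): [(page, element_id), ...]}, so each
    group can be approved or rejected with a single decision."""
    groups = defaultdict(list)
    for page, element_id, old, new in diffs:
        # Simplistic signature; a real tool would compare changes semantically.
        groups[(old, new)].append((page, element_id))
    return dict(groups)


diffs = [
    ("/home", "cta", "Sign up", "Join now"),
    ("/pricing", "cta", "Sign up", "Join now"),
    ("/blog", "footer-cta", "Sign up", "Join now"),
    ("/pricing", "price", "$10", "$12"),
]
groups = group_changes(diffs)
for (old, new), places in groups.items():
    print(f"{old!r} -> {new!r}: {len(places)} occurrence(s)")
```

Here the human makes two decisions instead of four; on a large site, one renamed button could otherwise mean hundreds of individual reviews.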

Level 3 — “Conditional Automation”

At Level 2, any failure or change detected in the software still needs to be vetted by a human. A Level 2 AI can help analyze the change, but it cannot tell whether a page is correct just by looking at it; it needs a baseline to compare against. A Level 3 AI can do that and much more, because it applies machine learning techniques to the page.

For example, a Level 3 AI can look at the visual aspects of a page and figure out whether the design is off, based on standard design rules such as alignment, whitespace use, color and font usage, and layout.

A Level 3 AI will autonomously determine that this is a bug.
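One such design rule, alignment, can be checked without any baseline by comparing elements with each other instead of with a previous screenshot. This is a hand-written rule rather than a learned model; the `(name, left_x)` input format, the `misaligned` helper, and the 2-pixel tolerance are all assumptions for illustration.

```python
from collections import Counter


def misaligned(elements, tolerance=2):
    """elements: non-empty list of (name, left_x) for elements stacked in one
    column, such as form fields. Returns the names whose left edge is more
    than `tolerance` pixels away from the most common left edge."""
    most_common_x, _count = Counter(x for _, x in elements).most_common(1)[0]
    return [name for name, x in elements if abs(x - most_common_x) > tolerance]


form_fields = [("first_name", 40), ("last_name", 40), ("email", 47), ("phone", 40)]
print(misaligned(form_fields))
```

No baseline is involved: the page is judged against a general design expectation, which is what distinguishes this level from Levels 1 and 2.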

And what about the data? A Level 3 AI will also be able to look at the data and figure out that all the values seen so far in a given field fall within a specific range, that one field is an email address, and that another must be the sum of the fields above it. It can also figure out that a table on a given page needs to be sorted by a particular column.
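The data rules above can be sketched as simple checks on a hypothetical page model. This is only an illustration under loud assumptions: the field names are invented, the “learned” range is just the min and max of past values, and the email check is a rough regular expression. A Level 3 AI would infer which rules apply instead of having them hard-coded.

```python
import re

# Rough email shape: something@something.something (not a full RFC check).
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def learn_range(history):
    """'Learn' a valid range from values seen in previous runs."""
    return min(history), max(history)


def check_page(page, value_range):
    """Return rule violations for one page (a dict with hypothetical fields)."""
    problems = []
    low, high = value_range
    if not low <= page["discount_pct"] <= high:
        problems.append("discount_pct out of learned range")
    if not EMAIL_RE.match(page["contact_email"]):
        problems.append("contact_email is not an email")
    if page["total"] != sum(page["line_items"]):
        problems.append("total is not the sum of line_items")
    dates = [row["date"] for row in page["orders"]]  # ISO dates sort as strings
    if dates != sorted(dates):
        problems.append("orders not sorted by date")
    return problems


value_range = learn_range([5, 10, 15, 20])
good_page = {"discount_pct": 10, "contact_email": "support@example.com",
             "total": 30, "line_items": [10, 20],
             "orders": [{"date": "2018-01-02"}, {"date": "2018-03-04"}]}
bad_page = {"discount_pct": 95, "contact_email": "support",
            "total": 31, "line_items": [10, 20],
            "orders": [{"date": "2018-03-04"}, {"date": "2018-01-02"}]}
print(check_page(good_page, value_range))
print(check_page(bad_page, value_range))
```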

The AI can now look at a page and determine whether it is OK, without human intervention, just by understanding the design and data rules. Even if the page has changed, the AI can still recognize that it is OK and not submit it to a human to vet.

And because the AI sees hundreds of test results and how they change over time, it can apply machine learning techniques to detect anomalies in those changes, and submit a test to a human for verification only when it detects such an anomaly.
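As a rough illustration of “escalate only anomalies,” the sketch below tracks how many regions changed in each past run of a test and flags a new run only when its count is an outlier. A z-score over the history is a simple statistical stand-in for the machine learning techniques mentioned above; `is_anomalous` and the threshold of 3 are assumptions.

```python
from statistics import mean, pstdev


def is_anomalous(history, new_count, z_threshold=3.0):
    """history: non-empty list of changed-region counts from past runs.
    Returns True if new_count is unusual enough to send to a human."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Perfectly stable history: any deviation at all is unusual.
        return new_count != mu
    return abs(new_count - mu) / sigma > z_threshold


history = [2, 3, 2, 4, 3, 2, 3]  # a few regions change in every run (e.g. dates)
print(is_anomalous(history, 3))   # business as usual, no human needed
print(is_anomalous(history, 40))  # something big happened, escalate
```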

Level 4 — “High Automation”

Up to now, all the AI did was run the checks automatically. The human is still driving the test, and clicking on the links (albeit using automation software). Level 4 is where the AI is driving the test itself!

A Level 4 AI will be able to understand some pages by visually looking at them and recognizing what type of page each one is. Because it can look at a page and understand it as a human does, it will know that this page is a login page, this one is a profile page, and that one is a registration page or a shopping cart page.
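A Level 4 AI would recognize page types visually, the way a human does. As a crude stand-in, the sketch below classifies a page with hand-written rules over a hypothetical list of input types and button labels. It is only meant to show the kind of output such recognition produces, not how the recognition itself would work.

```python
def classify_page(input_types, button_labels):
    """Guess a page type from its input types and button labels
    (rule-based stand-in for visual page recognition)."""
    buttons = {label.lower() for label in button_labels}
    passwords = input_types.count("password")
    if passwords >= 2:
        return "registration"  # password plus "confirm password"
    if passwords == 1:
        return "login"
    if "checkout" in buttons:
        return "shopping cart"
    if "save profile" in buttons:
        return "profile"
    return "unknown"


print(classify_page(["email", "password"], ["Log in"]))
print(classify_page(["text", "email", "password", "password"], ["Sign up"]))
print(classify_page([], ["Checkout", "Continue shopping"]))
```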

A Level 4 AI will be able to observe user interactions over time, visualize them, and understand the pages and the flow through them. Once the AI understands the type of page, it can use techniques like reinforcement learning to start driving tests on it automatically, without human intervention. It will be able to write the tests themselves, not just the checks. Naturally, it will use the visual (and other) techniques of Levels 2 and 3 to find the bugs on each page.
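The “driving” part can be illustrated with an explorer that walks the app without a script and records a click path to every page it reaches. This sketch uses a plain breadth-first search over a hypothetical, hard-coded map of each page’s links; it is a swapped-in technique for illustration, not reinforcement learning, and `SITE` and `discover_flows` are invented names.

```python
from collections import deque

# Hypothetical app model: page -> {link label: target page}.
SITE = {
    "home": {"Log in": "login", "Shop": "catalog"},
    "login": {"Submit": "profile"},
    "catalog": {"Add to cart": "cart"},
    "cart": {"Checkout": "payment"},
    "profile": {},
    "payment": {},
}


def discover_flows(site, start="home"):
    """Breadth-first exploration: returns {page: [link labels clicked to
    reach it from start]}, i.e. one shortest test flow per reachable page."""
    flows = {start: []}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for label, target in site[page].items():
            if target not in flows:  # first visit is via a shortest path
                flows[target] = flows[page] + [label]
                queue.append(target)
    return flows


for page, clicks in discover_flows(SITE).items():
    print(page, "<-", " > ".join(clicks) or "(start)")
```

Each discovered flow could then be replayed, with Level 1 to 3 checks applied on every page along the way.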

Level 5 — “Full Automation”

This level is really sci-fi, and we’re starting to fly on wings of fantasy: at this level, the AI will be able to converse with the product manager, understand the application, and fully drive the tests itself!

Unfortunately, given that no human has ever been able to intelligently understand a product manager’s description of an application, AIs will need to be much smarter than humans to reach this level!

Level 6 — “Skynet Automation”

At this level, due to the Robot Apocalypse, no humans are left alive, so there’s nothing to test, is there? Then again, who’s testing the robot software? Hm…

Are We There Yet?

Are we there yet? Do we have to start looking for a job because computers are going to automate all software testing?

Most emphatically, no! Software testing is similar to driving in some respects, but in others it is much more complicated, because it entails understanding complex human interactions. Today’s AI doesn’t really understand what it’s doing; it’s just automating tasks based on lots and lots of historical data.

Where are we now in terms of using AI? Advanced tools like Applitools Eyes are currently at Level 1 and progressing nicely on Level 2 functionality. While companies are working on Level 2, a Level 3 AI will need a lot more work and research (but is definitely doable). A Level 4 AI is still far in the future. This is good news: in the next decade or so, we will be able to enjoy the fruits of AI-assisted testing without the nasty side effects.

But will we one day, in the far, far future, be out of a job? Who knows? Since the destruction of the Second Temple, prophecy has been given only to fools and children!

Published at DZone with permission of Gil Tayar. See the original article here.

Opinions expressed by DZone contributors are their own.
