New LAMP Stack: Illuminating Innovation in Generative AI Development

The LAMP stack is becoming essential for generative AI development and deployment in various domains.

In the dynamic world of Information Technology (IT), the architecture and frameworks that power web applications have undergone significant evolution. However, one paradigm that has stood the test of time and continues to be a cornerstone in web development is the LAMP stack. The acronym LAMP stands for Linux, Apache, MySQL, and PHP/Python/Perl, representing a powerful combination of open-source technologies that work synergistically to create robust and scalable web applications.

Linux

The foundation of the LAMP stack is Linux, an open-source operating system renowned for its stability, security, and versatility. Linux provides a solid base for hosting web applications, offering a wealth of tools and resources for developers. Its open nature fosters collaboration and innovation, aligning perfectly with the ethos of the LAMP stack.

Apache

At the heart of the LAMP stack, Apache serves as the web server, responsible for handling incoming requests, processing them, and delivering the appropriate responses. Apache's modular architecture allows for flexibility, enabling developers to customize and extend its functionality based on specific project requirements. With a robust community and a long history of development, Apache has become synonymous with reliable web hosting.

MySQL

The 'M' in LAMP represents MySQL, a popular open-source relational database management system (RDBMS). MySQL integrates with the other components of the stack, providing a scalable and efficient solution for data storage and retrieval. Its support for transactions, indexing, and relational data modeling makes it a common choice for project teams aiming to build high-performance database-driven applications.

PHP/Python/Perl

The final component of the LAMP stack represents the server-side scripting language, denoted by 'P'. While PHP is the most commonly associated language, the stack is flexible enough to accommodate alternatives like Python and Perl. These scripting languages empower developers to create dynamic and interactive web pages, facilitating the seamless integration of data from the MySQL database into the web application.
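As a rough illustration of how a server-side script turns database rows into a dynamic page, here is a minimal Python sketch. It makes several assumptions that are not from the article: it uses the standard library's `sqlite3` as a stand-in for MySQL so that it runs without a database server, and the `posts` table and the function names `make_db`, `render_posts`, and `make_app` are hypothetical.

```python
import sqlite3
from html import escape


def make_db():
    """Create an in-memory database with a small sample table (sqlite3 stands in for MySQL)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
    conn.executemany(
        "INSERT INTO posts (title) VALUES (?)",
        [("Hello LAMP",), ("Second post",)],
    )
    conn.commit()
    return conn


def render_posts(conn):
    """Query the database and render the rows as an HTML list."""
    rows = conn.execute("SELECT title FROM posts ORDER BY id").fetchall()
    items = "".join(f"<li>{escape(title)}</li>" for (title,) in rows)
    return f"<ul>{items}</ul>"


def make_app(conn):
    """Build a WSGI application that serves the rendered list on every request."""
    def app(environ, start_response):
        body = render_posts(conn).encode("utf-8")
        headers = [
            ("Content-Type", "text/html; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ]
        start_response("200 OK", headers)
        return [body]
    return app
```

To try it locally, the application can be served with `wsgiref.simple_server.make_server("", 8000, make_app(make_db())).serve_forever()`. In an actual LAMP deployment, Apache would front the script (for Python, typically through a WSGI module) and the queries would go to MySQL through a MySQL driver.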

LAMP Applications

The LAMP stack's practical application extends across a spectrum of industries and use cases. Its open-source nature makes it a cost-effective solution for businesses of all sizes, enabling them to harness the power of cutting-edge technologies without the burden of licensing fees. The stack's versatility is evident in its ability to support a myriad of applications, from content management systems (CMS) like WordPress to e-commerce platforms like Magento. Furthermore, the LAMP stack excels in scalability, making it suitable for projects ranging from small-scale startups to enterprise-level applications. The modular design of each component allows developers to tailor the stack to meet the specific needs of their projects, ensuring optimal performance and resource utilization.

The LAMP stack has demonstrated resilience and adaptability in the rapidly evolving world of IT. While newer technologies and frameworks emerge, the LAMP stack remains a solid choice for developers seeking a reliable and proven foundation for web development. The collaborative ethos of the open-source community, coupled with the individual strengths of Linux, Apache, MySQL, and PHP/Python/Perl, makes the LAMP stack a timeless and pragmatic solution for building robust and scalable web applications.

The LAMP stack's enduring popularity can be attributed to its seamless integration of open-source technologies, providing a foundation that is stable, scalable, and cost-effective. As the IT landscape continues to evolve, the LAMP stack stands as a testament to the enduring value of collaboration, openness, and practicality in the field of web development.

New LAMP

The realm of artificial intelligence (AI) is rapidly evolving, with transformative potential across diverse industries. Generative AI (GenAI), a subset of AI, has emerged as a game-changer, empowering machines to create novel and original content, from realistic images and videos to captivating music and insightful text. To effectively harness the power of generative AI, a robust and seamless development environment is crucial.

Enter the new LAMP stack, a novel combination of tools tailored specifically for generative AI development. This open-source bundle, comprising LangChain, Aviary, MLFlow, and Pgvector, offers a comprehensive and integrated framework for building, training, deploying, and managing generative AI applications, as illustrated in Figure 1.

Figure 1: GenAI LAMP Stack

LangChain: The Power of AI-driven Programming

At the heart of the GenAI LAMP stack lies LangChain, an open-source framework for building applications powered by large language models (LLMs). LangChain lets developers call AI models directly from their code and combine them with prompts, tools, and data sources. This approach streamlines the development of sophisticated generative AI solutions, letting developers focus on their creative vision rather than the intricacies of AI model integration.

LangChain's key features include:

- Declarative composition: LangChain lets developers describe a pipeline of prompts, model calls, and output parsers as a chain, expressing their intent rather than explicitly coding every step of the AI process.

- Model-aware building blocks: LangChain provides abstractions such as prompt templates, document loaders, retrievers, and memory, so that data can be passed to and from AI models directly within the code.

- Debugging and tracing support: LangChain offers callbacks and tracing that help developers inspect each step of a chain and resolve issues more efficiently.
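The idea of using a model "directly within code" as one step of a larger pipeline can be sketched in plain Python. This is not LangChain's API: `chain`, `prompt_template`, `fake_llm`, and `comma_list_parser` are hypothetical names, and `fake_llm` returns a canned answer so the example runs without any model or network access.

```python
def chain(*steps):
    """Compose steps into a pipeline: the output of each step feeds the next."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run


def prompt_template(template):
    """Return a step that fills a prompt template from a dict of variables."""
    return lambda variables: template.format(**variables)


def fake_llm(prompt):
    """Stand-in for a real model call (assumption: returns a canned reply)."""
    return "red, green, blue"


def comma_list_parser(text):
    """Parse a comma-separated model reply into a list of strings."""
    return [item.strip() for item in text.split(",") if item.strip()]


# Declare the pipeline once, then call it like an ordinary function.
list_colors = chain(
    prompt_template("List {n} colors, separated by commas."),
    fake_llm,
    comma_list_parser,
)
```

Calling `list_colors({"n": 3})` runs the template, the stand-in model, and the parser in order. Swapping `fake_llm` for a function that calls a real model would leave the rest of the pipeline unchanged, which is the main appeal of this style of composition.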

Aviary: A Unified Runtime Environment for Generative AI

Aviary, the second component of the GenAI LAMP stack, is a large language model (LLM) serving solution that makes it easy to deploy and manage a variety of open-source LLMs. Open sourced by Anyscale, Aviary builds on Ray, a recognized framework for scalable AI, and specifically on Ray Serve, a highly flexible serving framework integrated into Ray. Aviary integrates with LangChain and provides a comprehensive repository of pre-trained generative AI models. This readily available collection of models accelerates the development process by eliminating the need to train models from scratch.

Aviary’s key features include:

- Extensive model collection: Aviary offers a comprehensive suite of pre-configured open-source LLMs with user-friendly defaults that work out of the box. New LLMs can be added within minutes in most cases.

- Unified framework: Aviary implements acceleration techniques, such as DeepSpeed, in conjunction with the bundled LLMs. It streamlines the deployment of multiple LLMs.

- Innovative scalability: Aviary provides unparalleled autoscaling support, including scale-to-zero – a pioneering feature in the open-source domain.
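To make the scale-to-zero idea concrete, the following sketch computes how many model replicas a serving layer might run for a given load. It is an illustration only and is not Aviary's or Ray Serve's actual autoscaling policy; the function name `desired_replicas` and its parameters are assumptions made for this example.

```python
import math


def desired_replicas(pending_requests, target_per_replica, max_replicas, min_replicas=0):
    """Return the replica count for the current load.

    With min_replicas=0 the deployment scales to zero when idle, so an
    unused model holds no compute; otherwise it keeps a warm minimum.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if pending_requests <= 0:
        return min_replicas
    # Enough replicas so that each handles at most target_per_replica requests.
    needed = math.ceil(pending_requests / target_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(needed, max_replicas))
```

The trade-off is latency on the first request after an idle period, since a replica has to start and load the model before it can respond. Setting `min_replicas=1` keeps one replica warm at the cost of always paying for it.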

Aviary distinguishes itself by combining features that exist individually in other solutions, such as Hugging Face’s text-generation-inference, and building on their foundations; none of those solutions integrates all of these capabilities in a user-friendly manner. The project plans to expand Aviary's feature set with support for streaming, continuous batching, and other enhancements.

MLFlow: Managing the Generative AI Model Lifecycle

MLFlow, the third component of the GenAI LAMP stack, serves as a central platform for managing the lifecycle of generative AI models. MLFlow facilitates model training, experimentation, deployment, and monitoring, providing a unified framework for managing the entire model development process.

MLFlow's key features include:

- Providing a streamlined environment for training and experimenting with generative AI models, enabling developers to optimize model performance and select the most effective approaches.

- Simplifying the deployment of generative AI models to production environments, ensuring that models are accessible and can be seamlessly integrated into real-world applications.

- Offering comprehensive model monitoring capabilities, allowing developers to track model performance over time and identify potential issues promptly.
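The experiment-tracking part of this lifecycle can be sketched with a small in-memory tracker. This is a conceptual stand-in and not MLFlow's API: the `Run` and `Tracker` classes, the `temperature` parameter, the `quality` metric, and the scores are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One experiment run: its parameters and the metrics it produced."""
    run_id: int
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)


class Tracker:
    """Minimal in-memory experiment tracker (hypothetical, for illustration)."""

    def __init__(self):
        self.runs = []

    def start_run(self, **params):
        run = Run(run_id=len(self.runs) + 1, params=dict(params))
        self.runs.append(run)
        return run

    def log_metric(self, run, name, value):
        run.metrics[name] = value

    def best_run(self, metric, higher_is_better=True):
        candidates = [r for r in self.runs if metric in r.metrics]
        if not candidates:
            raise ValueError(f"no run has logged metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


# Record three runs with different settings, then select the best one.
tracker = Tracker()
for temperature, score in [(0.2, 0.61), (0.7, 0.74), (1.0, 0.58)]:
    run = tracker.start_run(temperature=temperature)
    tracker.log_metric(run, "quality", score)

best = tracker.best_run("quality")
```

Here `best` is the run logged with `temperature=0.7`, since it has the highest `quality` score. A real tracking server adds persistence, artifact storage, and a UI on top of the same basic record of parameters and metrics per run.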

Pgvector: Efficient Processing of Generative AI Data

Pgvector, the final component of the GenAI LAMP stack, is an open-source extension for PostgreSQL that stores vector embeddings and searches them by similarity. It lets generative AI applications keep embeddings alongside their relational data and quickly retrieve the items most relevant to a query.

Pgvector's key features include:

- Vector data type and operators: Pgvector adds a vector column type and distance operators (Euclidean distance, inner product, and cosine distance), enabling efficient comparison of the embeddings frequently encountered in generative AI applications.

- Exact and approximate nearest-neighbor search: Pgvector supports exact search as well as index-based approximate search, significantly reducing query time on large datasets.

- Scalable architecture: Because it runs inside PostgreSQL, Pgvector can rely on the database's existing indexing, replication, and operational tooling to accommodate growing data volumes and the evolving needs of generative AI development.
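Since pgvector is a PostgreSQL extension for vector similarity search, the core operation it performs is ranking stored vectors by their distance to a query vector. The sketch below shows that operation in pure Python; the function names and sample rows are assumptions, and the SQL in the comment uses a hypothetical `items` table with an `embedding` column.

```python
import math


def cosine_distance(a, b):
    """Cosine distance (1 - cosine similarity) between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query, rows, k=1):
    """Return the ids of the k rows closest to the query vector.

    rows is a list of (id, embedding) pairs. This is an exact scan over all
    rows; in pgvector the comparable query on a hypothetical table would be:
        SELECT id FROM items ORDER BY embedding <=> '[1,0,0]' LIMIT 2;
    where <=> is pgvector's cosine distance operator.
    """
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [row_id for row_id, _ in ranked[:k]]
```

An exact scan like this compares the query against every stored vector, which is fine for small tables. For larger ones, an approximate index trades a little recall for much lower query time.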

The GenAI LAMP stack, composed of LangChain, Aviary, MLFlow, and Pgvector, represents a transformative approach to generative AI development. This integrated framework empowers developers to focus on their creative vision rather than the technical complexities of AI model implementation. By leveraging the power of LangChain's AI-driven programming, Aviary’s autoscaling features, MLFlow's comprehensive model management capabilities, and Pgvector’s performant vector operations, developers can streamline their GenAI workflows, accelerate model development, and achieve superior performance.

Benefits of GenAI LAMP

The new LAMP stack for generative AI offers a number of benefits over the traditional LAMP stack in the AI space, including:

- Improved architecture: The GenAI LAMP components are modular and can be containerized and run as separate services, promoting better resource utilization, easier maintenance, and scalability, in contrast with the more monolithic structure of the old LAMP stack.

- Improved runtime management: Aviary’s operations management features make it easier to deploy, scale, and manage models at runtime, which is essential for running high-quality AI applications.

- Improved model management: MLFlow's model management features make it easier to track, manage, and deploy AI models.

- Improved vector storage and manipulation: Pgvector's vector storage and manipulation features make it easier to store and manipulate AI model vectors.

The new LAMP stack for generative AI is a powerful and versatile platform that can be used to develop, deploy, and manage AI models. The stack's improved architecture, runtime management, model management, and vector storage and manipulation features make it an ideal platform for developing and deploying cutting-edge AI applications.

Pros and Cons

Some advantages of GenAI LAMP include:

- LangChain's Language Model Tooling: LangChain offers robust tooling for working with language models, facilitating the development of Natural Language Processing (NLP) applications. Its prompt management, chaining, and agent capabilities contribute significantly to the effectiveness of LLM applications.

- Aviary's Versatility in Model Serving: Aviary excels at serving and scaling open-source LLMs, providing a versatile platform for running diverse models. Its pre-configured model collection gives teams flexibility in choosing the right LLM for an application.

- MLFlow's Model Management and Tracking: MLFlow's comprehensive model management and tracking capabilities streamline the development and deployment of LLM applications. Efficient version control, experiment tracking, and model packaging enhance the overall workflow and collaboration among development teams.

- Pgvector's Integration with PostgreSQL: Pgvector's seamless integration with PostgreSQL adds a powerful vector similarity search functionality to LLM applications. This enables efficient handling of vectorized data, enhancing the model's ability to retrieve relevant information and improve overall performance.

Some disadvantages of GenAI LAMP are:

- Integration Challenges: Combining LangChain, Aviary, MLFlow, and Pgvector may pose integration challenges, as each tool comes with its own set of dependencies and requirements. Ensuring smooth interoperability may demand additional development efforts and careful consideration of compatibility issues.

- Learning Curve: The diverse nature of these tools may result in a steep learning curve for inexperienced development teams. Adapting to the unique features and functionalities of LangChain, Aviary, MLFlow, and Pgvector could slow down the initial development phase and impact overall project timelines.

- Resource Intensiveness: The collective use of these tools may lead to increased resource utilization, both in terms of computational power and storage. This could potentially escalate infrastructure costs, especially when dealing with large-scale LLM applications.

- Maintenance Complexity: With multiple tools in play, the ongoing maintenance of the system might become complex. Regular updates, bug fixes, and ensuring compatibility across the entire stack could pose challenges, demanding a dedicated effort from the IT team.

GenAI LAMP in Action

The new LAMP's synergistic combination of LangChain, Aviary, MLFlow, and Pgvector empowers developers and organizations to unleash the full potential of generative AI as a transformative force. This powerful framework enables the creation of groundbreaking applications that can revolutionize industries and enhance human capabilities.

For instance, in the healthcare sector, LAMP-based generative AI applications can be trained to analyze medical images, identify abnormalities, and assist in diagnosis. This technology has the potential to improve patient outcomes and reduce the burden on healthcare professionals.

In the area of education, generative AI solutions powered by LAMP can personalize learning experiences, adapt to individual student needs, and provide real-time feedback. This personalized approach to education can enhance student engagement, improve learning outcomes, and close achievement gaps.

Moreover, generative AI systems based on LAMP can revolutionize the creative industries, enabling artists, designers, and musicians to explore new frontiers of expression. This technology can generate novel ideas, produce original content, and assist in the creative process, expanding the boundaries of artistic expression.

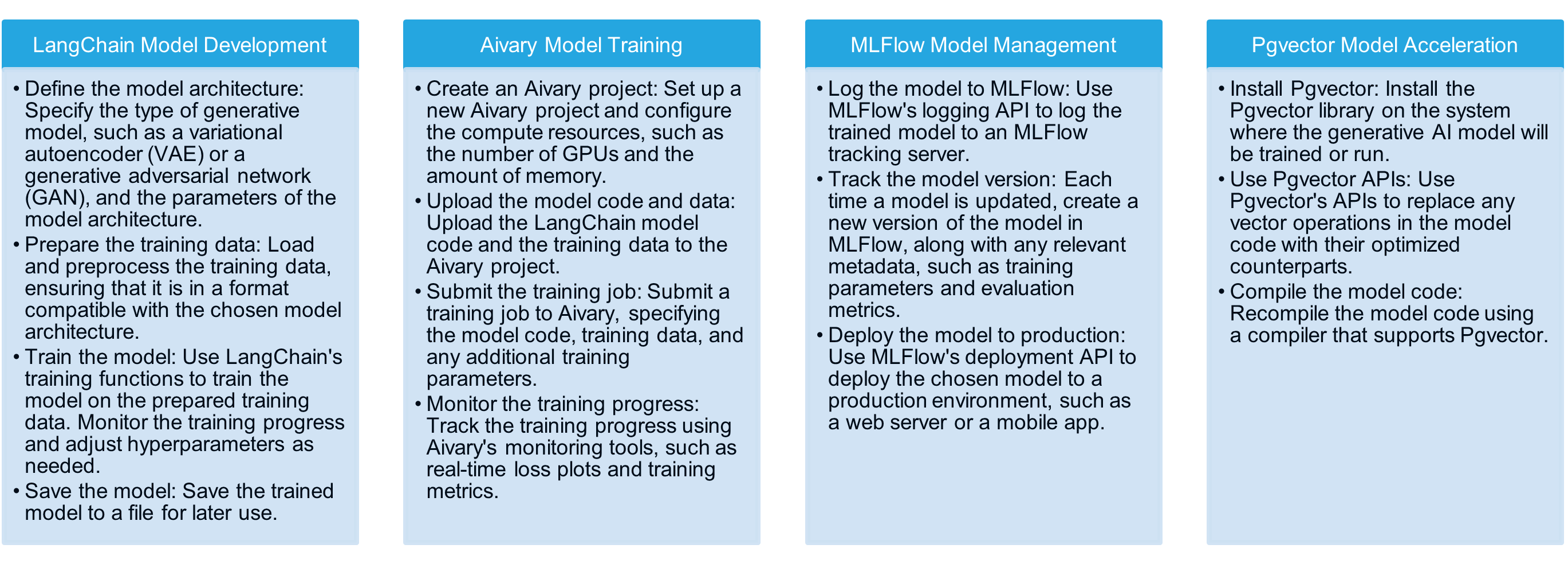

As an implementation example, consider a generative AI model that generates images from text descriptions. The following steps outline the process of using GenAI LAMP to develop and deploy this solution:

- Model Development: Use LangChain to define the model architecture based on GAN.

- Data Preparation: Gather a dataset of paired text descriptions and corresponding images. Preprocess the text descriptions to extract relevant features and the images to ensure consistency in size and format.

- Model Training: Train the LangChain model on the prepared dataset using Aviary's scalable computing resources. Monitor the training progress and adjust hyperparameters to optimize the model's performance.

- Model Management: Log the trained model to MLFlow and track its version. Deploy the model to a web server using MLFlow's deployment API.

- Model Acceleration: Install Pgvector and use its APIs to accelerate the model's training and inference. Recompile the model code using a compiler that supports.

The detailed steps in each stage of the reference implementation process are shown in Figure 2.

Figure 2: Implementation Steps of GenAI LAMP Stack

According to a recent survey by Sequoia Capital, 88% of respondents consider a retrieval mechanism, such as a vector database, an integral part of their technology stack. Retrieval supplies relevant context to models, which improves the quality of results, reduces the inaccuracies known as "hallucinations," and addresses challenges associated with data freshness. Approaches vary: some respondents opt for purpose-built vector databases such as Pinecone, Weaviate, Chroma, Qdrant, and Milvus, while others use well-established solutions like Pgvector or AWS offerings.
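How a retrieval step supplies context to a model can be sketched as follows. To keep the example self-contained, it ranks documents by simple word overlap rather than by vector similarity, which is an assumption made for illustration; a production system would query a vector database instead. The function names and the prompt wording are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    """Return up to k documents sharing the most words with the question.

    Word overlap is a toy stand-in for vector similarity search.
    """
    query_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    # Drop documents that share no words with the question at all.
    return [doc for doc in ranked[:k] if query_words & tokenize(doc)]


def build_prompt(question, documents, k=2):
    """Assemble a prompt that grounds the model in the retrieved context."""
    lines = ["Answer using only the context below.", "", "Context:"]
    lines += [f"- {doc}" for doc in retrieve(question, documents, k)]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)
```

Because the context is fetched at question time, updating the document store changes the model's answers without retraining. This is how retrieval helps with both data freshness and hallucinations.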

Another significant finding is that 38% of participants expressed interest in adopting an LLM orchestration and application development framework such as LangChain. This interest spans both prototyping and production environments, with adoption growing noticeably over recent months. LangChain helps developers of LLM applications by abstracting away common challenges: combining models into higher-level systems, orchestrating multiple calls to models, connecting models to tools and data sources, building agents that can operate those tools, and, crucially, reducing vendor lock-in by making it easier to switch between language models.
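The vendor lock-in point can be illustrated with a small sketch: application code depends on a narrow interface, and each model provider is wrapped to satisfy it. This is not LangChain's API. The `TextModel` protocol and the two toy models are hypothetical, and neither calls a real provider.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only interface the application code depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy model standing in for one provider."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Toy model standing in for a different provider."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: TextModel, text: str) -> str:
    """Application logic written once, independent of the provider behind it."""
    return model.complete(f"Summarize: {text}")
```

Switching providers then means passing a different object to `summarize`, for example `summarize(UpperModel(), "hi")` instead of `summarize(EchoModel(), "hi")`, with no change to the application logic.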

In essence, the survey findings underscore the industry's recognition of the pivotal role retrieval mechanisms and advanced frameworks play in enhancing the robustness and efficiency of LLM applications. The nuanced approaches and preferences exhibited by the respondents reflect the dynamism of the field, with an evident trend towards adopting sophisticated tools and frameworks like GenAI LAMP for more seamless and effective AI development.

Conclusion

As generative AI continues to evolve, the new LAMP stack is poised to become an essential tool for developers, researchers, and businesses. By providing a powerful, versatile, and cost-effective platform, this open-source stack will enable the development and deployment of innovative, generative AI applications that will transform industries and reshape our world.

In addition to the core components of the GenAI LAMP stack, several other tools and technologies are playing an important role in the solution lifecycle of generative AI. These include:

- Cloud computing: Cloud-based platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) provide access to the powerful computing resources required for training and running generative AI models.

- Quantum computing: Quantum computers use quantum bits, or qubits, which can exist in multiple states simultaneously, a phenomenon known as superposition. Together with entanglement, in which qubits become correlated so that the state of one is linked to the state of another regardless of the physical distance between them, this enables quantum computers to perform certain calculations with an exponential speedup over classical computers.

- Hardware accelerators: Specialized hardware accelerators such as GPUs and TPUs are increasingly being used to accelerate the training and inference of generative AI models.

The GenAI LAMP stack and the surrounding ecosystem of tools and technologies are enabling a new era of innovation in generative AI. By making it easier and more affordable to develop and deploy these models, we can expect to see an explosion of new applications that will change the way we live, work, and interact with the world around us.

Opinions expressed by DZone contributors are their own.
