More Harm Than Good? On DORA Metrics, SPACE and DevEx

DORA metrics measure against flawed endpoints, driving burnout instead of addressing on-time delivery, whilst survey-based frameworks like SPACE and DevEx can report inaccurate data.

The DORA Four Key Metrics have quickly become the “industry standard” for software engineering metrics, and their successor metrics frameworks, SPACE and DevEx, are being trialed in engineering teams already.

However, new research has found two fatal flaws in how these models map to reality. It is essential for those looking to adopt these frameworks to be aware of these potential pitfalls before they materialize.

Data Collection Flaws

The first flaw concerns how data collection has been undertaken. The DORA State of DevOps reports have used surveys of software engineers to collect data, and the DevEx framework asserts, “Surveys, in particular, are a crucial tool for measuring DevEx.”

However, population-level research has highlighted profound flaws in using surveys to assess performance. At a team or organizational level, these flaws cannot be managed effectively.

Research conducted by Survation for the software auditing firm Engprax found that subjective surveys often result in inflated self-assessments: 94% of software engineers rate their job performance as average or above, and men are 26% more likely than women to consider themselves better-than-average performers. A historical study was consistent with this, finding that software engineers tend to overestimate their performance, with up to 42% rating themselves in the top 5%.

Moreover, “those with the lowest programming skill” are the most likely to be over-optimistic when evaluating software delivery performance in large projects.

This positivity doesn’t extend to management: software engineers are, on average, nearly 17% more likely to agree to a great or moderate extent that other managers in the industry are generally good than to say the same of their own.

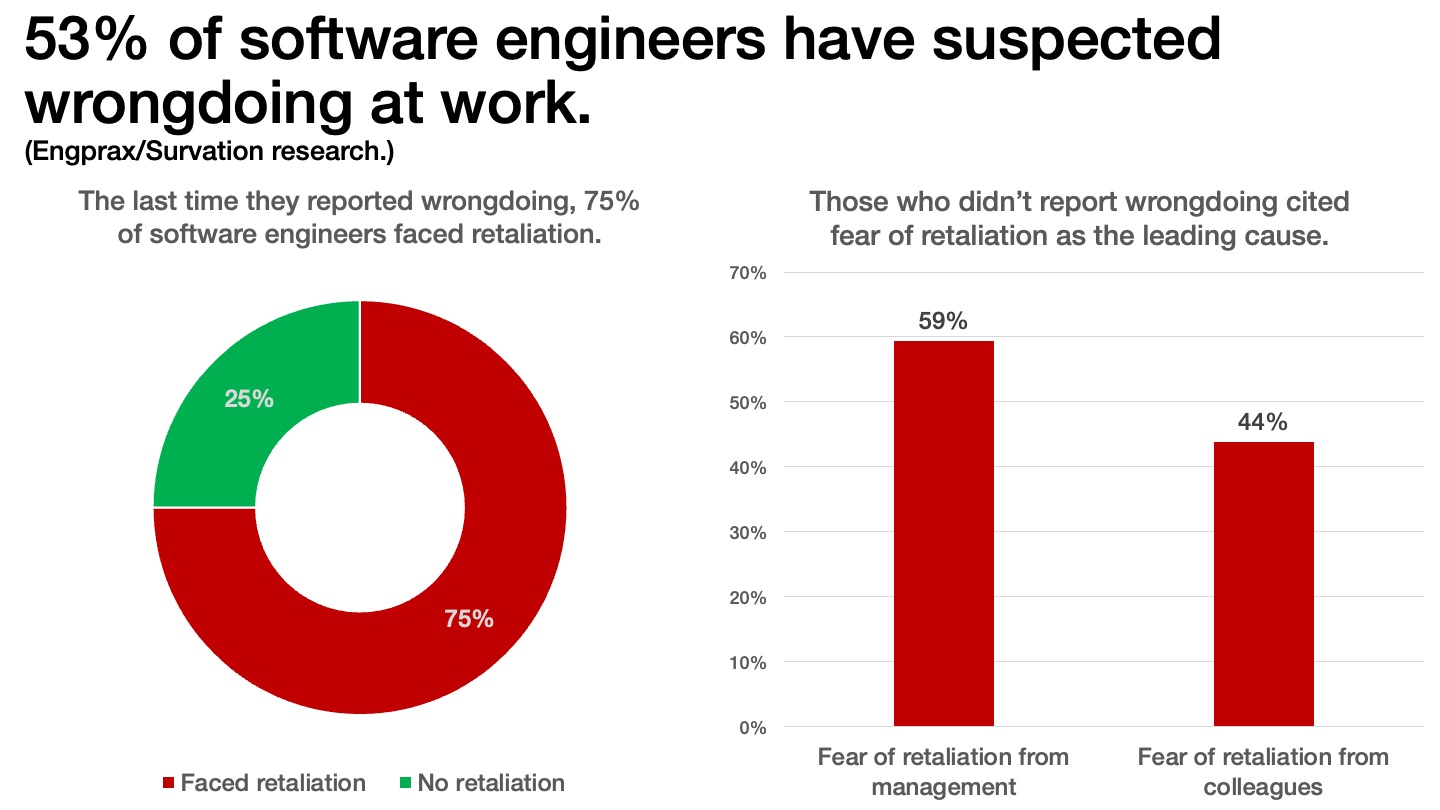

This research has also highlighted other barriers that stop engineers from speaking up. Of the software engineers who did speak up about something they had seen at work, 75% reported facing retaliation. Of those who did not speak up, 59% said it was because they feared retaliation.

The research has also highlighted the risk of excluding software engineers when these practices are used. Nearly one in three (31%) either didn’t feel their achievements at work were well-celebrated at all or only “to a small extent.” With nearly one in four software engineers saying they were unable to take calculated risks without fear of negative consequences, this research has highlighted the challenges of using such surveys in team settings.

Often, those conducting surveys in a team setting will notice that results worsen as improvements are made, because the team feels more psychologically safe to report failures. This demonstrates how these metrics fail to quantify the true state of affairs.

Framework Flaws

The second flaw concerns what endpoints the frameworks measure against. The DORA model measures team performance against “Four Key Metrics,” which are all concerned with speed. Essentially, it measures the speed to deploy and fix bugs.

However, 2021 research by Haystack and Survation found that 83% of software engineers suffer from burnout, and 2023 research found that the introduction of DORA metrics across the industry had not produced “a statistically significant decrease in burnout.”

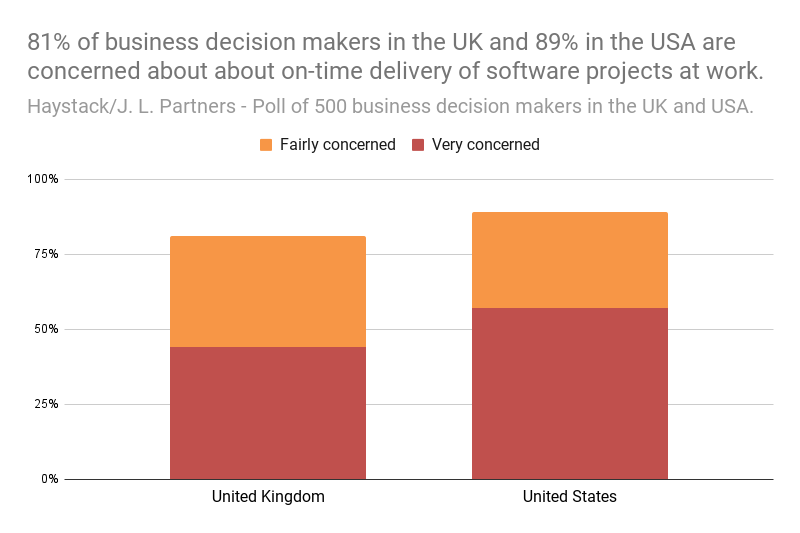

Instead, 2023 research by J.L. Partners and the software delivery ops platform Haystack found that speed is not the bottleneck; the key issue is the on-time delivery of software. The research found that 70% of software failed to be delivered on time and that 89% of US business leaders (and 81% in the UK) were concerned about the on-time delivery of software at work.

DORA metrics merely treat going faster as the endpoint and do not address the problem of on-time delivery: “This causes an endless cycle of failure to deliver on-time, leading to tougher expectations (now often enforced by productivity metrics), leading to developer burnout. Developer burnout inevitably leads to problems which result in greater failure to deliver on time.”

This plethora of developer productivity tooling, by blaming software engineers and pushing them to the limit, can lead to unhealthy outcomes within a team, for example:

- Increased levels of cortisol, the stress hormone associated with burnout and a range of adverse health effects.

- Higher levels of churn and resentment in your workforce, meaning you need to undergo the costly exercise of onboarding new hires more frequently.

Both ultimately end up making on-time delivery harder.

As the “north star” measures of the DORA research have focussed on speed and used subjective surveys to measure associations, the data presents an intrinsic bias towards factors that support this. For example, suppose a sample of the population was asked, “Are you smart?” and then “Do you work out frequently?” People who think more highly of themselves would be more likely to answer yes to both questions, but that does not establish a causal link between intelligence and working out frequently. While there may be some correlation between these two factors when measured empirically, as the mantra goes, “correlation is not causation,” and for someone looking to improve their intelligence, there may be better approaches than going to the gym and lifting weights.
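The survey example above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not a model of the DORA data: the function names, the sample size, and the `self_regard_weight` of 0.6 are hypothetical choices made only for illustration. The two true traits are generated independently, yet the self-reported answers end up correlated because both are inflated by the same self-regard.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_survey(n=20000, self_regard_weight=0.6, seed=0):
    """Return (correlation of true traits, correlation of survey answers).

    Assumption: each "yes" answer depends partly on the truth and partly on
    a shared self-regard score. The weight is illustrative, not measured.
    """
    rng = random.Random(seed)
    truth_weight = 1.0 - self_regard_weight
    true_smart, true_gym, said_smart, said_gym = [], [], [], []
    for _ in range(n):
        # The two true traits are drawn independently of each other.
        smart = int(rng.random() < 0.5)
        gym = int(rng.random() < 0.5)
        # One self-regard score per respondent influences both answers.
        self_regard = rng.random()
        p_smart = truth_weight * smart + self_regard_weight * self_regard
        p_gym = truth_weight * gym + self_regard_weight * self_regard
        true_smart.append(smart)
        true_gym.append(gym)
        said_smart.append(int(rng.random() < p_smart))
        said_gym.append(int(rng.random() < p_gym))
    return pearson(true_smart, true_gym), pearson(said_smart, said_gym)


if __name__ == "__main__":
    true_corr, reported_corr = simulate_survey()
    print(f"true traits:    {true_corr:+.3f}")
    print(f"survey answers: {reported_corr:+.3f}")
```

In this sketch the correlation between the true traits stays close to zero, while the correlation between the survey answers is clearly positive, even though neither trait influences the other.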

To add to this, research by Engprax and Survation has also found that, despite DORA metrics prioritizing speed, software engineers and the public at large agree it is the least important factor. The research finds that end users care most about data security, data accuracy, and preventing serious bugs, ranking these above factors such as getting the latest features as quickly as possible or fixing bugs as fast as possible.

The Alternative

The alternative is to fix the problem of on-time delivery itself. Don’t try to engineer around it by making teams work ever faster, as this just causes burnout, leading to more failures.

It is, of course, important to measure, but what should be measured is real-world outcomes, using measures and risk indicators suited to the risk/reward appetite of a given environment.
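As one possible illustration of an outcome measure, the sketch below computes an on-time delivery rate from committed and actual delivery dates. It is only a hedged example: the `Commitment` record, the `on_time_rate` function, the sample data, and the rule that overdue undelivered work counts as late are all assumptions made here, not a method prescribed by the research cited above.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Commitment:
    """Hypothetical record of a promised piece of work."""
    name: str
    committed: date                   # date promised to the business
    delivered: Optional[date] = None  # None means not delivered yet


def on_time_rate(commitments: List[Commitment], today: date) -> Optional[float]:
    """Share of due commitments that were delivered on or before the promise.

    Assumption: work that is undelivered and past its committed date counts
    as late; undelivered work that is not yet due is left out entirely.
    """
    due = [c for c in commitments
           if c.delivered is not None or c.committed < today]
    if not due:
        return None  # nothing to judge yet
    on_time = sum(1 for c in due
                  if c.delivered is not None and c.delivered <= c.committed)
    return on_time / len(due)


# Illustrative data only.
EXAMPLE = [
    Commitment("billing export", date(2024, 3, 1), date(2024, 2, 28)),  # on time
    Commitment("sso login", date(2024, 3, 15), date(2024, 4, 2)),       # late
    Commitment("audit log", date(2024, 4, 1)),                          # overdue
    Commitment("mobile redesign", date(2024, 6, 1)),                    # not yet due
]

if __name__ == "__main__":
    rate = on_time_rate(EXAMPLE, today=date(2024, 5, 1))
    print(f"on-time delivery rate: {rate:.0%}")
```

A measure like this is taken from delivery records rather than from self-reported surveys, and it tracks whether commitments were met rather than how fast changes were deployed.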
