Monitoring OpenStack With the ELK Stack

Elasticsearch, Logstash, and Kibana (ELK) form an open source stack that has become a leading log analysis platform. This article shows how to use it to monitor OpenStack.


OpenStack is an open source project that allows enterprises to implement private clouds. Well-known companies such as PayPal and eBay have been using OpenStack to run production environments and mission-critical services for years.

However, establishing and running a private cloud is not an easy task: it involves controlling a large, complex system assembled from multiple modules. Issues occur frequently in such IT environments, so operations teams should log and monitor system activity at all times. This helps them catch performance issues early, ideally before they affect users.

The Elasticsearch, Logstash, and Kibana (ELK) open source stack is one of the leading logging platforms thanks to its scalability, performance, and ease of use, which makes it well suited for this purpose. Here, I will discuss OpenStack monitoring and provide a step-by-step guide that shows how to retrieve, ship, and analyze the data using the ELK stack.

Retrieving the Logs

Most OpenStack services, such as Nova, Cinder, and Swift, write their logs to subdirectories of /var/log (for example, Nova’s logs are in /var/log/nova). In addition, OpenStack lets you retrieve monitoring data through its REST API and CLI. Here, we will use the API because it returns structured JSON, which simplifies logging and shipping because Logstash and Elasticsearch handle JSON well.

Authentication

To use the OpenStack APIs, you need an authentication token, which you can get from Keystone using curl:

```shell
curl -X POST -H "Content-Type: application/json" -d '{
  "auth": {
    "tenantName": "<tenant_name>",
    "passwordCredentials": {
      "username": "<username>",
      "password": "<password>"
    }
  }
}' "http://<openstack_ip>:35357/v2.0/tokens"
```

Replace <tenant_name> with the name of the project you want to monitor, <username> and <password> with your OpenStack admin credentials, and <openstack_ip> with the IP address of your OpenStack deployment.

The output:

```json
{
  "access": {
    "token": {
      "issued_at": "2016-10-30T21:12:50.000000Z",
      "expires": "2016-10-30T22:12:50Z",
      "id": "39e077949d85401282651c96d257a2a2",
      "tenant": {
        "description": "",
        "enabled": true,
        "id": "e11130035c0d484cbf7fa28862dfa867",
        "name": "<tenant_name>"
      },
      "audit_ids": [
        "n0i9wuudrlunlaebzcmgqg"
      ]
    },......
```

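The id under access.token is the token we use to access the APIs; pass it in the X-Auth-Token header of each request. Note that the token expires (in this example, one hour after issued_at), so a long-running collector has to request a new one periodically. In addition, keep the tenant ID (access.token.tenant.id), because we will use it later to monitor that tenant’s resources.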
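If you would rather script this step than run curl by hand, the sketch below builds the same Keystone v2.0 request body and pulls the token ID and tenant ID out of the response. It uses only the Python standard library; build_auth_payload, request_token, and extract_ids are hypothetical helper names, and keystone_url is whatever your Keystone address is (the curl example uses port 35357).

```python
import json
import urllib.request


def build_auth_payload(tenant_name: str, username: str, password: str) -> dict:
    """Build the Keystone v2.0 password-auth body used in the curl example."""
    return {
        "auth": {
            "tenantName": tenant_name,
            "passwordCredentials": {"username": username, "password": password},
        }
    }


def request_token(keystone_url: str, payload: dict) -> dict:
    """POST the payload to <keystone_url>/v2.0/tokens and return the parsed JSON."""
    req = urllib.request.Request(
        keystone_url.rstrip("/") + "/v2.0/tokens",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def extract_ids(response: dict) -> tuple:
    """Return (token_id, tenant_id) from a Keystone v2.0 token response."""
    token = response["access"]["token"]
    return token["id"], token["tenant"]["id"]
```

A typical call would be extract_ids(request_token("http://<openstack_ip>:35357", build_auth_payload("<tenant_name>", "<username>", "<password>"))). Because the token expires, a collector built this way would simply repeat the call before the expires time.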

Nova Metrics

As in any cloud, the core of an OpenStack cloud is Nova, the compute module, which is responsible for provisioning and managing the virtual machines. Nova monitoring can be divided into three layers: the underlying hypervisor, the individual server (VM), and the tenant.

Hypervisor metrics expose the performance of the underlying infrastructure, server metrics describe the performance of individual virtual machines, and tenant metrics show how each project’s users consume resources.

Hypervisor Metrics

Monitoring the hypervisor is very important: problems at this layer lead to broad failures and affect VM provisioning and performance. The hypervisor exposes many metrics, so you will need to pick the ones that matter most to you. We picked the following, which we believe provide the baseline visibility needed to keep the environment healthy:

- current_workload: number of tasks in progress, such as build, snapshot, and migrate
- running_vms: number of running VMs
- vcpus and vcpus_used: total and used vCPUs
- free_disk_gb: free disk capacity in GB
- free_ram_mb: free memory in MB

Together, these cover compute and storage capacity, so you can see the current load and spot resource shortages before they degrade your OpenStack cloud’s performance.

To retrieve this information, send the following request, passing the token in the X-Auth-Token header:

```
GET http://<ip>:8774/v2.1/os-hypervisors/statistics
```

The output:

```json
{
  "hypervisor_statistics": {
    "count": 1,
    "vcpus_used": 2,
    "local_gb_used": 2,
    "memory_mb": 3952,
    "current_workload": 0,
    "vcpus": 4,
    "running_vms": 2,
    "free_disk_gb": 43,
    "disk_available_least": 36,
    "local_gb": 45,
    "free_ram_mb": 2416,
    "memory_mb_used": 1536
  }
}
```
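Raw counts are easier to alert on once they are turned into utilization ratios. The sketch below computes used/total fractions for vCPUs, memory, and local disk from a response shaped like the one above; hypervisor_utilization is a hypothetical helper, and the field pairs it divides (vcpus_used/vcpus, memory_mb_used/memory_mb, local_gb_used/local_gb) are taken from that sample output.

```python
def hypervisor_utilization(stats: dict) -> dict:
    """Compute used/total ratios from an os-hypervisors/statistics response."""
    s = stats["hypervisor_statistics"]

    def ratio(used: float, total: float) -> float:
        # Guard against an empty cluster reporting zero capacity.
        return used / total if total else 0.0

    return {
        "vcpu": ratio(s["vcpus_used"], s["vcpus"]),
        "memory": ratio(s["memory_mb_used"], s["memory_mb"]),
        "disk": ratio(s["local_gb_used"], s["local_gb"]),
    }
```

For the sample output, this yields 0.5 for vCPUs (2 of 4), about 0.39 for memory (1536 of 3952 MB), and about 0.04 for disk (2 of 45 GB). Nova can overcommit vCPUs, so the vCPU ratio may legitimately exceed 1.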

Server Metrics

Nova server metrics describe individual instances running on the compute nodes. Monitoring the instances helps ensure that load is distributed evenly and lets you track each instance’s network activity and CPU time.

To retrieve the diagnostics for a specific server, use the following:

```
GET http://<ip>:8774/v2.1/<tenant_id>/servers/<server_id>/diagnostics
```

The output:

```json
{
  "tap884a7653-01_tx": 4956,
  "vda_write": 9477120,
  "tap884a7653-01_rx_packets": 64,
  "tap884a7653-01_tx_errors": 0,
  "tap884a7653-01_tx_drop": 0,
  "tap884a7653-01_tx_packets": 88,
  "tap884a7653-01_rx_drop": 0,
  "vda_read_req": 177,
  "vda_read": 1609728,
  "vda_write_req": 466,
  "memory-actual": 524288,
  "tap884a7653-01_rx_errors": 0,
  "memory": 524288,
  "memory-rss": 271860,
  "tap884a7653-01_rx": 8062,
  "cpu0_time": 21850000000,
  "vda_errors": -1
}
```
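Most of these values look like cumulative counters (bytes and packets on the tap interface, bytes and requests on the vda disk, CPU time), so a single sample says little on its own. Assuming that holds for your hypervisor driver, the sketch below turns two samples taken interval_s seconds apart into per-second rates. counter_rates is a hypothetical helper; it skips the memory keys, which are point-in-time values, and treats negative values such as vda_errors = -1 as "not reported", which is an assumption.

```python
def counter_rates(prev: dict, curr: dict, interval_s: float) -> dict:
    """Per-second rates for counter-like keys present in both diagnostics samples."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    rates = {}
    for key, new in curr.items():
        old = prev.get(key)
        # Memory values are gauges, not counters, so a rate makes no sense.
        if key.startswith("memory"):
            continue
        if not isinstance(new, (int, float)) or not isinstance(old, (int, float)):
            continue
        # Assumption: a negative value (e.g. vda_errors = -1) means "not reported".
        if new < 0 or old < 0:
            continue
        # A decreasing counter suggests a reset, e.g. the instance restarted.
        if new < old:
            continue
        rates[key] = (new - old) / interval_s
    return rates
```

With the 2-second polling interval used in the Logstash configuration later in this article, prev and curr would simply be two consecutive diagnostics responses for the same server.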

To list the servers in a project:

```
GET http://<ip>:8774/v2.1/<tenant_id>/servers
```

The output is the list of servers in the project:

```json
{
  "servers": [
    {
      "id": "6ba298fb-9a8f-42da-ba67-c7fa6fea0528",
      "links": [
        {
          "href": "http://localhost:8774/v2.1/8e5274818a1b49268c55e5c94b3aa654/servers/6ba298fb-9a8f-42da-ba67-c7fa6fea0528",
          "rel": "self"
        },
        {
          "href": "http://localhost:8774/8e5274818a1b49268c55e5c94b3aa654/servers/6ba298fb-9a8f-42da-ba67-c7fa6fea0528",
          "rel": "bookmark"
        }
      ],
      "name": "test-instance-2"
    },
    {
      "id": "b7f7d288-f2d2-4c5e-b1b7-d50d16508560",
      "links": [
        {
          "href": "http://localhost:8774/v2.1/8e5274818a1b49268c55e5c94b3aa654/servers/b7f7d288-f2d2-4c5e-b1b7-d50d16508560",
          "rel": "self"
        },
        {
          "href": "http://localhost:8774/8e5274818a1b49268c55e5c94b3aa654/servers/b7f7d288-f2d2-4c5e-b1b7-d50d16508560",
          "rel": "bookmark"
        }
      ],
      "name": "test-instance-1"
    }
  ]
}
```
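The "self" link of each server already has the form <base>/v2.1/<tenant_id>/servers/<server_id>, so appending /diagnostics gives the per-server diagnostics URL. The sketch below builds a map from server ID to that URL; server_diagnostics_urls is a hypothetical helper. It keys by ID rather than name because Nova does not require server names to be unique, and it keeps the host exactly as Nova advertises it (localhost in this sample), which you may need to rewrite if your collector runs elsewhere.

```python
def server_diagnostics_urls(servers_response: dict) -> dict:
    """Map each server ID to its diagnostics URL, built from its 'self' link."""
    urls = {}
    for server in servers_response["servers"]:
        self_link = next(
            (link["href"] for link in server.get("links", []) if link.get("rel") == "self"),
            None,
        )
        if self_link is None:
            continue  # no usable link; skip this server
        urls[server["id"]] = self_link + "/diagnostics"
    return urls
```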

Tenant Metrics

A tenant (or project) is a group of users with access to specific resources, and it is where resource quotas are defined. Monitoring each project’s quotas together with the instances inside it helps you identify when a quota needs to change, based on resource allocation trends.
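As a minimal sketch of that comparison, the function below takes a quota_set like the one returned by the os-quota-sets request in this section, plus a usage dict that you assemble yourself (for instance, by counting the servers returned by the /servers request), and reports the remaining capacity and the fraction used for each quota. quota_headroom and the usage figures in the test are hypothetical; quotas of -1, such as fixed_ips, are treated as unlimited, following Nova’s convention.

```python
def quota_headroom(quota_set: dict, usage: dict) -> dict:
    """Remaining capacity and used fraction for each quota that has a usage value."""
    report = {}
    for name, used in usage.items():
        limit = quota_set.get(name)
        # Skip quotas that are missing or unlimited (-1).
        if limit is None or limit < 0:
            continue
        report[name] = {
            "remaining": limit - used,
            # A zero quota is reported as fully used.
            "used_fraction": used / limit if limit else 1.0,
        }
    return report
```

Alerting when used_fraction crosses a threshold such as 0.8 is one simple way to spot projects that are about to hit their limits.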

To get the quota for a tenant, for example:

```
GET http://<ip>:8774/v2.1/os-quota-sets/<tenant_id>
```

The output:

```json
{
  "quota_set": {
    "injected_file_content_bytes": 10240,
    "metadata_items": 128,
    "server_group_members": 10,
    "server_groups": 10,
    "ram": 51200,
    "floating_ips": 10,
    "key_pairs": 100,
    "id": "8e5274818a1b49268c55e5c94b3aa654",
    "instances": 10,
    "security_group_rules": 20,
    "injected_files": 5,
    "cores": 20,
    "fixed_ips": -1,
    "injected_file_path_bytes": 255,
    "security_groups": 10
  }
}
```

The Fourth Component: RabbitMQ

In addition to these three layers, Nova components use RabbitMQ for both remote procedure calls (RPCs) and internal communication. It is crucial to log and monitor RabbitMQ’s performance because it is the default OpenStack messaging system; if it fails, it disrupts your whole cloud deployment.

We will collect the following metrics using rabbitmqctl:

count: number of active queues. Command:

```shell
rabbitmqctl list_queues name | wc -l
```

memory: memory used by each queue, in bytes. Command:

```shell
rabbitmqctl list_queues name memory | grep compute
```

Sample output (this listing includes all queues; with | grep compute, only the compute lines would remain):

```
cinder-scheduler 14200
cinder-scheduler.dc-virtualbox 13984
cinder-scheduler_fanout_67f25e5fa06646dc86ae392673946734 22096
cinder-volume 13984
cinder-volume.dc-virtualbox@lvmdriver-1 14344
cinder-volume_fanout_508fab760c8b4449b77ec714efd0810a 13984
compute 13984
compute.dc-virtualbox 14632
compute_fanout_faf59434422844628c489ed035f68407 13984
```

consumers: number of consumers per queue. Command:

```shell
rabbitmqctl list_queues name consumers | grep compute
```
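The two-column output of these commands is easy to parse directly, which is handy for testing thresholds before wiring them into Logstash. The sketch below parses "name value" lines into a dict and sums the queues whose names contain a substring, mirroring the grep step. parse_queue_table and total_for are hypothetical helpers; lines that are not exactly a name followed by an integer are ignored, on the assumption that some rabbitmqctl versions print an informational header line (which, incidentally, wc -l would also count).

```python
def parse_queue_table(output: str) -> dict:
    """Parse 'rabbitmqctl list_queues name <column>' output into {queue: int}."""
    result = {}
    for line in output.splitlines():
        parts = line.split()  # handles both tabs and spaces
        # Skip headers or anything that is not '<name> <integer>'.
        if len(parts) != 2 or not parts[1].isdigit():
            continue
        result[parts[0]] = int(parts[1])
    return result


def total_for(table: dict, substring: str) -> int:
    """Sum the values of queues whose name contains substring (like grep)."""
    return sum(value for name, value in table.items() if substring in name)
```

For the sample memory output, total_for(parse_queue_table(output), "compute") returns 42600 (13984 + 14632 + 13984).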

Log Shipping

The next step is to aggregate all of the logs and ship them to Elasticsearch. Here, we will present two methods: one using Logstash and the other using an Amazon S3 bucket.

Using Logstash

One of the most fundamental tools for moving logs is Logstash, one of the three components of the ELK stack mentioned earlier. A Logstash configuration specifies inputs, filters, and outputs; together, they define how logs are transported and transformed.


The Logstash configuration file can look like the following.

The input block below defines the sources of log data and how each one is collected, including how often the command runs (interval, in seconds).

```
input {
  exec {
    command => "curl -X GET -H 'X-Auth-Token: 4357b37bbfed49e089099b8aca5b1bd7' http://<ip>:8774/v2.1/os-hypervisors/statistics"
    interval => 2
    type => "hypervisor-stats"
  }
  exec {
    command => "curl -X GET -H 'X-Auth-Token: 4357b37bbfed49e089099b8aca5b1bd7' http://<ip>:8774/v2.1/<tenant-id>/servers/<server-id>/diagnostics"
    interval => 2
    type => "instance-1"
  }
  exec {
    command => "curl -X GET -H 'X-Auth-Token: 4357b37bbfed49e089099b8aca5b1bd7' http://localhost:8774/v2.1/<tenant-id>/servers/<server-id>/diagnostics"
    interval => 2
    type => "instance-2"
  }
  exec {
    command => "curl -X GET -H 'X-Auth-Token: 4357b37bbfed49e089099b8aca5b1bd7' http://localhost:8774/v2.1/os-quota-sets/<tenant-id>"
    interval => 2
    type => "tenant-quota"
  }
  exec {
    command => "sudo rabbitmqctl list_queues name | wc -l"
    interval => 2
    type => "rabbit-mq-count"
  }
  exec {
    command => "sudo rabbitmqctl list_queues name memory | grep compute"
    interval => 2
    type => "rabbit-mq-memory"
  }
  exec {
    command => "sudo rabbitmqctl list_queues name consumers | grep compute"
    interval => 2
    type => "rabbit-mq-consumers"
  }
}
```

The filters below process the logs in the Logstash pipeline and can drop, convert, or even replace parts of a log.

```
filter {
  if [type] == "hypervisor-stats" {
    # Parse the JSON API response into fields
    json {
      source => "message"
    }
  } else if [type] == "instance-1" {
    json {
      source => "message"
    }
  } else if [type] == "instance-2" {
    json {
      source => "message"
    }
  } else if [type] == "tenant-quota" {
    json {
      source => "message"
    }
  } else if [type] == "rabbit-mq-count" {
    # Grok pattern names are case-sensitive: NUMBER, not number
    grok {
      match => { "message" => "%{NUMBER:count}" }
    }
    mutate {
      convert => { "count" => "integer" }
    }
  } else if [type] == "rabbit-mq-memory" {
    # One event per output line (one queue per event)
    split {}
    csv {
      columns => ["name", "amount"]
      separator => " "
      convert => { "amount" => "integer" }
    }
  } else if [type] == "rabbit-mq-consumers" {
    split {}
    csv {
      columns => ["name", "count"]
      separator => " "
      convert => { "count" => "integer" }
    }
  }
  # Account token field used when shipping to Logz.io
  mutate {
    add_field => { "token" => "xmqhxxbnvnxanecxeffmnejrelirjmaw" }
  }
}
```

The output block defines where the data will be sent:

```
output {
  elasticsearch {
    hosts => ["<ip_address_of_elasticsearch>:9200"]
    codec => "json_lines"
  }
}
```

If you are a Logz.io user, you can use the following output instead:

```
output {
  tcp {
    host => "listener.logz.io"
    port => 5050
    codec => json_lines
  }
}
```

Note: This Logstash example is not ideal because it works with a fixed set of tenants and instances. If your environment is highly dynamic, you will need to develop a mechanism that automatically discovers tenants and instances and updates the configuration accordingly.
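One possible starting point for such a mechanism is to generate the input section from the current server list instead of writing it by hand. The sketch below renders one exec block per server ID (for example, the IDs returned by the /servers request); render_exec_inputs is a hypothetical helper. As a design choice, every block shares the type "instance-diagnostics" and adds a server_id field, so a single filter branch on that type could replace the per-instance branches; that filter change, and regenerating the file whenever the server list or the token changes, are left to you.

```python
def render_exec_inputs(base_url: str, tenant_id: str, server_ids: list,
                       token: str, interval: int = 2) -> str:
    """Render a Logstash input section with one exec block per server.

    Every block shares the type "instance-diagnostics" and tags its events
    with a server_id field, so a single filter branch can handle them all.
    """
    blocks = []
    for server_id in server_ids:
        url = f"{base_url}/v2.1/{tenant_id}/servers/{server_id}/diagnostics"
        blocks.append(
            "  exec {\n"
            f"    command => \"curl -X GET -H 'X-Auth-Token: {token}' {url}\"\n"
            f"    interval => {interval}\n"
            "    type => \"instance-diagnostics\"\n"
            f"    add_field => {{ \"server_id\" => \"{server_id}\" }}\n"
            "  }"
        )
    return "input {\n" + "\n".join(blocks) + "\n}\n"
```

Writing the result to a file that Logstash loads, and restarting or reloading Logstash when it changes, completes the loop.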

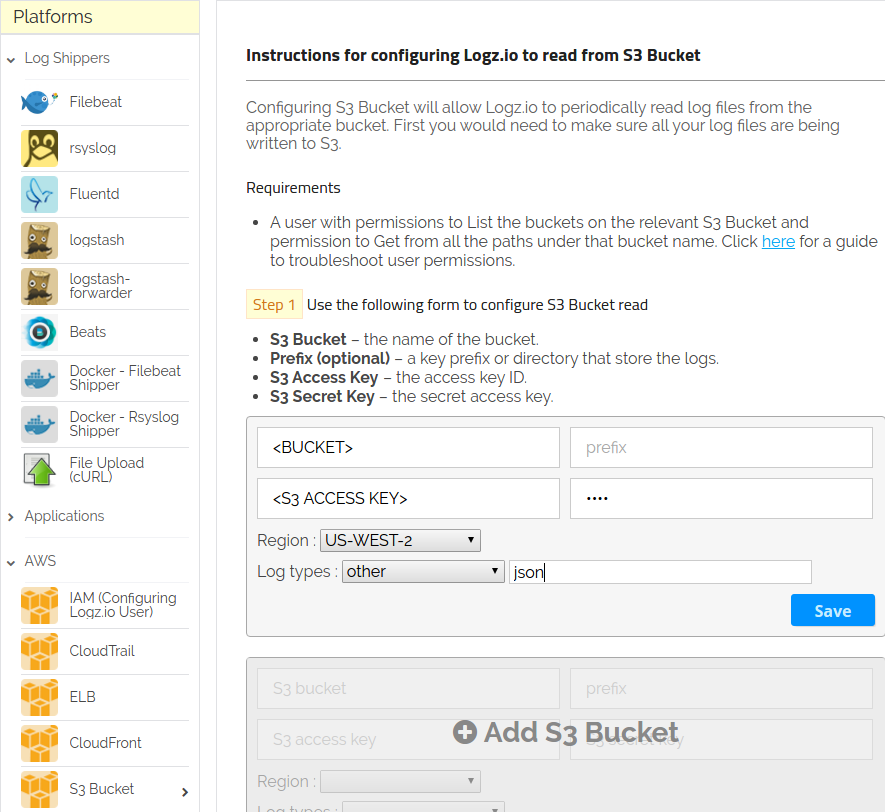

Using S3

There are several ways to store files in S3, but Logz.io makes it easy to configure an S3 bucket as a source and ship its logs to the ELK stack, so you do not have to automate the export and import of data yourself.

In this example, we will reuse our previous Logstash configuration to store the logs in an S3 bucket that the Logz.io ELK stack continuously tracks. To do so, we change the last part of the Logstash configuration (the output section) to point to the S3 bucket:

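```
# ... input and filter sections as above ...

output {
  s3 {
    access_key_id => "<s3_access_key>"
    secret_access_key => "<s3_secret_key>"
    region => "<region>"
    bucket => "<bucket>"
    size_file => 2048   # rotate the file once it reaches 2048 bytes
    time_file => 5      # or every 5 minutes
  }
}
```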

After you run ./bin/logstash -f <path_to_the_logstash_config>, the files will appear in the bucket, ready to be shipped to Elasticsearch.

Next, log in to your Logz.io account and select Log Shipping. Then, look for the S3 Bucket option in the left-hand menu and fill out the input fields with the required information, as shown below:

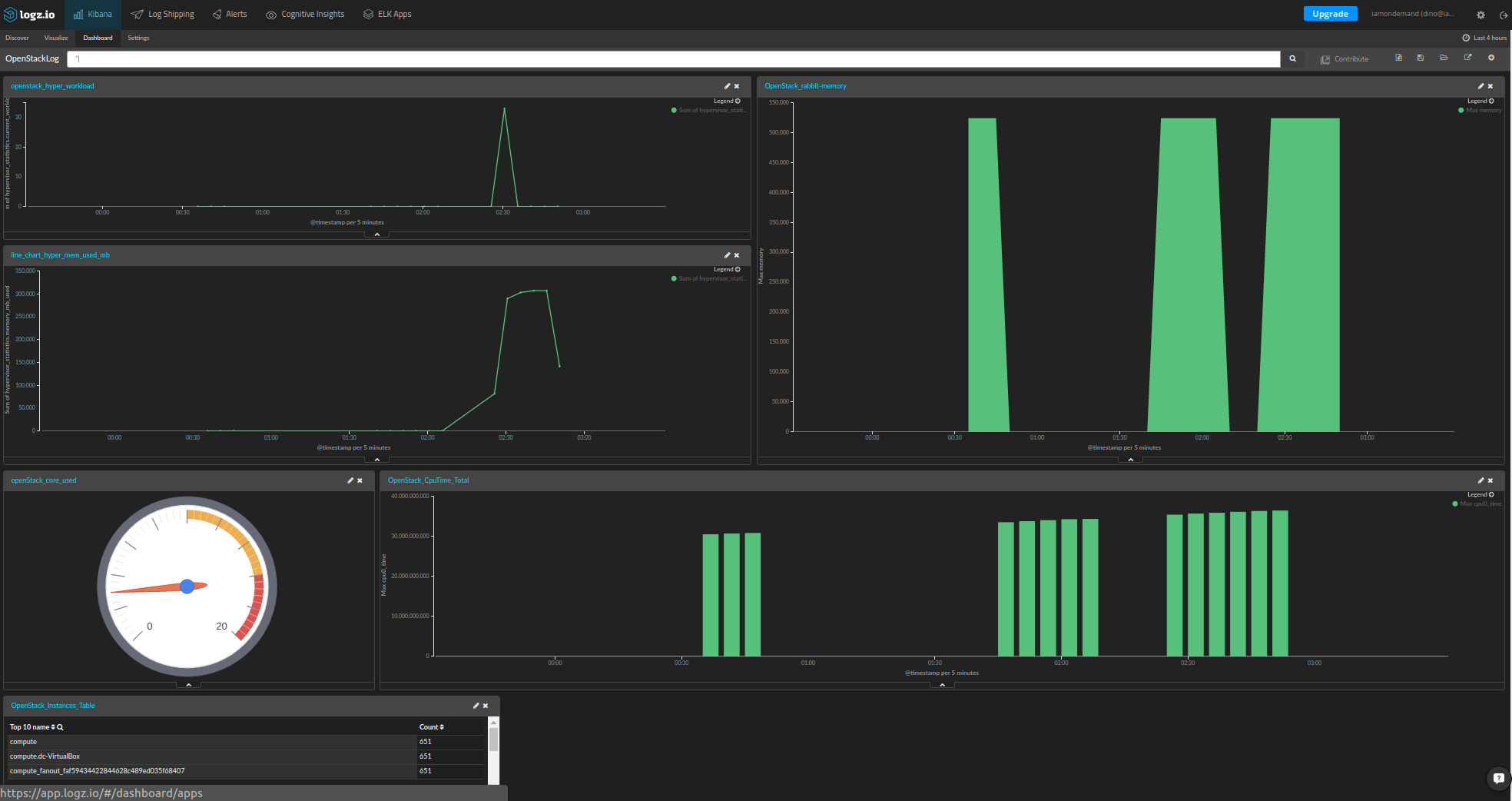

Build the Dashboard

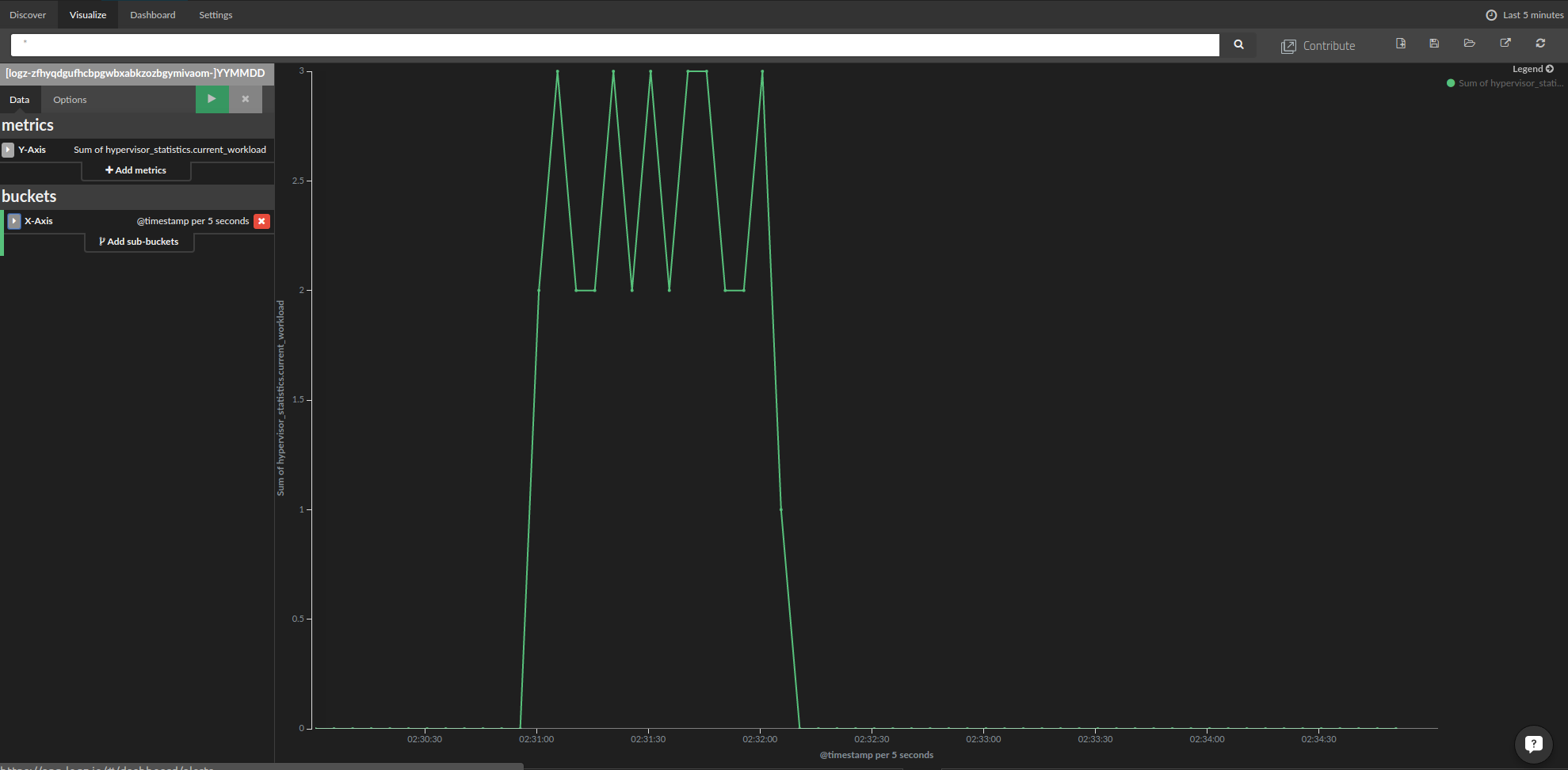

Now we are ready to present the shipped metrics data, starting with the hypervisor metrics:

- To create the chart, click Visualize in the menu at the top of the page and select the type of chart you want to use. In our case, we used a line chart.
- In the metrics settings, select the type of aggregation. We used the sum of the hypervisor current workload field.
- In the buckets settings, we selected Date Histogram for the X-axis from the dropdown menu and left the interval set to Automatic.

The resulting chart is shown below:

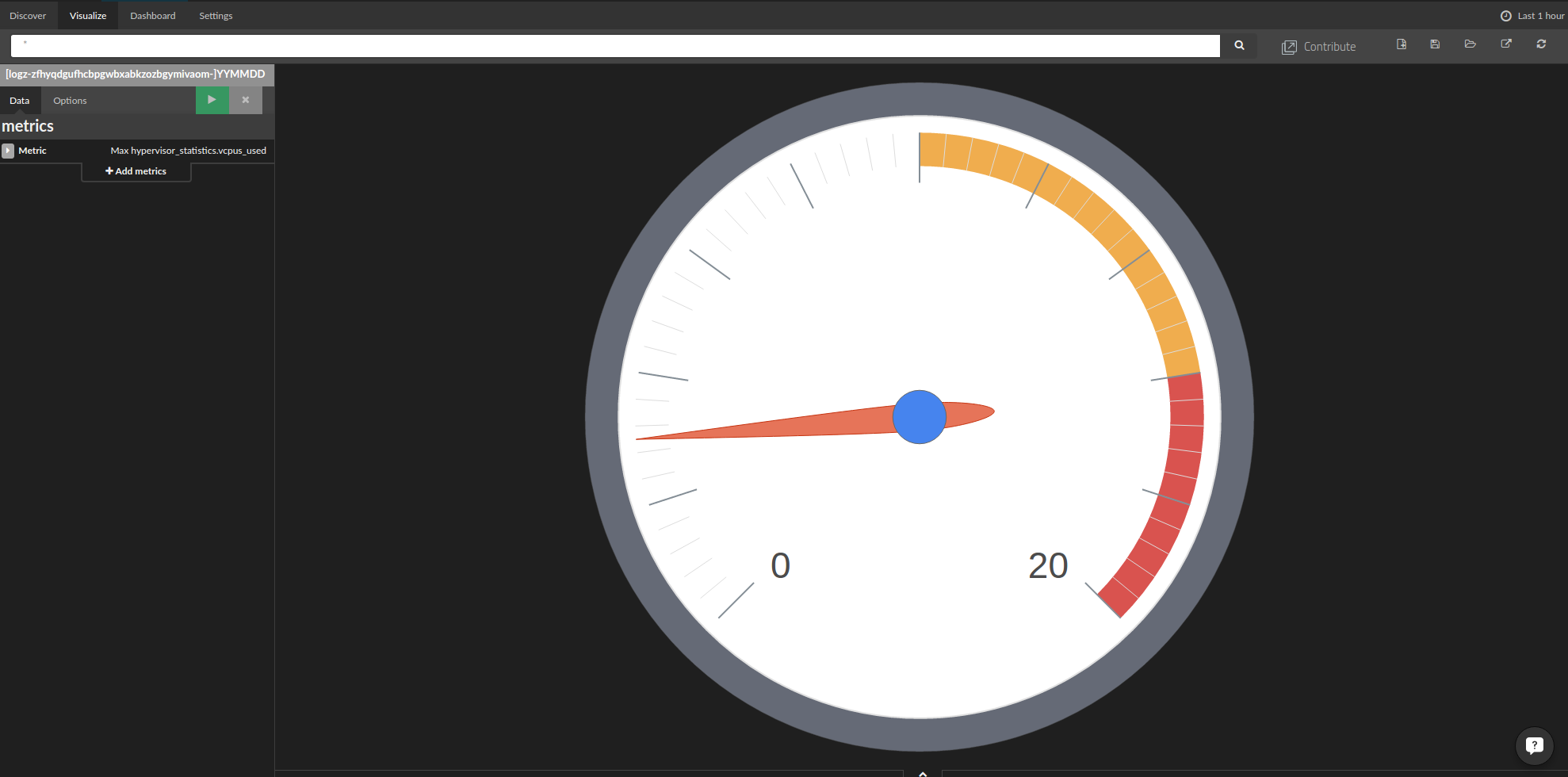
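For readers who want the same numbers outside Kibana, this chart corresponds roughly to an Elasticsearch date_histogram aggregation with a sum sub-aggregation. The sketch below builds that request body as a Python dict. It assumes the hypervisor JSON was parsed into fields (so the field is hypervisor_statistics.current_workload), that events carry the default @timestamp field, and a fixed interval in place of Kibana’s automatic choice; fixed_interval requires Elasticsearch 7.2 or later (older versions use interval). workload_histogram_query is a hypothetical helper.

```python
def workload_histogram_query(interval: str = "1m") -> dict:
    """Request body: sum of current_workload per date_histogram bucket."""
    return {
        "size": 0,  # only the aggregation results are needed, not the hits
        # match_phrase works whether "type" is mapped as text or keyword
        "query": {"match_phrase": {"type": "hypervisor-stats"}},
        "aggs": {
            "per_interval": {
                "date_histogram": {"field": "@timestamp", "fixed_interval": interval},
                "aggs": {
                    "workload": {
                        "sum": {"field": "hypervisor_statistics.current_workload"}
                    }
                },
            }
        },
    }
```

Serialized with json.dumps, this body can be sent to the index’s _search endpoint with any HTTP client.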

We followed almost the same steps to create charts for the hypervisor memory usage and the number of cores in use (hypervisor_statistics.vcpus_used).

To create the above image, we defined the minimum and maximum values so that we can easily see whether the tenants are about to hit their core limits.

Kibana’s flexibility on top of the OpenStack logs in Elasticsearch allows us to create a comprehensive, rich dashboard that helps us control and monitor our cloud.

Conclusion

By its nature, an OpenStack cloud is a complex, evolving system that continuously generates vast amounts of log data. Without a robust, structured monitoring system, however, much of this data is hard to access.

The ELK stack not only provides the robustness and real-time performance required but, when correctly deployed, is also flexible enough to support the ongoing monitoring and control that cloud operations teams need.

Published at DZone with permission of Asaf Yigal, DZone MVB. See the original article here.

Opinions expressed by DZone contributors are their own.
