Modernizing an IBM-MQ Application With Kong and TriggerMesh on Kubernetes

Expose a REST API for your mainframe applications with the Kong API gateway and an event-driven flow implemented in Kubernetes using the TriggerMesh API.

Introduction

While it seems that everyone is migrating their applications to the cloud, there are still a lot of legacy applications that cannot be moved easily. This is the case for old systems of record running on mainframes that you interact with using IBM-MQ. With the enterprise application landscape now being a set of APIs, exposing a REST API in front of IBM-MQ, so that your system of record is available to the modern world, is crucial. Typically, this is done with expensive integration solutions like MuleSoft.

In this post, however, we show how you can use the Kong API Gateway and the open-source TriggerMesh system to expose a REST API in front of an event-driven integration that interacts with IBM-MQ. In other words, you can front your asynchronous event flow with a synchronous API. This is the same thing you can do with MuleSoft, but using open-source technologies.

This is particularly relevant for architects and developers who need to bridge legacy systems into a cloud-native architecture while adopting a DevOps mindset. It is also useful for enterprises moving away from systems like MuleSoft in favor of an open-source system that can leverage event-driven infrastructure, whether it is Kafka-based or running on Kubernetes.

Architecture

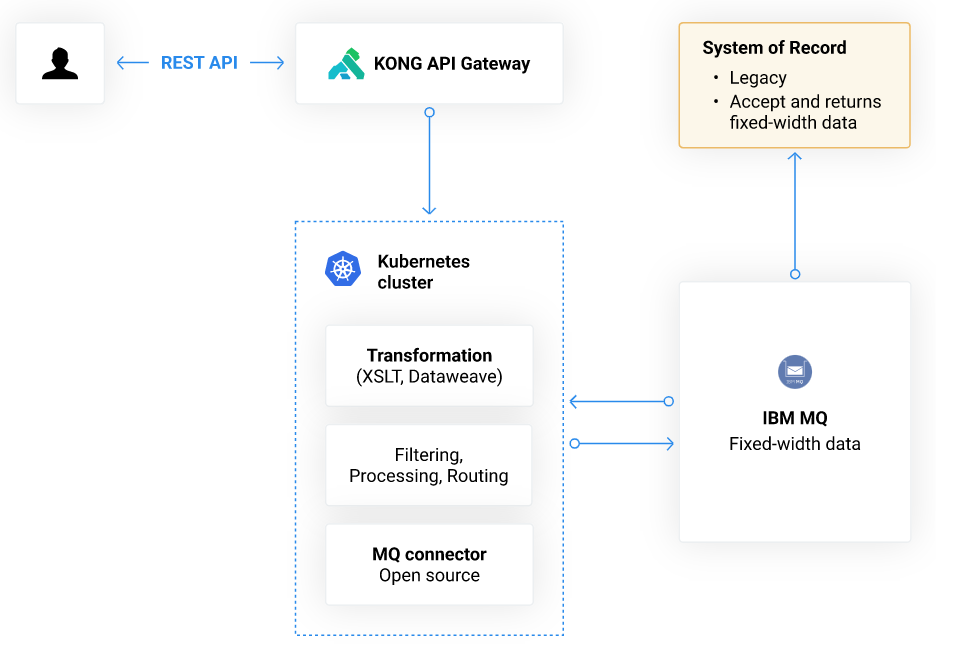

So how would you modernize an old integration with IBM-MQ? How would you create a REST API for it and how would you run it in Kubernetes?

The figure above shows you how. We are going to:

Use Kong to expose a REST endpoint that will generate a CloudEvent

Run TriggerMesh in a Kubernetes cluster with Knative

Use the TriggerMesh declarative API for transformation and for the connection with MQ

First, a REST Endpoint

To expose a REST API in front of IBM-MQ, you can use Kong instead of a costly MuleSoft Anypoint platform.

Kong gives you the “REST” mechanics, but to plug it into TriggerMesh and generate a CloudEvent, we developed a Kong plugin. This plugin transforms a REST request into a CloudEvent. The CloudEvents specification is the lingua franca of Knative (now part of CNCF) and TriggerMesh, and it lets you benefit from features such as auto-scaling and event routing. The Kong configuration would look like this:

    services:
    - name: dispatcher
      url: http://synchronizer-dispatcher.default.svc.cluster.local
      routes:
      - name: bar-route
        paths:
        - /bar
        plugins:
        - name: ce-plugin
          config:
            eventType: io.triggermesh.flow.bar

This defines a Kong service called dispatcher. For requests made to the /bar route, the plugin transforms the body of the request into a CloudEvent of type io.triggermesh.flow.bar and forwards it to the URL of the dispatcher service.

The net result of this plugin is that a simple curl request posting some JSON data will emit a CloudEvent with some attributes such as id, source, type, and timestamp, as can be seen in the picture below.

curl -v $KONG_ADDRESS -d '{"hello":"CloudEvents"}' -H "Content-Type: application/json"

With this Kong transformation, we are bridging the REST endpoint exposed via Kong to an event-driven system managed by Kubernetes. We can then interact with IBM-MQ.
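To make the transformation concrete, here is a minimal Python sketch of the kind of wrapping described above: a JSON request body goes in, and a CloudEvents 1.0 envelope comes out. This is not the plugin's code. The function name `to_cloudevent` and the `source` value `/bar` are assumptions made for illustration; only the event type comes from the configuration shown earlier.

```python
import json
import uuid
from datetime import datetime, timezone


def to_cloudevent(body: bytes, event_type: str, source: str) -> dict:
    """Wrap a JSON request body in a CloudEvents 1.0 structured-mode envelope."""
    return {
        "specversion": "1.0",             # required by the CloudEvents spec
        "id": str(uuid.uuid4()),          # unique per event
        "source": source,                 # hypothetical: the route that was called
        "type": event_type,               # e.g. the eventType set in the Kong config
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": json.loads(body),         # the original REST payload
    }


# Same payload as the curl example
event = to_cloudevent(b'{"hello":"CloudEvents"}', "io.triggermesh.flow.bar", "/bar")
print(json.dumps(event, indent=2))
```

The `id` and `time` values differ on every call, which is why the sketch prints the event rather than showing a fixed output.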

Second, an IBM-MQ Connector in Kubernetes

In the latest release of TriggerMesh, we shipped an IBM-MQ source and target. All of it runs in Kubernetes and is available as a declarative API, just like any Kubernetes workload. You can find the complete demo in a GitHub repository. Here I will just highlight the main steps.

A sample IBM-MQ source manifest looks like this:

    apiVersion: sources.triggermesh.io/v1alpha1
    kind: IBMMQSource
    metadata:
      name: mq-output-channel
    spec:
      channelName: DEV.APP.SVRCONN
      connectionName: ibm-mq.default.svc.cluster.local(1414)
      credentials:
        password:
          valueFromSecret:
            key: password
            name: ibm-mq-secret
        username:
          valueFromSecret:
            key: username
            name: ibm-mq-secret
      queueManager: QM1
      queueName: DEV.QUEUE.2
      sink:
        ref:
          apiVersion: flow.triggermesh.io/v1alpha1
          kind: Synchronizer
          name: ibm-mq

The connectionName points to your IBM-MQ server. The credentials field references a Kubernetes Secret that holds the username and password. The queue manager and queue are specified, and you set a sink as the destination for the events that you consume from MQ.

Just like that, with a declarative API, you consume from a legacy system and emit an event in the CNCF CloudEvents format. In addition, this consumer is containerized and managed by Kubernetes.

The same thing can be done to produce events into IBM-MQ using what TriggerMesh calls a Target. You can find the API specification in the documentation.

Finally, Add a Synchronizer and Transformations

To bring it all together, we have to deal with synchronization. REST exposes a synchronous API, whereas event-driven systems are asynchronous by nature. MuleSoft, for example, has long offered an IBM-MQ connector that leverages the correlation and reply-to metadata of MQ messages to build a synchronous connector. In TriggerMesh, you can now do the same declaratively, with open-source software, and in Kubernetes.
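The correlation idea can be sketched in a few lines of Python. This is an illustrative in-memory model, not the TriggerMesh Synchronizer implementation: the `Synchronizer` class, its `request` and `on_reply` methods, and the `fake_mq_send` stand-in for the MQ round trip are all hypothetical names. The caller blocks until a reply carrying the same correlation ID arrives, or until a timeout expires.

```python
import threading
import uuid


class Synchronizer:
    """Turns an asynchronous request/reply exchange into a blocking call."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pending = {}  # correlation ID -> (completion flag, result slot)

    def request(self, send, payload, timeout=5.0):
        correlation_id = str(uuid.uuid4())
        done, slot = threading.Event(), {}
        # Register before sending so that a fast reply is never missed.
        with self._lock:
            self._pending[correlation_id] = (done, slot)
        try:
            send(correlation_id, payload)
            if not done.wait(timeout):
                raise TimeoutError(f"no reply for correlation ID {correlation_id}")
            return slot["reply"]
        finally:
            with self._lock:
                self._pending.pop(correlation_id, None)

    def on_reply(self, correlation_id, reply):
        """Called by the consumer side; returns False for unknown IDs."""
        with self._lock:
            entry = self._pending.get(correlation_id)
        if entry is None:
            return False
        done, slot = entry
        slot["reply"] = reply
        done.set()
        return True


sync = Synchronizer()


def fake_mq_send(correlation_id, payload):
    # Hypothetical backend: replies 50 ms later with the payload upper-cased.
    threading.Timer(0.05, sync.on_reply, args=(correlation_id, payload.upper())).start()


result = sync.request(fake_mq_send, "hello")
print(result)  # HELLO
```

A reply with an unknown or expired correlation ID is simply dropped, which is one reasonable choice among several; a real deployment also has to decide what to do with late replies.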

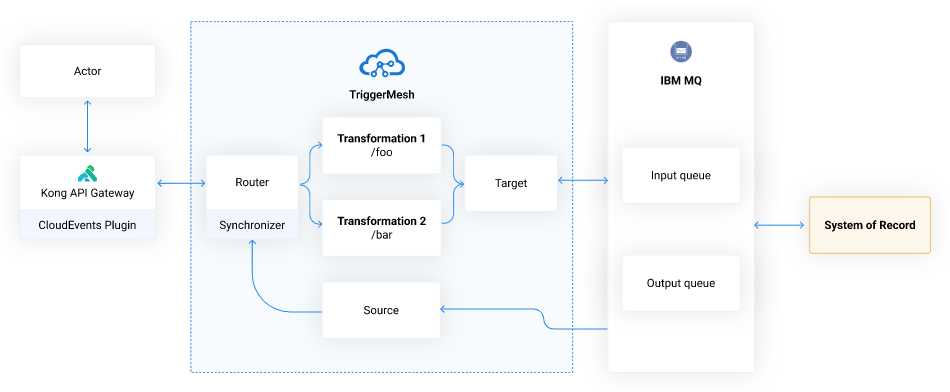

The diagram above represents the full flow of the system. You can try it for yourself with the manifests available at https://github.com/triggermesh/kong-ce-demo/tree/main/3-mq. To make testing easier, you may want to use the TriggerMesh DSL. The flow also showcases the ability to apply different types of transformations depending on the route being called via REST. Since each route can be configured to generate a different event type, the event broker can route events to different transformation functions before they reach IBM-MQ.

Think about fixed-width to JSON transformations, COBOL copybook transformations, DataWeave transformations, and even good old XSLT transformations.
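As a rough illustration of routing by event type, the Python sketch below selects a transformation based on the `type` attribute and converts between JSON and a fixed-width record. The record layout, the second event type `io.triggermesh.flow.raw`, and all function names are assumptions made for this example. In the demo, this routing is handled by the event broker and transformation components rather than by hand-written code like this.

```python
import json

# Hypothetical fixed-width layout: (field name, width in characters)
LAYOUT = [("account", 8), ("name", 20), ("balance", 10)]


def json_to_fixed_width(data: dict) -> str:
    """Pad (or truncate) each field to its width and concatenate."""
    return "".join(str(data.get(name, "")).ljust(width)[:width] for name, width in LAYOUT)


def fixed_width_to_json(record: str) -> dict:
    """Slice a fixed-width record back into named fields."""
    fields, offset = {}, 0
    for name, width in LAYOUT:
        fields[name] = record[offset:offset + width].strip()
        offset += width
    return fields


# One transformation per event type, mirroring one event type per Kong route.
TRANSFORMS = {
    "io.triggermesh.flow.bar": json_to_fixed_width,  # type used in the Kong config
    "io.triggermesh.flow.raw": json.dumps,           # hypothetical pass-through route
}


def transform_for_mq(event: dict) -> str:
    try:
        transform = TRANSFORMS[event["type"]]
    except KeyError:
        raise ValueError(f"no transformation registered for {event['type']!r}")
    return transform(event["data"])


event = {
    "type": "io.triggermesh.flow.bar",
    "data": {"account": "12345", "name": "Ada", "balance": 100},
}
record = transform_for_mq(event)
print(repr(record))
print(fixed_width_to_json(record))
```

Note that the round trip is lossy with respect to types: the balance goes in as a number and comes back as the string "100", which is typical of fixed-width formats.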

Conclusion

Moving to the cloud does not mean throwing away decades of enterprise effort, performance optimization, workflows, and systems of record. You do not need to lift and shift everything at once. What you can do is modernize your approach to software development and start bringing new technologies into your entire software and infrastructure stack.

By doing so, you can extend the lifetime of legacy systems like IBM-MQ or Oracle DB and make them more relevant in the cloud era.

In this post, we showed you a practical way to build a REST interface in front of a system of record that you interact with via IBM-MQ. All components are configured via declarative APIs implemented as extensions to the Kubernetes API. Such a modernization gives you a slew of features derived from Kubernetes: role-based access control (RBAC), logging, monitoring, auto-scaling, and the ability to manage your integration with a GitOps workflow. In addition, it can save you a considerable amount of money by moving away from costly proprietary integration solutions and embracing open-source systems like Kong, TriggerMesh, and Kubernetes.

Published at DZone with permission of Sebastien Goasguen, DZone MVB. See the original article here.
