Migrate HDFS Data to Azure

A developer and Hadoop expert walks through the processes he and his team used to transfer their data over the network with TLS encryption when moving to Azure.

In the middle of last year, my team decided to move our Hadoop workloads, including our data and applications, to Azure. This article describes some of the best practices we used in migrating on-premises HDFS data to Azure HDInsight. Below are the two approaches we adopted to transfer the data over the network with TLS encryption.

Method 1

The Azure ExpressRoute service provides a private connection between Azure and on-premises data centers. ExpressRoute offers higher security, reliability, and speed, with lower latency, than typical connections over the Internet. We took advantage of Data Factory's native data copy functionality, using the integration runtime to migrate the data. Data Factory's self-hosted integration runtime (SHIR) should be installed on a pool of Windows VMs on an Azure virtual network. The pool can be scaled out across multiple VMs to fully utilize the available network bandwidth and storage IOPS.

This approach is recommended when large volumes of data (that is, more than 50 GB) need to be migrated for legacy applications.
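To get a feel for why link bandwidth matters at these volumes, the time for a bulk copy can be estimated with simple arithmetic. The sketch below is only a back-of-the-envelope helper: the function name `estimate_transfer_hours`, the decimal unit convention, and the default efficiency factor of 0.7 are assumptions for illustration, not figures from our migration.

```shell
#!/usr/bin/env bash
# Rough transfer-time estimate.
# Arguments: size in GB (decimal), link speed in Mbit/s, and an optional
# efficiency factor (assumed fraction of the link that is actually usable).
estimate_transfer_hours() {
  local size_gb="$1" link_mbps="$2" efficiency="${3:-0.7}"
  awk -v gb="$size_gb" -v mbps="$link_mbps" -v eff="$efficiency" 'BEGIN {
    megabits = gb * 8 * 1000            # 1 GB = 8000 megabits in decimal units
    seconds  = megabits / (mbps * eff)  # time at the effective link rate
    printf "%.1f\n", seconds / 3600     # report hours with one decimal place
  }'
}

estimate_transfer_hours 50 100 1.0   # 50 GB over 100 Mbit/s at full efficiency
estimate_transfer_hours 10000 1000   # 10 TB over 1 Gbit/s at the assumed 70%
```

Real throughput also depends on file sizes, storage IOPS, and how many SHIR nodes are copying in parallel, so treat the result as an order-of-magnitude figure only.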

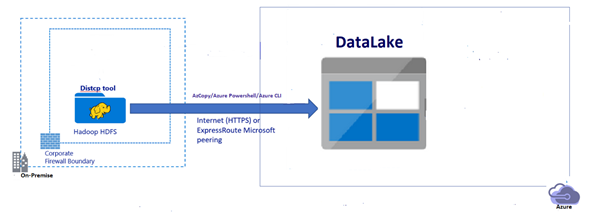

Method 2

Transfer your data to Azure storage over a regular internet connection using one of several tools, such as AzCopy, Azure PowerShell, or the Azure CLI.

This approach suits smaller volumes of data (2-5 GB or less), ad-hoc transfers, PoC environment data, and data that needs no transformation on its way to Azure.
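The size guidance for the two methods can be written down as a small helper. This is a sketch of the rule of thumb stated above, not a tool we used: the function names `dir_size_gb` and `suggest_method` and the "either" result for sizes between the two thresholds are assumptions, since the article gives no guidance for that middle range.

```shell
#!/usr/bin/env bash
# Size of a local directory, rounded up to whole GiB (du -sk reports KiB).
dir_size_gb() {
  du -sk "$1" | awk '{ printf "%d\n", ($1 + 1048575) / 1048576 }'
}

# Map a size in whole GB onto the guidance in this article:
#   more than 50 GB -> Method 1 (ExpressRoute with Data Factory)
#   5 GB or less    -> Method 2 (AzCopy, Azure PowerShell, or Azure CLI)
#   anything else   -> no guidance given here, so report "either" (assumption)
suggest_method() {
  local size_gb="$1"
  if [ "$size_gb" -gt 50 ]; then
    echo "method1"
  elif [ "$size_gb" -le 5 ]; then
    echo "method2"
  else
    echo "either"
  fi
}
```

For example, `suggest_method "$(dir_size_gb /data/export)"` would print the suggested method for a hypothetical local export directory.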

Example 1:

azcopy copy '<local-directory-path>' 'https://<storage-account-name>.file.core.windows.net/<file-share-name>' --recursive

The azcopy command above recursively copies an entire directory, along with all the files beneath it, to the Azure file share named in the URL. The result is a directory of the same name in that file share.
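When the command from Example 1 is run repeatedly, it can help to wrap it in a small script that checks the source directory first and can print the command instead of executing it. The following is a minimal sketch: the function names, the `DRY_RUN` environment variable, and the optional SAS token argument are hypothetical conveniences, not part of AzCopy itself.

```shell
#!/usr/bin/env bash
# Build the Azure Files destination URL in the form used in Example 1.
# The optional third argument (a SAS token, including its leading "?")
# is appended unchanged; whether one is needed depends on your setup.
build_share_url() {
  local account="$1" share="$2" sas="${3:-}"
  printf 'https://%s.file.core.windows.net/%s%s\n' "$account" "$share" "$sas"
}

# Recursively copy a local directory to the given URL.
# With DRY_RUN=1 the azcopy command is printed and nothing is transferred.
copy_dir_to_share() {
  local src="$1" url="$2"
  if [ ! -d "$src" ]; then
    echo "source directory not found: $src" >&2
    return 1
  fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "azcopy copy '$src' '$url' --recursive"
  else
    azcopy copy "$src" "$url" --recursive
  fi
}
```

A call such as `DRY_RUN=1 copy_dir_to_share ./export "$(build_share_url myaccount myshare)"` prints the command that would run, which is a cheap way to check the paths before starting a long copy. The account and share names here are placeholders.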

Example 2:

az storage fs file upload -s "C:\myFolder\upld.txt" -p testdir/upld.txt -f testcont --account-name teststorgeaccount --auth-mode login

The Azure CLI command above uploads a file named upld.txt from the local file system to the directory testdir in an Azure container named testcont under the storage account teststorgeaccount.
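Example 2 uploads a single file. To push every file in a local folder with the same command, one option is a simple loop, sketched below. The function name `upload_dir_files` and the `DRY_RUN` variable are hypothetical; the `az storage fs file upload` call uses only the options shown in Example 2, and subdirectories are skipped rather than handled recursively.

```shell
#!/usr/bin/env bash
# Upload each regular file in src_dir to dest_dir inside the given
# file system (container), one az CLI call per file.
# With DRY_RUN=1 the commands are printed instead of executed.
upload_dir_files() {
  local src_dir="$1" dest_dir="$2" filesystem="$3" account="$4"
  local file name
  for file in "$src_dir"/*; do
    [ -f "$file" ] || continue          # skip subdirectories and empty globs
    name="$(basename "$file")"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "az storage fs file upload -s $file -p $dest_dir/$name -f $filesystem --account-name $account --auth-mode login"
    else
      az storage fs file upload -s "$file" -p "$dest_dir/$name" \
        -f "$filesystem" --account-name "$account" --auth-mode login
    fi
  done
}
```

Running `DRY_RUN=1 upload_dir_files ./myFolder testdir testcont teststorgeaccount` lists the uploads that would happen. For many files, AzCopy from Example 1 is likely the better fit, since this loop starts one CLI process per file.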

Example 3:

$contxt = New-AzStorageContext -StorageAccountName '<storage-account-name>' -StorageAccountKey '<storage-account-key>'

$localSrcFile = "upld.txt"

$containerName = "testcont"

$dirname = "testdir/"

$destPath = $dirname + (Get-Item $localSrcFile).Name

New-AzDataLakeGen2Item -Context $contxt -FileSystem $containerName -Path $destPath -Source $localSrcFile -Force

The PowerShell commands above upload a file named upld.txt from the local file system to a directory called testdir in the Azure container named testcont.
