Making Your SSR Sites 42x Faster With Redis Cache

Let's look at how you can use Redis as a cache with Node.js and Express.


Redis is an in-memory data store that is often used as a database or cache. You may have heard of Redis and how cool it is, but never had an actual use case for it. In this tutorial, I'll show you how you can leverage Redis to speed up your server-side rendered (SSR) web application. If you're new to Redis, check out my guides on installing Redis and creating key-value pairs to better understand how it works.

In this article, we'll look at how to adjust your Node.js Express application to add lightning-fast caching with Redis.

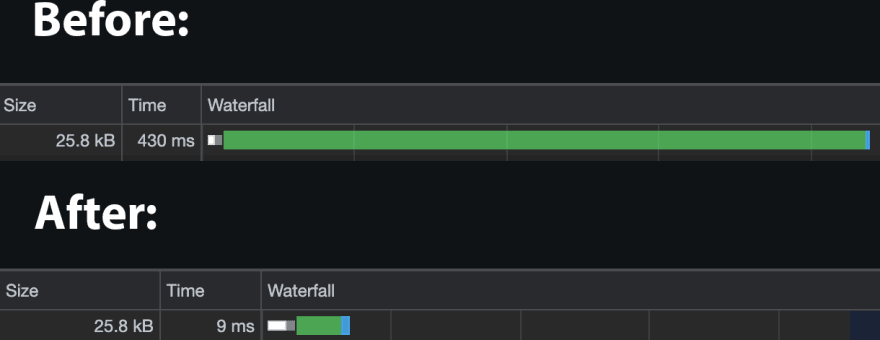

The outcome of this was quite dramatic. I was able to speed up page load time by, on average, 95%:

Background to the Problem

On Fjolt, I use Express to render web pages on the server and send them to the user. As time has gone on and I've added more features, server rendering has grown more complex. For instance, I recently added line numbers to the code examples, which required quite a bit of extra processing on the server. The server is still fast, but as more complexity is added, there is a chance that the server-side calculations will take longer and longer.

Ultimately, that means slower page loads for users, especially those on slow 3G connections. I have had this on my radar for a while, both because I want everyone to be able to read content quickly and because page speed has significant SEO implications.

When a page loads on Fjolt, I run an Express route like this:

```javascript
import express from 'express';

const articleRouter = express.Router();

// Get Singular Article
articleRouter.get('/article/:articleName/', async function (req, res, next) {
  // Process article

  // A variable to store all of our processed HTML
  let finalHtml = '';

  // A lot more Javascript goes here
  // ...

  // Finally, send the HTML to the user
  res.send(finalHtml);
});

export default articleRouter;
```

Every time anyone loads a page, the article is processed from the ground up, and everything is processed at once. That means several database calls, a few `fs.readFile` calls and some fairly computationally complex DOM manipulation to produce the formatted code examples. It's not worryingly complicated, but it does mean the server is doing a lot of work when multiple users request multiple pages.

As the site scales, this problem will only grow. Luckily, we can use Redis to cache pages and serve them to the user immediately.

Why Use Redis to Cache Web Pages

Caching with Redis can turn your SSR website from a slow, clunky behemoth into a blazingly fast, responsive application. When we render on the server side, we do a lot of packaging, but the end product is always the same: one full HTML page delivered to the user:

Figure: How SSR websites serve content to a user

The faster we can package and process the response, the quicker the experience will be for the user. If a page has a high processing load, meaning a lot of work is required to produce the final served page, you have two real options:

- Remove unnecessary processing and optimize your code. This can be a drawn-out process, but it will result in faster processing on the server side.

- Use Redis, so the web page is only ever processed in the background and a cached version is displayed to the user whenever one exists.

In all honesty, you should probably be doing both, but Redis provides the quickest way to optimize. It also helps when there aren't many optimizations left for you to make.

Adding Redis to Your Express Routes

First, if you don't already have Redis installed on your server or computer, you'll need to install it. You can find out how to install Redis here.

Next, install the `redis` client package in your Node.js project by running the following command:

```bash
npm i redis
```

Now that the package is installed, we can change our Express routes. Remember our route from before? It looked like this:

```javascript
import express from 'express';

const articleRouter = express.Router();

// Get Singular Article
articleRouter.get('/article/:articleName/', async function (req, res, next) {
  // Process article

  // A variable to store all of our processed HTML
  let finalHtml = '';

  // A lot more Javascript goes here
  // ...

  // Finally, send the HTML to the user
  res.send(finalHtml);
});

export default articleRouter;
```

Let's add in Redis:

```javascript
import express from 'express';
import { createClient } from 'redis';

const articleRouter = express.Router();

// Connect to Redis once when the module loads rather than on every request,
// so each page view doesn't open a new connection that is never closed.
// (Top-level await requires an ES module, which the `import` syntax implies.)
const client = createClient();
client.on('error', (err) => console.log('Redis Client Error', err));
await client.connect();

// Get Singular Article
articleRouter.get('/article/:articleName/', async function (req, res, next) {
  try {
    const articleCache = await client.get(req.originalUrl);
    const articleExpire = await client.get(`${req.originalUrl}-expire`);

    // We use Redis to cache all articles to speed up content delivery to the user.
    // Parsed documents are stored in Redis and sent to the user immediately if they exist.
    if (articleCache !== null) {
      res.send(articleCache);
    }

    // Re-render if there is no cached copy, or if the expiry timestamp is missing or past.
    // Redis returns the timestamp as a string, so convert it before comparing.
    if (articleCache === null || articleExpire === null || Number(articleExpire) < Date.now()) {
      // A variable to store all of our processed HTML
      let finalHtml = '';

      // A lot more Javascript goes here
      // ...

      // Only send the fresh render if the cached copy wasn't already sent
      if (articleCache === null) {
        res.send(finalHtml);
      }

      // The cache is refreshed at most once every 10 seconds, so content stays roughly in sync.
      // This not only increases speed to the user, but also decreases server load.
      await client.set(req.originalUrl, finalHtml);
      await client.set(`${req.originalUrl}-expire`, String(Date.now() + 10 * 1000));
    }
  } catch (err) {
    // Hand errors to Express unless a response has already gone out
    if (!res.headersSent) next(err);
    else console.log('Cache refresh failed', err);
  }
});

export default articleRouter;
```

The changes we've made here aren't too complicated. In our code, we only need to set two Redis keys: one that stores the cached HTML content of the page, and another that stores an expiry timestamp, so we can keep the content reasonably up to date.
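The cache check boils down to two small decisions: which keys to read, and whether to serve the cached copy, regenerate, or both. Below is a minimal sketch of that logic as pure functions, so it can be reasoned about without a running Redis server. The names `cacheKeys` and `planRequest` are hypothetical. The sketch assumes, as in the route above, that the expiry key holds a Unix timestamp in milliseconds that Redis returns as a string.

```javascript
// Hypothetical helper: derive both Redis key names from the request URL,
// mirroring the `${req.originalUrl}` / `${req.originalUrl}-expire` scheme above.
function cacheKeys(url) {
  return { htmlKey: url, expireKey: `${url}-expire` };
}

// Hypothetical helper: decide what the route should do.
// cachedHtml: string or null (what client.get returned for htmlKey)
// expireAt:   string or null (what client.get returned for expireKey)
// now:        current time in milliseconds, e.g. Date.now()
function planRequest(cachedHtml, expireAt, now) {
  const hasCache = cachedHtml !== null;
  // A missing or past expiry timestamp means the cached copy is due for a refresh.
  const isStale = expireAt === null || Number(expireAt) < now;
  return {
    serveCached: hasCache,            // respond immediately with the stored HTML
    regenerate: !hasCache || isStale, // render the page the long way
    sendFresh: !hasCache,             // send the fresh render only if nothing was sent yet
  };
}

// Example: a cached page whose expiry passed one second ago.
const keys = cacheKeys('/article/redis-caching-ssr-site-nodejs');
const plan = planRequest('<html></html>', String(Date.now() - 1000), Date.now());
console.log(keys.expireKey, plan);
```

The case where the HTML is missing but the expiry is still in the future is the reason the re-render condition should not rely on the expiry key alone. If the HTML key is evicted or deleted, the route still has to render and send a response. The separate expiry key is what lets a stale copy keep being served while it is refreshed. A native Redis TTL, such as `client.set(key, html, { EX: 10 })`, would instead delete the entry and send the next visitor through the slow path.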

Code Summary

Let's dive into the code in a little more detail:

- First, we import `createClient` from the `redis` package so we can create a Redis client.

- Whenever a user visits our article endpoint, we check Redis first instead of jumping straight into parsing and displaying the article.

- We check for two keys in our Redis database using `await client.get('key-name')`. One key is `req.originalUrl`; for this page, for example, that might be `/article/redis-caching-ssr-site-nodejs`. The other is the expiry key, which is the same URL with `-expire` appended, i.e. `/article/redis-caching-ssr-site-nodejs-expire`.

- If a cached version of our article exists, we immediately send it to the user, leading to lightning-fast page loads. However, if this is the first time anyone has visited this page, or if the expiry time has passed, we have to parse the article the long way.

- You might think this means the page has to be rendered the long way every 10 seconds, but that's not the case. Users are always sent the cached version if it exists. The cache is simply refreshed, at most once every 10 seconds, by the first request that arrives after the expiry time, so the latest content becomes available. Because the cached copy has already been sent by then, the refresh has no impact on that user's load time. The only time a slow load occurs is when the page has never been cached before.

- Since we use the URL as the key, we can be certain that each unique route gets its own entry in our Redis database.

Ultimately, this led to a 95% improvement in time to first byte (TTFB) for my website, as shown in the image at the top.
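To see why only the very first visit pays the full rendering cost, here is a small simulation of the same serve-then-refresh flow. It is a sketch under stated assumptions: a plain `Map` stands in for Redis, time is passed in explicitly instead of read from the clock, and `handleRequest` and `renderPage` are hypothetical names, not part of Express or the `redis` package. In the real route the re-render happens after `res.send` has already delivered the cached copy; here everything runs synchronously for simplicity.

```javascript
const TTL_MS = 10 * 1000; // refresh window, matching the 10-second expiry in the route

// A Map standing in for Redis (assumption: same get/set behaviour for string values).
const store = new Map();
let renders = 0; // counts how often the expensive render runs

// Hypothetical stand-in for the slow article rendering.
function renderPage(url, now) {
  renders += 1;
  return `<html><!-- ${url} rendered at ${now} --></html>`;
}

// Serve the cached copy if there is one; refresh it when it is missing or stale.
// Returns what the visitor receives and whether they had to wait for a render.
function handleRequest(url, now) {
  const cached = store.has(url) ? store.get(url) : null;
  const expireAt = store.has(`${url}-expire`) ? store.get(`${url}-expire`) : null;
  const isStale = expireAt === null || Number(expireAt) < now;
  let body = cached;
  let waited = false;
  if (cached === null || isStale) {
    const html = renderPage(url, now);
    if (cached === null) {
      body = html; // nothing was cached, so this visitor waits for the render
      waited = true;
    }
    store.set(url, html);
    store.set(`${url}-expire`, String(now + TTL_MS));
  }
  return { body, waited };
}

// Three visits: the first ever, 5 s later (fresh), and 15 s later (stale).
const url = '/article/redis-caching-ssr-site-nodejs';
const first = handleRequest(url, 0);
const second = handleRequest(url, 5000);
const third = handleRequest(url, 15000);
console.log(first.waited, second.waited, third.waited, renders);
```

Running it logs `true false false 2`. Only the first visit waits for a render, and the visit at 15 seconds receives the copy rendered at time 0 while the cache is refreshed. Two properties of this design follow from the sketch. First, a visitor receives whatever the previous refresh produced, which on a rarely visited page can be much older than 10 seconds. Second, if several requests arrive after the expiry at the same moment, each may trigger its own re-render, because nothing marks a refresh as already in progress.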

Caveats and Conclusion

Since my route was becoming overly complex, the time savings here were considerable. If your route isn't very complex, your savings may be smaller, but you are still likely to get a significant speed boost with this method.
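How much caching saves depends on how often requests hit the cache and how far apart the cached and uncached response times are. A rough back-of-the-envelope model, assuming each request is either a cache hit or a full render, is an average weighted by the hit ratio. The function names and the numbers below are illustrative, not measurements from Fjolt.

```javascript
// Average time to first byte when a fraction `hitRatio` of requests is served
// from the cache (hitMs each) and the rest are rendered the long way (missMs each).
function expectedTtfb(hitRatio, hitMs, missMs) {
  return hitRatio * hitMs + (1 - hitRatio) * missMs;
}

// Fractional reduction compared with rendering every request (no cache at all).
function savingFraction(hitRatio, hitMs, missMs) {
  return 1 - expectedTtfb(hitRatio, hitMs, missMs) / missMs;
}

// Illustrative numbers: a 200 ms render versus a 10 ms cache hit.
console.log(expectedTtfb(0.5, 10, 200));   // 105
console.log(savingFraction(0.5, 10, 200)); // roughly 0.475
```

In the serve-stale design described here, nearly every request after the first counts as a hit, so the saving approaches 1 - hitMs / missMs. That is why a route with an expensive render (a large missMs) gains the most, while a simpler route gains less.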

This example shows how a simple change can have a massive performance impact on an SSR site, and it's a great use case for Redis, showing how powerful it can be. I hope you've found this helpful and find a way to use it on your own sites and applications.

Published at DZone with permission of Johnny Simpson, DZone MVB. See the original article here.

