Machine Learning Libraries For Any Project

There are many libraries out there that can be used in machine learning projects. Explore a comprehensive guide on which libraries to use in your projects.

Join the DZone community and get the full member experience.

Join For FreeThere are many libraries out there that can be used in machine learning projects. Of course, some of them gained considerable reputations through the years. Such libraries are the straight-away picks for anyone starting a new project which utilizes machine learning algorithms. However, choosing the correct set (or stack) may be quite challenging.

The Why

In this post, I would like to give you a general overview of the machine learning libraries landscape and share some of my thoughts about working with them. If you are starting your journey with a machine learning library, my text can give you some general knowledge of the machine learning libraries and provide a better starting point for learning more.

The libraries described here will be divided by the role they can play in your project.

The categories are as follows:

- Model Creation - Libraries that can be used to create machine learning models

- Working with data - Libraries that can be used both for feature engineering, future extraction, and all other operations that involve working with features

- Hyperparameters optimization - Libraries and tools that can be used for optimizing model hyperparameters

- Experiment tracking - Libraries and tools used for experiment tracking

- Problem-specific libraries - Libraries that can be used for tasks like time series forecasting, computer vision, and working with spatial data

- Utils - Non-strictly machine learning libraries but nevertheless the ones I found useful in my projects

Model Creation

PyTorch

Developed by people from Facebook and open-sourced in 2017, it is one of the market's most famous machine learning libraries - based on the open-source Torch package. The PyTorch ecosystem can be used for all types of machine learning problems and has a great variety of purpose-built libraries like torchvision or torchaudio.

The basic data structure of PyTorch is the Tensor object, which is used to hold multidimensional data utilized by our model. It is similar in its conception to NumPy ndarray. PyTorch can also use computation accelerators, and it supports CUDA-capable NVIDIA GPUs, ROCm, Metal API, and TPU.

The most important part of the core PyTorch library is nn modules which contains layers and tools to build complex models layer by layer easily.

class NeuralNetwork(nn.Module):

def __init__(self):

super().__init__()

self.flatten = nn.Flatten()

self.linear_relu_stack = nn.Sequential(

nn.Linear(28*28, 512),

nn.ReLU(),

nn.Linear(512, 512),

nn.ReLU(),

nn.Linear(512, 10),

)

def forward(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logitsAn example of a simple neural network with 3 linear layers in PyTorch

Additionally, PyTorch 2.0 is already released, and it makes PyTorch even better. Moreover, PyTorch is used by a variety of companies like Uber, Tesla, and Facebook, just to name a few.

PyTorch Lightning

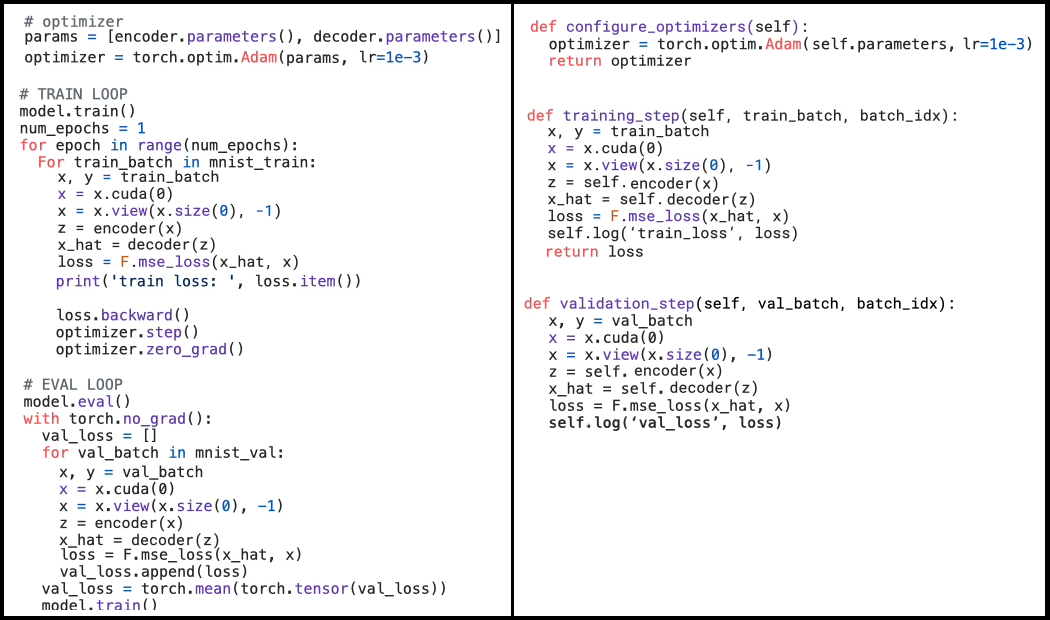

It is a sort of “extension” for PyTorch, which aims to greatly reduce the amount of boilerplate code needed to utilize our models.

Lightning is based on the concept of hooks: functions called on specific phases of the model train/eval loop. Such an approach allows us to pass callback functions executed at a specific time, like the end of the training step.

Trainers Lighting automates many functions that one has to take care of in PyTorch; for example, loop, hardware call, or zero grads.

Below are roughly equivalent code fragments of PyTorch (Left) and PyTorch Lightning (Right).

TensorFlow

A library developed by a team from Google Brain was originally released in 2015 under Apache 2.0 License, and version 2.0 was released in 2019. It provides clients in Java, C++, Python, and even JavaScript.

Similar to PyTorch, it is widely adopted throughout the market and used by companies like Google (surprise), Airbnb, and Intel. TensorFlow also has quite an extensive ecosystem built around it by Google. It contains tools and libraries such as an optimization toolkit, TensorBoard (more about it in the "Experiment Tracking" section below), or recommenders. The TensorFlow ecosystem also includes a web-based sandbox to play around with your model’s visualization.

Again the tf.nn module plays the most vital part providing all the building blocks required to build machine learning models. Tensorflow uses its own Tensor (flow ;p) object for holding data utilized by deep learning models. It also supports all the common computation accelerators like CUDA or RoCm (community), Metal API, and TPU.

class NeuralNetwork(models.Model):

def __init__(self):

super().__init__()

self.flatten = layers.Flatten()

self.linear_relu_stack = models.Sequential([

layers.Dense(512, activation='relu'),

layers.Dense(512, activation='relu'),

layers.Dense(10)

])

def call(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logitsNote that in TensorFlow and Keras, we use the Dense layer instead of the Linear layer used in PyTorch. We also use the call method instead of the forward method to define the forward pass of the model.

Keras

It is a library similar in meaning and inception to PyTorch Lightning but for TensorFlow. It offers a more high-level interface over TensorFlow. Developed by François Chollet and released in 2015, it provides only Python clients. Keras also has its own set of Python libraries and problem-specific libraries like KerasCV or KerasNLP for more specialized use cases.

Before version 2.4 Keras supported more backends than just TensorFlow, but after the release, TensorFlow became the only supported backend. As Keras is just an interface for TensorFlow, it shares similar base concepts as its underlying backend. The same holds true for supported computation accelerators. Keras is used by companies like IBM, PayPal, and Netflix.

class NeuralNetwork(models.Model):

def __init__(self):

super().__init__()

self.flatten = layers.Flatten()

self.linear_relu_stack = models.Sequential([

layers.Dense(512, activation='relu'),

layers.Dense(512, activation='relu'),

layers.Dense(10)

])

def call(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logitsNote that in TensorFlow and Keras, we use the Dense layer instead of the Linear layer used in PyTorch. We also use the call method instead of the forward method to define the forward pass of the model.

PyTorch vs. TensorFlow

I wouldn’t be fully honest If I would not introduce some comparison between these two.

As you could read, a moment before, both of them are quite similar in offered features and the ecosystem around them. Of course, there are some minor differences and quirks in how both work or the features they provide. In my opinion, these are more or less insignificant.

The real difference between them comes from their approach to defining and executing computational graphs of the machine and deep learning and models.

- PyTorch uses dynamic computational graphs, which means that the graph is defined on-the-fly during execution. This allows for more flexibility and intuitive debugging, as developers can modify the graph at runtime and easily inspect intermediate outputs. On the other hand, this approach may be less efficient than static graphs, particularly for complex models. However, PyTorch 2.0 attempts to address these issues via torch.compile and FX graphs.

- TensorFlow uses static computational graphs, which are compiled before execution. This allows for more efficient execution, as the graph can be optimized and parallelized for the target hardware. However, it can also make debugging more difficult, as intermediate outputs are not readily accessible.

Another noticeable difference is that PyTorch seems to be more low level than Keras while being more high level than pure TensorFlow. Such a setting makes PyTorch more elastic and easier to use for making tailored models with many customizations.

As a side note, I would like to add that both libraries are on equal terms in the case of market share. Additionally, despite the fact that TensorFlow uses the call method and PyTorch uses the forward method both libraries support call semantics as a shorthand for calling model(x).

Working With Data

pandas

A library that you must have heard of if you are using Python, it is probably the most famous Python library for working with data of any type. It was originally released in 2008, and version 1.0 in 2012. It provides functions for filtering, aggregating, and transforming data, as well as merging multiple datasets.

The cornerstone of this library is a DataFrame object which represents a multidimensional table of any type of data. The library heavily focuses on performance with some parts written in pure C to boost performance.

Besides being performance-focused, pandas provide a lot of features related to:

- Data cleaning and preprocessing

- Removing duplicates

- Filling null values or nan values.

- Time series analysis

- Resampling

- Windowing

- Time shift

Additionally, it can perform a variety of input/output operations:

- Reading from/to .csv or .xlsx files

- Performing database queries

- Load data from GCP BigQuery (with the help of pandas-gbq)

NumPy

It is yet another famous library for working with data - mostly numeric data science in kind. The most famous part of NumPy is an ndarray - a structure representing a multidimensional array of numbers.

Besides ndarray, NumPy provides a lot of high-level mathematical functions and mathematical operations used to work with this data. It is also probably the oldest library in this set as the first version was released in 2005. It was implemented by Travis Oliphant, based on an even older library called numeric (released in 1996).

NumPy is extremely focused on performance with contributors trying to get more and more of the currently implemented algorithm to reduce the execution time of NumPy functions even more.

Of course, as with all libraries described here, numpy is also open source and uses a BSD license.

SciPy

It is a library focused on supporting scientific computations. It is even older than NumPy (2005), released in 2001. It is built atop NumPy, with ndarray being the basic data structure used throughout SciPy. Among other things, the library adds functions for optimization, linear algebra, signal processing, interpolation, and spares matrix support. In general, it is more high-level than NumPy and thus can provide more complex functions.

Hyperparameter Optimizations

Ray Tune

It is part of the Ray toolset, a bundle of related libraries for building distributed applications focusing on machine learning and Python. The Tune part of the ML library is focused on providing hyper-priming optimization features by providing a variety of search algorithms; for example, grid search, hyperband, or Bayesian Optimization.

Ray Tune can work with models created in most programming languages and libraries available on the market. All of the libraries described in the paragraph about model creation are supported by Ray Tune.

The key concepts of Ray Tune are:

- Trainables - Objects pass to Tune runs; they are our model we want to optimize parameters for

- Search space - Contains all the values of hyperparameters we want to check in the current trial

- Tuner - An object responsible for executing runs, calling tuner.fit() starts the process of searching for optimal hyperparameters set. It requires passing at least a trainable object and search space

- Trial- Each trail represents a particular run of a trainable object with an exact set of parameters from a search space. Trials are generated by Ray Tune Tuner. As it represents the output of running the tuner, Trial contains a ton of information, such as:

- Config used for a particular trial

- Trial ID

- Many others

- Search algorithms - The algorithm used for a particular execution of Tuner.fit; if not provided, Ray Tune will use RadomSearch as the default

- Schedulers - These are objects that are in charge of managing runs. They can pause, stop and run trials within the run. This can result in increased efficiency and reduced time of the run. If none is selected, the Tune will pick FIFO as default - run will be executed one by one as in a classic queue.

- Run analyses - The object conniving the results of Tuner.fit execution in the form of ResultGrid object. It contains all the data related to the run like the best result among all trials or data from all trials.

BoTorch

BoTorch is a library built atop PyTorch and is part of the PyTorch ecosystem. It focuses solely on providing hyperparameter optimization with the use of Bayesian Optimization.

As the only library in this part that is designed to work with a specific model library, it may make it problematic for BoTorch to be used with libraries other than PyTorch. Also as the only library it is currently in version beta and under intensive development, so some unexpected problems may occur.

The key feature of BoTorch is its integration with PyTorch, which greatly impacts the ease of interaction between the two.

Experiment Tracking

Neptune.ai

It is a web-based tool that serves both as an experiment tracking and model registry. The tool is cloud-based in the classic SaaS model, but if you are determined enough, there is the possibility of using a self-hosted variant.

It provides a dashboard where you can view and compare the results of training your model. It can also be used to store the parameters used for particular runs. Additionally, you can easily version the dataset used for particular runs and all the metadata you think may come in handy.

Moreover, it enables easy version control for your models. Obviously, the tool is library agnostic and can host models created using any library. To make the integration possible, Neptune exposes a REST-style API with its own client. The client can be downloaded and installed via pip or any other Python dependency tool. The API is decently documented and quite easy to grasp.

The tool is paid and has a simple pricing plan divided into three categories. Yet if you need it just for a personal project or you are in the research or academic unit, then you can apply to use the tool for free.

Neptune.ai is a new tool, so some features known from other experiment tracing tools may not be present. Despite that fact, Neptune.ai support is keen to react to their user feedback and implement missing functionalities – at least, it was the thing in our case as we use Neptune.ai extensively in The Codos Project.

Weights & Biases

Also known as WandB or W&B, this is a web-based tool that exposes all the needed functionalities to be used as an experiment tracing tool and model registry. It exposes a more or less similar set of functionalities as neptune.ai.

However, Weights & Biases seem to have better visualization and, in general, is a more mature tool than Neptune. Additionally, WandB seems to be more focused on individual projects and researchers with less emphasis on collaboration.

It also has a simple pricing plan divided into 3 categories with a free tier for private use. Yet W&B has the same approach as Neptune to researchers and academic units - they can always use Weights & Biases for free.

Weights & Biases also expose an REST-like API to ease the integration process. It seems to be a better document and offers more feathers than the one exposed by Neptune.ai. What is curious is that they expose the client library written in Java - if, for some reason, you wrote a machine learning model in Java instead of Python.

TensorBoard

It is a dedicated visualization toolkit for the TensorFlow ecosystem. It is designed mostly to work as an experiment tracking tool with a focus on metrics visualizations. Despite being a dedicated TensorFlow tool, it can also be used with Keras (not surprisingly) and PyTorch.

Additionally, it is the only free tool from all three described in this section. Here you can host and track your experiments. However, TensorBoard is missing the functionalities responsible for model registry which can be quite problematic and force you to use some 3rd party tool to cover this missing feature. Anyway, there is for sure a tool for this in the TensorFlow ecosystem.

As it is a directory part of the TensorFlow ecosystem, its integration with Keras or TensorFlow is much smoother than any of the previous two tools.

Problem Specific Libraries

tsaug

One of a few libraries for augmentation of time series, it is an open-source library created and maintained by a single person under the GitHub name nick tailaiw, released in 2019, and is currently in version 0.2.1. It provides a set of 9 augmentations; Crop, Add Noise, or TimeWrap, among them. The library is reasonably well documented for such a project and easy to use from a user perspective.

Unfortunately, for unknown reasons (at least to me), the library seems dead and has not been updated for 3 years. There are many open issues but they are not getting any attention. Such a situation is quite sad in my opinion, as there are not so many other libraries that provide augmentation for time series data.

However, if you are looking for a time series for data mining or augmentation and want to use a more up-to-date library, Tsfresh may be a good choice.

OpenCV

It is a library focused on providing functions for working with image processing and computer vision. Developed by Intel, it is now open source based on the Apache 2 license.

OpenCV provides a set of functions related to image and video processing, image classification data analysis, and tracking alongside ready-made machine learning models for working with images and video.

If you want to read more about OpenCV, my coworker, Kamil Rzechowski, wrote an article that quite extensively describes the topic.

GeoPandas

It is a library built atop pandas that aims to provide functions for working with spatial and data structures. It allows easy reading and writing data in GeJSON, shapefile formats, or reading data from PostGIS systems. Besides pandas, it has a lot of other dependencies on spatial data libraries like PyGEOS, GeoPy, or Shapely.

The library's basic structure is:

- GeoSeries - A column of geospatial data, such as a series of points, lines, or polygons

- GeoDataFrame - Tabular structure holding a set of GeoSeries

Utils

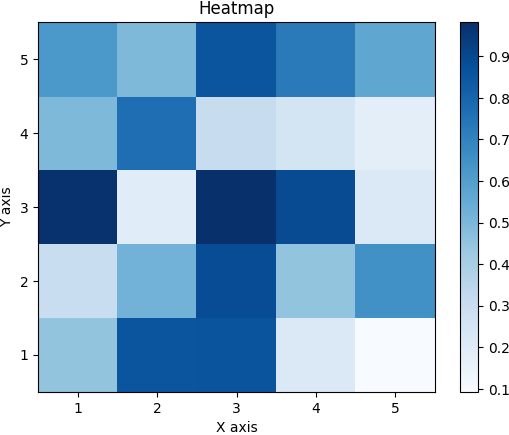

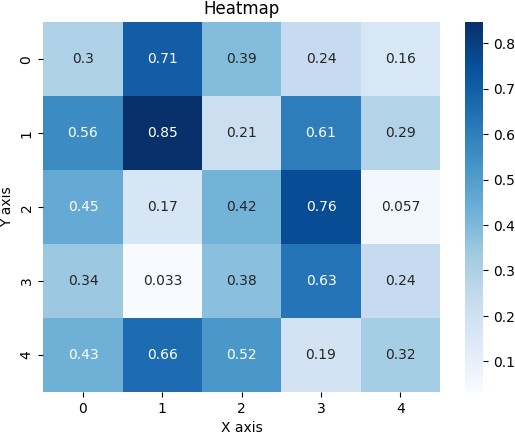

Matplotlib

As the name suggests, it is a library for creating various types of plots ;p. Besides basic plots like lines or histograms, matplotlib allows us to create more complex plots: 3d shapes or polar plots. Of course, it also allows us to customize things like color plots or labels.

Despite being somewhat old (released in 2003, so 20 years old at the time of writing), it is actively maintained and developed. With around 17k stars on GitHub, it has quite a community around it and is probably the second pick for anyone needing a data visualization tool.

It is well-documented and reasonably easy to grasp for a newcomer. It is also worth noting that matplotlib is used as a base for more high-level visualization libraries.

Seaborn

Speaking of which, we have Seaborn as an example of such a library. Thus the set of functionalities provided by Seaborn is similar to one provided by Matplotlib.

However, the API is more high-level and requires less boilerplate code to achieve similar results. As for other minor differences, the color palette provided by Seaborn is softer, and the design of plots is more modern and nice looking. Additionally, Seaborn is easiest to integrate with pandas, which may be a significant advantage.

Below you can find code used to create a heatmap in Matplotlib and Seaborn, alongside their output plots. Imports are common.

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

data = np.random.rand(5, 5)

fig, ax = plt.subplots()

heatmap = ax.pcolor(data, cmap=plt.cm.Blues)

ax.set_xticks(np.arange(data.shape[0])+0.5, minor=False)

ax.set_yticks(np.arange(data.shape[1])+0.5, minor=False)

ax.set_xticklabels(np.arange(1, data.shape[0]+1), minor=False)

ax.set_yticklabels(np.arange(1, data.shape[1]+1), minor=False)

plt.title("Heatmap")

plt.xlabel("X axis")

plt.ylabel("Y axis")

cbar = plt.colorbar(heatmap)

plt.show()

sns.heatmap(data, cmap="Blues", annot=True)

# Set plot title and axis labels

plt.title("Heatmap")

plt.xlabel("X axis")

plt.ylabel("Y axis")

# Show plot

plt.show()

![Heatmap 2]() Hydra

Hydra

In every project, sooner or later, there is a need to make something a configurable value. Of course, if you are using a tool like Jupyter, then the matter is pretty straightforward. You can just move a desired value to the .env file - et voila, it is configurable.

However, if you are building a more standard application, things are not that simple. Here Hydra shows its ugly (but quite useful) head. It is an open-source tool for managing and running configurations of Python-based applications.

It is based on OmegaConf library, and quoting from their main page: “The key feature is the ability to dynamically create a hierarchical configuration by composition and override it through config files and the command line.”

What proved quite useful for me is the ability described in the quote above: hierarchical configuration. In my case, it worked pretty well and allowed clearer separation of config files.

coolname

Having a unique identifier for your taint runs is always a good idea. If, for various reasons, you do not like UUID or just want your ids to be humanly understandable, coolname is the answer. It generates unique alphabetical word-based identifiers of various lengths from 2 to 4 words.

As for the number of combinations, it looks more or less like this:

- 4 words length identifier has 1010 combinations

- 3 words length identifier has 108 combinations

- 2 words length identifier has 105 combinations

The number is significantly lower than in the case of UUID, so the probability of collision is also higher. However, comparing the two is not the point of this text.

The vocabulary is hand-picked by the creators. Yet they described it as positive and neutral (more about this here), so you will not see an identifier like you-ugly-unwise-human-being. Of course, the library is fully open source.

tqdm

This library provides a progress bar functionally for your application. Despite the fact that having such information displayed is maybe not the most important thing you need. It is still nice to look at and check the progress made by your application during the execution of an important task.

Tqdm also uses complex algorithms to estimate the remaining time of a particular task which may be a game changer and help you organize your time around. Additionally, tqdm states that it has barely noticeable performance overhead - in nanoseconds.

What is more, it is totally standalone and needs only Python to run. Thus it will not download half of the internet to your disk.

Jupyter Notebook (+JupyterLab)

Notebooks are a great way to share results and work on the project. Through the concept of cells, it is easy to separate different fragments and responsibilities of your code.

Additionally, the fact that the single notebook file can contain code, images, and complex text outputs (tables) together only adds to its existing advantages.

Moreover, notebooks allow run pip install inside the cells and use .env files for configuration. Such an approach moves a lot of software engineering complexity out of the way.

Summary

These are all the various libraries for machine learning that I wanted to describe for you. I aimed to provide a general overview of all the libraries alongside their possible use cases enriched by a quick note of my own experience with using them. I hope that my goal was achieved and this article will deepen your knowledge of the machine learning libraries landscape.

Published at DZone with permission of Bartłomiej Żyliński. See the original article here.

Opinions expressed by DZone contributors are their own.

Hydra

Hydra

Comments