Logging to Infinity and Beyond: How To Find the Hidden Value of Your Logs

Though it can feel like trouble, sorting through logs is crucial. Logs hold info on performance problems, security issues, and user behavior.

If your environment is like many others, it can often seem like your systems produce logs filled with a bunch of excess data. Since you need to access multiple components (servers, databases, network infrastructure, applications, etc.) to read your logs, and those logs don’t typically have any specific purpose or focus holding them together, you may dread sifting through them. If you don’t have the right tools, it can feel like you’re stuck with a bunch of disparate, hard-to-parse data. In these situations, I picture myself as a cosmic collector, gathering space debris as it floats by my ship and sorting the occasional good material from the heaps of galactic junk.

Though it can feel like more trouble than it’s worth, sorting through logs is crucial. Logs hold many valuable insights into what’s happening in your applications and can indicate performance problems, security issues, and user behavior. In this article, we’re going to take a look at how log aggregation can help you make sense of your log data without much effort. We’ll talk about best practices and habits and use some of the Log Analytics tools from Sumo Logic as examples. Let’s blast off and turn that cosmic trash into treasure!

The Truth Is Out There: Getting Value Just From the Things You’re Already Logging

One massive benefit offered by a log analytics platform to any system engineer is the ability to utilize a single log interface. Rather than needing to SSH into countless machines or download logs and parse through them manually, viewing all your logs in a centralized aggregator can make it much easier to see simultaneous events across your infrastructure. You’ll also be able to clearly follow the flow of data and requests through your stack.
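To make the idea of a single, time-ordered view concrete, here is a minimal Python sketch that interleaves log lines from several sources by timestamp. It is only an illustration of the concept, not how any particular aggregator works. The `<ISO-8601 timestamp> <message>` line format, the source names, and the function names are all assumptions made for this example.

```python
import heapq
from datetime import datetime


def read_source(name, lines):
    """Yield (timestamp, source, message) tuples for one log source.

    Assumes each line looks like "<ISO-8601 timestamp> <message>"
    (a hypothetical format chosen for this sketch).
    """
    for line in lines:
        stamp, message = line.rstrip("\n").split(" ", 1)
        yield datetime.fromisoformat(stamp), name, message


def merge_logs(sources):
    """Merge several already time-sorted sources into one timeline.

    `sources` maps a source name to an iterable of log lines.
    """
    streams = [read_source(name, lines) for name, lines in sources.items()]
    # heapq.merge lazily interleaves sorted inputs, so each source
    # only needs to be sorted on its own.
    return list(heapq.merge(*streams))


if __name__ == "__main__":
    timeline = merge_logs({
        "web": ["2024-01-01T10:00:00 GET /login", "2024-01-01T10:00:02 GET /home"],
        "db": ["2024-01-01T10:00:01 SELECT users"],
    })
    for stamp, source, message in timeline:
        print(stamp.isoformat(), source, message)
```

Seeing the database query land between the two web requests is the kind of cross-system ordering that is hard to spot when each log lives on its own machine.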

Once you see all your logs in one place, you can tap into the latent value of all that data. Of course, you could make your own aggregation interface from scratch, but often, log aggregation tools provide a number of extra features that are worth the additional investment. Those extra features include capabilities such as powerful search and fast analytics.

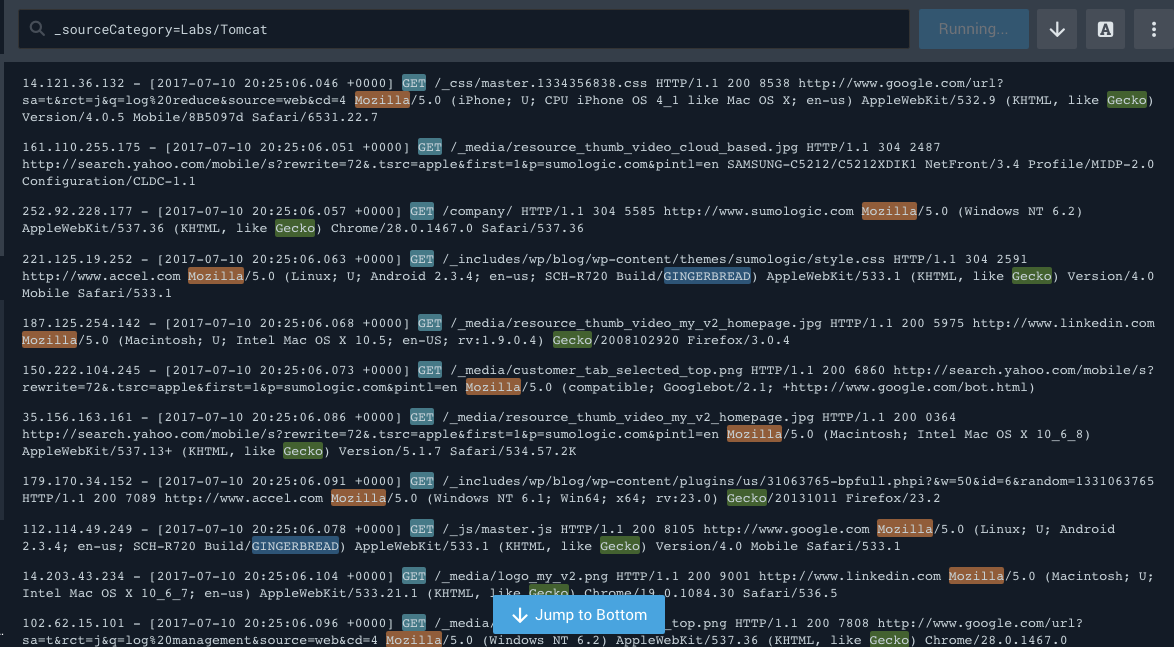

Searching Through the Void: Using Search Query Language To Find Things

You’ve probably used grep or similar tools for searching through your logs, but for real power, you need the ability to search across all of your logs in one interface. You may have even investigated using the ELK stack on your own infrastructure to get going with log aggregation. If you have, you know how valuable putting logs all in the same place can be.

Some tools provide even more functionality on top of this interface. For example, with Log Analytics, you can use a Search Query Language that allows for more complex searches. Because these searches are being executed across a vast amount of log data, you can use special operations to harness the power of your log aggregation service. Some of these operations can be achieved with grep, so long as you have all of the logs at your disposal. But others, such as aggregate operators, field expressions, or transaction analytics tools, can produce extremely powerful reports and monitoring triggers across your infrastructure.

To choose just one tool as an example, let’s take a closer look at field expressions. Essentially, field expressions allow you to create variables in your queries based on what you find in your log data. For example, if you wanted to search across your logs, and you know your log lines follow the format “From: Jane To: John,” you can parse out the “from” and “to” with the following query:

* | parse "From: * To: *" as (from, to)

This would store “Jane” in the “from” field and “John” in the “to” field.
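If you want a feel for what that parse step does, the following Python sketch imitates it with a regular expression. It is an approximation for illustration only, not the query engine’s actual implementation, and `parse_fields` is a hypothetical helper name.

```python
import re


def parse_fields(pattern, names, line):
    """Extract one field per "*" wildcard in `pattern`.

    Returns a dict mapping names to captured text, or None if the
    line does not match. This only mimics the idea of a parse
    operator; it is not how a log platform implements it.
    """
    # Escape the literal pieces and capture whatever sits between them.
    regex = "(.*?)".join(re.escape(piece) for piece in pattern.split("*"))
    if pattern.endswith("*"):
        # Let a trailing wildcard run to the end of the line.
        regex = regex[: -len("(.*?)")] + "(.*)"
    match = re.search(regex, line)
    if match is None:
        return None
    return dict(zip(names, match.groups()))


if __name__ == "__main__":
    print(parse_fields("From: * To: *", ("from", "to"), "From: Jane To: John"))
```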

Another valuable language feature you could tap into would be keyword expressions. You could use this query to search across your logs for any instances where a command with root privileges failed:

(su OR sudo) AND (fail* OR error)
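For comparison, here is roughly the same boolean logic written by hand in Python. This is a sketch under assumptions: it treats keywords as whole words, matches case-insensitively, and reads `fail*` as "any word starting with fail". The sample log lines are invented for the example.

```python
import re

# Either root-related command, as a whole word.
ROOT_COMMAND = re.compile(r"\b(su|sudo)\b", re.IGNORECASE)
# "fail*" is read here as any word starting with "fail".
FAILURE = re.compile(r"\b(fail\w*|error)\b", re.IGNORECASE)


def failed_root_commands(lines):
    """Keep lines that mention su/sudo AND a failure keyword."""
    return [
        line for line in lines
        if ROOT_COMMAND.search(line) and FAILURE.search(line)
    ]


if __name__ == "__main__":
    sample = [
        "sudo: pam_unix(sudo:auth): authentication failure; user=jane",
        "su: session opened for user root",
        "cron: error running nightly job",
    ]
    for line in failed_root_commands(sample):
        print(line)
```

Writing the filter is the easy part. The value of a query language is running one short expression across every log you collect rather than one file at a time.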

Sumo Logic’s documentation includes a listing of General Search Examples drawn from parsing a single Apache log message.

Light-Speed Analytics: Making Use of Real-Time Reports and Advanced Analytics

One other aspect of searching is that it’s typically looking into the past. Sometimes, you need to be looking at things as they happen. Let’s take a look at Live Tail and LogReduce — two tools to improve simple searches. Versions of these features exist on many platforms, but I like the way they work on Sumo Logic’s offering, so we’ll dive into them.

Live Tail

At its simplest, Live Tail lets you see a live feed of your log messages. It’s like running tail -f on any one of your servers to see the logs as they come in, but instead of being on a single machine, you’re looking across all logs associated with a Sumo Logic Source or Collector. Your Live Tail can be modified to automatically filter for only specific things. Live Tail also supports highlighting keywords (up to eight of them) as the logs roll in.
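The filter-and-highlight behavior can be pictured with a small Python generator. This is a toy model of the idea, not Sumo Logic’s implementation. The function name, the `>>keyword<<` marker style, and the sample feed are assumptions for illustration; the eight-keyword cap mirrors the limit mentioned above.

```python
def live_tail(lines, must_contain=None, highlight=()):
    """Yield incoming lines, optionally filtered and with keywords marked.

    `lines` can be any iterable, including a stream that keeps growing.
    """
    if len(highlight) > 8:
        raise ValueError("at most eight highlight keywords are supported")
    for line in lines:
        line = line.rstrip("\n")
        # Drop lines that do not contain the filter text.
        if must_contain is not None and must_contain not in line:
            continue
        # Wrap each keyword in visible markers.
        for keyword in highlight:
            line = line.replace(keyword, f">>{keyword}<<")
        yield line


if __name__ == "__main__":
    feed = [
        "web-1 GET /health 200",
        "web-2 GET /cart 500 timeout",
        "db-1 slow query 3.2s",
    ]
    for line in live_tail(feed, must_contain="GET", highlight=("500", "timeout")):
        print(line)
```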

LogReduce

LogReduce gives you more insight into, or a better understanding of, your search query’s aggregate log results. When you run LogReduce on a query, it performs fuzzy logic analysis on messages meeting the search criteria you defined and then groups them into a set of “Signatures,” the patterns shared by similar messages. It also gives you a count of the logs matching each pattern and a rating of the relevance of the pattern when compared to your search. You then have tools at your disposal to rank the generated signatures and even perform further analysis on the log data.
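A deliberately simplified stand-in can convey what a signature is. The Python sketch below masks numeric tokens so that messages differing only in numbers or IP addresses collapse into one pattern, then counts each pattern. It does not use fuzzy logic and is not LogReduce’s algorithm; the masking rule and the sample messages are assumptions made for this example.

```python
import re
from collections import Counter

# Numbers and dotted numbers (such as IP addresses) are treated as variable parts.
VARIABLE = re.compile(r"\b\d+(\.\d+)*\b")


def signature(message):
    """Replace the variable parts of a message with "*"."""
    return VARIABLE.sub("*", message)


def reduce_logs(messages):
    """Return (signature, count) pairs, most frequent first."""
    return Counter(signature(m) for m in messages).most_common()


if __name__ == "__main__":
    messages = [
        "Connection from 10.0.0.1 port 5022",
        "Connection from 10.0.0.7 port 6011",
        "Disk usage at 91 percent",
    ]
    for pattern, count in reduce_logs(messages):
        print(count, pattern)
```

Even this crude version shows the payoff: thousands of near-identical lines shrink to a handful of patterns with counts, and the rare patterns stand out.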

This is all pretty advanced and can be hard to understand without a demo, so you can dive deeper by watching this video.

Integrated Log Aggregation

Often, you’ll need information from systems you aren’t running directly mixed in with your other logs. That’s why it’s important to make sure you can integrate your log aggregator with other systems.

Many log aggregators provide this functionality. Elastic, which underlies the ELK stack, provides a bunch of integrations that you can hook into your self-hosted or cloud-hosted stack. Of course, integrations aren’t only available on the ELK stack. Sumo Logic provides a whole list of integrations as well. Regardless, the power of connecting your logs with the many systems you use outside of your monitoring and operational stack is phenomenal. Want to get logs sent from your company’s 1Password account into the rest of your logs? Need more information from AWS than you are getting on your individual instances or services? ELK and Sumo Logic provide great options.

The key to understanding this concept is that you don’t need to be the one controlling the logs for it to be valuable to aggregate them. Think through the full picture of what systems keep your business running, and consider putting all of their logs together in your aggregator.

Conclusion

This has been a brief tour through some of the features available with log aggregation. There’s a lot more to it, which shouldn’t be surprising given the vast amount of data generated every second by our infrastructure. The really amazing part of these tools is that these insights are available to you without installing anything on your servers. You just need to have a way to export your log data to the aggregation service. Whether you need to track compliance or monitor the reliability of your services, log aggregation is an incredibly powerful tool that can let you unlock infinite value from your already existing log data. That way, you can become a better cosmic junk collector!
