How You Can Use Logs To Feed Security

Learn how to leverage logs with tools and AI to enhance security, detect threats, manage costs, and capture all your data for proactive threat prevention.

If your system is facing an imminent security threat (or worse, you’ve just suffered a breach), then logs are your go-to. If you’re a security engineer working closely with developers and the DevOps team, you already know that you depend on logs for threat investigation and incident response. Logs offer a detailed account of system activities. Analyzing those logs helps you fortify your defenses against emerging risks before they escalate into full-blown incidents. At the same time, your logs are your digital footprints, vital for compliance and auditing.

Your logs contain a massive amount of data about your systems (and hence your security), and that leads to some serious questions:

- How do you handle the complexity of standardizing and analyzing such large volumes of data?

- How do you get the most out of your log data so that you can strengthen your security?

- How do you know what to log? How much is too much?

Recently, I’ve been trying to use tools and services to get a handle on my logs. In this post, I’ll look at some best practices for using these tools—how they can help with security and identifying threats. And finally, I’ll look at how artificial intelligence may play a role in your log analysis.

How To Identify Security Threats Through Logs

Logs are essential for the early identification of security threats. Here’s how:

Identifying and Mitigating Threats

Logs are a gold mine of real-time information that your team can use to its advantage. With dashboards, visualizations, metrics, and alerts set up to monitor your logs, you can effectively identify and mitigate threats.

In practice, I’ve used both Sumo Logic and the ELK stack (Elasticsearch, Logstash, and Kibana, often paired with Beats for shipping logs).

These tools can help your security practice by allowing you to:

- Establish a baseline of behavior and quickly identify anomalies in service or application behavior. Look for things like unusual access times, spikes in data access, or logins from unexpected areas of the world.

- Monitor access to your systems for unexpected connections. Watch for frequent and unusual access to critical resources.

- Watch for unusual outbound traffic that might signal data exfiltration.

- Watch for specific types of attacks, such as SQL injection or DDoS. For example, I monitor how rate-limiting deals with a burst of requests from the same device or IP using Sumo Logic’s Cloud Infrastructure Security.

- Watch for changes to highly critical files. Is someone tampering with config files?

- Create and monitor audit trails of user activity. This forensic information can help you to trace what happened with suspicious—or malicious—activities.

- Closely monitor authentication/authorization logs for frequent failed attempts.

- Cross-reference logs to watch for complex, cross-system attacks, such as supply chain attacks or man-in-the-middle (MITM) attacks.
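As a rough illustration of the failed-login check from the list above, here is a minimal Python sketch that flags source IPs with a burst of failed authentication attempts inside a sliding time window. The event tuple layout, the function name `find_failed_login_bursts`, and the defaults of 5 failures in 5 minutes are assumptions made for this example, not settings from Sumo Logic or the ELK stack.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def find_failed_login_bursts(events, threshold=5, window=timedelta(minutes=5)):
    """Return the set of source IPs with at least `threshold` failed logins
    inside any sliding `window`.

    `events` is an iterable of (timestamp, source_ip, succeeded) tuples and is
    assumed to be sorted by timestamp (a hypothetical, pre-parsed format).
    """
    recent = defaultdict(deque)  # source_ip -> timestamps of recent failures
    flagged = set()
    for ts, ip, succeeded in events:
        if succeeded:
            continue
        failures = recent[ip]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(ip)
    return flagged


# Example usage with made-up events (documentation-range IP addresses).
start = datetime(2024, 1, 1, 12, 0, 0)
sample_events = [(start + timedelta(seconds=30 * i), "203.0.113.7", False) for i in range(5)]
suspicious = find_failed_login_bursts(sample_events)
```

In a real pipeline the same rule would more likely live in your log platform's query or alerting language; the point here is only the shape of the logic.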

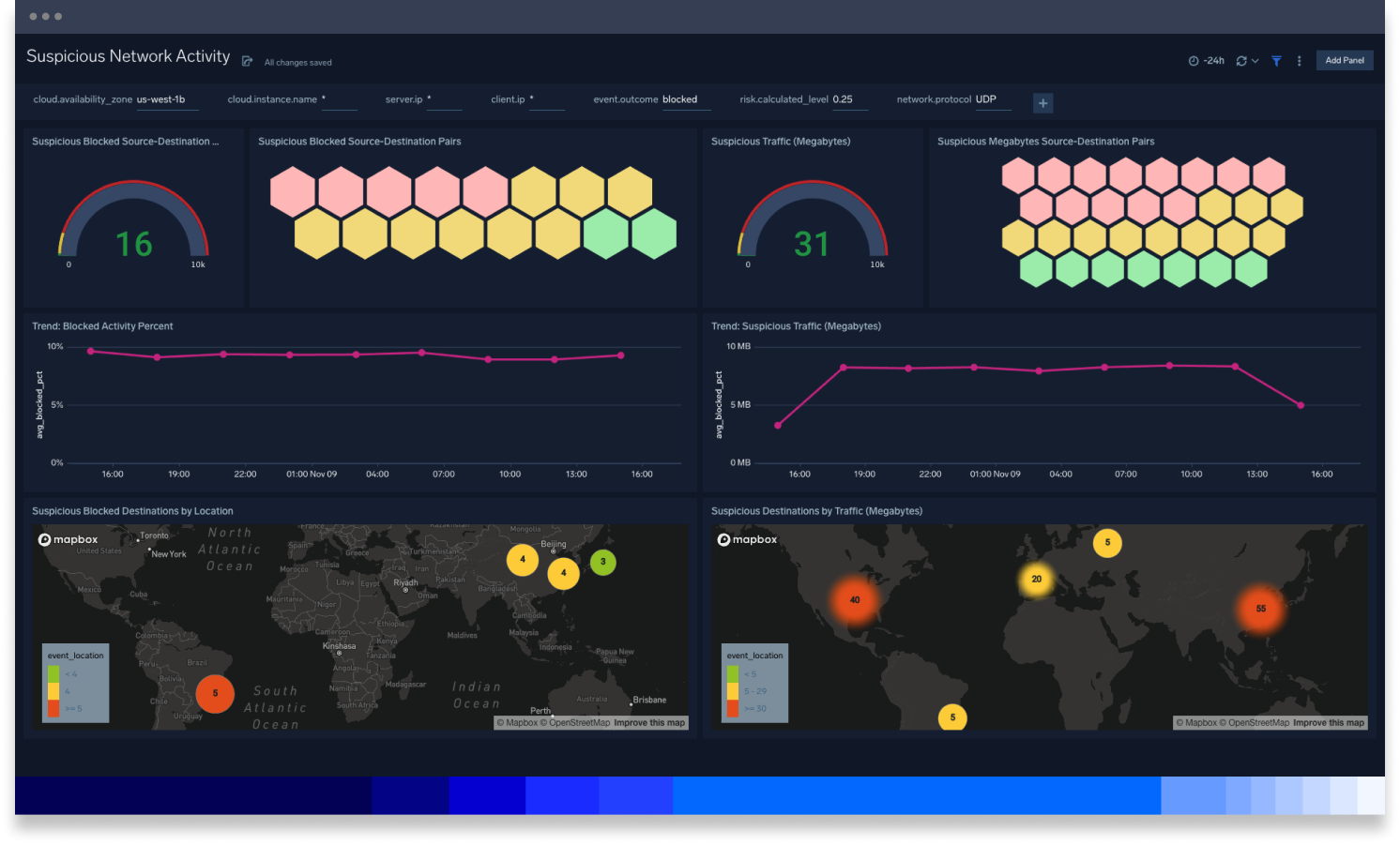

Using a Sumo Logic dashboard of logs, metrics, and traces to track down security threats

It’s also best practice to set up alerts so that you see issues early, giving you the lead time needed to deal with any threat. The best tools are also infrastructure-agnostic and can run in any number of hosting environments.

Insights for Future Security Measures

Logs help you with more than just looking into the past to figure out what happened. Insights from log data can also help your team shape its security strategy going forward.

- Benchmark your logs against your industry to help identify gaps that may cause issues in the future.

- Hunt through your logs for signs of subtle IOCs (indicators of compromise).

- Define rules and behavior patterns that you can run against your logs to respond in real time to new threats.

- Use predictive modeling to anticipate future attack vectors based on current trends.

- Detect outliers in your datasets to surface suspicious activities.
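To make the outlier-detection idea concrete, here is a minimal sketch that uses the median absolute deviation (a "modified z-score") to flag unusual values in a series of per-interval counts derived from logs. The function name, the sample numbers, and the cutoff of 3.5 are illustrative assumptions; production tools typically use more sophisticated, seasonality-aware models.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Return the indexes of values whose modified z-score exceeds `threshold`.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a single extreme value does not distort the baseline the way it
    would with a mean and standard deviation.
    """
    med = median(values)
    deviations = [abs(v - med) for v in values]
    mad = median(deviations)
    if mad == 0:
        # At least half the values are identical; this sketch declines to score.
        return []
    return [i for i, d in enumerate(deviations) if 0.6745 * d / mad > threshold]


# Hypothetical requests-per-minute counts pulled from access logs.
requests_per_minute = [120, 118, 125, 122, 119, 121, 950, 123]
spikes = mad_outliers(requests_per_minute)  # -> [6]
```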

What to Log... and How Much to Log

So we know we need to use logs to identify threats both present and future. But to be the most effective, what should we log?

The short answer is—everything! You want to capture everything you can, all the time. When you’re first getting started, it may be tempting to try to triage logs, guessing as to what is important to keep and what isn’t. But logging all events as they happen and putting them in the right repository for analysis later is often your best bet.

In terms of log data, more is almost always better.

But of course, this presents challenges.

Who’s Going To Pay for All These Logs?

Retaining all those logs can be very expensive. And it’s stressful to think about how much it will cost to store all of this data when you just throw it in an S3 bucket for review later. For example, ingesting 100 GB of log data per day into the ELK stack on AWS could create an annual cost of hundreds of thousands of dollars.

This often leads to developers “self-selecting” what they think is, and isn’t, important to log.

Your first option is to be smart and proactive in managing your logs. This can work for tools such as the ELK stack, as long as you follow some basic rules:

- Prioritize logs by classification: Figure out which logs are the most important, classify them as such, and then be more verbose with those logs.

- Rotate logs: Figure out how long you typically need logs and then rotate them off servers. You probably only need debug logs for a matter of weeks, but access logs for much longer.

- Log sampling: Only log a sampling of high-volume services. For example, log just a percentage of access requests but log all error messages.

- Filter logs: Pre-process all logs to remove unnecessary information, condensing their size before storing them.

- Alert-based logging: Configure alerts based on triggers or events that subsequently turn logging on or make your logging more verbose.

- Use tier-based storage: Store more recent logs on faster, more expensive storage. Move older logs to cheaper, slow storage. For example, you can archive old logs to Amazon S3.
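The log sampling rule above can be sketched with Python's standard `logging` module. This is one possible approach, not a recommendation from any particular vendor: the `SamplingFilter` class name, the `access` logger name, and the 10% sample rate are all assumptions chosen for the example.

```python
import logging
import random


class SamplingFilter(logging.Filter):
    """Keep every WARNING-or-higher record, but only a fraction of the rest."""

    def __init__(self, sample_rate, rng=None):
        super().__init__()
        if not 0.0 <= sample_rate <= 1.0:
            raise ValueError("sample_rate must be between 0 and 1")
        self.sample_rate = sample_rate
        self._rng = rng or random.Random()

    def filter(self, record):
        # Never drop warnings or errors; those are the records you will want later.
        if record.levelno >= logging.WARNING:
            return True
        # Keep roughly `sample_rate` of lower-severity records.
        return self._rng.random() < self.sample_rate


# Hypothetical logger for a high-volume access log: keep about 10% of
# routine records and every warning or error.
access_logger = logging.getLogger("access")
access_logger.addFilter(SamplingFilter(sample_rate=0.1))
```

Sampling at the source reduces volume, but it also means the dropped records are gone for good, which is exactly the guesswork trade-off this section describes.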

These are great steps, but unfortunately, they can involve a lot of work and a lot of guesswork. You often don’t know what you need from the logs until after the fact.

A second option is to use a tool or service that offers flat-rate pricing; for example, Sumo Logic’s $0 ingest. With this type of service, you can stream all of your logs without worrying about overwhelming ingest costs. Instead of billing per GB ingested, this plan bills based on the analytics and insights you derive from that data. You can log everything and pay just for what you need to get out of your logs.

In other words, you are free to log it all!

Looking Forward: The Role of AI in Automating Log Analysis

The right tool or service, of course, can help you make sense of all this data. And the best of these tools work pretty well.

The obvious new addition to that toolbox is AI. With data that is formatted predictably, we can apply classification algorithms and other machine-learning techniques to find out exactly what we want to know about our application.

AI can:

- Automate repetitive tasks like data cleaning and pre-processing

- Perform automated anomaly detection to alert on abnormal behaviors

- Automatically identify issues and anomalies faster and more consistently by learning from historical log data

- Identify complex patterns quickly

- Use large amounts of historical data to more accurately predict future security breaches

- Reduce alert fatigue by cutting false positives, while also missing fewer real threats (false negatives)

- Use natural language processing (NLP) to parse and understand logs

- Quickly integrate and parse logs from multiple, disparate systems for a more holistic view of potential attack vectors
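Before any model can learn from logs, free-form lines usually have to be grouped into a predictable shape. The sketch below is a deliberately simple, regex-based stand-in for that pre-processing step, not a machine-learning technique in itself: it masks variable parts of each line (IP addresses, hex values, numbers) to produce a template, then reports the templates that appear rarely. The mask patterns, function names, and sample lines are assumptions for illustration only.

```python
import re
from collections import Counter

# Order matters: mask IP addresses before bare numbers.
_MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\b\d+\b"), "<NUM>"),
]


def to_template(line):
    """Replace variable tokens so that similar log lines collapse to one template."""
    for pattern, token in _MASKS:
        line = pattern.sub(token, line)
    return line


def rare_templates(lines, max_count=1):
    """Return templates seen at most `max_count` times, in first-seen order."""
    counts = Counter(to_template(line) for line in lines)
    return [template for template, n in counts.items() if n <= max_count]


# Made-up log lines for the example.
sample_lines = [
    "Failed login for user id 42 from 10.0.0.5",
    "Failed login for user id 77 from 10.0.0.9",
    "Failed login for user id 13 from 192.168.1.20",
    "Config file changed by user id 7",
]
unusual = rare_templates(sample_lines)
```

Template counts like these are the kind of predictable, structured input that classification or anomaly-detection models can then work with.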

AI probably isn’t coming for your job, but it will probably make your job a whole lot easier.

Conclusion

Log data is one of the most valuable and readily available means of securing and operating your applications. It can help guard against both current and future attacks. And for log data to be of the most use, you should log as much information as you can. The last thing you want during a security crisis is to find out you didn’t log the information you need.

Opinions expressed by DZone contributors are their own.