Lightweight Kubernetes k3s: Installation and Spring Application Example in Azure Cloud

Take a look at this fascinating project that puts Kubernetes technology closer to the edge than ever.


Introduction

k3s is a lightweight Kubernetes distribution by Rancher Labs. Currently in the early stages of development, the distribution aims to support the deployment of complex software to smaller IT facilities, so-called "edge data centers." In this article, we will demonstrate how to install k3s in an Ubuntu virtual machine (VM) in the Microsoft Azure cloud and how to deploy an example application developed with the Spring framework in the k3s environment.

The background information below is based on a recent Rancher Labs online meetup, "k3s: The Lightweight Kubernetes Distribution Built for the Edge." For additional details, see the presentation slides and the video recording of the meetup.

Background Information

An edge data center (also see this article) is typically characterized by a small hardware footprint, a small number of users, and proximity between the data center and its users. A major advantage of edge data centers is that they respond faster than their centralized counterparts. Because of the reduced latency, they are well suited to delivering cached streaming content to local users or serving as clearinghouses for time-sensitive data generated and used by IoT devices.

There is an increasing demand to use Kubernetes for the deployment and management of containerized software in edge data centers. However, the infrastructure at the edge poses specific challenges. In particular:

- Most Kubernetes distributions do not support ARM-based hardware, although ARM technology is widely used in edge computing centers due to its energy-efficient design.
- The high memory consumption of Kubernetes (up to 4GB in some instances) may be prohibitive for data centers with restricted hardware resources.

k3s is a streamlined Kubernetes distribution developed by Rancher Labs to address these and similar challenges. k3s:

- has been built for production operations (it is not tailored toward development);
- consumes roughly 512MB of memory for single-cluster operation and 40MB to 70MB of memory for a worker node;
- is distributed as a single downloadable binary of about 40MB;
- runs as a single process that integrates the Kubernetes master, the kubelet, and SQLite (as an alternative to etcd);
- supports the x86_64, ARM64, and ARMv7 architectures.

In k3s, the following have been removed from the core Kubernetes distribution:

- alpha features;
- in-tree cloud provider and storage driver code (in-tree meaning code that resides in the core Kubernetes repository), since k3s does not aim for deep integration with cloud providers;
- most legacy features (those that are already deprecated or about to be deprecated);
- some non-default features that cannot be turned on in a cloud-hosted Kubernetes environment, e.g. Amazon EKS or Azure AKS;
- Docker, in favor of containerd (however, k3s can still be run with Docker as an optional component).

Organization of the Article

In the next section, we will discuss the steps to install k3s in an Ubuntu VM in the Azure cloud. In the section after that, we will develop a simple application with the Spring framework to run within k3s. Next, we will deploy and run the application in a k3s pod. Finally, we will give some concluding remarks.

This git repository contains all the code used in the sample application.

Installing k3s

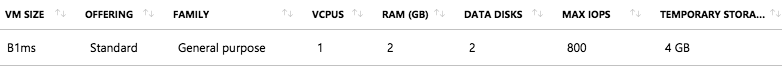

To demonstrate the installation, we first created a relatively low-end Ubuntu 18.04.1 VM in the Azure cloud with a single-core CPU, 2GB of memory, and a 4GB HDD. In Azure terminology, that is a "Standard B1ms" server.

Figure. 'Standard B1ms' server.

The VM is named VMUbuntuOne, and on it we created a user named adminUser with sudo privileges. After logging on to the server as adminUser, let us create a downloads folder:

cd ~
mkdir downloads
cd downloads

Let us make sure wget is installed:

which wget

The response should read

/usr/bin/wget

Now, download k3s (a single executable binary):

wget https://github.com/rancher/k3s/releases/download/v0.3.0/k3s

After wget completes the download, the k3s binary should be under ~/downloads. Let us make it executable and then move it to /bin:

chmod +x k3s
sudo mv k3s /bin

Because /bin is already included in PATH,

which k3s

should return

/bin/k3s

Let us start k3s in the background:

sudo k3s server &

Follow the start-up logs. After the message "k3s is up and running" appears, run a basic test:

sudo k3s kubectl --all-namespaces=true get all

which should display something like the following:

NAMESPACE     NAME                                READY   STATUS      RESTARTS   AGE
kube-system   pod/coredns-7748f7f6df-7x9dh        1/1     Running     0          20m
kube-system   pod/helm-install-traefik-dn45f      0/1     Completed   0          20m
kube-system   pod/svclb-traefik-5654767c9-j6mtj   2/2     Running     0          20m
kube-system   pod/traefik-5cc8776646-mfpjd        1/1     Running     0          20m

NAMESPACE     NAME                 TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
default       service/kubernetes   ClusterIP      10.43.0.1       <none>        443/TCP                      20m
kube-system   service/kube-dns     ClusterIP      10.43.0.10      <none>        53/UDP,53/TCP,9153/TCP       20m
kube-system   service/traefik      LoadBalancer   10.43.214.152   10.0.1.4      80:32496/TCP,443:30137/TCP   20m

NAMESPACE     NAME                            READY   UP-TO-DATE   AVAILABLE   AGE
kube-system   deployment.apps/coredns         1/1     1            1           20m
kube-system   deployment.apps/svclb-traefik   1/1     1            1           20m
kube-system   deployment.apps/traefik         1/1     1            1           20m

NAMESPACE     NAME                                      DESIRED   CURRENT   READY   AGE
kube-system   replicaset.apps/coredns-7748f7f6df        1         1         1       20m
kube-system   replicaset.apps/svclb-traefik-5654767c9   1         1         1       20m
kube-system   replicaset.apps/traefik-5cc8776646        1         1         1       20m

NAMESPACE     NAME                             COMPLETIONS   DURATION   AGE
kube-system   job.batch/helm-install-traefik   1/1           34s        20m

Also, let us look at our node by entering

sudo k3s kubectl get node

which should display something like this:

NAME          STATUS   ROLES    AGE   VERSION
vmubuntuone   Ready    <none>   21m   v1.13.5-k3s.1

Congratulations! We have installed k3s and started the server. It is that simple!

Spring Application

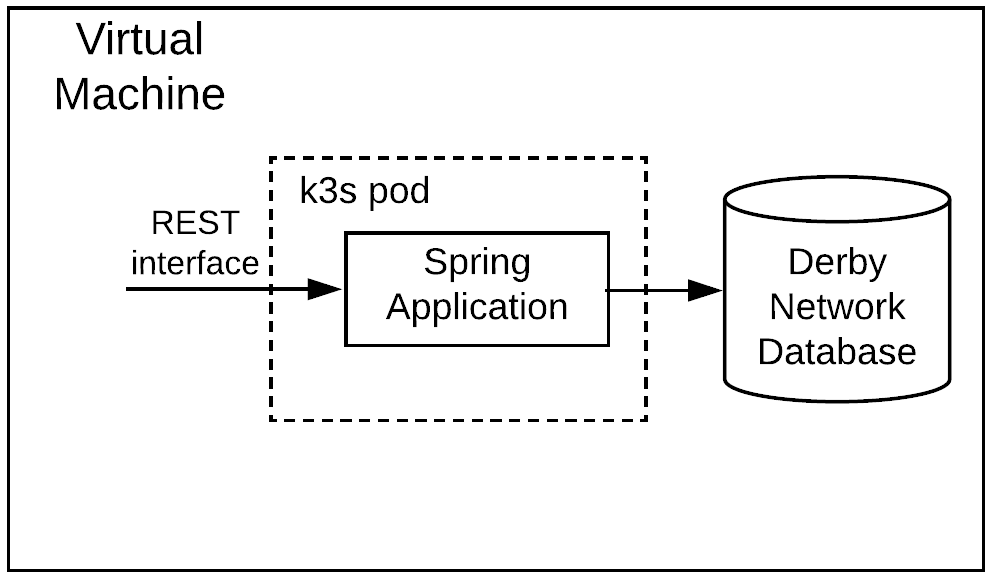

Let us now develop a simple application with the Spring framework and deploy it in k3s. Our application will provide a REST (representational state transfer) interface to a Derby network database. For the sake of the example, we will assume that a table in the database stores key/value pairs that serve as configuration parameters for various software components, and that our Spring-based application, named configuration-service, provides a web front end to access that table.

The application will be deployed inside a k3s pod in our VM, where the VM will also host the database as shown in the diagram below.

Figure. Sample application running in k3s pod.

The relational database has a table called PARAMETERS with two columns:

- configkey VARCHAR(128) PRIMARY KEY
- configvalue VARCHAR(512)

The Spring application has the following class model (similar to the microservice pattern we followed in a recent article):

Figure. Class model.

The rest package is the entry point for web service calls. It consists of ConfigApplication, which performs basic application setup, and ConfigResource, which generates the responses to the various REST calls.

The dao (data access objects) and dao.entities packages make up the data access layer. ConfigParamEntity is an object representation of a key/value pair in the PARAMETERS table, and ConfigRepository specifies methods to create, view, and update key/value pairs. An example representation of a key/value pair in JSON (JavaScript Object Notation) format is as follows:

{"configKey":"key1","configValue":"somevalue"}

The ConfigService in the service package serves as an intermediary between the rest and dao packages and interfaces with the ConfigRepository to create, view, and update key/value pairs according to the requests coming from its client, ConfigResource.

The model package consists of ConfigParam, which has the same attributes as ConfigParamEntity. However, while ConfigParamEntity is persistence-aware, ConfigParam is a plain Java bean.

The rest package accepts input data and generates output data, both in JSON format. Input data from the web service calls is mapped to ConfigParam objects in the rest package. These objects are converted to ConfigParamEntity objects in the service package before the requests are forwarded to the dao package. Conversely, a response from the dao package in the form of ConfigParamEntity objects is transformed back into ConfigParam objects in the service package.

The design above implements the Model and the Controller of the Model-View-Controller pattern. The View is omitted for simplicity. The REST interface will be tested with curl calls, as shown later.
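To make this data flow concrete, here is a minimal, Spring-free sketch of the two conversions the service layer performs. The classes ParamDto, ParamEntity, and ParamMapper are hypothetical stand-ins, assumed only for illustration. ParamDto plays the role of ConfigParam, and ParamEntity plays the role of ConfigParamEntity without its JPA annotations. The article's real classes appear in the code review that follows.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical stand-in for ConfigParam: a plain bean with no persistence awareness.
class ParamDto {
    final String configKey;
    final String configValue;

    ParamDto(String configKey, String configValue) {
        this.configKey = configKey;
        this.configValue = configValue;
    }
}

// Hypothetical stand-in for ConfigParamEntity, with the JPA annotations left out.
class ParamEntity {
    String configKey;
    String configValue;
}

final class ParamMapper {
    private ParamMapper() {}

    // rest -> dao direction: copy the model object's fields into a new entity.
    static ParamEntity toEntity(ParamDto dto) {
        ParamEntity entity = new ParamEntity();
        entity.configKey = dto.configKey;
        entity.configValue = dto.configValue;
        return entity;
    }

    // dao -> rest direction: copy the entity's fields back into a model object.
    static ParamDto toDto(ParamEntity entity) {
        return new ParamDto(entity.configKey, entity.configValue);
    }

    // Converts a whole query result, the way a findAll() response would be handled.
    static List<ParamDto> toDtos(List<ParamEntity> entities) {
        return entities.stream().map(ParamMapper::toDto).collect(Collectors.toList());
    }
}
```

Keeping the two representations separate means the JSON shape exposed by the rest package does not depend on persistence annotations. The cost is the field-by-field copying shown above.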

Technology Components

The application uses the following main components:

- Spring Boot 2.1.2
- Spring Cloud Greenwich
- Spring Data JPA 2.1.4
- Spring ORM 5.1.4

We will also use the Apache Derby network database, version 10.14.2.0, with client version 10.10.1.1. The development environment is macOS.

Code Review

The file system structure for the application development environment is as follows.

- Dockerfile
- pom.xml
- src
-- main
--- java
---- org
----- k3sexamples
------ configuration
------- dao
-------- ConfigRepository.java
-------- entities
--------- ConfigParamEntity.java
------- model
-------- ConfigParam.java
------- rest
-------- ConfigApplication.java
-------- ConfigResource.java
------- service
-------- ConfigService.java
--- resources
---- configuration-application.yaml
---- logback.xml

ConfigParamEntity.java

This file represents an entity class corresponding to the PARAMETERS table. We rely on the Spring Data JPA framework for the implicit definition of findAll and findById queries.

package org.k3sexamples.configuration.dao.entities;

import java.io.Serializable;
import javax.persistence.Column;
import javax.persistence.Entity;
import javax.persistence.Id;
import javax.persistence.Table;

@Entity
@Table(name = "parameters")
public class ConfigParamEntity implements Serializable {

    private static final long serialVersionUID = 1L;

    @Id
    @Column(nullable = false, length = 128, name = "configkey")
    protected String configKey;

    @Column(nullable = false, length = 512, name = "configvalue")
    protected String configValue;

    public String getConfigKey() {
        return configKey;
    }

    public void setConfigKey(String configKey) {
        this.configKey = configKey;
    }

    public String getConfigValue() {
        return configValue;
    }

    public void setConfigValue(String configValue) {
        this.configValue = configValue;
    }
}

ConfigRepository.java

This interface encapsulates operations against the PARAMETERS table: finding all key/value pairs, creating a new key/value pair, and updating an existing one. The interface extends JpaRepository from the Spring Data JPA framework. Because JpaRepository takes care of all these operations behind the scenes, we don't need to declare any methods. In particular, JpaRepository provides a built-in save method that inserts or updates a record in the PARAMETERS table (see its usage in ConfigService.java below).

package org.k3sexamples.configuration.dao;

import org.k3sexamples.configuration.dao.entities.ConfigParamEntity;
import org.springframework.data.jpa.repository.JpaRepository;
import org.springframework.stereotype.Repository;
import org.springframework.transaction.annotation.Transactional;

// The second type parameter is the ID type; it must match the entity's @Id field (String configKey).
@Repository
@Transactional
public interface ConfigRepository extends JpaRepository<ConfigParamEntity, String> {
}
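Because configkey is the primary key, calling save with an existing key updates the stored value rather than adding a second row. The sketch below imitates that insert-or-update behavior in memory so that it runs without a database. InMemoryConfigRepository is hypothetical and does not show how Spring Data JPA is implemented; its save delegates to the JPA EntityManager (persist or merge). The sketch uses String keys to match the entity's @Id type.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical in-memory stand-in for the save/findById/findAll methods that
// JpaRepository<ConfigParamEntity, String> provides for the PARAMETERS table.
class InMemoryConfigRepository {
    // Keyed by configKey, mirroring the PRIMARY KEY on the configkey column.
    private final Map<String, String> rows = new LinkedHashMap<>();

    // Inserts when the key is new; otherwise overwrites the existing value.
    void save(String configKey, String configValue) {
        rows.put(configKey, configValue);
    }

    Optional<String> findById(String configKey) {
        return Optional.ofNullable(rows.get(configKey));
    }

    // Returns "key=value" strings in insertion order.
    List<String> findAll() {
        List<String> result = new ArrayList<>();
        rows.forEach((k, v) -> result.add(k + "=" + v));
        return result;
    }
}
```

The LinkedHashMap keeps insertion order only to make the sketch predictable. A real findAll() call without a Sort argument does not guarantee any particular order.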

ConfigParam.java

This class represents a key/value model. It has the same attributes as the ConfigParamEntity class but carries no persistence annotations.

package org.k3sexamples.configuration.model;

public class ConfigParam {

    protected String configKey;

    protected String configValue;

    public String getConfigKey() {
        return configKey;
    }

    public void setConfigKey(String configKey) {
        this.configKey = configKey;
    }

    public String getConfigValue() {
        return configValue;
    }

    public void setConfigValue(String configValue) {
        this.configValue = configValue;
    }
}

ConfigService.java

This class performs service layer operations by invoking the appropriate methods on ConfigRepository. The rest package interfaces directly with this class rather than with ConfigRepository. Because ConfigService is aware of both the ConfigParam and ConfigParamEntity classes, it also carries out the necessary transformations between them.

The getAllConfigurationParameters method returns all records in the database. The transformToConfigParamEntity and transformToConfigParam methods provide transformations between the ConfigParam and ConfigParamEntity classes.

The setConfigParam method creates a new key/value pair or updates the value if the key already exists. This method utilizes the built-in save method supplied by JpaRepository.

package org.k3sexamples.configuration.service;

import org.k3sexamples.configuration.dao.ConfigRepository;
import org.k3sexamples.configuration.dao.entities.ConfigParamEntity;
import org.k3sexamples.configuration.model.ConfigParam;
import org.springframework.stereotype.Service;
import org.springframework.beans.factory.annotation.Autowired;

import java.util.Collection;
import java.util.stream.Collectors;

@Service
public class ConfigService {

    protected ConfigRepository configRepository;

    @Autowired
    public ConfigService(ConfigRepository configRepository) {
        this.configRepository = configRepository;
    }

    public Collection<ConfigParam> getAllConfigurationParameters() {
        return configRepository.findAll().stream()
                .map(this::transformToConfigParam)
                .collect(Collectors.toList());
    }

    public void setConfigParam(ConfigParam configParam) {
        configRepository.save(transformToConfigParamEntity(configParam));
    }

    private ConfigParamEntity transformToConfigParamEntity(ConfigParam configParam) {
        ConfigParamEntity configParamEntity = new ConfigParamEntity();
        configParamEntity.setConfigKey(configParam.getConfigKey());
        configParamEntity.setConfigValue(configParam.getConfigValue());
        return configParamEntity;
    }

    private ConfigParam transformToConfigParam(ConfigParamEntity configParamEntity) {
        ConfigParam configParam = new ConfigParam();
        configParam.setConfigKey(configParamEntity.getConfigKey());
        configParam.setConfigValue(configParamEntity.getConfigValue());
        return configParam;
    }
}

ConfigResource.java

This is a controller class that accepts REST calls and translates them into commands for ConfigService. The class-level URI path /configuration precedes any segment defined at the method level. The methods getConfigParams and setConfigParam are mapped to the REST calls configuration/configParameters (GET) and configuration/setConfigParam (POST), respectively, and delegate each call to ConfigService.

package org.k3sexamples.configuration.rest;

import org.k3sexamples.configuration.model.ConfigParam;
import org.k3sexamples.configuration.service.ConfigService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RestController;

import java.util.Collection;

@RestController
@RequestMapping(value = "/configuration")
public class ConfigResource {

    // Injected once through the constructor below, so no field-level @Autowired is needed.
    protected final ConfigService service;

    @Autowired
    public ConfigResource(ConfigService service) {
        this.service = service;
    }

    // GET /configuration/configParameters: returns all key/value pairs as JSON.
    @RequestMapping(value = "/configParameters", produces = { "application/json" }, method = { RequestMethod.GET })
    public Collection<ConfigParam> getConfigParams() {
        return service.getAllConfigurationParameters();
    }

    // POST /configuration/setConfigParam: creates or updates a single key/value pair.
    @RequestMapping(value = "/setConfigParam", consumes = { "application/json" }, method = { RequestMethod.POST })
    public void setConfigParam(@RequestBody ConfigParam configParam) {
        service.setConfigParam(configParam);
    }
}
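A full endpoint URL consists of the servlet context path (/k3sSpringExample, set in configuration-application.yaml), then the class-level /configuration mapping, and then the method-level path. The helper below is a sketch that composes those segments and builds the same POST and GET requests that the curl calls later in the article send. ConfigEndpoints is a hypothetical name. The code assumes Java 11 or later for java.net.http, even though the application itself targets Java 8. The host and port are placeholders, and nothing is sent over the network unless the requests are passed to an HttpClient.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper; paths mirror the context path and @RequestMapping values in the article.
class ConfigEndpoints {
    static final String CONTEXT_PATH = "/k3sSpringExample"; // server.servlet.context-path
    static final String BASE_MAPPING = "/configuration";     // class-level @RequestMapping

    // host:port + context path + class-level mapping + method-level mapping
    static URI endpoint(String host, int port, String methodPath) {
        return URI.create("http://" + host + ":" + port + CONTEXT_PATH + BASE_MAPPING + methodPath);
    }

    // Same request as: curl -d '<json>' -H "Content-Type: application/json" -X POST .../setConfigParam
    static HttpRequest setConfigParam(String host, int port, String key, String value) {
        // Naive JSON building; assumes key and value contain no quotes or backslashes.
        String json = "{\"configKey\":\"" + key + "\",\"configValue\":\"" + value + "\"}";
        return HttpRequest.newBuilder(endpoint(host, port, "/setConfigParam"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    // Same request as: curl .../configParameters
    static HttpRequest configParameters(String host, int port) {
        return HttpRequest.newBuilder(endpoint(host, port, "/configParameters")).GET().build();
    }
}
```

To actually send a request, you could use HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()) from a machine that can reach the pod IP, for example the VM itself.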

ConfigApplication.java

As the main entry point for the application, this class performs various setup tasks. In particular, @EntityScan and @EnableJpaRepositories designate the base packages for JPA entities and repositories, respectively. @SpringBootApplication scans only the rest package, where this class resides, and its subpackages. ConfigService, which lives in the sibling service package, is therefore not picked up by component scanning. For that reason, we declare the ConfigRepository instance, to be auto-injected by Spring, and define the service() method to create the ConfigService and return it. The class also contains the main entry method for the application, which sets the name of the configuration file (configuration-application.yaml, reviewed later).

package org.k3sexamples.configuration.rest;

import org.k3sexamples.configuration.dao.ConfigRepository;
import org.k3sexamples.configuration.service.ConfigService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.autoconfigure.domain.EntityScan;
import org.springframework.context.annotation.Bean;
import org.springframework.data.jpa.repository.config.EnableJpaRepositories;

@SpringBootApplication
@EntityScan("org.k3sexamples.configuration.dao.entities")
@EnableJpaRepositories("org.k3sexamples.configuration.dao")
public class ConfigApplication {

    @Autowired
    protected ConfigRepository configRepository;

    public static void main(String[] args) {
        System.setProperty("spring.config.name", "configuration-application");
        SpringApplication.run(ConfigApplication.class, args);
    }

    @Bean
    public ConfigService service() {
        return new ConfigService(configRepository);
    }
}

logback.xml: This is a simple log configuration file. Details are omitted. Please see the git repository that contains all the files discussed in this article.

configuration-application.yaml: This configuration file is referenced by ConfigApplication.java, as discussed above. The environment variables DB_HOST, DB_PORT, and SERVER_PORT correspond to the database server host, the database server port, and the port number the application listens on. These environment variables are set for the container by the k3s deployment file, as discussed below. The database user name and password are hardcoded in the file, which is acceptable for our purposes. However, a real enterprise application would supply them in a more secure way, e.g. as environment variables specific to the development, QA, and production environments.

The application context path is defined as k3sSpringExample, which will precede any web service endpoints exposed by the application.

spring:
  application:
    name: configuration-service
  datasource:
    url: jdbc:derby://${DB_HOST}:${DB_PORT}/ConfigDB;create=false
    username: demo
    password: demopwd
    driver-class-name: org.apache.derby.jdbc.ClientDriver
  jpa:
    hibernate:
      ddl-auto: none
# HTTP Server
server:
  servlet:
    context-path: /k3sSpringExample
  port: ${SERVER_PORT}

Dockerfile

Our image is built on the openjdk:8-jdk-alpine image. The application JAR, passed in through the JAR_FILE build argument, is copied to /app.jar and run as an executable JAR.

FROM openjdk:8-jdk-alpine
VOLUME /tmp
ARG JAR_FILE
COPY ${JAR_FILE} app.jar
ENTRYPOINT ["java","-jar","/app.jar"]

pom.xml

The Maven coordinates of our application are org.k3s.demo:configuration:1.0. We use the Spring Boot 2.1.2 and Spring Cloud Greenwich releases.

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.1.2.RELEASE</version>
    </parent>
    <groupId>org.k3s.demo</groupId>
    <artifactId>configuration</artifactId>
    <version>1.0</version>
    <properties>
        <start-class>org.k3sexamples.configuration.rest.ConfigApplication</start-class>
    </properties>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>Greenwich.RELEASE</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    ...

The pom.xml file continues with the Spring, Spring Data Commons, Spring Cloud, and Spring Data JPA dependencies. Finally, we declare the dependencies for the Derby database.

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.data</groupId>
            <artifactId>spring-data-commons</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <!-- Test-only dependency; keeps the test libraries out of the packaged JAR -->
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-jpa</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.derby</groupId>
            <artifactId>derby</artifactId>
            <version>10.14.2.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.derby</groupId>
            <artifactId>derbyclient</artifactId>
            <version>10.10.1.1</version>
        </dependency>
    </dependencies>

Lastly, we provide the build plugins. The first one is the Spring Boot Maven plugin, whose repackage goal produces an executable JAR. The other one is the Dockerfile Maven plugin from Spotify. Note that konuratdocker/spark-examples is the name of my personal Docker repository; replace it with yours if you intend to run these examples. We tag the Docker image configuration-service.

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <executions>
                    <execution>
                        <goals>
                            <goal>repackage</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>com.spotify</groupId>
                <artifactId>dockerfile-maven-plugin</artifactId>
                <version>1.3.6</version>
                <configuration>
                    <tag>configuration-service</tag>
                    <repository>konuratdocker/spark-examples</repository>
                    <imageTags>
                        <imageTag>configuration-service</imageTag>
                    </imageTags>
                    <buildArgs>
                        <JAR_FILE>target/${project.build.finalName}.jar</JAR_FILE>
                    </buildArgs>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>

Build and Export Image

Now, we will build the application and export the Docker image for deployment into a k3s pod. Go to the application's root folder, where pom.xml resides, and execute:

mvn clean install

followed by:

mvn install dockerfile:build -DpushImageTag

Now, if we run

docker images -a

we should see that the Docker image of our application has been created:

REPOSITORY                     TAG                     IMAGE ID       CREATED              SIZE
...
konuratdocker/spark-examples   configuration-service   58316a559f12   About a minute ago   154MB
...

Let us run the following command to export the image as a tar file:

docker save konuratdocker/spark-examples:configuration-service \
    -o configuration-service.tar

We're done with application development. Using the scp command, copy configuration-service.tar to the Ubuntu VM where k3s has been installed. For example:

scp configuration-service.tar adminUser@x.x.x.x:/home/adminUser

Deploying Application in k3s

Preparing the VM For Deployment

We're back in the Ubuntu VM where k3s has been installed. The Derby network database used by the application must be installed separately, as shown next.

Make sure unzip is installed in the VM:

which unzip

should return

/usr/bin/unzip

If unzip is not installed, you can install it via

sudo apt-get install unzip

Derby runs on Java, so we also need to install JDK 8. For that purpose, execute

sudo apt update

followed by

sudo apt install openjdk-8-jdk

After the installation is done, you can verify it by running

which java

which should return

/usr/bin/java

Then download Apache Derby:

wget http://mirror.reverse.net/pub/apache//db/derby/db-derby-10.14.2.0/db-derby-10.14.2.0-bin.zip

Move the downloaded zip file under /opt and unzip it:

sudo mv db-derby-10.14.2.0-bin.zip /opt
cd /opt
sudo unzip db-derby-10.14.2.0-bin.zip

At this point, Apache Derby has been extracted under /opt/db-derby-10.14.2.0-bin.

Because we no longer need the zip file, remove it:

sudo rm /opt/db-derby-10.14.2.0-bin.zip

Start Database

The following commands start the Apache Derby database. Because Derby is installed under /opt, we need to run these commands as root.

sudo su -
cd /opt/db-derby-10.14.2.0-bin/bin
export "DERBY_OPTS=-Dderby.connection.requireAuthentication=true \
-Dderby.authentication.provider=BUILTIN -Dderby.user.demo=demopwd"
./startNetworkServer &

Now, the database has started. We need to execute the following commands only once, in order to create the table ('ij>' indicates the prompt of the Derby ij utility).

sudo su -
cd /opt/db-derby-10.14.2.0-bin/bin
export "DERBY_OPTS=-Dderby.connection.requireAuthentication=true \
-Dderby.authentication.provider=BUILTIN -Dderby.user.demo=demopwd"
./ij
ij> connect 'jdbc:derby://localhost:1527/ConfigDB;create=true;user=demo;password=demopwd';
ij> CREATE TABLE parameters(configkey VARCHAR(128) PRIMARY KEY, configvalue VARCHAR(512));

For verification, if you execute show tables, it will list the newly created table under the demo schema. That is,

ij> show tables;
TABLE_SCHEM |TABLE_NAME |REMARKS
------------------------------------------------------------------------
...
DEMO |PARAMETERS |

You can exit the ij utility:

ij> exit;

At this point, the database has started and the PARAMETERS table has been created. You can exit the root shell.
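You can also check the table from Java instead of ij. A plain-JDBC sketch along these lines should work under the following assumptions. The Derby client JAR (derbyclient, which contains org.apache.derby.jdbc.ClientDriver) is on the classpath, so that JDBC 4 driver auto-loading can find it. If it does not, calling Class.forName("org.apache.derby.jdbc.ClientDriver") registers the driver explicitly. The network server is listening on localhost:1527 with the demo/demopwd credentials configured above. The class name DerbyCheck is hypothetical. Like the application, the sketch connects with create=false, so it fails instead of silently creating an empty ConfigDB.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical helper mirroring the ij session above.
class DerbyCheck {
    // Same URL format as configuration-application.yaml; create=false refuses to create a new database.
    static String url(String host, int port) {
        return "jdbc:derby://" + host + ":" + port + "/ConfigDB;create=false";
    }

    // Counts the rows in PARAMETERS; connecting as user demo makes DEMO the default schema.
    static int countParameters(String host, int port) throws SQLException {
        try (Connection conn = DriverManager.getConnection(url(host, port), "demo", "demopwd");
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SELECT COUNT(*) FROM parameters")) {
            rs.next();
            return rs.getInt(1);
        }
    }

    public static void main(String[] args) throws SQLException {
        System.out.println("PARAMETERS rows: " + countParameters("localhost", 1527));
    }
}
```

Right after the CREATE TABLE step, the count should be zero. It grows as the application stores key/value pairs.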

Note: When not needed, the Derby database can be stopped by executing the following commands:

sudo su -
cd /opt/db-derby-10.14.2.0-bin/bin
export "DERBY_OPTS=-Dderby.connection.requireAuthentication=true \
-Dderby.authentication.provider=BUILTIN -Dderby.user.demo=demopwd"
./stopNetworkServer -user demo -password demopwd

Deploy Docker Image

One way to deploy a Docker image in a k3s pod without a registry is to copy the exported tar file into the /var/lib/rancher/k3s/agent/images/ folder, from which k3s imports images when it starts, and then run the k3s kubectl create -f <deployment.yaml> command. For this purpose, consider the previously copied configuration-service.tar file, which we assume is in the home directory of adminUser.

Execute the following commands as adminUser to create the image folder /var/lib/rancher/k3s/agent/images/ and move the image there. (Unless otherwise noted, the commands in this section should be executed as adminUser.)

sudo mkdir -p /var/lib/rancher/k3s/agent/images/
sudo mv ~/configuration-service.tar /var/lib/rancher/k3s/agent/images/

Now, if we start k3s and run the crictl command, we should be able to see the image. (If k3s processes are already running, find their process IDs, e.g. with ps aux | grep k3s, and kill them before starting k3s again.)

sudo k3s server &

After k3s starts, execute

sudo k3s crictl images

You should see that the application's image is listed:

IMAGE                                    TAG                     IMAGE ID        SIZE
...
docker.io/konuratdocker/spark-examples   configuration-service   19fa0dbd4feb8   156MB
...

Let us now create the deployment file in YAML format.

mkdir ~/config
vi ~/config/configuration-service-deployment.yaml

Inside the vi editor, type the following:

apiVersion: v1
kind: Service
metadata:
  name: database
spec:
  ports:
    - port: 1527
      targetPort: 1527
      protocol: TCP
---
kind: Endpoints
apiVersion: v1
metadata:
  name: database
subsets:
  - addresses:
      - ip: 127.0.0.1
    ports:
      - port: 1527
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: configuration-service
  name: configuration-service-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: configuration-service
  template:
    metadata:
      labels:
        app: configuration-service
    spec:
      containers:
        - name: container-service
          image: "konuratdocker/spark-examples:configuration-service"
          command:
            - java
          args:
            - "-jar"
            - /app.jar
          env:
            - name: DB_HOST
              value: database
            - name: DB_PORT
              value: "1527"
            - name: SERVER_PORT
              value: "9081"
          ports:
            - containerPort: 9081

Observe that the file consists of three sections. Starting from the top, the first two are the service definition for our database and the corresponding endpoint definition, which declare that the service is available on localhost (127.0.0.1) at port 1527. Because this service has no selector, Kubernetes does not manage its endpoints, so we supply them explicitly. (For general background, see the Kubernetes documentation on services without selectors.) The third section is the deployment definition for the actual application. Here, observe that the environment variables DB_HOST and DB_PORT are assigned values referencing the database service and its port. The SERVER_PORT environment variable and the corresponding containerPort definition indicate the port number the REST endpoint listens on. The command and args entries reproduce the java -jar /app.jar entry point from the Dockerfile. Finally, the image attribute references the Docker image we copied under /var/lib/rancher/k3s/agent/images/.
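To see which database URL the container ends up using, the sketch below performs the same ${NAME} substitution that Spring applies to the datasource URL in configuration-application.yaml. It uses the environment values from the deployment file. PlaceholderDemo is a hypothetical, simplified resolver. Spring's own placeholder handling also supports defaults such as ${NAME:default} and property sources other than the environment. Inside the pod, the resulting host name, database, is resolved by cluster DNS to the database service.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical resolver for ${NAME} placeholders; not Spring's implementation.
class PlaceholderDemo {
    // Matches ${NAME} and captures NAME; ${NAME:default} forms are not handled.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([A-Za-z_][A-Za-z0-9_]*)\\}");

    static String resolve(String template, Map<String, String> env) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = env.get(m.group(1));
            if (value == null) {
                throw new IllegalArgumentException("Undefined variable: " + m.group(1));
            }
            // quoteReplacement keeps '$' or '\' in a value from being read as group references.
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Values from the env section of configuration-service-deployment.yaml.
        Map<String, String> env = new HashMap<>();
        env.put("DB_HOST", "database");
        env.put("DB_PORT", "1527");
        env.put("SERVER_PORT", "9081");
        System.out.println(resolve("jdbc:derby://${DB_HOST}:${DB_PORT}/ConfigDB;create=false", env));
        System.out.println(resolve("${SERVER_PORT}", env));
    }
}
```

Running main prints jdbc:derby://database:1527/ConfigDB;create=false followed by 9081. Failing fast on an undefined variable mirrors Spring's default behavior, which refuses to start when a placeholder in the configuration cannot be resolved.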

Now, we can deploy the application as follows.

cd ~/config
sudo k3s kubectl create -f ./configuration-service-deployment.yaml

You should see a response as follows.

service/database created
endpoints/database created
deployment.apps/configuration-service-deployment created

If you execute

sudo k3s kubectl get pods -o wide

you should see something like

NAME                                                READY   STATUS    RESTARTS   AGE   IP           NODE          NOMINATED NODE   READINESS GATES
configuration-service-deployment-564d9694bb-f6wqn   1/1     Running   0          57s   10.42.0.21   vmubuntuone   <none>           <none>

Take note of the values in the NAME (pod name) and IP columns. The application logs can then be viewed by executing

sudo k3s kubectl logs <pod name>

for example:

sudo k3s kubectl logs configuration-service-deployment-564d9694bb-f6wqn

To test that the application is running properly, we can use the pod IP address listed above to send requests via curl:

curl -d '{"configKey":"key1","configValue":"val12"}' \
  -H "Content-Type: application/json" \
  -X POST http://10.42.0.21:9081/k3sSpringExample/configuration/setConfigParam

Now, executing

curl http://10.42.0.21:9081/k3sSpringExample/configuration/configParameters

will yield

[{"configKey":"key1","configValue":"val12"}]

Submit another key/value pair:

curl -d '{"configKey":"key2","configValue":"22"}' \
  -H "Content-Type: application/json" \
  -X POST http://10.42.0.21:9081/k3sSpringExample/configuration/setConfigParam

Now,

curl http://10.42.0.21:9081/k3sSpringExample/configuration/configParameters

will yield

[{"configKey":"key1","configValue":"val12"},{"configKey":"key2","configValue":"22"}]

Note: If you make any mistakes and want to redeploy the application, execute the following to remove the original deployment.

sudo k3s kubectl delete service/database
sudo k3s kubectl delete deployment.apps/configuration-service-deployment

Alternatively, running sudo k3s kubectl delete -f ./configuration-service-deployment.yaml from ~/config removes all three objects defined in the file (service, endpoints, and deployment) at once.

Conclusions

In this article, we demonstrated how to install k3s, a lightweight Kubernetes distribution from Rancher Labs, in an Ubuntu VM in the Microsoft Azure cloud. We also developed a sample application using the Spring framework and deployed it in a k3s pod. The application integrated with an Apache Derby network server external to the pod.

Given that the main purpose of k3s is to be lightweight, we intentionally chose a relatively low-end VM as our testbed, with a single-core CPU and 2GB of memory. Although we have not run any formal load tests to quantify performance, k3s appeared to start up quickly. The response times of the application and the database were also satisfactory while running alongside k3s.

k3s is still in the early stages of development and, from our experience so far, it is on the right track!
