Exposing Services to External Applications in Kubernetes (Part 1)

This article shows how one developer exposed his Kubernetes services to applications outside the cluster.

Kubernetes (K8s) has become the most popular production-grade container management and orchestration system. Recently, I was involved in a project where an on-premises application needed to be containerized, managed by a Kubernetes cluster, and able to connect to other apps outside the cluster.

Here, I share my experience of running a container in a local K8s cluster and the various options for connecting it to applications outside the cluster. There are many posts related to or touching on this topic, but most of them follow the minikube installation (a single-node K8s cluster), whereas my cluster was installed using kubeadm. There are certain differences between the K8s install options, as noted in the references below.

I will not delve into how to create containers and deploy them in K8s. My focus in this post will be on the networking aspect of apps inside and across the cluster, minus the heavy text found in the K8s documentation.

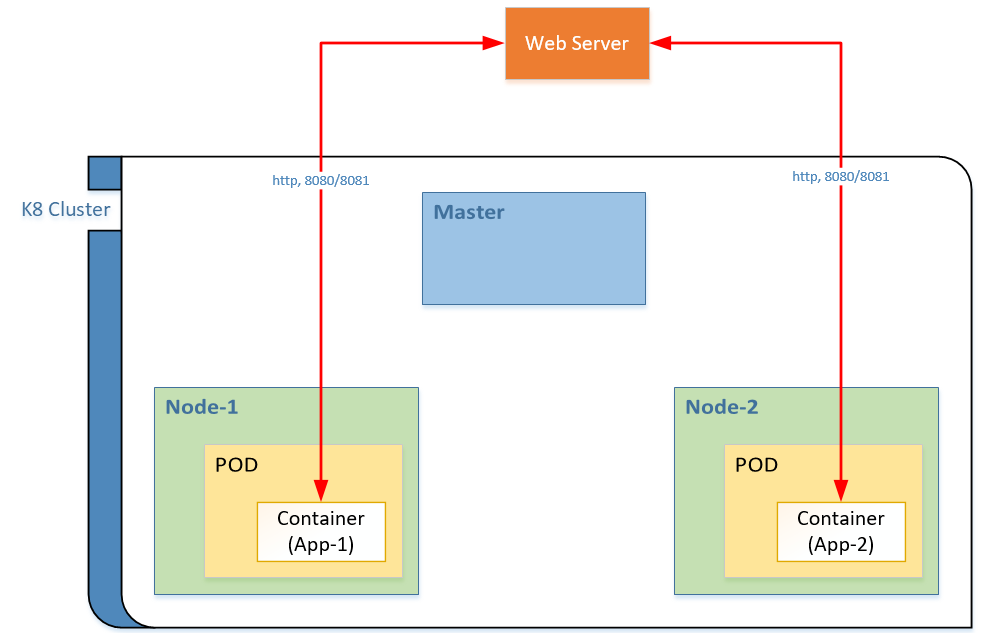

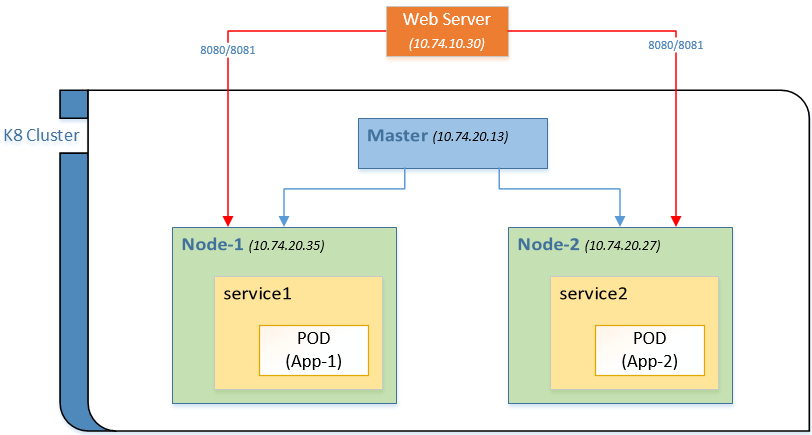

This figure shows what I want to achieve.

Our use case came with the following conditions:

- All communication from the web server to the app must use IP addresses only

- Each container is a separate instance of the same application, with no inter-pod communication

I understand that the above conditions look unusual, but given the legacy design of our application and complexity involved, we didn’t have time to reengineer (at least in phase 1).

After a few experiments, I realized that the above diagram does not represent true networking in K8s, but I will leave this diagram as it is. In later sections, I am going to share how it was improved incrementally.

Before we dive in, let us have a quick introduction to basic components in K8s. You can read them in detail here.

Container – An application binary packaged with all its dependencies but without a guest OS; it may contain a startup script or init command. In Kubernetes, the container is not always from Docker; Kubernetes supports a variety of container runtimes. In my case, I preferred using a Docker container.

Pod – Basic deployment unit in K8s, a group of one or more containers, with shared storage/network, and a specification for how to run the containers. A pod’s containers interact with each other without any external network tuning. I didn’t use a Pod directly but through a controller as recommended by many and K8s itself.

Deployment – A declarative way to create Pods. Once it is submitted, K8s works continuously to bring the cluster to the declared intent. It is recommended to supply the desired state in a YAML file. Among other things, you can specify the ports through which traffic will flow and a label that identifies each pod.
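As an illustration of the desired state described above, here is a minimal sketch of a Deployment manifest. It is not the manifest used in this project: the name `myapp-1`, the label `app: myapp-1`, and the image `example/myapp:1.0` are hypothetical placeholders, and port 8080 is taken from the traffic described later in the article.

```yaml
# Minimal Deployment sketch. All names, labels, and the image are
# hypothetical placeholders, not the values used in the article.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-1
spec:
  replicas: 1                 # one instance of the application
  selector:
    matchLabels:
      app: myapp-1            # must match the pod template labels below
  template:
    metadata:
      labels:
        app: myapp-1          # label that identifies the pod
    spec:
      containers:
        - name: myapp
          image: example/myapp:1.0
          ports:
            - containerPort: 8080   # port the application listens on
```

A file like this would typically be submitted with `kubectl apply -f`, after which the Deployment controller creates and maintains the pod.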

Service – Another high-level abstraction: it groups a set of Pods that carry a certain label and exposes them as a named unit (the service name). This was an interesting component for me because it permits exposing my app to the outside world. It also allows Pods to talk to each other by looking up the service name, either through environment variables or a DNS server.

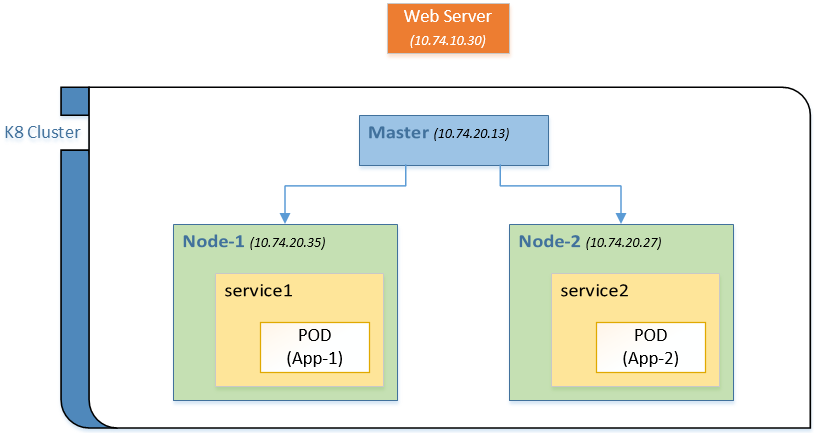

So after creating the deployment and service YAML files and applying them using kubectl, the diagram looks like this:

Each service has its own label and the pods also have unique labels, even within the same app. I have added the VM's IP address to each node but left out the private IPs of the Pods, since my use case here is not about inter-pod communication.

Now that we have the basic infrastructure in place, let me walk through the options I tried for carrying the HTTP traffic on ports 8080 and 8081 from the web server to the containers.

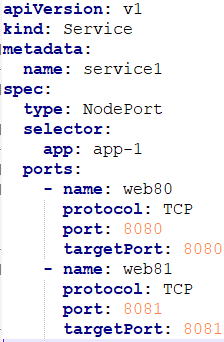

This is my service yaml file:

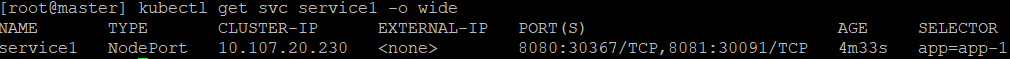

and the output of kubectl get svc:

Well, Kubernetes opened two new ports, 30367 and 30091, instead of 8080 and 8081. Port numbers allocated from the range 30000-32767 are called NodePorts. With this option, my web server cannot use 8080/8081 to connect to the apps. The cluster IP seen in the above command output is an internal IP and is not accessible from outside the cluster.
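For readers without access to the original image, a Service that behaves this way might look like the sketch below. This is an assumption about the general shape, not a copy of my file: the service name `myapp-1-svc` and the selector `app: myapp-1` are hypothetical, and only one of the two ports is shown.

```yaml
# NodePort Service sketch. The name and selector are hypothetical
# placeholders, not the values from the original manifest.
apiVersion: v1
kind: Service
metadata:
  name: myapp-1-svc
spec:
  type: NodePort              # expose the service on a port of every node
  selector:
    app: myapp-1              # routes traffic to pods carrying this label
  ports:
    - name: http
      port: 8080              # port on the internal cluster IP
      targetPort: 8080        # container port that receives the traffic
      # nodePort is omitted, so Kubernetes picks one from 30000-32767.
      # It can be set explicitly (for example, nodePort: 30080), but
      # only to a value inside the configured node port range.
```

Either way, the externally reachable port stays inside the node port range, which is why 8080 and 8081 cannot be used directly with this option.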

To solve this issue, I wanted to use specific ports. It turns out K8s has an option to override the default port range (the kube-apiserver flag --service-node-port-range), but most users discourage it for various reasons.

HostPort: To resolve the above port issue, K8s offers another option: using hostPort directly in the deployment. This option opens the port on the VM itself, and I can access my app using hostIP:hostPort. The challenge here is the tight coupling between the host IP and the app. If the pod is created on a different node, the port is still accessible, but on a different IP. It turns out K8s has a feature called nodeSelector, which lets you bind your pod to a specific node.
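The combination of hostPort and nodeSelector can be sketched as follows. Again, this is an illustrative manifest rather than the one from my cluster: the names, the image, and the node name `worker-1` are hypothetical. The `kubernetes.io/hostname` label is one that Kubernetes sets on nodes by default; a custom node label would work the same way.

```yaml
# hostPort + nodeSelector sketch. Names, image, and the node name
# "worker-1" are hypothetical placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-1
spec:
  replicas: 1                 # two pods cannot bind the same hostPort on one node
  selector:
    matchLabels:
      app: myapp-1
  template:
    metadata:
      labels:
        app: myapp-1
    spec:
      nodeSelector:
        kubernetes.io/hostname: worker-1   # pin the pod to this node
      containers:
        - name: myapp
          image: example/myapp:1.0
          ports:
            - containerPort: 8080
              hostPort: 8080  # opens port 8080 on the node (VM) itself
```

With a manifest along these lines, the app would be reachable at the pinned node's IP on port 8080, which is exactly the tight coupling between host IP and app described above.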

Now my cluster looks like this:

Now I have my HTTP traffic flowing, though it is tightly coupled to the IP address. I agree that this is not an optimal way of exposing services outside the cluster, and it will not work in cases where the VM IPs change. Also, in the options used so far, I haven't touched the master. After exploring a bit further, I found another option called Ingress, which will be the focus of the next part.
