How to Set Up Multiple JMS Pods With a Single jmsstore File per Queue in Maximo Application Suite (MAS 8)

Explore a solution to configure the MAS 8 application with a distinct JMS store file for each queue.

Problem Statement

By default, the IBM Manage JMS setup creates a single JMS store file for all queues, which effectively places all queues in one repository. This approach poses a risk: if one queue becomes corrupt and a message is affected, you would have to delete the store file for all queues to resolve the issue.

How to Overcome the Problem

We developed a solution to configure the MAS 8 application with a distinct JMS store file for each queue.

Design and Solution Implementation

In this example, I'll demonstrate the setup for four queues: cqin, cqout, sqin, and sqout. You can have more queues if they are enabled in your Maximo web.xml configuration. I will modify the IBM configuration to assign a separate JMS store file to each queue, so that a problem in one queue does not impact the others.
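
As a rough sketch of the layout this article works toward, the snippet below prints one bundle name and one file store directory per queue. The helper names bundle_name_for and store_path_for are hypothetical and only encode the naming convention used in this demo (jms plus the queue name, and /jmsstore/ plus the queue name). They are not part of MAS.

```shell
#!/bin/sh
# Hypothetical helpers that encode the naming convention used in this article.
bundle_name_for() { printf 'jms%s\n' "$1"; }        # cqin -> jmscqin
store_path_for()  { printf '/jmsstore/%s\n' "$1"; } # cqin -> /jmsstore/cqin

# One line per queue: its bundle (pod) and its own file store directory.
for queue in cqin cqout sqin sqout; do
  printf '%-6s bundle=%-9s fileStore=%s\n' \
    "$queue" "$(bundle_name_for "$queue")" "$(store_path_for "$queue")"
done
```

If you enable more queues, adding their names to the loop shows the extra bundles and directories you would need under the same convention.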

Ensure you create a Persistent Volume Claim (PVC) for your JMS store files within your MAS Core Suite application. In my case, the PVC is named jmsstore and is located at /jmsstore, with a storage size of 20GB for this demo. However, you can always allocate more storage as needed.

If you have access to OpenShift, navigate to your Manage namespace and check that the PVC has been created:

$ oc project mas-masivt810x-manage

$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

masivt810x-wkspivt810x-custom-logs Bound pvc-cba1d87d-ab9f-4213-96fa-f52a135b2863 20Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-doclink Bound pvc-981fa13b-0be6-4b4c-b595-e587473109ae 10Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-globaldir Bound pvc-b8c9ffd6-9c4c-403e-9706-d750c8fa4394 20Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-jmsstore Bound pvc-c6a2bbdc-85dc-4e57-a2ac-4a284c1e1976 20Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-migration Bound pvc-2e06b37b-b7ff-4914-b532-e482ea5bb233 10Gi RWX nfs-storage 18d

You can also navigate to the maxinst pod to check if the /jmsstore directory was created.

$ oc exec -n mas-masivt810x-manage $(oc get -n mas-masivt810x-manage -l mas.ibm.com/appType=maxinstudb --no-headers=true pods -o name | awk -F "/" '{print $2}') -- ls -ltrh / |grep jms

drwxrwxrwx. 13 root 1000330000 0 Jul 3 11:32 jmsstore

After your MAS Core instance reconciles, you should see the names of your PVCs, including a new PVC for the jmsstore.

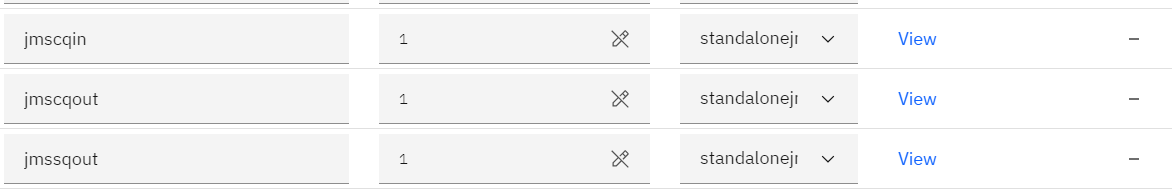

In the Server bundles section, if the System managed checkbox is selected, clear it. Click Add bundle. We are going to add four JMS server bundles:

- jmscqin

- jmscqout

- jmssqout

- jmssqin

In the Type column, select standalonejms.

To configure the queues, complete the following steps:

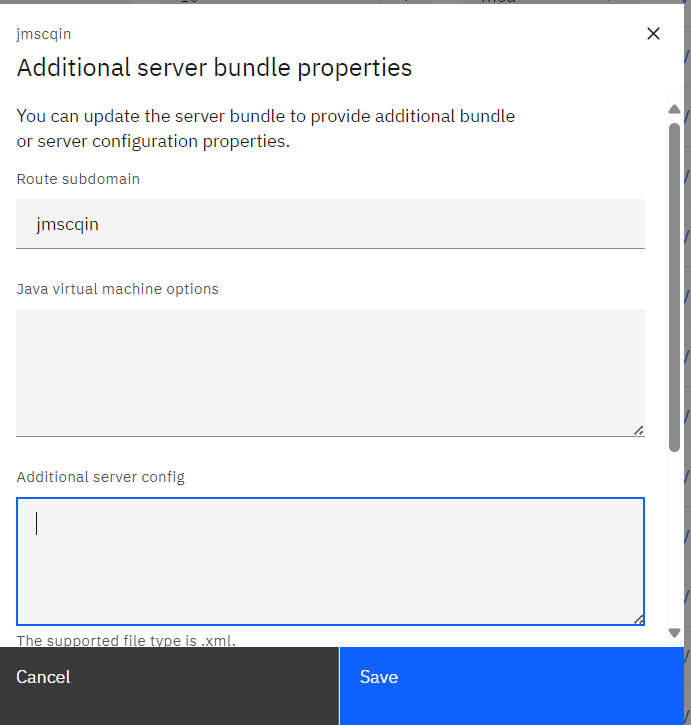

- In the Additional properties column for your JMS server bundle, click View.

- Set a route subdomain that matches the name of your bundles. We will use the pod's service as the remoteServerAddress.

- In the Additional server config section, use XML to specify default and custom queues.

- You can specify a queue as:

  - Outbound sequential

  - Outbound continuous

  - Inbound sequential

  - Inbound continuous

Use this XML configuration for the jmscqin bundle. I have added the cqin error queue (cqinerrbd) to run in the same messaging engine as the cqin queue (cqinbd).

<?xml version="1.0" encoding="UTF-8"?>

<server description="new server">

<!-- Enable features -->

<featureManager>

<feature>wasJmsSecurity-1.0</feature>

<feature>wasJmsServer-1.0</feature>

</featureManager>

<applicationManager autoExpand="true"/>

<wasJmsEndpoint host="*" wasJmsSSLPort="7286" wasJmsPort="7276" />

<messagingEngine>

<fileStore path="/jmsstore/cqin" fileStoreSize="4096" logFileSize="4000" />

<queue id="cqinerrbd" maxMessageDepth="4000000" failedDeliveryPolicy="KEEP_TRYING"/>

<queue id="cqinbd" maxMessageDepth="8000000" exceptionDestination="cqinerrbd"/>

</messagingEngine>

</server>

Use this XML configuration for the jmscqout bundle. I have added the cqout error queue (cqouterrbd) to run in the same messaging engine as the cqout queue (cqoutbd).

<?xml version="1.0" encoding="UTF-8"?>

<server description="new server">

<!-- Enable features -->

<featureManager>

<feature>wasJmsSecurity-1.0</feature>

<feature>wasJmsServer-1.0</feature>

</featureManager>

<applicationManager autoExpand="true"/>

<wasJmsEndpoint host="*" wasJmsSSLPort="7286" wasJmsPort="7276" />

<messagingEngine>

<fileStore path="/jmsstore/cqout" fileStoreSize="4096" logFileSize="4000" />

<queue id="cqouterrbd" maxMessageDepth="4000000" failedDeliveryPolicy="KEEP_TRYING"/>

<queue id="cqoutbd" maxMessageDepth="8000000" exceptionDestination="cqouterrbd"/>

</messagingEngine>

</server>

The jmssqout bundle runs the sqout queue alone.

<?xml version="1.0" encoding="UTF-8"?>

<server description="new server">

<!-- Enable features -->

<featureManager>

<feature>wasJmsSecurity-1.0</feature>

<feature>wasJmsServer-1.0</feature>

</featureManager>

<applicationManager autoExpand="true"/>

<wasJmsEndpoint host="*" wasJmsSSLPort="7286" wasJmsPort="7276" />

<messagingEngine>

<fileStore path="/jmsstore/sqout" fileStoreSize="4096" logFileSize="4000" />

<queue id="sqoutbd" maintainStrictOrder="true" maxMessageDepth="2000000" failedDeliveryPolicy="KEEP_TRYING" maxRedeliveryCount="-1"/>

</messagingEngine>

</server>

The jmssqin bundle runs the sqin queue alone.

<?xml version="1.0" encoding="UTF-8"?>

<server description="new server">

<!-- Enable features -->

<featureManager>

<feature>wasJmsSecurity-1.0</feature>

<feature>wasJmsServer-1.0</feature>

</featureManager>

<applicationManager autoExpand="true"/>

<wasJmsEndpoint host="*" wasJmsSSLPort="7286" wasJmsPort="7276" />

<messagingEngine>

<fileStore path="/jmsstore/sqin" fileStoreSize="4096" logFileSize="4000"/>

<queue id="sqinbd" maintainStrictOrder="true" maxMessageDepth="4000000" failedDeliveryPolicy="KEEP_TRYING" maxRedeliveryCount="-1"/>

</messagingEngine>

</server>

Now you will need the services that were created for these bundles. Each bundle creates a pod, a service, and a route. Because the connection stays within the cluster, we can use the service for our remoteServerAddress.

Note: Your remoteServerAddress must be in the following format:

remoteServerAddress="<InstanceId>-<workspaceId>-<serverbundlename>.mas-<InstanceId>-manage.svc:7276:BootstrapBasicMessaging"
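
To avoid typos when assembling this string by hand, the format can be sketched as a small shell function. The function name remote_server_address is hypothetical. The default port 7276 is the wasJmsPort from the bundle XML above, and the instance and workspace IDs are the ones from this demo environment.

```shell
#!/bin/sh
# Build a remoteServerAddress from its parts (hypothetical helper, not part of MAS).
# Usage: remote_server_address <instanceId> <workspaceId> <bundleName> [port]
remote_server_address() {
  instance_id=$1
  workspace_id=$2
  bundle_name=$3
  port=${4:-7276}   # wasJmsPort from the bundle's server XML
  printf '%s-%s-%s.mas-%s-manage.svc:%s:BootstrapBasicMessaging\n' \
    "$instance_id" "$workspace_id" "$bundle_name" "$instance_id" "$port"
}

# Print the address for each of the four bundles used in this article.
for bundle in jmscqin jmscqout jmssqout jmssqin; do
  remote_server_address masivt810x wkspivt810x "$bundle"
done
```

Compare each printed host name with the service names in your own namespace before pasting it into the XML.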

For the jmscqin bundle in this environment, the address is:

remoteServerAddress="masivt810x-wkspivt810x-jmscqin.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"

Alternatively, you can go to your OpenShift GUI, get the hostname of the route created on the service, and use this as the remoteServerAddress. You can get the names of the pods and services by running these commands:

$ oc get svc |grep jms

masivt810x-wkspivt810x-jmscqin ClusterIP None <none> 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

masivt810x-wkspivt810x-jmscqout ClusterIP None <none> 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

masivt810x-wkspivt810x-jmssqin ClusterIP None <none> 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

masivt810x-wkspivt810x-jmssqout ClusterIP None <none> 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

$ oc get pods |grep jms

masivt810x-wkspivt810x-jmscqin-0 1/1 Running 0 23h

masivt810x-wkspivt810x-jmscqout-0 1/1 Running 0 23h

masivt810x-wkspivt810x-jmssqin-0 1/1 Running 0 23h

masivt810x-wkspivt810x-jmssqout-0 1/1 Running 0 23h

You can also check your maxinst pod to see if the per-queue directories were created under /jmsstore by running this command:

$ oc exec -n mas-masivt810x-manage $(oc get -n mas-masivt810x-manage -l mas.ibm.com/appType=maxinstudb --no-headers=true pods -o name | awk -F "/" '{print $2}') -- ls -ltrh /jmsstore/

total 0

drwxr-x---. 2 1001040000 root 0 Jul 3 11:20 cqin

drwxr-x---. 2 1001040000 root 0 Jul 3 11:27 cqout

drwxr-x---. 2 1001040000 root 0 Jul 3 11:28 sqout

drwxr-x---. 2 1001040000 root 0 Jul 3 11:29 sqin

If you have the Maximo MEA bundle, you can use the XML file below. If you have a single server (all) bundle, change maximomea to maximo-all in the XML.

<?xml version='1.0' encoding='UTF-8'?>

<!-- Custom JMS Server XML by Wasia Maya, 2024 Multiple JMS pods-->

<server description="new server">

<featureManager>

<feature>jndi-1.0</feature>

<feature>wasJmsClient-2.0</feature>

<feature>jmsMdb-3.2</feature>

<feature>mdb-3.2</feature>

</featureManager>

<!-- JMS Queue Connection Factories -->

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-01" connectionManagerRef="mifjmsconfact-01">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmscqin.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-02" connectionManagerRef="mifjmsconfact-02">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmscqout.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-03" connectionManagerRef="mifjmsconfact-03">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmssqout.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-04" connectionManagerRef="mifjmsconfact-04">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmssqin.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<!-- Connection Managers -->

<connectionManager id="mifjmsconfact-01" maxPoolSize="20"/>

<connectionManager id="mifjmsconfact-02" maxPoolSize="20"/>

<connectionManager id="mifjmsconfact-03" maxPoolSize="20"/>

<connectionManager id="mifjmsconfact-04" maxPoolSize="20"/>

<!-- JMS Queues -->

<jmsQueue jndiName="jms/maximo/int/queues/sqout">

<properties.wasJms queueName="sqoutbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/sqin">

<properties.wasJms queueName="sqinbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqin">

<properties.wasJms queueName="cqinbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqinerr">

<properties.wasJms queueName="cqinerrbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqout">

<properties.wasJms queueName="cqoutbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqouterr">

<properties.wasJms queueName="cqouterrbd"/>

</jmsQueue>

<!-- JMS Activation Specs -->

<jmsActivationSpec id="maximomea/mboejb/JMSContQueueProcessor-1" maxEndpoints="50">

<properties.wasJms destinationLookup="jms/maximo/int/queues/cqin" maxConcurrency="50" maxBatchSize="200" connectionFactoryLookup="jms/maximo/int/cf/intcf-01"/>

</jmsActivationSpec>

<jmsActivationSpec id="maximomea/mboejb/JMSContQueueProcessor-2" maxEndpoints="10">

<properties.wasJms destinationLookup="jms/maximo/int/queues/cqinerr" maxConcurrency="10" maxBatchSize="200" connectionFactoryLookup="jms/maximo/int/cf/intcf-01"/>

</jmsActivationSpec>

<jmsActivationSpec id="maximomea/mboejb/JMSContOutQueueProcessor-1" maxEndpoints="50">

<properties.wasJms destinationLookup="jms/maximo/int/queues/cqout" maxConcurrency="50" maxBatchSize="200" connectionFactoryLookup="jms/maximo/int/cf/intcf-02"/>

</jmsActivationSpec>

<jmsActivationSpec id="maximomea/mboejb/JMSContOutQueueProcessor-2" maxEndpoints="10">

<properties.wasJms destinationLookup="jms/maximo/int/queues/cqouterr" maxConcurrency="10" maxBatchSize="200" connectionFactoryLookup="jms/maximo/int/cf/intcf-02"/>

</jmsActivationSpec>

</server>

If you have UI, report, and cron bundles, use this XML:

<?xml version='1.0' encoding='UTF-8'?>

<!-- Custom JMS Server XML by Wasia Maya, 2024 Multiple JMS pods-->

<server description="new server">

<featureManager>

<feature>jndi-1.0</feature>

<feature>wasJmsClient-2.0</feature>

<feature>jmsMdb-3.2</feature>

<feature>mdb-3.2</feature>

</featureManager>

<!-- JMS Queue Connection Factories -->

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-01" connectionManagerRef="mifjmsconfact-01">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmscqin.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-02" connectionManagerRef="mifjmsconfact-02">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmscqout.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-03" connectionManagerRef="mifjmsconfact-03">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmssqout.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<jmsQueueConnectionFactory jndiName="jms/maximo/int/cf/intcf-04" connectionManagerRef="mifjmsconfact-04">

<properties.wasJms remoteServerAddress="masivt810x-wkspivt810x-jmssqin.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"/>

</jmsQueueConnectionFactory>

<!-- Connection Managers -->

<connectionManager id="mifjmsconfact-01" maxPoolSize="20"/>

<connectionManager id="mifjmsconfact-02" maxPoolSize="20"/>

<connectionManager id="mifjmsconfact-03" maxPoolSize="20"/>

<connectionManager id="mifjmsconfact-04" maxPoolSize="20"/>

<!-- JMS Queues -->

<jmsQueue jndiName="jms/maximo/int/queues/sqout">

<properties.wasJms queueName="sqoutbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/sqin">

<properties.wasJms queueName="sqinbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqin">

<properties.wasJms queueName="cqinbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqinerr">

<properties.wasJms queueName="cqinerrbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqout">

<properties.wasJms queueName="cqoutbd"/>

</jmsQueue>

<jmsQueue jndiName="jms/maximo/int/queues/cqouterr">

<properties.wasJms queueName="cqouterrbd"/>

</jmsQueue>

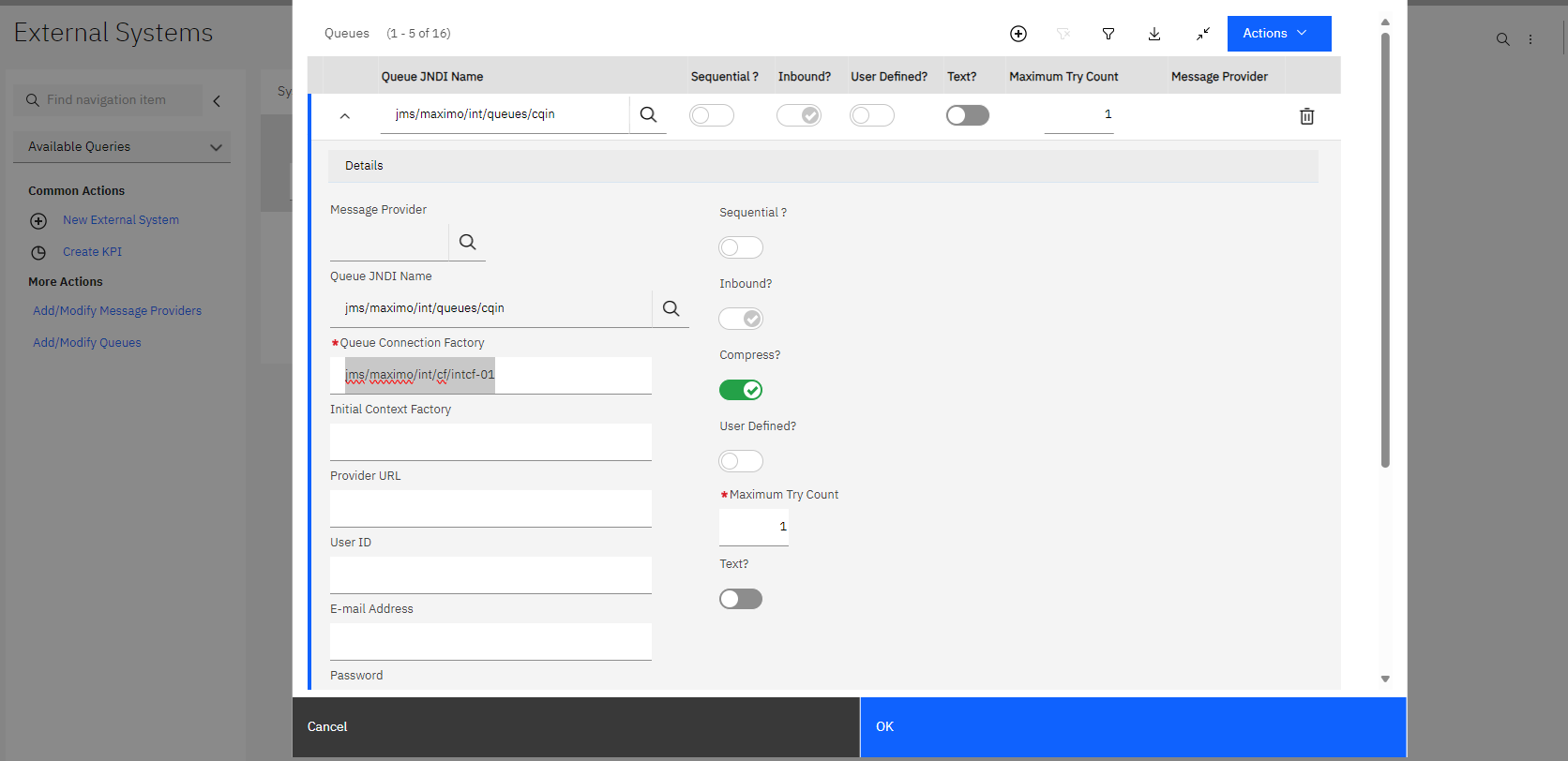

</server>Our XML has the following list of jmsQueueConnectionFactory JNDI names from the provided XML:

- jms/maximo/int/cf/intcf-01

- jms/maximo/int/cf/intcf-02

- jms/maximo/int/cf/intcf-03

- jms/maximo/int/cf/intcf-04

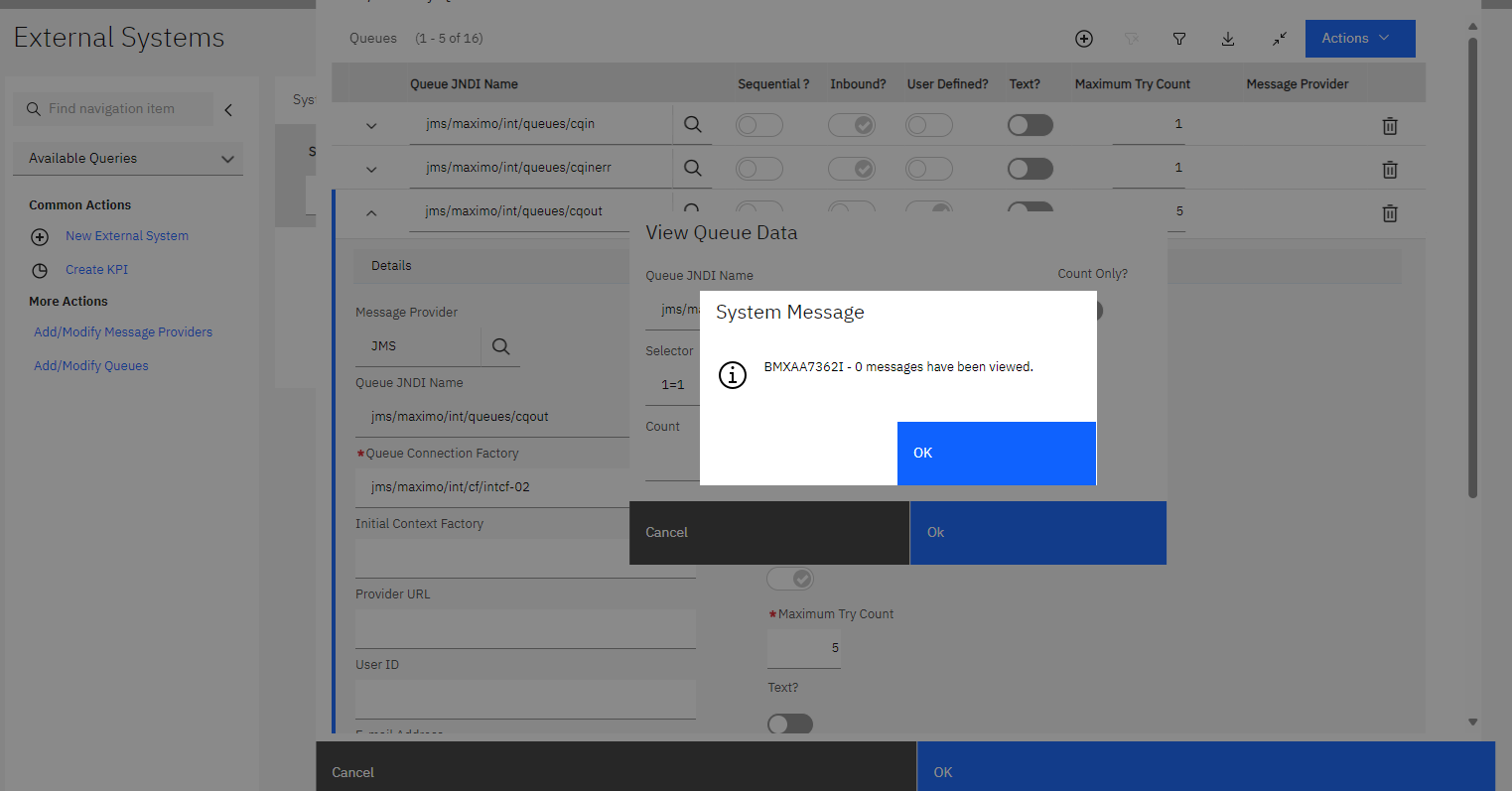

We will use these jmsQueueConnectionFactory JNDI names for the external systems in Maximo. Add or modify the queues for sqin, sqout, cqin, cqinerr, cqouterr, and notf.
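
When filling in the Add/Modify dialog, it helps to know which connection factory belongs to which queue. The sketch below encodes the pairing implied by the XML above: the activation specs tie cqin and cqinerr to intcf-01 and cqout and cqouterr to intcf-02, while intcf-03 and intcf-04 point at the jmssqout and jmssqin services. The function name connection_factory_for is hypothetical.

```shell
#!/bin/sh
# Map a queue's short name to the connection factory that reaches its JMS pod.
# Hypothetical helper; the mapping is read off the bundle XML in this article.
connection_factory_for() {
  case "$1" in
    cqin|cqinerr)   echo "jms/maximo/int/cf/intcf-01" ;;  # jmscqin service
    cqout|cqouterr) echo "jms/maximo/int/cf/intcf-02" ;;  # jmscqout service
    sqout)          echo "jms/maximo/int/cf/intcf-03" ;;  # jmssqout service
    sqin)           echo "jms/maximo/int/cf/intcf-04" ;;  # jmssqin service
    *) echo "unknown queue: $1" >&2; return 1 ;;
  esac
}

# Print each queue JNDI name next to its connection factory JNDI name.
for q in sqin sqout cqin cqinerr cqout cqouterr; do
  printf '%-30s %s\n' "jms/maximo/int/queues/$q" "$(connection_factory_for "$q")"
done
```

The notf queue is not defined in the XML shown here, so the sketch reports it as unknown rather than guessing a connection factory for it.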

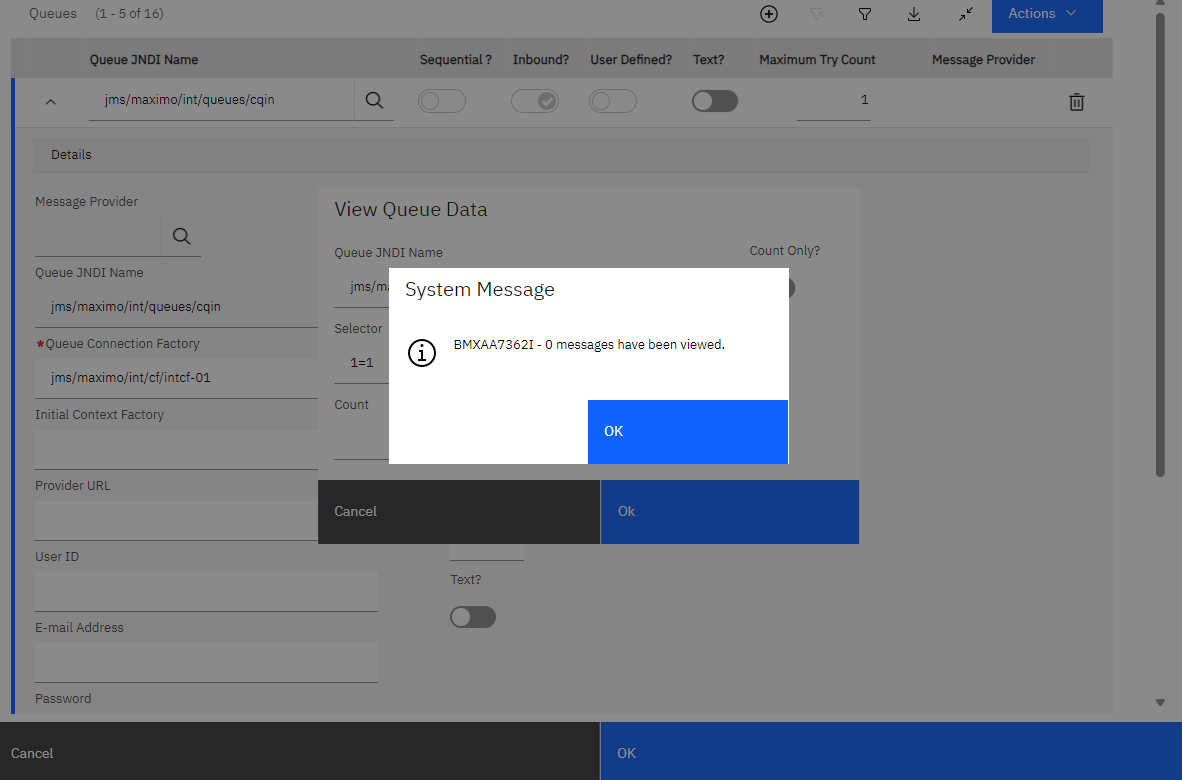

You can go to Action on the Add/Modify dialog and view the queues. You should not encounter any errors.

Conclusion

All messages for each JMS queue (cqin, cqout, sqin, and sqout) should now be written to a dedicated folder inside the parent jmsstore folder. Hence, we should be able to delete the store files (permanent and log) of a single queue at any point in time without impacting the other queues.
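
As an illustration of that last point, here is a minimal sketch of clearing one queue's store while leaving its siblings alone. It runs against a temporary directory, not a real /jmsstore. The function name clear_queue_store and the *.demo file names are placeholders I made up for the demo. On a real system, I would assume the JMS pod for that queue is stopped first, and that any messages still in the store are lost.

```shell
#!/bin/sh
# Remove the file store contents for one queue only (hypothetical helper).
# Usage: clear_queue_store <jmsstore_root> <queue>
clear_queue_store() {
  root=$1
  queue=$2
  # Accept only the four queue names from this article; this also blocks
  # path tricks such as "../something".
  case "$queue" in
    cqin|cqout|sqin|sqout) ;;
    *) echo "refusing: unknown queue '$queue'" >&2; return 1 ;;
  esac
  dir="$root/$queue"
  [ -d "$dir" ] || { echo "no store directory: $dir" >&2; return 1; }
  # Delete only the files inside this queue's directory; siblings are untouched.
  find "$dir" -type f -exec rm -f {} +
}

# Demo against a throwaway directory with placeholder store files.
demo_root=$(mktemp -d)
for q in cqin cqout sqin sqout; do
  mkdir -p "$demo_root/$q"
  : > "$demo_root/$q/permanent.demo"
  : > "$demo_root/$q/log.demo"
done

clear_queue_store "$demo_root" cqin
find "$demo_root" -type f | sort   # only cqout, sqin, and sqout files remain
```

Because each queue has its own directory, the helper never needs to touch anything outside the one folder it was asked to clear.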

Published at DZone with permission of Wasia Maya. See the original article here.
