6 Ways to Leverage Insomnia as a gRPC Client

Building a simple gRPC server in Node.js and testing it with Insomnia.

Whether you’re fully immersed in the world of gRPC development or just diving in now, you’re sure to recognize the power and simplicity of this cross-platform, language-neutral approach to connecting services across networks. If you’re tasked with gRPC server development, you might be looking for a simple client to test gRPC requests against your server. Running a pre-built client for gRPC requests certainly beats implementing one from scratch.

Enter Insomnia.

Most developers know Insomnia as a desktop application for API design and testing, and as an API client for making HTTP requests, complete with a powerful templating engine and support for plugins. What many don’t know is that Insomnia is also a gRPC client.

Core Tech Concepts

gRPC

A Remote Procedure Call (RPC) lets one computer execute a procedure (think: function) on another computer over a network. The computer making the procedure call may know little to nothing about the procedure’s implementation on the remote computer.

Likewise, the remote computer may know very little about the environment or intentions of the calling computer. The only information the two parties must share is a common understanding of how to communicate with each other: a standard protocol for request and response message structures. The result is the potential to interconnect services that were previously unrelated.

Google initially built an RPC framework called Stubby to connect its own set of microservices. Eventually, Google made the project open source as gRPC, which by now has enjoyed heavy adoption by organizations seeking to connect mobile and IoT devices and servers.

The Four Kinds of Service Methods

When using gRPC, four types of service methods are available. They differ in whether the client sends a single request or a stream of messages, and whether the server returns a single response or a stream.

1. Unary RPCs

The Unary RPC involves a single request from the client, resulting in a single response from the server. It’s the most straightforward type of interaction in gRPC, somewhat analogous to a standard client/server HTTP interaction.

2. Client-Streaming RPCs

In a client-streaming RPC, the client sends a sequence of messages, and the server reads them as they arrive. When it has nothing more to send, the client signals the end of its stream. The server then returns a single response, typically after processing all of the client’s messages.

3. Server-Streaming RPCs

In a server-streaming RPC, the client sends a single request. In response, the client receives a stream with a sequence of messages from the server. The message sequence is preserved, and server messages continually stream until there are no more messages or until either side forcefully disconnects the stream.

4. Bidirectional Streaming RPCs

As the name suggests, bidirectional streaming involves both client streaming and server streaming. After making a connection, both sides can stream their messages independently of each other. The client could wait for a message from the server before responding with its own message and vice versa. Or the client and server could both send messages in rapid fire without regard for the other side’s responses.
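The four kinds differ only in which side streams. The short JavaScript sketch below makes that explicit by classifying a method from two booleans. It is a hypothetical helper, not part of the project. The flag names requestStream and responseStream match the properties @grpc/proto-loader attaches to each method definition it loads; everything else is illustrative.

```javascript
// Hypothetical helper: classify a gRPC method by which side streams.
// The flag names mirror the requestStream / responseStream properties
// that @grpc/proto-loader puts on each loaded method definition.
function rpcKind({ requestStream, responseStream }) {
  if (requestStream && responseStream) return 'bidirectional streaming';
  if (requestStream) return 'client streaming';
  if (responseStream) return 'server streaming';
  return 'unary';
}

console.log(rpcKind({ requestStream: false, responseStream: false })); // unary
console.log(rpcKind({ requestStream: true, responseStream: false }));  // client streaming
console.log(rpcKind({ requestStream: false, responseStream: true }));  // server streaming
console.log(rpcKind({ requestStream: true, responseStream: true }));   // bidirectional streaming
```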

Our Mini-Project

For our project, we will use Insomnia to demonstrate various gRPC requests on a simple gRPC server that we will build. Using Node.js, we will build a gRPC server that gives us real-time information about the International Space Station. Our server will make some behind-the-scenes requests to the free API at http://open-notify.org.

We’ll walk through the code for building the gRPC server. Then, we’ll use Insomnia to demonstrate unary, client-streaming, and server-streaming requests. (We’ll leave bidirectional streaming requests as an exercise for the reader.)

If you’d rather play than build, simply check out the GitHub repository for the server, then skip down to the Send Requests with Insomnia section.

Are you ready to build? Strap in.

Build a gRPC Server With Node.js

Project Setup

In a terminal window, create a new folder for your project.

```
~$ mkdir project
~$ cd project
```

We’ll use yarn as our package manager, which you will need to have installed first. Initialize a new project, accepting all the defaults.

```
~/project$ yarn init
# Accept all the defaults
```

We’ll need to add three packages for our project:

```
~/project$ yarn add @grpc/proto-loader grpc axios
```

Our entire server will contain four files. Let’s walk through them one at a time.

Protocol Buffer File: space_station.proto

We’ll start by writing our Protocol Buffer (protobuf) file. Protocol Buffers are a language-neutral way of serializing structured data, and the .proto file gives the client and the server a common specification upon which to build their communication. Our protobuf file, space_station.proto, will define the service our server provides, its RPC methods, and the expected structure of requests and responses.

In your project folder, create a file called space_station.proto. It should contain the following:

```proto
syntax = "proto3";

service SpaceStation {
  rpc getAstronautCount (CountRequest) returns (CountResponse) {}
  rpc getAstronautNames (stream AstronautIndex) returns (NamesResponse) {}
  rpc getLocation (LocationRequest) returns (stream LocationResponse) {}
}

message CountRequest {
}

message CountResponse {
  int32 count = 1;
}

message AstronautIndex {
  int32 index = 1;
}

message NamesResponse {
  string names = 1;
}

message LocationRequest {
  int32 seconds = 1;
}

message LocationResponse {
  string datetime = 1;
  float latitude = 2;
  float longitude = 3;
}
```

Let’s step through our protobuf file. First, we specify that we are using the proto3 version of the protocol buffers language syntax. Next, we define our SpaceStation service, which exposes three RPCs:

- The getAstronautCount RPC is unary, taking a single request (CountRequest) and emitting a single response (CountResponse). The client is essentially asking the server, “How many astronauts are on the International Space Station right now?” The server responds with a count.
- The getAstronautNames RPC takes streaming requests (AstronautIndex) and emits a single response (NamesResponse). It’s a client-streaming RPC. For example, the client says, “Hey server, I’m going to give you a few numbers: 2, 5, 1. Okay, I’m done sending you my numbers.” Then, the server responds, “Here is a string with the names of astronauts 2, 5 and 1.”
- The getLocation RPC takes a single request (LocationRequest) and emits a streaming response (LocationResponse). It’s a server-streaming RPC. For this RPC, the client says, “Hey server, give me the updated geo-coordinates of the International Space Station every… 10 seconds.” In response, the server streams a timestamp and geo-coordinates every 10 seconds.

Lastly, we define all of our messages, the various requests and responses used with our RPCs. Each message has a list of fields (except for CountRequest, which has none). A field has a type (for example, string, int32 or float), a name (for example, names or latitude), and finally, an integer field number. The field number is not a sort order. It identifies the field in the binary wire format, so it must be unique within its message and should not change once clients depend on it.
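To show what the field number actually does, here is a minimal JavaScript sketch of how a single int32 field is laid out in the protobuf binary format. The field starts with a tag that packs the field number together with a wire type, followed by the value as a base-128 varint. The function names are hypothetical, and the sketch only handles non-negative values. Real code should rely on a protobuf library, such as protobufjs, which @grpc/proto-loader uses under the hood.

```javascript
// Encode a non-negative integer as a protobuf base-128 varint:
// 7 bits per byte, least-significant group first, with the high bit
// set on every byte except the last.
function encodeVarint(value) {
  const bytes = [];
  while (value > 0x7f) {
    bytes.push((value & 0x7f) | 0x80);
    value = Math.floor(value / 128);
  }
  bytes.push(value);
  return bytes;
}

// Encode one varint-typed field (wire type 0), such as CountResponse.count.
// The tag is (field number << 3) | wire type, so the field number, not the
// field's position in the .proto file, identifies the value on the wire.
function encodeVarintField(fieldNumber, value) {
  const WIRE_TYPE_VARINT = 0;
  const tag = (fieldNumber << 3) | WIRE_TYPE_VARINT;
  return [...encodeVarint(tag), ...encodeVarint(value)];
}

// CountResponse { count: 7 }: one tag byte for field 1, one value byte.
console.log(encodeVarintField(1, 7));   // [ 8, 7 ]
// Larger values spill into extra bytes.
console.log(encodeVarintField(1, 300)); // [ 8, 172, 2 ]
```

This is why renumbering a field breaks compatibility with peers built from the old .proto file, while renaming a field does not affect the binary encoding.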

Load protobuf for Node.js Consumption: space_station.js

Next, create a file called space_station.js. It should look like this:

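```javascript
const grpc = require('grpc');
const path = require('path');
const protoLoader = require('@grpc/proto-loader');

const PROTO_FILE_PATH = path.join(__dirname, 'space_station.proto');

// Parse the .proto file at runtime into a package definition...
const packageDefinition = protoLoader.loadSync(PROTO_FILE_PATH);

// ...then turn it into grpc objects and export the SpaceStation service.
module.exports = grpc.loadPackageDefinition(packageDefinition).SpaceStation;
```

This wrapper code loads the protobuf file at runtime. @grpc/proto-loader parses space_station.proto into a package definition, and grpc.loadPackageDefinition converts that definition into objects our Node.js application can use. The exported SpaceStation object carries a service property, which server.js registers with the gRPC server.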

External API Requests With Axios: axios_requests.js

Our server will be making some external HTTP requests to Open Notify, which provides a free API with up-to-date information on the International Space Station. We’ll use axios to make these requests. Create a file called axios_requests.js with the following content:

```javascript
const axios = require('axios');

const URL_ROOT = 'http://api.open-notify.org';

module.exports = {
  // Returns the astronaut count and names, in the order the API lists them.
  getAstronauts: async () => {
    const { data } = await axios.get(`${URL_ROOT}/astros.json`);
    return {
      count: data.number,
      names: data.people.map((a) => a.name),
    };
  },

  // Returns the ISS position with a human-readable UTC timestamp.
  getLocation: async () => {
    const { data } = await axios.get(`${URL_ROOT}/iss-now.json`);
    return {
      // Open Notify timestamps are Unix seconds; Date expects milliseconds.
      datetime: new Date(data.timestamp * 1000).toUTCString(),
      // Coordinates arrive as strings; convert them to match the float fields.
      latitude: Number(data.iss_position.latitude),
      longitude: Number(data.iss_position.longitude),
    };
  },
};
```

This module has two async functions: getAstronauts and getLocation. These functions abstract away the complexity of sending GET requests to the Open Notify API and processing the response. The request to astros.json returns a JSON object that looks like this:

```json
{
  "number": 7,
  "message": "success",
  "people": [
    {"name": "Mark Vande Hei", "craft": "ISS"},
    {"name": "Oleg Novitskiy", "craft": "ISS"},
    {"name": "Pyotr Dubrov", "craft": "ISS"},
    {"name": "Thomas Pesquet", "craft": "ISS"},
    {"name": "Megan McArthur", "craft": "ISS"},
    {"name": "Shane Kimbrough", "craft": "ISS"},
    {"name": "Akihiko Hoshide", "craft": "ISS"}
  ]
}
```

There is a count of astronauts along with an ordered list of astronauts, including the name of each. We’ll use that ordering as a reference when our client requests, for example, “the names of astronauts 3, 1 and 6,” where each number is a position in the list, counting from 0.

The request to iss-now.json returns the following:

```json
{
  "timestamp": 1621536430,
  "iss_position": {"longitude": "-97.4780", "latitude": "-46.0757"},
  "message": "success"
}
```

Our getLocation wrapper converts the timestamp to something human-readable and fetches the latitude and longitude from the nested object.
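Separating the parsing from the HTTP call lets you check the reshaping logic against the sample payloads above without touching the network. The sketch below does this with two hypothetical helpers, parseAstronauts and parseLocation. They are not part of the project; they mirror what axios_requests.js does inline, with the string coordinates converted to numbers for the float fields.

```javascript
// Hypothetical pure helpers that reshape Open Notify payloads into the
// shapes our protobuf messages expect. No network access needed.

// astros.json -> a count plus an ordered list of names.
function parseAstronauts(data) {
  return {
    count: data.number,
    names: data.people.map((person) => person.name),
  };
}

// iss-now.json -> LocationResponse fields.
function parseLocation(data) {
  return {
    // Unix seconds -> milliseconds -> RFC 1123 string in UTC.
    datetime: new Date(data.timestamp * 1000).toUTCString(),
    // The API sends coordinates as strings.
    latitude: Number(data.iss_position.latitude),
    longitude: Number(data.iss_position.longitude),
  };
}

// The iss-now.json sample shown above.
const sampleLocation = {
  timestamp: 1621536430,
  iss_position: { longitude: '-97.4780', latitude: '-46.0757' },
  message: 'success',
};

console.log(parseLocation(sampleLocation).datetime); // Thu, 20 May 2021 18:47:10 GMT
```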

Put It All Together: server.js

Finally, we have our server.js file, which puts all the pieces together.

```javascript
const grpc = require('grpc');
const requests = require('./axios_requests.js');
const spaceStation = require('./space_station.js');

const SECONDS_TO_MILLISECONDS = 1000;
const STREAM_SERVER_DEFAULT_SECONDS = 10;
const PORT = 4500;

// Unary: one CountRequest in, one CountResponse out.
const getAstronautCount = async (call, callback) => {
  try {
    const { count } = await requests.getAstronauts();
    callback(null, { count });
  } catch (err) {
    callback(err); // report the failure instead of leaving the call hanging
  }
};

// Map zero-based indices to names, skipping out-of-range indices and duplicates.
const getAstronautNamesAsArray = async (indices) => {
  const { names } = await requests.getAstronauts();
  const result = [];
  for (const index of indices) {
    if (index >= 0 && index < names.length && !result.includes(names[index])) {
      result.push(names[index]);
    }
  }
  return result;
};

// Client streaming: buffer every AstronautIndex, then reply once on 'end'.
const getAstronautNames = (call, callback) => {
  const indices = [];
  call.on('data', (data) => {
    indices.push(data.index);
  });
  call.on('end', async () => {
    try {
      const namesAsArray = await getAstronautNamesAsArray(indices);
      callback(null, { names: namesAsArray.join(', ') });
    } catch (err) {
      callback(err);
    }
  });
};

// Server streaming: send a location now, then every `seconds` until cancelled.
const getLocation = (call) => {
  const seconds = call.request.seconds || STREAM_SERVER_DEFAULT_SECONDS;
  let timeout = null;
  let cancelled = false;

  // Register the cancellation listener once, not on every tick.
  call.on('cancelled', () => {
    cancelled = true;
    clearTimeout(timeout);
  });

  const sendLocation = async () => {
    try {
      const data = await requests.getLocation();
      if (!cancelled) call.write(data);
    } catch (err) {
      console.error('Location lookup failed:', err.message); // retry next tick
    }
    if (!cancelled) {
      timeout = setTimeout(sendLocation, seconds * SECONDS_TO_MILLISECONDS);
    }
  };

  sendLocation();
};

const server = new grpc.Server();

// Map each RPC name in the proto file to its implementation.
server.addService(spaceStation.service, {
  getAstronautCount,
  getAstronautNames,
  getLocation,
});

server.bind(`localhost:${PORT}`, grpc.ServerCredentials.createInsecure());
server.start();
```

Starting at the bottom, we set up a gRPC server and add a service as defined by our compiled spaceStation protobuf. The service maps each method name defined in the protobuf (getAstronautCount, getAstronautNames and getLocation) to the function that implements it. We gave our implementation functions the same names. Lastly, we bind the server to an address and port (localhost:4500) and start it.

Now, let’s walk through the implementation of our gRPC methods. The simplest, getAstronautCount, doesn’t deal with any parameters from the client. It makes a request to the Open Notify API, fetches the count of astronauts, and then returns a response that conforms to the CountResponse message in our protobuf file—that is, an object with a single count integer.

The implementation of the client-streaming getAstronautNames method is slightly more involved. Each time the data event fires, the client has sent another message (an AstronautIndex message, as defined in the space_station.proto file).

The server stores all of these messages in an indices array. When the client finishes sending messages, the end event fires. The server then treats each stored index as a zero-based position in the array of astronauts from the Open Notify API response, skipping any index past the end of the list and any name it has already picked. It joins the matching names into a comma-separated string and returns its single response, conforming to the protobuf file’s NamesResponse message structure.
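You can exercise the client-streaming pattern without a gRPC server. Because a streaming call object is an event emitter, a plain Node.js EventEmitter can stand in for it. The sketch below rests on two assumptions. First, makeGetAstronautNames is a hypothetical variant of getAstronautNames that takes the name list as a parameter instead of calling Open Notify. Second, SAMPLE_NAMES is copied from the astros.json sample above.

```javascript
const { EventEmitter } = require('events');

// Copied from the astros.json sample; normally fetched from Open Notify.
const SAMPLE_NAMES = [
  'Mark Vande Hei', 'Oleg Novitskiy', 'Pyotr Dubrov', 'Thomas Pesquet',
  'Megan McArthur', 'Shane Kimbrough', 'Akihiko Hoshide',
];

// Hypothetical variant of getAstronautNames with the names injected:
// buffer indices on 'data', reply exactly once on 'end'.
function makeGetAstronautNames(names) {
  return (call, callback) => {
    const indices = [];
    call.on('data', (message) => indices.push(message.index));
    call.on('end', () => {
      const picked = [];
      for (const index of indices) {
        if (index >= 0 && index < names.length && !picked.includes(names[index])) {
          picked.push(names[index]);
        }
      }
      callback(null, { names: picked.join(', ') });
    });
  };
}

// Fake client-streaming call that we drive by hand, as Insomnia would.
const fakeCall = new EventEmitter();
let response = null;
makeGetAstronautNames(SAMPLE_NAMES)(fakeCall, (err, reply) => { response = reply; });

fakeCall.emit('data', { index: 3 });
fakeCall.emit('data', { index: 1 });
fakeCall.emit('data', { index: 6 });
fakeCall.emit('end');

console.log(response.names); // Thomas Pesquet, Oleg Novitskiy, Akihiko Hoshide
```

Injecting the data source is one way to make handlers testable; the tutorial’s server.js simply calls requests.getAstronauts directly.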

Lastly, we implement the server-streaming getLocation method. This method fetches the current location data from the Open Notify API and writes it to the client as the first response message. It then reads the seconds parameter from the client’s request (falling back to 10 seconds if none was sent) and calls setTimeout to do this all over again after that many seconds.

When the client cancels the call, essentially saying, “Thanks, server, I don’t need any more responses,” a cancelled event fires. The server clears its pending timer, so no further location updates are sent.
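The timer-driven streaming loop can also be checked without a network or a real client. The sketch makes three assumptions. First, streamLocations is a hypothetical helper following the getLocation pattern. Second, fetchLocation is an injected stand-in for requests.getLocation. Third, an EventEmitter with a write method plays the role of the server-streaming call. The helper registers the cancelled listener once per call, so listeners don’t pile up as ticks repeat, and it checks for cancellation after each await so nothing is written once the client has left.

```javascript
const { EventEmitter } = require('events');

// Hypothetical helper following the getLocation pattern, with the data
// source injected. Writes one message per tick until 'cancelled' fires.
function streamLocations(call, fetchLocation, intervalMs) {
  let timeout = null;
  let cancelled = false;

  // One listener for the whole call, registered before the first tick.
  call.on('cancelled', () => {
    cancelled = true;
    clearTimeout(timeout);
  });

  const tick = async () => {
    const data = await fetchLocation();
    if (cancelled) return; // the client left while we were waiting
    call.write(data);
    timeout = setTimeout(tick, intervalMs);
  };
  tick();
}

// Fake server-streaming call that records what the handler writes.
const written = [];
const fakeCall = new EventEmitter();
fakeCall.write = (message) => written.push(message);

let n = 0;
const fakeFetch = async () => ({ datetime: `tick ${++n}`, latitude: 0, longitude: 0 });

streamLocations(fakeCall, fakeFetch, 20);

// Simulate the user pressing "Cancel" after about 70 ms.
let countAtCancel = 0;
setTimeout(() => {
  fakeCall.emit('cancelled');
  countAtCancel = written.length;
  console.log(`messages before cancel: ${countAtCancel}`);
}, 70);
```

The exact count depends on timer scheduling. The invariant worth checking is that the count stops growing once the call is cancelled.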

The GitHub repository for this code is also publicly available. Altogether, this is what your folder structure should look like:

```
~/project$ tree -L 1
.
├── axios_requests.js
├── node_modules
├── package.json
├── server.js
├── space_station.js
└── space_station.proto
```

Now that we’ve written all of our code, let’s start our server:

```
~/project$ node server.js
```

We’re ready to send requests with Insomnia.

Send gRPC Requests With Insomnia

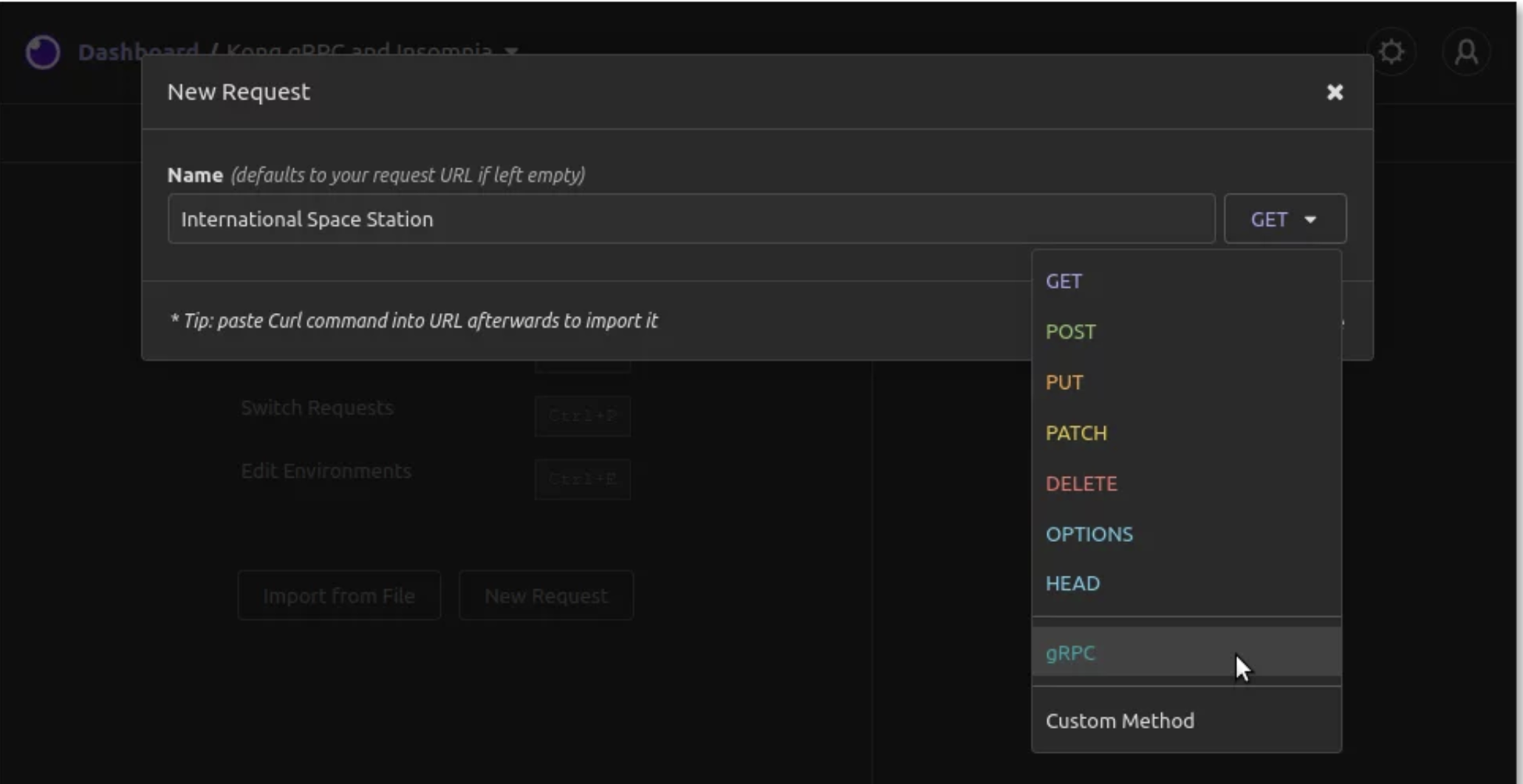

Open up your Insomnia application, create a request collection and then create a new request and give it a name. For the request method, choose gRPC.

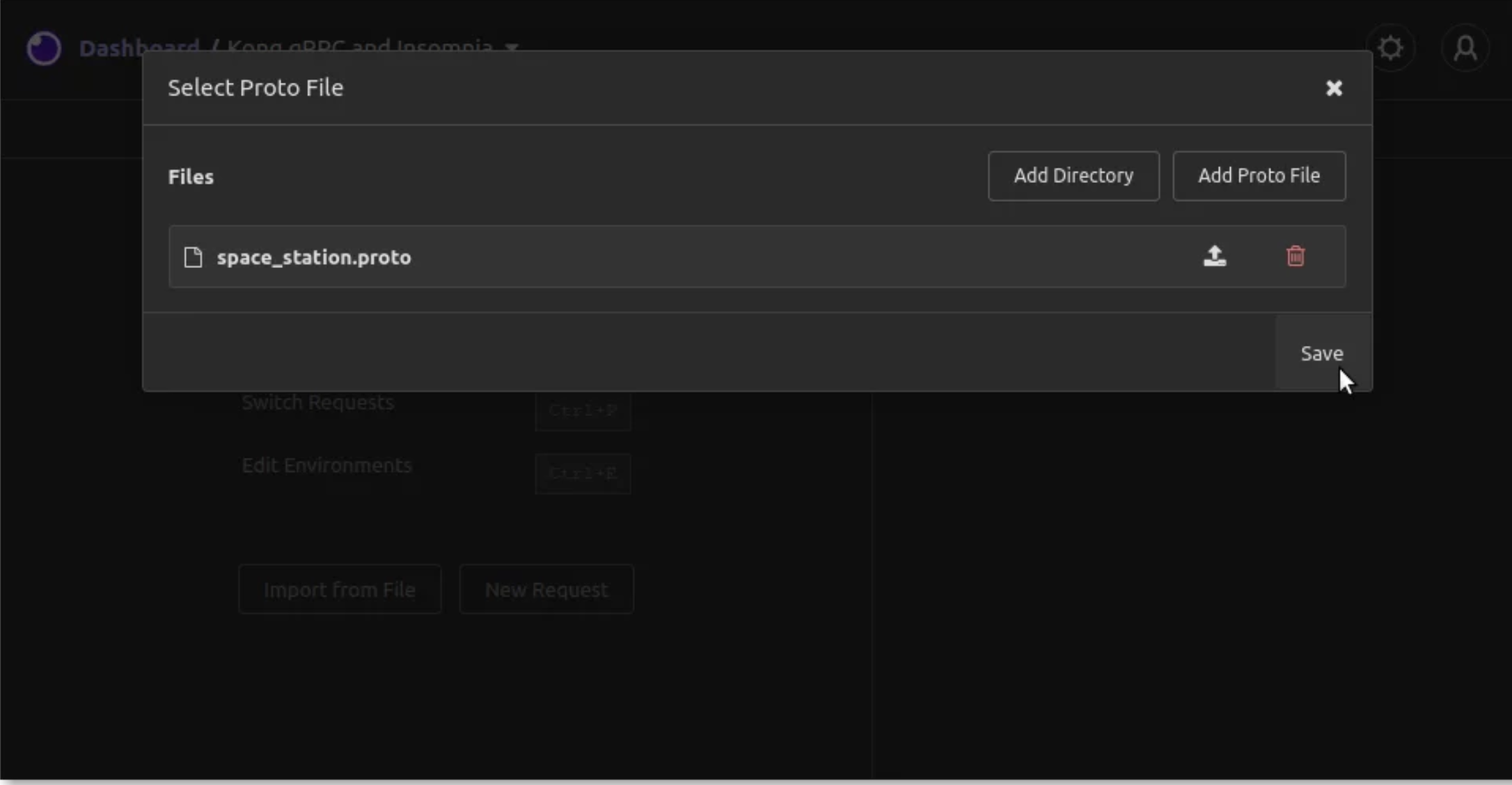

Since you have chosen gRPC, the system will ask you to add a proto file, which the Insomnia client will use as a specification to understand the expected requests and responses. Click on the “Add Proto File” button, and then select the space_station.proto file from your project folder. The system will select the file as the working proto file for this request. Click on the “Save” button.

For the request URL, enter localhost:4500, which is where our server is listening. You’ll also see a “Select Method” dropdown. There, you’ll see getAstronautCount, getAstronautNames and getLocation.

The client (Insomnia) uses the proto file to understand how it should make its requests and what it should expect for responses. The client also assumes that the server (at localhost:4500) implements these services according to the same proto file. Fortunately for us, we’re certain that the client and server are working off the same proto file.

1. Unary

We’ll begin with a basic unary request. Select the getAstronautCount method. Immediately, you’ll see that the request body starts with an empty JSON object. Our getAstronautCount request doesn’t require any parameters, so we will leave it empty. Click on the “Send” button.

We send a single request. We get back a single response: a JSON object with a count value of 7. At the time of this writing, there are seven astronauts aboard the International Space Station.

2. Client Streaming

For the client-streaming method, we choose getAstronautNames from the “Select Method” dropdown. Knowing that seven astronauts are aboard the International Space Station, we can now send any index from 0 through 6 (the server treats each index as a zero-based position in the astronaut list) as multiple streaming messages from the client to the server.

In Insomnia, we first click on the “Start” button. Insomnia presents a “Stream” button and a “Commit” button. Each time we want to send a new message, we modify our request body accordingly and then click on the “Stream” button. Each message will appear as a new tab. When we finish sending all the messages we want to, we click on “Commit.”

For example, we set our body to contain "index": 3 and then click on “Stream.” That will send the first message as the astronaut index 3. We modify the body to set "index": 1 and then click on “Stream” again. We do the same for "index": 6 and click on “Stream” once again. So far, we have sent three client messages for this client streaming request. We click on the “Commit” button.

We expect a single response from the server: a string with the names of astronauts 3, 1, and 6. The server responds with:

```json
{
  "names": "Thomas Pesquet, Oleg Novitskiy, Akihiko Hoshide"
}
```

3. Server Streaming

For our last demonstration, we’ll show a server-streaming request in Insomnia. This last request calls the getLocation method. In the single request body, we send a parameter, seconds, which tells the server how frequently we want it to stream back a response. For example, we set the body as follows:

```json
{
  "seconds": 2
}
```

Once we click on the “Start” button, we see that the server streams back a response every two seconds. Each response contains the current date/time, along with the current latitude and longitude of the International Space Station. At any point, we can click on the “Cancel” button to end our request and stop the server from streaming any more responses. A server can also end the stream on its own (in Node.js, by calling call.end()); ours simply keeps streaming until the client cancels.

Additional Insomnia gRPC Use Cases

We’ve demonstrated quite a bit of what Insomnia can do when interacting with a gRPC server. Here are a few other features available which we did not demonstrate:

4. Bidirectional Streaming

As we mentioned, bidirectional streaming is the fourth kind of service method provided by gRPC. While we didn’t demonstrate it in our mini-project, Insomnia supports bidirectional streaming. The user interaction functions as a combination of what we saw in client streaming and server streaming.

5. Request Cancelling

If you were to build a gRPC client from scratch, you’d notice that implementing request cancelling is non-trivial. When the client is in the middle of streaming request messages and the server is listening, what needs to happen on the client side if the user simply wants to cancel the request altogether? The same client-side complexity exists for all three kinds of streaming RPC. Insomnia abstracts it away: a simple push of the “Cancel” button ensures the server receives a cancelled event.

6. Template Tags

Insomnia’s template tag support and plugin ecosystem open the door for powerful and innovative ways to enhance the gRPC client-server interaction. For example, Insomnia’s faker plugin allows for real-time insertion of fake or randomized data as environment variables or request values. This type of usage can greatly enhance test coverage.

To Infinity and Beyond!

When we started, we were looking to acquaint ourselves with building a simple gRPC server in Node.js. Beyond that, however, we wanted a working server upon which we could test and demonstrate the power of Insomnia for gRPC.

We built a server that supports unary, client-streaming, and server-streaming gRPC service methods. From here, it’s not a big stretch to build in bidirectional streaming methods. What’s more, we demonstrated how easy it is to use Insomnia on the client side, testing the different types of gRPC interaction.

You now have a foundation for gRPC server development, and you have experience using Insomnia to test your gRPC server. Development and testing in the gRPC space just got a lot easier!

If you have any questions, ask them in the Insomnia Community on Slack and GitHub.

Once you set up Insomnia gRPC, you may find these other tutorials helpful:

- Insomnia Tutorial: API Design, Testing, and Collaboration

- 3 Solutions for Avoiding Plain-Text Passwords in Insomnia

- What’s New (and Coming Soon) With Insomnia

- Or try testing your Kong Gateway plugins in Insomnia with these tutorials: Key Auth, Rate Limiting, OAuth2, and JWT.

Published at DZone with permission of Alvin Lee.
