HTTP for Inter-Service Communication?

After the evolution of distributed systems, microservices-based applications attracted the interest of nearly every organization wanting to survive market competition.


After the evolution of distributed systems, microservices-based applications attracted the interest of nearly every organization wanting to grow over time and survive market competition. Microservices allow us to scale and manage systems easily. Development time drops because the effort is distributed among many teams, and the time to market for new features shrinks significantly.

Because of this distributed nature, components communicate over the network, and many factors can affect that communication: security, added latency, or the abrupt termination of an ongoing exchange, all of which can increase infrastructure cost. We can either try to fix the network, which comes with numerous problems of its own, or architect our system to be resilient and reliable over time.

Services in a distributed environment communicate with each other using network protocols, so part of making a system resilient, reliable, and fast lies in choosing the right protocol. Various protocols exist for different needs and for different layers of the OSI network model. For service-to-service or browser-to-service communication, HTTP is usually adopted as the de facto standard. REST-based services are almost always built on it.

But is relying so heavily on HTTP the right choice? HTTP was created for a different purpose: communication between browsers and back-end servers, retrieving data with the request-response model. Today it is also used for inter-service communication, which limits what our systems can really do.

Here, I’ll cover the issues with HTTP and the available solutions for common use cases. Later, I’ll provide some details about RSocket, which can alleviate many of these issues and help make our applications fully reactive.

Blocking Communication

HTTP is an application layer protocol in the OSI network model. It has evolved over time through several versions. Let’s go through them and look at the issues each one has.

HTTP/1.0

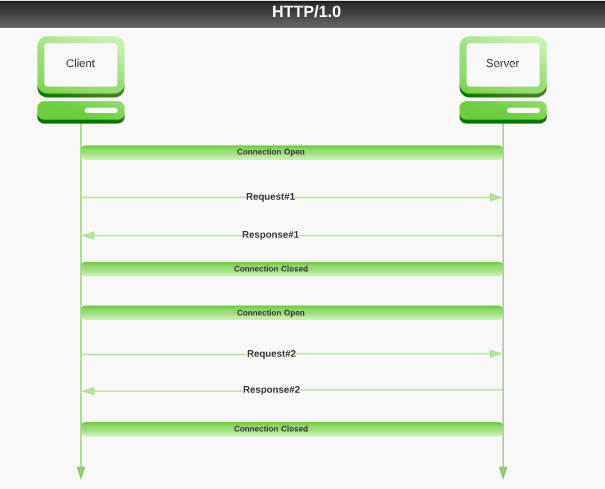

If a client service wants to retrieve some data from another service, it first opens a connection to it and then sends a request over that connection. The server sends a response and closes the connection. Every request requires a new connection, so each request-response cycle carries a lot of additional overhead, and the result is slow communication.

Fig.1 shows how HTTP/1.0 works between client and server machines, including the separate connection required for each request-response cycle.

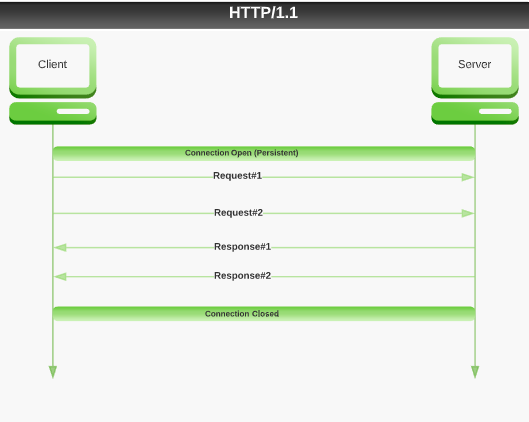

HTTP/1.1 improves on HTTP/1.0 by introducing persistent connections and ‘Pipelining’. A client can now send multiple requests over a single connection, which stays alive only for a configured time.
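
To get a feel for what a persistent connection saves, here is a rough back-of-the-envelope model. The 30 ms connection setup cost and the 20 ms per request-response exchange are made-up numbers, the function name is only illustrative, and the model assumes requests are sent one after another.

```python
def total_time_ms(requests: int, connect_ms: float, exchange_ms: float,
                  persistent: bool) -> float:
    """Estimate the total time for sequential request-response cycles."""
    # HTTP/1.0 style: one connection per request.
    # Persistent style: one connection shared by all requests.
    connections = 1 if persistent else requests
    return connections * connect_ms + requests * exchange_ms


# Hypothetical workload: 100 requests, 30 ms to connect, 20 ms per exchange.
new_connection_each_time = total_time_ms(100, 30.0, 20.0, persistent=False)
one_persistent_connection = total_time_ms(100, 30.0, 20.0, persistent=True)

print(f"new connection per request: {new_connection_each_time:.0f} ms")   # 5000 ms
print(f"one persistent connection:  {one_persistent_connection:.0f} ms")  # 2030 ms
```

With these assumed numbers, connection setup accounts for more than half of the total time in the connection-per-request case.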

Although the situation improved, a problem remains, popularly known as ‘Head of Line Blocking’. Multiple requests sent on a single connection are queued at the server and answered strictly in the order they arrived. If the client generates requests quickly or the server responds slowly, one slow request blocks all the requests behind it. The resulting congestion causes unnecessary delay.

Fig.2 shows HTTP/1.1 at work, with Request#2 facing ‘Head of Line Blocking’ because of Request#1. Until the server processes Request#1 and replies with Response#1, Request#2 waits at the server end.
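
The effect can be sketched with a toy timing model. It assumes, generously, that the server works on all pipelined requests at the same time and that only the responses have to leave in request order. For comparison, the second function shows delivery when each response can be sent as soon as it is ready. The processing times and function names are invented for illustration.

```python
from itertools import accumulate


def in_order_delivery(service_ms):
    """Responses leave in request order: each one waits for the slowest before it."""
    # The running maximum is the earliest moment response i may be sent.
    return list(accumulate(service_ms, max))


def independent_delivery(service_ms):
    """Each response is sent as soon as its own processing finishes."""
    return list(service_ms)


# Hypothetical processing times: Request#1 is slow, the next two are quick.
service_ms = [500, 20, 30]

print(in_order_delivery(service_ms))     # [500, 500, 500]
print(independent_delivery(service_ms))  # [500, 20, 30]
```

The two quick requests are ready after 20 ms and 30 ms, yet with in-order delivery their responses cannot leave before the 500 ms mark.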

HTTP/2

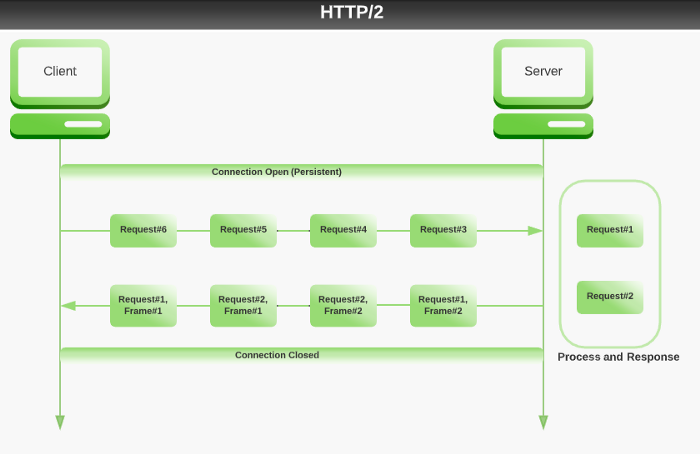

HTTP/2 improves on HTTP/1.1 by introducing ‘Multiplexing’. Multiple requests can be sent to the server as separate streams over a single connection, and the server sends its responses back to the client over those streams. This makes inter-service communication relatively faster.

As shown in Fig.3, HTTP/2 uses a multiplexed channel over a single connection. Responses are sent over the same channel as they are processed, and their frames can be interleaved with the frames of other responses. A delayed or blocked response doesn’t hold up the others.
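
The idea of interleaving frames can be sketched in a few lines. This is not the HTTP/2 wire format, only an in-memory illustration: a "frame" here is a hypothetical `(stream_id, chunk)` tuple, the 5-byte frame size is arbitrary, and the round-robin scheduling is an assumption made for the example.

```python
from collections import defaultdict
from itertools import zip_longest


def to_frames(stream_id, payload, size):
    """Cut one response body into frames tagged with its stream id."""
    return [(stream_id, payload[i:i + size]) for i in range(0, len(payload), size)]


def interleave(*frame_lists):
    """Round-robin frames from several streams onto one shared connection."""
    wire = []
    for group in zip_longest(*frame_lists):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire):
    """Receiver side: sort frames back into per-stream bodies by stream id."""
    streams = defaultdict(bytes)
    for stream_id, chunk in wire:
        streams[stream_id] += chunk
    return dict(streams)


wire = interleave(
    to_frames(1, b"large-response-body", 5),
    to_frames(3, b"tiny", 5),
)
print(wire)
print(reassemble(wire))
```

The small response on stream 3 is complete after the second frame on the wire, even though the large response on stream 1 is still being sent.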

Textual Protocols

HTTP/1.x is a textual protocol, and services built on it typically exchange text formats such as JSON or XML, which were intended for browsers. Text formats make server responses human-readable. But services talking to each other don’t need text to interpret a response. An object that a service is working on lives in memory in a binary form optimized for processing. Converting it to text before sending it over the network, then parsing that text back into a binary structure at the receiving end, is overhead that slows processing down and raises its cost.
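
A small comparison makes the size difference concrete. The record and the fixed binary layout below are hypothetical and chosen only for illustration; real binary formats such as Protobuf encode fields differently.

```python
import json
import struct

# Hypothetical message exchanged between two services.
reading = {"sensor_id": 1042, "timestamp": 1700000000, "temperature": 21.5}

# Text encoding: field names and digits all travel as characters.
as_text = json.dumps(reading).encode("utf-8")

# Assumed binary layout (big-endian): 32-bit unsigned id,
# 64-bit unsigned timestamp, 64-bit float temperature.
LAYOUT = struct.Struct(">IQd")
as_binary = LAYOUT.pack(
    reading["sensor_id"], reading["timestamp"], reading["temperature"]
)

print(f"JSON:   {len(as_text)} bytes")
print(f"binary: {len(as_binary)} bytes")

# The receiver recovers the values without parsing any text.
sensor_id, timestamp, temperature = LAYOUT.unpack(as_binary)
```

The trade-off is that both sides must agree on the layout in advance, which is the role a schema plays in formats like Protobuf.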

HTTP/2 improves on this too. It uses a binary protocol, which is more compact, more efficient to parse, and less error-prone than a textual one. gRPC, which is built on top of HTTP/2, goes further by using Protobuf to serialize structured data in binary form; the resulting messages are smaller and faster to process. Because gRPC is an RPC framework, calling a remote method from a client service looks just like calling a local method, which removes a lot of the boilerplate code needed for HTTP's application-level semantics.

Note: gRPC with Protobuf is currently used for inter-service communication, and its messages are not human-readable. gRPC is not meant for browsers, although many browsers do support HTTP/2.

By now, it seems that HTTP/2 solves the major inter-service communication issues.

Message Flow Control

Consider a use case where the client continuously bombards the server with requests at a higher rate than the server can process. This overloads the server and makes it difficult for it to respond. We need some kind of flow control at the application level.

gRPC is built on top of HTTP/2, which uses TCP as its transport layer protocol, and the flow control available there works on bytes only. It cannot throttle requests at the application layer. We still need application-level flow control, or circuit breakers, to keep the system responsive, and sometimes retry logic to make it more resilient. All of this is overhead to build and maintain, and it remains with gRPC and HTTP/2.
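
To show the kind of code this pushes onto the application, here is a minimal circuit breaker sketch. The class, its thresholds, and the failing `overloaded_service` function are all hypothetical; production libraries offer far more (metrics, proper half-open probing, thread safety).

```python
import time


class CircuitBreaker:
    """Stops calling a failing service for a cool-down period."""

    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise RuntimeError("circuit open: request rejected locally")
            # Cool-down elapsed: allow a trial call; one more failure re-opens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def overloaded_service():
    # Hypothetical downstream call that keeps timing out.
    raise TimeoutError("server too busy")


breaker = CircuitBreaker(max_failures=2, reset_after_s=30.0)
outcomes = []
for _ in range(4):
    try:
        breaker.call(overloaded_service)
    except TimeoutError:
        outcomes.append("failed")
    except RuntimeError:
        outcomes.append("rejected")

print(outcomes)  # ['failed', 'failed', 'rejected', 'rejected']
```

After two failures the breaker stops sending requests to the struggling server, giving it room to recover. None of this comes from the protocol itself, and that is the overhead described above.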

Real-Time Updates

Another use case is a client that always wants the latest data. One option is to use HTTP and keep polling the server for updates. This generates a lot of unnecessary requests, and with them unnecessary traffic and resource usage.

Because HTTP offers a single interaction model, request-response, it is not the right option in such cases.

SSE (Server-Sent Events) is a way to stream real-time updates from the server to the client. But it is a textual protocol.

Another option is WebSockets, which support binary messages and can also deliver real-time updates to clients over a single connection using bi-directional communication.

Because WebSockets are connection-oriented, it is difficult to handle an intermittent disconnection during an ongoing exchange and to resume the response data once the connection is re-established.

The issues that arise when a producer or a consumer is relatively faster than the other exist with WebSockets too, so message-level flow control is still needed.

Adding to the list are the complications of the application-level semantics that we must manage ourselves when working with WebSockets.

We have solutions for some issues, but each trades off against some other issue. What we need is a protocol that hides these issues and their complexity and provides a simple interface for inter-service communication, with the capability to support all these use cases with ease.

RSocket

According to the Reactive Manifesto, distributed systems need to be fully reactive for optimum resource utilization. Such systems are more robust, more responsive, more resilient, more flexible, and better positioned to meet modern demands.

An application developed with reactive programming makes only the service itself reactive, helping it make better use of available resources such as CPU and memory. But a fully reactive system needs all of its associated IO to be reactive too. IO is commonly associated with database interaction and service-to-service communication. R2DBC (Reactive Relational Database Connectivity) and reactive NoSQL drivers take care of database interaction, and RSocket takes care of service-to-service communication in a reactive style. In RSocket there is no fixed client or server role. Once the client establishes a connection with the server, both sides become equal and either can initiate a request, i.e., communication is bi-directional.

Binary Protocol

RSocket (or Reactive Socket) is a binary protocol that breaks messages down into frames, which are sequences of bytes. The payload itself can still use any serialization mechanism, such as Protobuf, Avro, or even JSON.
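
The split between a binary frame and an opaque payload can be sketched as follows. The header layout here (a stream id, then a frame type and flags packed into two bytes) is loosely modeled on my reading of the RSocket frame header, but treat it as an assumption: this is not a spec-compliant encoder, and the frame type value is illustrative.

```python
import struct

# Assumed header: 4-byte stream id, then 2 bytes holding
# a 6-bit frame type and 10 bits of flags (big-endian).
HEADER = struct.Struct(">IH")


def encode_frame(stream_id, frame_type, flags, payload):
    """Prefix an opaque payload with a small binary header."""
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream id must fit in 31 bits")
    if not 0 <= frame_type < 2**6 or not 0 <= flags < 2**10:
        raise ValueError("frame type is 6 bits, flags are 10 bits")
    return HEADER.pack(stream_id, (frame_type << 10) | flags) + payload


def decode_frame(frame):
    """Split a frame back into (stream_id, frame_type, flags, payload)."""
    stream_id, type_and_flags = HEADER.unpack_from(frame)
    return stream_id, type_and_flags >> 10, type_and_flags & 0x3FF, frame[HEADER.size:]


# The payload bytes are opaque to the framing layer: JSON here,
# but Protobuf or Avro bytes would be carried the same way.
frame = encode_frame(stream_id=1, frame_type=0x04, flags=0, payload=b'{"id": 7}')
print(frame.hex())
print(decode_frame(frame))
```

The framing layer never inspects the payload, which is why the choice of serialization format stays with the application.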

Requests Multiplexing

It uses a single physical connection to carry multiple logical streams between client and server, which resolves the 'Head of Line Blocking' issue.

Multiple Interaction Models

RSocket provides a simple communication interface, available with multiple interaction models:

- Request-Response (stream of 1).

- Fire-and-Forget (no response).

- Request-Stream (finite stream of many).

- Channel (bi-directional streams).

All these models are asynchronous and return a deferred result, a Mono or a Flux in reactive terminology.
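
The shape of the four models can be sketched in-process with Python's asyncio, where an awaitable stands in for a Mono and an async generator stands in for a Flux. This is not an RSocket client and nothing goes over the network; the `PriceService` class, its methods, and its data are hypothetical.

```python
import asyncio


class PriceService:
    """Hypothetical responder exposing the four interaction styles."""

    def __init__(self):
        self.audit_log = []

    async def request_response(self, symbol):
        # One request, exactly one deferred result.
        return {"symbol": symbol, "price": 101.5}

    async def fire_and_forget(self, event):
        # One request, nothing is returned to the caller.
        self.audit_log.append(event)

    async def request_stream(self, symbol, count):
        # One request, a finite stream of results.
        for tick in range(count):
            yield {"symbol": symbol, "tick": tick}

    async def channel(self, symbols):
        # A stream of requests in, a stream of results out.
        async for symbol in symbols:
            yield {"symbol": symbol, "price": 101.5}


async def main():
    service = PriceService()
    single = await service.request_response("ACME")
    await service.fire_and_forget("ACME viewed")
    ticks = [tick async for tick in service.request_stream("ACME", 3)]

    async def outgoing():
        for symbol in ("ACME", "INIT"):
            yield symbol

    quotes = [quote async for quote in service.channel(outgoing())]
    return single, ticks, quotes, service.audit_log


single, ticks, quotes, audit_log = asyncio.run(main())
print(single, ticks, quotes, audit_log, sep="\n")
```

In every case the caller gets back something to await or iterate over rather than a blocking call.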

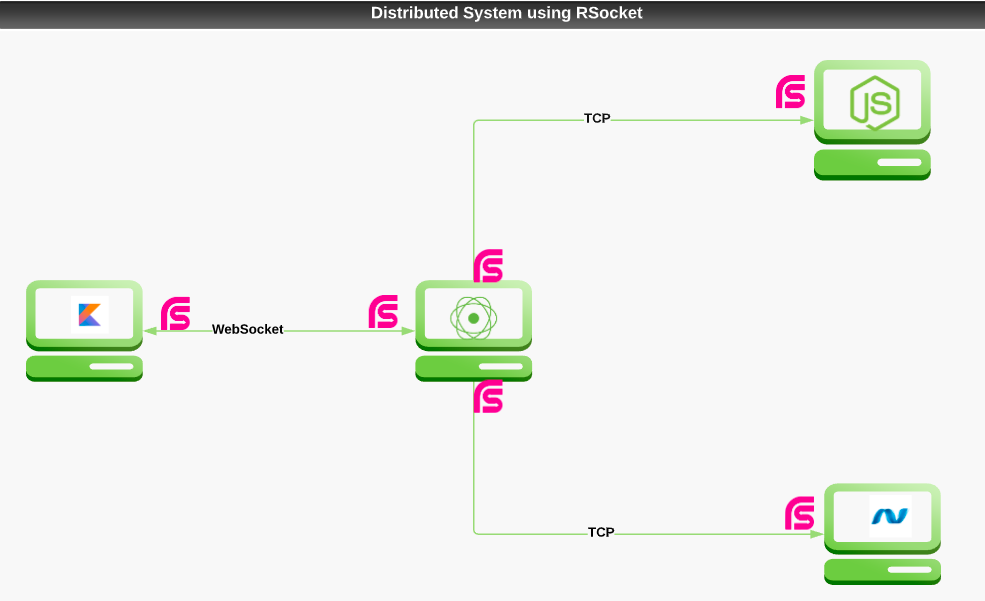

Transport Agnostic Communication

RSocket is an application layer protocol that can switch between different underlying transports such as TCP, WebSockets, and Aeron. TCP is the typical choice for server-to-server communication, WebSockets are more useful for server-to-browser communication, and Aeron (UDP-based) can be used where throughput is really critical.
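
One way to picture transport-agnostic design is a small interface that the protocol layer codes against, with interchangeable implementations underneath. The sketch below is purely illustrative: both transports are in-memory stand-ins, the class names are hypothetical, and the 3-byte length prefix is an assumption made for the example.

```python
from collections import deque
from typing import Protocol


class Transport(Protocol):
    """What the protocol layer needs: send a frame, receive a frame."""

    def send(self, frame: bytes) -> None: ...
    def receive(self) -> bytes: ...


class MessageTransport:
    """Stand-in for a message-oriented transport that preserves frame boundaries."""

    def __init__(self):
        self.queue = deque()

    def send(self, frame):
        self.queue.append(frame)

    def receive(self):
        return self.queue.popleft()


class ByteStreamTransport:
    """Stand-in for a byte-stream transport: frames need a length prefix."""

    def __init__(self):
        self.buffer = bytearray()

    def send(self, frame):
        self.buffer += len(frame).to_bytes(3, "big") + frame

    def receive(self):
        length = int.from_bytes(self.buffer[:3], "big")
        frame = bytes(self.buffer[3:3 + length])
        del self.buffer[:3 + length]
        return frame


def echo_round_trip(transport: Transport, message: bytes) -> bytes:
    """Protocol-level code that does not care which transport it runs on."""
    transport.send(message)
    return transport.receive()


print(echo_round_trip(MessageTransport(), b"hello"))     # b'hello'
print(echo_round_trip(ByteStreamTransport(), b"hello"))  # b'hello'
```

The calling code is identical for both transports, and only the object passed in changes.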

Application Semantics

Compared with HTTP, RSocket cuts out a lot of unnecessary application semantics. And compared with TCP and WebSockets, which are difficult to consume directly at the application layer because they lack application semantics, RSocket comes with easy-to-use semantics.

Built-In Flow Control

Other protocols rely on transport-level flow control, which only controls the number of bytes, whereas RSocket provides application-level message flow control, which is what we need. RSocket provides flow control for both sides:

- Reactive Streams, which is controlled by the Requester.

- Lease semantics, which are controlled by the Responder.
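
The requester-driven side can be sketched as credit-based flow control: the responder may only emit as many messages as the requester has asked for. The `Responder` class below is a hypothetical in-memory model of that idea, not RSocket's implementation, and it leaves out leases.

```python
from collections import deque


class Responder:
    """Emits items only while the requester has granted credit."""

    def __init__(self, items):
        self.pending = deque(items)
        self.credit = 0

    def request_n(self, n):
        """The requester signals that it can handle n more messages."""
        self.credit += n
        return self._drain()

    def _drain(self):
        sent = []
        while self.credit > 0 and self.pending:
            sent.append(self.pending.popleft())
            self.credit -= 1
        return sent


responder = Responder(range(10))

first_batch = responder.request_n(3)
second_batch = responder.request_n(2)

print(first_batch)             # [0, 1, 2]
print(second_batch)            # [3, 4]
print(len(responder.pending))  # 5 messages wait until more are requested
```

The consumer sets the pace in units of messages rather than bytes, so a fast producer cannot overwhelm it.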

Session Resumption

This feature allows long-lived streams to be resumed, which is useful for mobile-to-server communication where network connections drop, switch, and reconnect frequently.
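
The general idea behind resumption can be sketched with positions: the receiver remembers how much it has received, and after reconnecting the sender continues from that point instead of starting over. This is a simplified model that counts whole messages, the `ResumableStream` class and its `drop_after` parameter are hypothetical, and it is not how RSocket encodes resumption on the wire.

```python
class ResumableStream:
    """Sender side: keeps its messages so it can continue after a reconnect."""

    def __init__(self, messages):
        self.messages = list(messages)

    def send_from(self, position, drop_after=None):
        """Yield (position, message) pairs starting at `position`.

        `drop_after` simulates the connection dropping before that position.
        """
        for index in range(position, len(self.messages)):
            if drop_after is not None and index >= drop_after:
                return  # simulated network failure
            yield index, self.messages[index]


stream = ResumableStream(["a", "b", "c", "d", "e"])
received = []

# First connection drops after two messages have arrived.
for index, message in stream.send_from(0, drop_after=2):
    received.append(message)

# On reconnect, the receiver reports its position and the stream resumes there.
for index, message in stream.send_from(len(received)):
    received.append(message)

print(received)  # ['a', 'b', 'c', 'd', 'e']
```

Nothing is delivered twice and nothing is lost, and the application code on top does not have to restart the stream.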

Faster Than HTTP

Being a binary protocol with asynchronous communication makes RSocket up to 10x faster than HTTP, according to Netifi (source: https://www.netifi.com/rsocket).

RSocket also performs better than gRPC, which itself provides a performance improvement over plain HTTP/2.

(source: https://dzone.com/articles/rsocket-vs-grpc-benchmark)

Opportunities With RSocket

These features clearly show the importance of looking beyond traditional protocols like HTTP, at least for inter-service communication, which usually forms the backbone of any distributed system.

Microservices built on a reactive programming stack can improve performance and infrastructure utilization by using a reactive communication protocol such as RSocket.

- Although its advantages are not limited to a specific industry, its streaming features widen the scope of RSocket in real-time chat applications, GPS-based applications, online education with multimedia chat, and collaborative drawing.

- Any cloud-based application where a lot of data is exchanged via inter-service communication.

- Distributed systems that need lower latency and faster responses.

- Distributed systems that need to reduce operational cost through better CPU utilization and memory efficiency.

- Applications where the server wants to query a specific set of clients to debug an issue at run time. Thanks to the bi-directional behavior, the server can send requests to a client over an existing connection.

- The Fire-and-Forget feature can be used for noncritical tracing purposes.

Fig.4 shows a possible use of the RSocket protocol, where microservices implemented in various languages communicate with each other over the transport of their choice. RSocket's application layer semantics make the interaction easier. The microservices are no longer tightly coupled to protocol semantics and can use a simple, consistent RSocket interface.
