How We Built the New JSON API for Cassandra and Astra DB

We looked to the Mongoose project for the kinds of data access patterns that Node.js developers need to bring the scale of Cassandra to documents.

Join the DZone community and get the full member experience.

Join For FreeRecently, we began to consider how to make Apache Cassandra more accessible to a wider audience of developers, particularly for the largest community of all: Node.js developers. JSON is an important part of that developer ecosystem because of its flexibility.

Many Node.js applications use an object document mapper called Mongoose.js that simplifies the process of converting JavaScript objects to and from JSON documents stored in a document database. Mongoose has approximately 2 million downloads a week on npm, and 3.7 million public GitHub repositories list it as a dependency.

We began looking at the Mongoose project as a representation of the kinds of data-access patterns that Node.js developers need, especially in terms of filtering, projection, and updating. This includes features such as:

- Inserting and updating complex documents, including nested arrays and subdocuments

- Finding, updating, and deleting one or more documents based on criteria, including

- equality (including for arrays and subdocuments)

- multi-item equality (IN)

- inequality (NOT)

- comparison (<, <=, >, >=)

- existence

- and combinations of those criteria using AND and OR

- Sorting and projecting results based on a user-provided list of fields or subdocuments

Most of these data access patterns aren’t supported by Cassandra out of the box. Because it’s a distributed, table-oriented database, developers were traditionally encouraged to denormalize data to support reads and prioritize “no look” upserts. So, we began to explore how we could provide an API on top of Cassandra that could implement these access patterns with improved scalability and performance.

Our goal was to build the best backend for Mongoose. To accomplish this, we designed an API called the JSON API that is usable by Mongoose.js with only a configuration change or with any other language via the HTTP API. Earlier this month, we announced that the new JSON API is available in DataStax Astra DB vector databases and also can be used as part of the open source Stargate project, a data API gateway, against self-hosted Cassandra clusters.

In this article, we’ll explain the details of the JSON API design and describe how it takes advantage of new Cassandra features to yield a rich set of document-oriented functionality for Node.js with demonstrably good performance and scalability.

JSON API Architecture

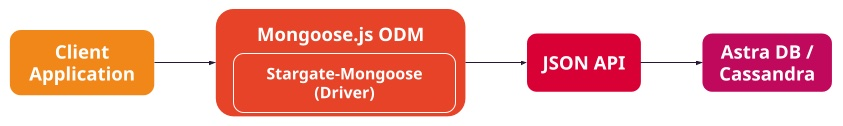

The key elements of this architecture are shown below. Client applications include the Mongoose JavaScript library along with the stargate-mongoose driver, packages available via npm. The developer just needs to configure the JSON API endpoint, and things are ready to go.

In designing the JSON API, we discovered that we could push the vast majority of the querying and filtering logic that Mongoose requires down into the Cassandra nodes themselves with a few key enhancements, especially improvements to the Storage Attached Index (SAI) implementation first introduced in Cassandra 4.0.

Introducing Super Shredding

To understand our design approach for the JSON API, it’s helpful to take a quick look back to set some context. Our first attempt back in 2021 at building a document-style API on top of Cassandra was the Stargate Docs API, based on a “document shredding” approach. While we were able to make some performance optimizations to this API, a key challenge was that the original shredding approach broke each document into components spread across multiple Cassandra rows.

Although the resulting schema provided useful flexibility to help implement some of the “exact match” desired filtering operations, more complex filtering required overfetching of documents and filtering in memory. This design also required multiple queries for document insertion, retrieval, update, and deletion operations. This hurt performance and added complexity to ensure consistency across multiple rows while those queries were in flight.

For the JSON API, we’re using an improved approach known as “super shredding,” which Aaron Morton described in a recent talk. The design of super shredding was developed via a logical thought process to create a performant, scalable solution:

- We started with the goal of pushing as much filtering and sorting as possible down to Cassandra, including the combination of filtering terms using logical AND and OR.

- To accomplish this pushdown, we took advantage of the “query engine” in SAI that can join the results from filtering on multiple indexes, a feature known as “match streaming.”

- To use match streaming, each JSON document must be stored in a single row, as this makes “Row ID” tracking in SAI more efficient.

- In order to store each document as a single row, we used sets and maps to store items of variable lengths.

Although the Mongoose.js API provides user-level control over indexing, we decided to build the JSON API to support efficient querying on all fields in a document without the user needing to create indexes. To achieve this, we separated the two concerns of the schema:

- Store the document for filtering and sorting. We create SAI indexes by default on all columns containing searchable (filterable) fields of a document.

- Store the document for projections, which must honor the field order of the original document. We also need the original document in order to process modifications made with reference to the full document contents.

Separating the two concerns led to optimizing the design for each concern, which created a more robust model.

Super Shredding Table Schema

Consider the following example, which we’ll use to describe the super shredding table schema and how it works.

The JSON API supports the concepts of namespaces and collections, which correspond to Cassandra keyspaces and tables, respectively. If a user created a namespace called purchase_database and a collection called products, the following Cassandra table would be created.

CREATE TABLE purchase_database.products (

key tuple<tinyint,text>,

tx_id timeuuid,

doc_json text,

exist_keys set<text>,

array_size map<text, int>,

array_contains set<text>,

query_bool_values map<text, tinyint>,

query_dbl_values map<text, double>,

query_timestamp_values map<text, timestamp>,

query_text_values map<text, text>,

query_null_values set<text>

PRIMARY KEY (key)

);

Let’s look at how these columns are used. Several of them are always populated for every document (row) that is inserted:

key: the primary key for the document. This corresponds to the_idfield in a Mongoose object. The primary key may be any of the supported atomic (non-null) JSON data types, such as text, double, or timestamp. The tuple for the key encodes the data type and the encoding of the value as a string.tx_id: a time-based (v1) UUID (universally unique identifier) that identifies the last version of the document. We’ll see below how this is used to update transactions to maintain consistency.doc_json: the full serialized text of the JSON document. This is used to materialize the document for projections and updates.exist_keys: a set of the JSON paths in the document that can be queried for existence. This is used to find documents that either have or do not have a specified path.

Other columns are optionally populated based on the contents of the document in order to support various application queries:

query_bool_values,query_dbl_values,query_timestamp_values,query_text_values: these map JSON paths to fields in the document that contain atomic values, including queries for array items. The fields can be of the supported JSON basic types, including text, doubles (used for all JSON number values), booleans and timestamps (part of Extended JSON). These are used to support equality and inequality filtering and sorting against fields in the document (except for arrays, as noted below). Thequery_text_valuesis also used to store hashed values of subdocuments, which supports searching for documents that contain a specified subdocument, for instance finding documents with a specified address.query_null_values: similar to the otherquerycolumns but represented as a set of keys since there are no actual values to store. This is used when filtering if a field is null or not null according to JSON null values.array_size: a map of JSON paths to fields in the document where the value is a JSON array, the value in the Cassandra Query Language map is the size of the array (including zero). This is used to find documents based on the exact size/length of an array in the document.array_contains: a set used for all equality checks on array elements (regardless of type). Each element includes a JSON path to an atomic value or item in an array field appended with a hash of the value. This is used to find documents that contain atomic values or arrays that have atomic values.

We created SAI indexes on the exist_keys, query_* and array_* columns to support fast filtering on lookups. We’ll see an example of this below.

Inserting Documents

Next, we’ll see what happens when a client application inserts a JSON document. We’ll focus on what happens in the JSON API and how it uses Cassandra.

Let’s assume the client application inserts the following document:

{

"insertOne": {

"document": {

"_id": "1",

"purchase_type": "Online",

"customer": {

"name": "Jim",

"phone": "123-123-1234",

"address": {

"address_line": "1234 Broadway",

"city": "New York",

"state": "NY"

}

},

"purchase_date": { "$date": 1695138780 },

"seller": {

"name": "Jon",

"location": "New York"

},

"items": [

{

"car" : "BMW",

"color": "Black"

},

"Extended warranty - 5 years"

],

"amount": 65000,

"status" : "active",

"preferred_customer" : true

}

}

}Another key design goal of the JSON API is not to leak “Cassandra-isms” into the interface. If you’re a Cassandra user, you know some of these, such as the Partition Key versus the Clustering Keys. Users will not see any CQL or “Cassandra-isms” when using the API or stargate-mongoose. However, curious Cassandra developers will be interested to see the CQL row that was inserted into the purchase table, which looks something like the output below (with some formatting and values omitted for readability):

cassandra@cqlsh:purchase_database> select key, tx_id, doc_json, query_text_values from purchase where key = (1, '1');

@ Row 1

-------------------+--------------------------------------------------

key | (1, '1')

tx_id | 94e5e560-51bc-11ee-bc96-432052799679

doc_json | {"_id":"1","purchase_type":"Online",...}

query_text_values | {'_id': '1',

'customer.address.address_line': '1234 Broadway',

'customer.address.city': 'New York',

'customer.address.state': 'NY',

'customer.name': 'Jim',

'customer.phone': '123-123-1234',

We’ll focus on the contents of the query_text_values field to demonstrate other aspects of the super shredding design.

Retrieving Documents

Next, let’s look at what happens if the client application queries for documents with a specific city. Here is the JSON API query:

cassandra@cqlsh:purchase_database> select key, tx_id, doc_json, query_text_values from purchase where key = (1, '1');

@ Row 1

-------------------+--------------------------------------------------

key | (1, '1')

tx_id | 94e5e560-51bc-11ee-bc96-432052799679

doc_json | {"_id":"1","purchase_type":"Online",...}

query_text_values | {'_id': '1',

'customer.address.address_line': '1234 Broadway',

'customer.address.city': 'New York',

'customer.address.state': 'NY',

'customer.name': 'Jim',

'customer.phone': '123-123-1234',

'items.0.car': 'BMW',

'items.0.color': 'Black',

'items.1': 'Extended warranty - 5 years',

'purchase_type': 'Online',

'seller.location': 'New York',

'seller.name': 'Jon',

'status': 'active',

... }

(1 rows)The JSON API takes this query and interprets the requested value for purchase_date as a string. Therefore, it performs the following CQL query using the query_text_valuescolumn:

SELECT key, tx_id, doc_json FROM purchase_database.purchase WHERE query_text_values["customer.address.city"] = "New York"

The document inserted above will match this query. This query works because when the client application created the purchases collection, the JSON API created an SAI index on the values of the query_text_values column:

CREATE CUSTOM INDEX IF NOT EXISTS purchase_query_text_values ON purchase_database.purchase (entries(query_text_values)) USING 'StorageAttachedIndex';

This is a simple equality query, but the SAI also supports more complex inequality or NOT queries. Make sure to check out the JSON API documentation to see all the supported options.

Updating Documents

The JSON API also supports the rich set of update commands expected by Mongoose.js for partial or full documents, including unsetting fields or removing subdocuments, as well as optionally returning projections of the original or updated document.

For example, the following JSON API query could be used to unset the preferred customer field from a document and return the updated document:

{

"findOneAndUpdate": {

"filter": {

"_id": "1"

},

"update" : {"$unset" : {"preferred_customer": ""}},

"options" : {"returnDocument" : "after"}

}

}

This demonstrates some of the complexity of dealing with JSON types; in this case, a Boolean value can be true, false, null or unset, and the app can use unset, falseor null to represent “not a preferred customer.

UPDATE purchase_database.purchase SET

tx_id = now(),

exist_keys = ?,

array_size = ?,

array_contains = ?,

query_bool_values = ?,

query_dbl_values = ?,

query_text_values = ?,

query_null_values = ?,

query_timestamp_values = ?,

doc_json = ?

WHERE key = (1, '1') IF tx_id = ? VALUES ...;The actual values have been omitted for brevity. Notice the use of the CQL IF clause, which checks to make sure that the tx_id has the value that was obtained from the initial document read. The IF clause implies the use of a lightweight transaction (LWT) to ensure consistent updates, in this case, that the document contents have not been changed since it was read. While not all possible update commands strictly require this protection, the correct design is to execute them all using this read-modify-write pattern. We designed this pattern to be able to take advantage of the new Accord-based transactions coming in Cassandra 5.0 for improved performance.

Performance Results

Experienced Cassandra users might have some questions about the performance implications of some elements of the design. For example:

- The use of collections may result in tombstones as items are removed.

- The use of indexes — but remember SAI is better!

- The use of LWTs for all document updates.

While these are valid concerns, it’s important to keep in mind that the performance expectations for a document store are different from those that many of us in the Cassandra community are accustomed to.

To validate that our implementation supports performance in keeping with the typical expectations of a document database, we executed benchmark tests of the JSON API running with a DataStax Astra serverless database. The tests were performed using the NoSQLBench and Fallout framework using a variety of queries and documents of different sizes and complexity. You can find the files used to execute these tests on the JSON API GitHub repository.

One test consisted of a warmup phase with multiple concurrent inserts, followed by a main phase including seven different operations running in parallel. The test executed each operation 10,000 times at a rate of 25 operations per second for a combined rate of 175 ops/s. The results are shown in the chart below:

| Operation | Median latency | 99th percentile latency |

| Find one by id | 7.84 ms | 16.25 ms |

| Find one by property | 10.53 ms | 18.00 ms |

| Find multi $eq and $exists | 13.62 ms | 24.77 ms |

| Find multi $eq and $exists with $projection | 13.27 ms | 24.26 ms |

| Find one, sort (narrow match) and update property | 10.80 ms | 18.43 ms |

| Delete one id | 14.175 ms | 23.78 ms |

| Find one id and replace | 14.11 ms | 23.56 ms |

As these results show, the JSON API was able to sustain consistent performance under a reasonably aggressive operational load. We’re continuing to work on performance testing and optimization and look forward to taking advantage of improvements in Cassandra to get even more speed and scalability.

Next Steps

Coming up, we’ll explain how we extended the JSON API to handle vector search, including how that affects the super shredding design. Future enhancements of the JSON API include the adoption of additional Cassandra features from 5.0 and beyond. For example, the new Accord feature will enable the JSON API to improve the performance of document update queries. In the meantime, we’ll be working on hardening and performance improvements as we look toward an official general availability release in the near future.

Published at DZone with permission of Aaron Morton. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments