How to Use Hugging Face Models for NLP, Audio Classification, and Computer Vision

When using Hugging Face for NLP, audio classification, or computer vision, users need to know what Hugging Face offers for each project type.

Those who have spent any time studying models and frameworks for NLP, audio classification, or computer vision projects are likely wondering how to use Hugging Face for some of these models. Hugging Face is a platform that serves both as a community for those working with data models and as a hub for data science models and information.

When using Hugging Face for NLP, audio classification, or computer vision, users will need to know what Hugging Face has to offer for each project type compared with other options. Users will also need a deeper understanding of what a Hugging Face model is and how to use Hugging Face for their own data science projects.

A Hugging Face model is a machine learning (or AI) model that is shared through the Hugging Face platform and loaded with the libraries maintained by Hugging Face and its community. Many of these models are pre-trained and can be used right away, largely within the PyTorch framework. PyTorch is an open-source deep learning library for Python that is widely used in data science. Because many Hugging Face models are built with PyTorch and comparable frameworks such as TensorFlow, often all a user needs to supply is the data. More importantly, Hugging Face aims to make data science projects accessible to anyone who wants to learn. This means the company is constantly working on ways for the community to collaborate on projects, make them simpler to use and implement, and find creative solutions to difficult problems in data science.

By using Hugging Face, users can start their NLP, computer vision, or audio classification project quickly and easily.

How to Use Hugging Face

Hugging Face provides ready-made pipelines for pre-trained models, whether for NLP or another task. A pipeline bundles a pre-trained model with the preprocessing and postprocessing steps it needs, so raw input goes in and predictions come out. This makes start-up even faster because users can dive right in with a model they already know is going to work, at least in some capacity, for their needs.
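As a rough illustration of how little setup a pipeline needs, here is a minimal sketch using the `pipeline` function from the `transformers` library. The `"sentiment-analysis"` task is just one possible choice, and the helper names `top_prediction` and `classify_text` are made up for this example. It assumes `transformers` and a backend such as PyTorch are installed, and that the default checkpoint can be downloaded on first use.

```python
from typing import Dict, List


def top_prediction(predictions: List[Dict]) -> Dict:
    """Return the highest-scoring entry from a list of {'label', 'score'} dicts."""
    return max(predictions, key=lambda p: p["score"])


def classify_text(text: str) -> Dict:
    """Run a default sentiment pipeline on one string (hypothetical helper)."""
    # Imported lazily so top_prediction() works even without transformers installed.
    from transformers import pipeline

    # With no model argument, the library picks a default checkpoint for the task
    # and downloads it the first time it is used.
    classifier = pipeline("sentiment-analysis")
    return top_prediction(classifier(text))


if __name__ == "__main__":
    print(classify_text("Hugging Face pipelines are quick to set up."))
```

The pipeline returns a list of dictionaries with a `label` and a `score`, which is why a small helper is enough to pick the most likely answer.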

With the community built around Hugging Face, users have access to a substantial amount of resources and individual expertise to pull from to make sure their Hugging Face models for audio classification and other projects go off without a hitch.

What Is Hugging Face for NLP?

Hugging Face models for NLP are the bread and butter of the Hugging Face community. Hugging Face started out years ago as a chat app, and the community was born from a desire to communicate and connect more clearly and effectively. For users who want to build a machine learning model for natural language processing, Hugging Face is a strong fit.

In fact, Hugging Face has made extra efforts in recent months to bring the power of BERT (Bidirectional Encoder Representations from Transformers) to its models and platform. BERT is a language model technique originally developed by Google for more effective and efficient natural language processing. This has made adopting a Hugging Face model for NLP far easier, because these top-of-the-line models are, for the most part, plug-and-play.
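To give a sense of what "plug-and-play" can look like with BERT, the sketch below asks a BERT checkpoint to guess a hidden word. It assumes the `transformers` library is installed and uses the publicly available `bert-base-uncased` checkpoint with the `"fill-mask"` task. The helper names `mask_word` and `predict_masked` are illustrative only.

```python
from typing import List, Tuple


def mask_word(sentence: str, word: str, mask_token: str = "[MASK]") -> str:
    """Replace the first occurrence of `word` with BERT's mask token."""
    if word not in sentence:
        raise ValueError(f"{word!r} does not occur in the sentence")
    return sentence.replace(word, mask_token, 1)


def predict_masked(sentence: str, top_k: int = 3) -> List[Tuple[str, float]]:
    """Return BERT's top guesses for the single masked position (hypothetical helper)."""
    # Imported lazily so mask_word() works even without transformers installed.
    from transformers import pipeline

    unmasker = pipeline("fill-mask", model="bert-base-uncased")
    return [(p["token_str"], p["score"]) for p in unmasker(sentence, top_k=top_k)]


if __name__ == "__main__":
    masked = mask_word("The capital of France is Paris.", "Paris")
    for token, score in predict_masked(masked):
        print(f"{token}: {score:.3f}")
```

Because BERT reads the words on both sides of the mask, it can use the whole sentence as context when ranking candidate words.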

What Is NLP?

As mentioned before, NLP stands for natural language processing. NLP is, at its core, about communication between technology and people. NLP models are used to help AI models and other technology 1) recognize human language, whether written or spoken, 2) accurately understand what is being said, and 3) interpret what is being said based on context.

Natural language processing is used in many of our daily devices, and the technology is ever-improving. The limits of NLP are set only by the creativity and imagination of those working on machine learning models for NLP. Hugging Face models for NLP give much more freedom and flexibility to data scientists and developers who want clearer, more effective communication between technology and the people using it.

What Is Hugging Face for Audio Classification?

Just as Hugging Face can be used for NLP by recognizing and understanding human speech, there are also Hugging Face models for audio classification. Many of the available machine learning model frameworks used with Hugging Face are similar to the ones used for NLP.

The major difference between the two is that NLP tries to recognize speech, while audio classification looks for a wider range of possible sounds, even though each model targets a specific set of sounds within its data.

The ability to isolate specific frequencies and pitches is the determining factor for audio classification. Hugging Face models allow some of this information to be parsed out, but the machine learning models need much more in-depth training before they can be put into regular use.

For example, if a machine learning model is being trained to distinguish classic rock from modern rock, it will need to hear a huge sampling of songs and instruments. However, if it only needs to recognize the different calls of a single animal species, a smaller set of data is enough.
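A pre-trained audio classifier can be tried out in much the same way as a text model. The sketch below is an assumption-laden example rather than a recommended setup: it uses the `"audio-classification"` task from `transformers` with whatever default checkpoint the library selects, reads a hypothetical local file named `sample.wav`, and relies on `ffmpeg` being installed so the file can be decoded. The helper names are invented for illustration.

```python
from typing import Dict, List


def labels_above(predictions: List[Dict], threshold: float) -> List[str]:
    """Keep only the labels whose score reaches the threshold."""
    return [p["label"] for p in predictions if p["score"] >= threshold]


def classify_audio(path: str, threshold: float = 0.5) -> List[str]:
    """Classify one audio file and return the confident labels (hypothetical helper)."""
    # Imported lazily so labels_above() works even without transformers installed.
    from transformers import pipeline

    # No model is named here, so the library falls back to its default checkpoint.
    # A model fine-tuned on the sounds of interest would normally be passed instead.
    classifier = pipeline("audio-classification")
    return labels_above(classifier(path), threshold)


if __name__ == "__main__":
    # "sample.wav" is a placeholder path, not a file shipped with any library.
    print(classify_audio("sample.wav"))
```

The default checkpoint only knows the labels it was trained on, so a project like the rock-genre or animal-call examples above would still need a model trained or fine-tuned on that specific data.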

What Is Audio Classification?

Audio classification, as mentioned earlier, is the ability of a machine learning model to process, understand, and interpret audio inputs. In the simplest terms, think of this as how Shazam works to recognize what song is playing.

It listens, understands, and interprets what the audio data being fed into it means and then spits out a conclusion. Audio classification goes far beyond recognizing what song is playing on the radio, though. This technology has been used to predict and analyze environmental and meteorological information, to help biologists identify and understand more about certain animal species, and even to alert owners and workers in industrial complexes when a system is malfunctioning.

What Is Hugging Face for Computer Vision?

There is a dedicated toolkit for computer vision with Hugging Face known as HugsVision, an open-source library built on top of Hugging Face models. With Hugging Face focusing so much on making data science accessible to all kinds of people, computer vision has become one of its main focuses. HugsVision is especially well suited to the healthcare industry but can also be used in other industries and use cases.

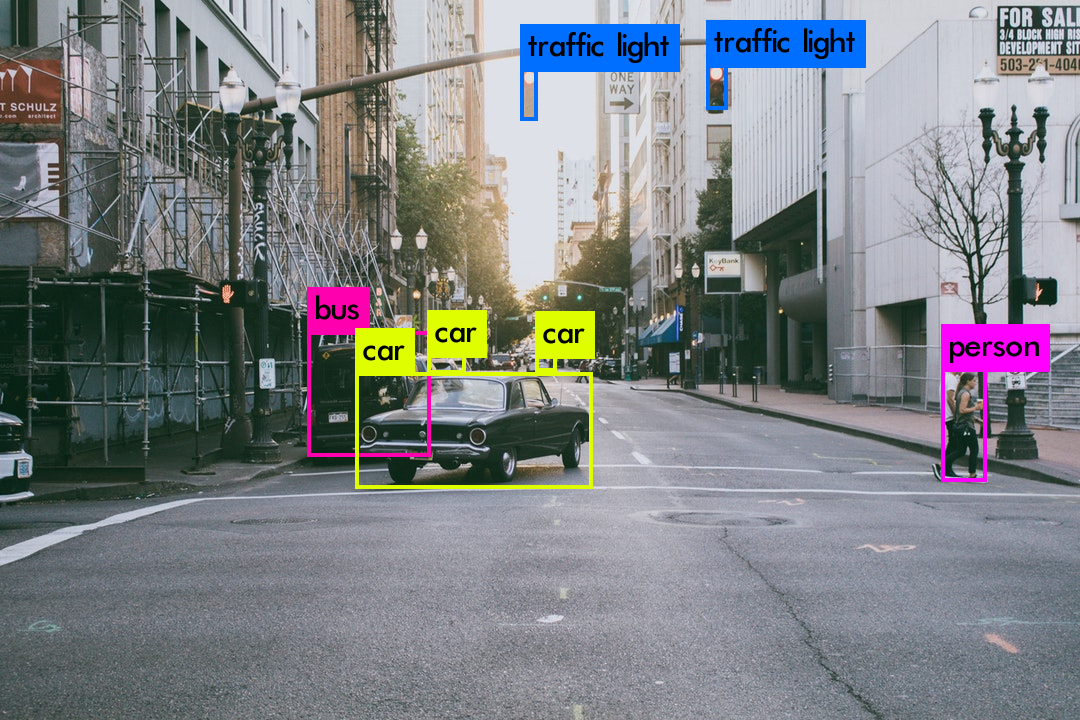

Within the Hugging Face community, there are many who are working to make more reliable technology that can “see” the world around it. These machine learning models can then be trained to alert authorities, generate predictions based on current data, and even decide on appropriate actions to take based on observed data. Some of these are more advanced than others, but computer vision has many use cases and a significant amount of interest within the Hugging Face community and platform.
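For a sense of how a model that "sees" can be called, here is a minimal sketch. Note that it uses the generic `"image-classification"` task from the `transformers` library rather than HugsVision, and it lets the library choose its default checkpoint. The file name `photo.jpg` and the helper names are placeholders, and the example assumes `transformers`, a backend such as PyTorch, and Pillow are installed.

```python
from typing import Dict, List


def format_predictions(predictions: List[Dict]) -> List[str]:
    """Turn {'label', 'score'} dicts into readable 'label: 93.2%' strings."""
    return [f"{p['label']}: {p['score']:.1%}" for p in predictions]


def describe_image(path: str) -> List[str]:
    """Classify one image and return formatted predictions (hypothetical helper)."""
    # Imported lazily so format_predictions() works even without transformers installed.
    from transformers import pipeline

    # The pipeline accepts a local path, a URL, or a PIL image.
    classifier = pipeline("image-classification")
    return format_predictions(classifier(path))


if __name__ == "__main__":
    # "photo.jpg" is a placeholder path used only for illustration.
    for line in describe_image("photo.jpg"):
        print(line)
```

The scores returned by the pipeline are what a downstream system would act on, for example by raising an alert only when a label of interest passes a chosen confidence level.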

What Is Computer Vision?

As stated above, computer vision is the ability of a machine learning model to take in visual data and draw conclusions from it. Sometimes this means generating expected outcomes and predictions, such as determining peak traffic times for civil engineers to plan around. Other times it means predicting the weather for crop rotations based on a wide variety of visual data inputs.

Either way, computer vision holds a significant amount of potential for communities and industries.

Hugging Face prides itself on being the most accessible and easy-to-pick-up machine learning framework available. It supports models for audio classification, computer vision, and NLP, as well as a plethora of other tasks.

Published at DZone with permission of Kevin Vu. See the original article here.
