How Lufthansa Uses Apache Kafka for Data Integration and Machine Learning

The airline Lufthansa uses Apache Kafka as cloud-native middleware for data integration and as data fabric for analytics and machine learning.

Aviation and travel are notoriously vulnerable to social, economic, and political events, as well as the ever-changing expectations of consumers. The coronavirus was just one piece of the challenge. This post explores how Lufthansa leverages data streaming powered by Apache Kafka as cloud-native middleware for mission-critical data integration projects and as a data fabric for AI/machine learning scenarios such as real-time predictions in fleet management. An on-demand video of an interactive conversation with Lufthansa is included at the end if you want to learn more.

Data Streaming in the Aviation Industry

The future business of airlines and airports will be digitally integrated into the ecosystem of partners and suppliers. Companies will provide more personalized customer experiences and be enabled by a new suite of the latest technologies, including automation, robotics, and biometrics.

Data streaming powered by Apache Kafka is already in use across the aviation industry. This includes airlines, airports, global distribution systems (GDS), aircraft manufacturers, travel agencies, etc. Why? Because real-time data beats slow data across almost all use cases.

This article focuses on data streaming in critical Lufthansa projects. Lufthansa is a major German airline and one of the largest in Europe. It is known for its extensive network of domestic and international flights. Lufthansa offers services ranging from passenger transportation to cargo logistics and is a member of the Star Alliance, one of the world's largest airline alliances.

Apache Kafka as Next-Generation Middleware Replacing ETL, ESB, and iPaaS

Typically, an enterprise service bus (ESB) or other integration solutions like extract-transform-load (ETL) tools have been used to decouple systems. However, the sheer number of connectors, as well as the requirement that applications publish and subscribe to the data at the same time, mean that systems are always intertwined. As a result, development projects depend on other systems, and nothing can be truly decoupled.

Many enterprises leverage the ecosystem of Apache Kafka for the successful integration of different legacy and modern applications. Data streaming differs from, but also complements, existing integration solutions like ESB or ETL tools. Apache Kafka is unique because it combines the following characteristics into a single middleware platform:

- Real-time messaging at any scale

- Event store for true decoupling, backpressure handling, and replayability of historical events

- Data integration that eliminates the need for additional integration tools

- Stream processing for stateless and stateful data correlation of real-time and historical data
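
To make the event store and replayability points more concrete, here is a minimal sketch in plain Python. It is not Kafka and uses no Kafka client; the `EventLog` class, the group names, and the flight events are all hypothetical stand-ins. The idea it illustrates is that producers only append to a log, while each consumer group tracks its own read position and can rewind it.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy append-only log; a stand-in for a single topic partition."""
    events: list = field(default_factory=list)
    offsets: dict = field(default_factory=dict)  # consumer group -> next offset

    def append(self, event):
        """Producer side: add an event and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def poll(self, group, max_events=10):
        """Consumer side: read from the group's own position, then advance it."""
        start = self.offsets.get(group, 0)
        batch = self.events[start:start + max_events]
        self.offsets[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        """Move a group's position so it can replay historical events."""
        self.offsets[group] = offset


log = EventLog()
for status in ["boarding", "departed", "landed"]:
    # Hypothetical flight events, for illustration only.
    log.append({"flight": "LH400", "status": status})

# Two independent consumer groups: each keeps its own offset,
# so a slow reader never blocks a fast one.
ops_batch = log.poll("operations", max_events=2)
analytics_batch = log.poll("analytics")

# Replay: the analytics group re-reads the history from the beginning.
log.seek("analytics", 0)
replayed = log.poll("analytics")
```

Because the log keeps events after they are read, a new downstream application can start from offset 0 without the producer knowing it exists, which is the decoupling the list above describes.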

"Apache Kafka vs. Enterprise Service Bus (ESB) – Friends, Enemies or Frenemies?" explores how data streaming with Kafka complements legacy middleware. If your workloads run mostly in the public cloud, you need to understand the difference between Integration Platform as a Service (iPaaS) and data streaming powered by fully managed Kafka infrastructure.

Lufthansa Uses Apache Kafka as Cloud-Native Middleware for Mission-Critical Integrations

Lufthansa leverages data streaming with Confluent as cloud-native middleware for its strategic integration project KUSCO (Kafka Unified Streaming Cloud Operations).

The team discussed the benefits of using Apache Kafka instead of traditional messaging queues (TIBCO EMS, IBM MQ) for data processing. My two favorite statements:

- “Scaling Kafka is inexpensive”

- “Kafka adopted and integrated within 3 months”

Lufthansa’s Kafka architecture holds no surprises. A key lesson learned from many companies: The real added value is created when you leverage Kafka not just for messaging, but for its entire ecosystem, including different clients/proxies, connectors, stream processing, and data governance.

The result at Lufthansa: A better, cheaper, and faster infrastructure for real-time data processing at scale.

Watch the full talk from Marcos Carballeira Rodríguez from Lufthansa Group recorded at the Confluent Streaming Days 2020 to see all the architectures and quotes from Lufthansa. More and more projects are onboarded on the KUSCO platform. Here are a few statistics on the adoption from 2022 to 2023 of the KUSCO project that System Architect Krzysztof Torunski of Lufthansa Group presented:

I see this typical pattern in customers across industries: The first use case is the hardest to get live. Afterward, new business units tap into the data feeds and build their projects. It has never been easier to access data feeds in real-time and with good data quality at any scale. Just build a downstream application (with your favorite programming language, tool, or SaaS) and start innovating.

Apache Kafka for Analytics and AI/Machine Learning

Apache Kafka serves thousands of enterprises as the mission-critical and scalable real-time data fabric for machine learning infrastructures. The evolution of Generative AI (GenAI) with large language models (LLMs) like ChatGPT changed how people think about intelligent software and automation. In various blog posts, I explored the relationship between data streaming with the Kafka ecosystem and AI/machine learning.

Lufthansa Uses Apache Kafka With AI/Machine Learning for Real-Time Predictions

Lufthansa leverages the KUSCO platform to build new analytics use cases with real-time data for critical workloads. In the webinar, we learned about the following two projects from Lufthansa Group's Domain Architect Sebastian Weber: anomaly detection for alerts and fleet management for aircraft operations.

Anomaly Detection With Apache Kafka and ksqlDB

Data is fed into the streaming platform from various data sources. Lufthansa consolidates and aggregates the data with stream processing before the analytics applications do real-time alerting.
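
The article does not show Lufthansa's actual queries, so the following is only a sketch of the general pattern: aggregate readings per source into fixed time windows, then alert when a windowed average crosses a limit. It is plain Python over an in-memory list rather than ksqlDB over a stream, and the window size, threshold, source names, and values are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

WINDOW_SECONDS = 60      # assumed tumbling-window size
ALERT_THRESHOLD = 100.0  # assumed limit for the windowed average


def window_averages(readings, window_seconds=WINDOW_SECONDS):
    """Average (timestamp, source, value) readings per source and tumbling window."""
    buckets = defaultdict(list)
    for timestamp, source, value in readings:
        # Align each reading to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        buckets[(source, window_start)].append(value)
    return {key: mean(values) for key, values in buckets.items()}


def alerts(averages, threshold=ALERT_THRESHOLD):
    """Return the (source, window_start) keys whose average exceeds the threshold."""
    return sorted(key for key, avg in averages.items() if avg > threshold)


# Hypothetical readings: (timestamp in seconds, source, value).
readings = [
    (0, "sensor-a", 90.0),
    (30, "sensor-a", 95.0),
    (65, "sensor-a", 120.0),
    (90, "sensor-a", 130.0),
    (10, "sensor-b", 50.0),
]
averages = window_averages(readings)
triggered = alerts(averages)
```

In a streaming engine the same logic would run continuously and emit results as windows close; the batch version here only shows how consolidation (grouping by source and window) comes before the alerting step.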

Machine Learning and Apache Kafka for Real-Time Fleet Management

Lufthansa leverages the streaming platform as a data fabric for data ingestion, data processing, and model scoring.

Embedding analytic models into a Kafka application is a standard best practice. While the data lake or lakehouse (that receives data via Kafka) trains the model in batch, many use cases require real-time model scoring and predictions at scale with critical SLAs and low latency. That's exactly the sweet spot of the Kafka ecosystem.

You can either directly embed a model into the Kafka app or leverage a model server that supports streaming interfaces.
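
As a rough illustration of the first option, embedding the model in the application, the sketch below scores each event as it arrives. It assumes a tiny logistic-regression style model whose weights were trained elsewhere; the weights, feature names, and aircraft identifiers are hypothetical, and a plain Python iterable stands in for the Kafka consumer loop.

```python
import math

# Hypothetical weights, standing in for a model trained offline in batch.
WEIGHTS = {"engine_temp": 0.08, "vibration": 1.5}
BIAS = -10.0


def score(features):
    """Return a logistic score between 0 and 1 for one event's features."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def score_stream(events):
    """Score events one at a time as they arrive, yielding enriched copies."""
    for event in events:
        yield {**event, "risk": score(event["features"])}


# In a real application these events would come from a consumer poll loop.
incoming = [
    {"aircraft": "aircraft-1", "features": {"engine_temp": 90.0, "vibration": 1.0}},
    {"aircraft": "aircraft-2", "features": {"engine_temp": 120.0, "vibration": 3.0}},
]
scored = list(score_stream(incoming))
```

Keeping the model inside the application avoids a network round trip per event, which helps with latency; a separate model server trades that for easier model rollout and sharing across applications.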

Interactive Conversation With Lufthansa

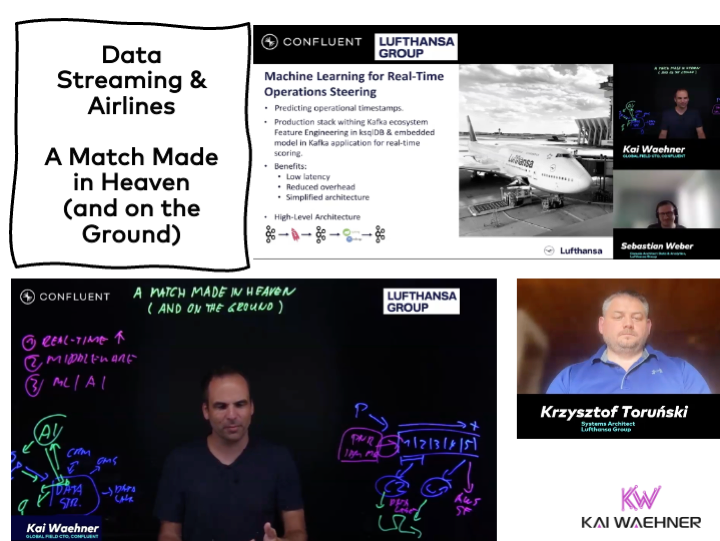

Here is an on-demand video of my conversation with Lufthansa. We talk about use cases for data streaming in the aviation industry and how Lufthansa leverages Apache Kafka as cloud-native middleware and as the data fabric for analytics and machine learning:

Data Streaming as Cloud-Native Middleware and for Mission-Critical Analytics

Lufthansa showed us how you can innovate in the airline industry with a fast time-to-market while still integrating with traditional technologies. The two projects show very different challenges and use cases solved with data streaming powered by the Apache Kafka ecosystem.

The aviation industry is changing rapidly. A good customer experience, valuable loyalty platforms, and competitive pricing (or better hard and soft products) require digitalization of the end-to-end supply chain. This includes topics like Industrial IoT (e.g., predictive maintenance), B2B communication with partners (like GDS, airports, and retailers), and customer 360 (including great mobile apps and omnichannel experiences).

How do you leverage data streaming with Apache Kafka in your projects and enterprise architecture? Let’s connect on LinkedIn and discuss it!

Published at DZone with permission of Kai Wähner, DZone MVB. See the original article here.

