GPU-Accelerated IoT Workloads at the Edge

Bring IoT to the Edge!

Introduction

In this article, we will look at how to create GPU-accelerated IoT Edge workloads targeting the NVIDIA Jetson line of IoT devices. Portions of this content are planned for delivery during an interactive workshop session at Microsoft Ignite 2019.

If you are unable to attend the conference in person, we will give you all of the details that you need to know to run this content on your own, or in a public workshop environment. Let's get started.

Background

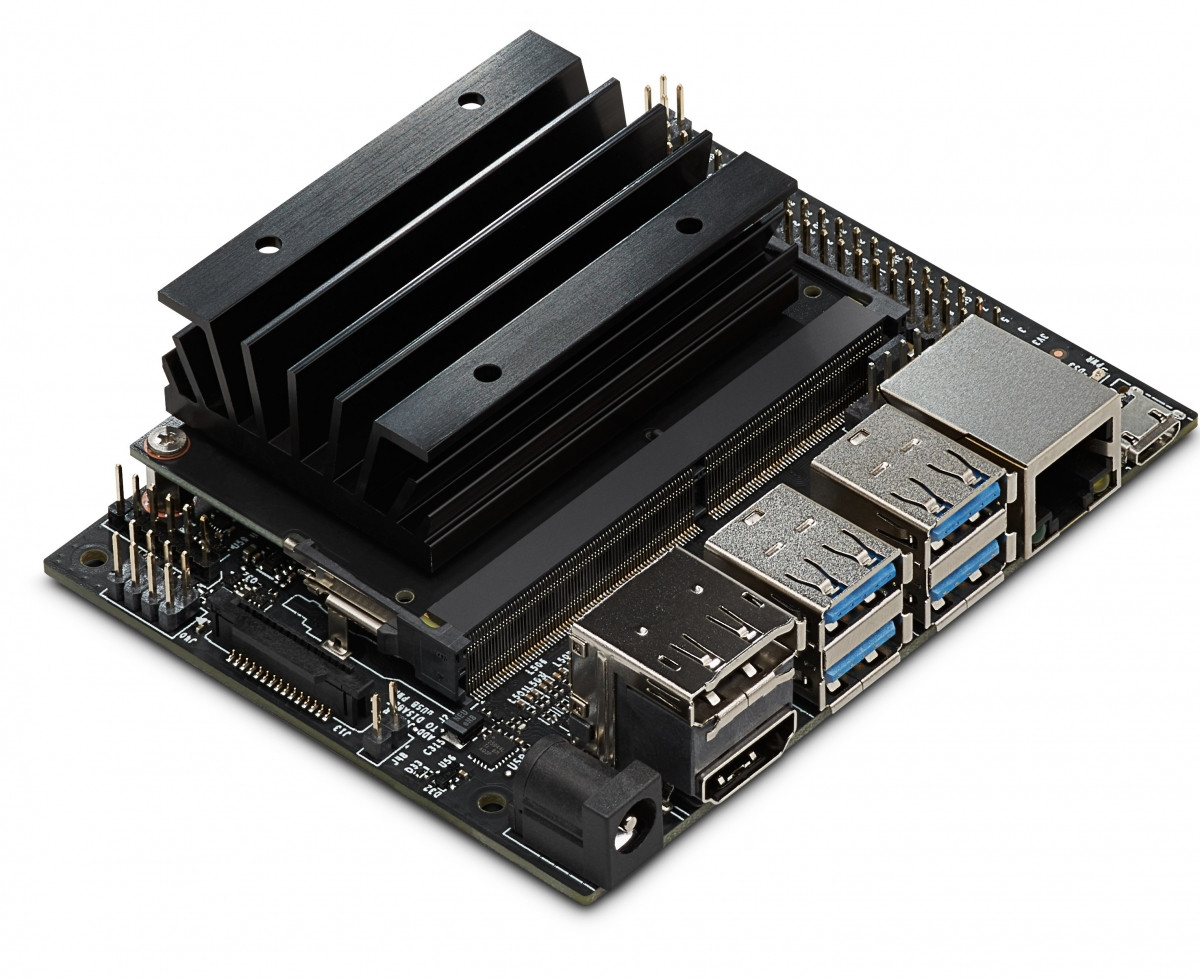

NVIDIA has recently produced a line of small-form-factor IoT devices under the brand name 'Jetson' that come equipped with onboard GPUs to enable complex video, machine learning, and AI-powered applications. This is a paradigm shift for IoT, as it enables the emergence of the Artificial Intelligence of Things, or AIOT, in light and heavy edge scenarios. To accommodate various workloads, these devices come in a range of offerings, including the beefy 512-core Jetson AGX Xavier, the mid-range 256-core Jetson TX2, and the entry-level $99 128-core Jetson Nano.

Artificial Intelligence and Internet of Things applications aren't entirely new; however, these solutions typically rely on off-site processing of AI workloads in a heavy compute environment that then communicates results downstream for eventual use. AIOT changes this by allowing AI workloads to run on-site, potentially on a device in the field that is also producing telemetry in the form of sensor readings or video capture.

By running accelerated workloads on-device, we reduce our reliance on external compute for AI processing and can obtain and act on results immediately, even when disconnected from the Internet. This is highly advantageous for mission-critical computer vision workloads in environments where outbound network connectivity is unreliable or unavailable, for example, on offshore vessels or off-network factory floors.

Solution

Microsoft's IoT Edge platform is designed specifically for these types of disconnected and intermittent network environments. The IoT Edge solution accomplishes this through the safe deployment of code to IoT devices using containerized modules for workload processing.

These modules can leverage the wide variety of public containers available on Docker Hub, or your own custom container images that you would typically deploy as cloud applications. You can also run popular Microsoft Azure services as containerized modules; these include Serverless Functions, Stream Analytics, Machine Learning modules, Custom Vision AI services, and local storage with SQL Server.

In both cases, you can leverage containerization to optimize where you want your workloads to run (cloud or edge), based on your scenario and constraints. These modules can even be designed to take advantage of onboard capabilities that may be available on a given device, including access to GPU hardware.
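As a rough illustration of how a module can be given access to GPU hardware, the sketch below builds one module entry of the kind found in an IoT Edge deployment manifest. It assumes the device's container engine has an NVIDIA runtime registered under the name `nvidia`; the helper `build_module_spec` and the image name are hypothetical, and the workshop's actual manifest may configure GPU access differently.

```python
import json


def build_module_spec(image: str, runtime: str = "nvidia") -> dict:
    """Build a single module entry for an IoT Edge deployment manifest.

    Assumption: requesting the container runtime by name through
    HostConfig.Runtime is enough to expose the GPU on the target device.
    """
    # Container create options are passed to the container engine as-is.
    create_options = {"HostConfig": {"Runtime": runtime}}
    return {
        "version": "1.0",
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {
            "image": image,
            # IoT Edge expects createOptions as a JSON string, not an object.
            "createOptions": json.dumps(create_options),
        },
    }


if __name__ == "__main__":
    # Hypothetical image name, used only for illustration.
    spec = build_module_spec("example.azurecr.io/yolo-module:latest")
    print(json.dumps(spec, indent=2))
```

Keeping the create options in a dictionary until the last moment avoids hand-escaping the nested JSON string that the manifest requires.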

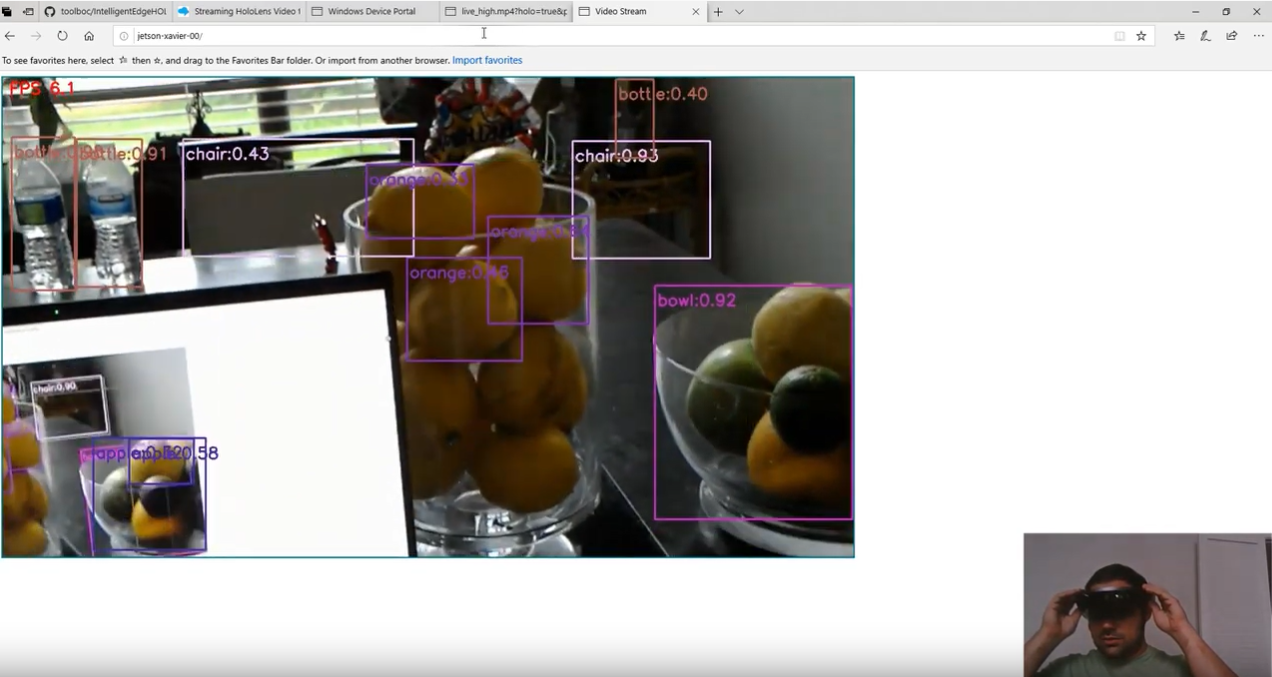

In the remainder of this article, we will show how to build containerized software packages as modules for these devices using Azure IoT Edge. We will use dedicated tooling in Visual Studio Code to configure those modules, and then deploy and manage them through Azure IoT Hub. This will culminate in an Intelligent Edge Hands-On Lab that walks through the process of deploying an IoT Edge module to an NVIDIA Jetson Nano device to detect objects in YouTube videos, RTSP streams, HoloLens Mixed Reality Capture, or an attached webcam.

Getting Started

This lab requires that you have access to the following materials:

Hardware:

- NVIDIA Jetson Nano Device

- A cooling fan installed on or pointed at the NVIDIA Jetson Nano device

- USB Webcam (Optional)

- Note: When using a webcam, the power consumption requires that your device be configured to use a 5V/4A barrel adapter, and the camera must be OpenCV-compatible.

Development Environment:

- Visual Studio Code (VSCode)

- VSCode Extensions

- Git tool(s), such as the Git command line

Workshop Content

Once you have verified that you have the materials listed above in the "Getting Started" section, you are ready to follow along with the workshop content.

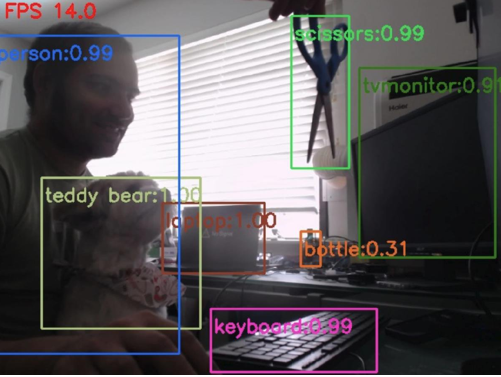

The workshop goes by the title "Intelligent Edge Hands-On Lab," or IntelligentEdgeHOL, and walks through the process of deploying an IoT Edge module to an NVIDIA Jetson Nano device to detect objects in YouTube videos, RTSP streams, HoloLens Mixed Reality Capture, or an attached webcam. It achieves a performance of around 10 frames per second for most video data.
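To put the frames-per-second figure in context, here is a minimal sketch of how throughput of this kind could be measured. It is not the workshop's code: `measure_fps`, its `process` callback, and the injectable `clock` are hypothetical names chosen for illustration, and the per-frame work is simulated with a short sleep.

```python
import time
from typing import Callable, Iterable


def measure_fps(
    frames: Iterable,
    process: Callable[[object], object],
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Return the average frames per second achieved by `process`."""
    count = 0
    start = clock()
    for frame in frames:
        process(frame)  # stand-in for running detection on one frame
        count += 1
    elapsed = clock() - start
    # Guard against division by zero when nothing measurable happened.
    return count / elapsed if elapsed > 0 else 0.0


if __name__ == "__main__":
    # Simulate a detector that takes roughly 100 ms per frame.
    fps = measure_fps(range(20), lambda frame: time.sleep(0.1))
    print(f"average throughput: {fps:.1f} frames per second")
```

At 10 frames per second, each frame has a budget of about 100 ms for capture, inference, and any drawing of results.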

The module ships as a fully self-contained Docker image totaling around 5.5 GB. This image contains all necessary dependencies, including the NVIDIA Linux for Tegra drivers for Jetson Nano, CUDA Toolkit, NVIDIA CUDA Deep Neural Network library (cuDNN), OpenCV, and Darknet.

Object detection is accomplished using YOLOv3-tiny with Darknet, which supports detection of the following:

person, bicycle, car, motorbike, aeroplane, bus, train, truck, boat, traffic light, fire hydrant, stop sign, parking meter, bench, bird, cat, dog, horse, sheep, cow, elephant, bear, zebra, giraffe, backpack, umbrella, handbag, tie, suitcase, frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove, skateboard, surfboard, tennis racket, bottle, wine glass, cup, fork, knife, spoon, bowl, banana, apple, sandwich, orange, broccoli, carrot, hot dog, pizza, donut, cake, chair, sofa, potted plant, bed, dining table, toilet, tv monitor, laptop, mouse, remote, keyboard, cell phone, microwave, oven, toaster, sink, refrigerator, book, clock, vase, scissors, teddy bear, hair drier, toothbrush
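In practice, an application usually cares about only a few of these classes. The sketch below shows one way detections could be narrowed to the labels of interest above a confidence threshold. The `Detection` type and `filter_detections` helper are hypothetical and are not part of Darknet or the workshop module; check the exact label strings your model emits before relying on them.

```python
from typing import Iterable, List, NamedTuple, Set, Tuple


class Detection(NamedTuple):
    """One detected object (hypothetical shape, for illustration only)."""
    label: str
    confidence: float                # score between 0.0 and 1.0
    box: Tuple[int, int, int, int]   # x, y, width, height in pixels


def filter_detections(
    detections: Iterable[Detection],
    wanted: Set[str],
    min_confidence: float = 0.5,
) -> List[Detection]:
    """Keep detections whose label is wanted and whose score is high enough."""
    return [
        d for d in detections
        if d.label in wanted and d.confidence >= min_confidence
    ]


if __name__ == "__main__":
    sample = [
        Detection("person", 0.91, (10, 20, 80, 200)),
        Detection("dog", 0.42, (120, 150, 60, 50)),
        Detection("car", 0.77, (300, 90, 160, 90)),
    ]
    for d in filter_detections(sample, {"person", "dog"}):
        print(d.label, d.confidence)
```

Filtering on the device keeps the telemetry sent upstream small, which matters when connectivity is intermittent.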

You can follow along with the content to create your very own GPU-accelerated IoT Edge workload by going through the project's README. The workshop content is available in its entirety on GitHub at http://aka.ms/IntelligentEdgeHOL.

If you would rather follow the content the way it is intended to be delivered in a workshop environment, there is also a video presentation and walkthrough available at http://aka.ms/IntelligentEdgeHOLVideo.

The video content can be followed along on your own, used as a "train the trainer" video for running your own workshop, or used as-is to run a workshop with minimal effort. A link to the presentation deck used in this video can be found at http://aka.ms/IntelligentEdgeHOLDeck.

Conclusion

This article is intended to give interested developers the chance to walk through the workshop content presented in the WRK3031 session at Ignite 2019. If you have ideas to run this content at a future event or interest in running it by yourself, please feel free to reach out to me on Twitter @pjdecarlo for any assistance.

If you are able to successfully reproduce the project or have ideas to improve it, I would love to hear about it. Please let us know in the comments how the overall experience was. We look forward to hearing from you!

Happy Hacking!
