MuleSoft (Event-Based Process) With Enhanced Execution Engine

To get the benefits of asynchronous processing, MuleSoft has introduced thread management that does not depend on manual configuration.


This thread management adapts to the resources available on the platform.

MuleSoft follows and recommends asynchronous processing, so its runtime combines an event-based approach with an asynchronous one. This makes an application more responsive because it does not wait for long-running work, such as I/O operations, to finish.

Below are the major distinctions in the event-based (reactive programming) pattern:

- Reactive programming: focuses on computation through ephemeral data streams and tends to be event-driven.

- Messages have a single, clear destination, while events are published for others to observe.
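To make the message/event distinction concrete, here is a minimal Java sketch. `MessagesVsEvents`, `MessageChannel`, and `EventBus` are hypothetical names used only for illustration; they are not part of any MuleSoft API. A message is placed on one known destination and consumed once, while an event is handed to every current subscriber, and the publisher does not know who those subscribers are.

```java
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Hypothetical illustration, not a MuleSoft API.
final class MessagesVsEvents {

    // Point-to-point: the sender addresses one known destination,
    // and each message is consumed by exactly one receiver.
    static final class MessageChannel {
        private final Queue<String> destination = new ConcurrentLinkedQueue<>();

        void send(String message) { destination.add(message); }

        String receive() { return destination.poll(); } // null when nothing is waiting
    }

    // Publish/subscribe: the publisher does not know who, if anyone, observes
    // the event; every current subscriber receives it.
    static final class EventBus {
        private final List<Consumer<String>> observers = new CopyOnWriteArrayList<>();

        void subscribe(Consumer<String> observer) { observers.add(observer); }

        void publish(String event) { observers.forEach(o -> o.accept(event)); }
    }
}
```

Sending `"order-42"` on a `MessageChannel` lets exactly one `receive()` call get it, whereas publishing `"order-created"` on an `EventBus` with two subscribers delivers it to both.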

Definitions:

Reactive Systems: defined by the Reactive Manifesto as a set of architectural design principles for building modern systems that are well prepared to meet the increasing demands that applications face today.

Proactor Pattern: a fully asynchronous design pattern for handling events. Operations are started asynchronously, and a completion handler is dispatched when each one finishes. It is very much like multi-threaded programming, but without requiring you to think about thread management.
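Java's NIO.2 asynchronous channels are a well-known proactor-style API: you initiate an operation, the call returns immediately, and a `CompletionHandler` is dispatched when the operation finishes, on a thread from a default pool that the JDK manages. The sketch below reads a file that way. `ProactorRead` and `readAsync` are hypothetical names, the code assumes a small file that a single `read()` call fills, and it illustrates the pattern rather than how the Mule runtime is built.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousFileChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.CompletableFuture;

// Proactor-style read: the caller initiates the operation, and the completion
// handler runs later on a JDK-managed pool thread; the caller manages no threads.
final class ProactorRead {

    // Assumption: the file is small enough that one read() call fills the buffer.
    static CompletableFuture<String> readAsync(Path file) throws IOException {
        AsynchronousFileChannel channel =
                AsynchronousFileChannel.open(file, StandardOpenOption.READ);
        ByteBuffer buffer = ByteBuffer.allocate((int) Files.size(file));
        CompletableFuture<String> result = new CompletableFuture<>();

        // Initiate the operation; this call returns immediately.
        channel.read(buffer, 0L, buffer, new CompletionHandler<Integer, ByteBuffer>() {
            @Override
            public void completed(Integer bytesRead, ByteBuffer buf) {
                buf.flip();
                String text = StandardCharsets.UTF_8.decode(buf).toString();
                closeQuietly(channel);
                result.complete(text);
            }

            @Override
            public void failed(Throwable error, ByteBuffer buf) {
                closeQuietly(channel);
                result.completeExceptionally(error);
            }
        });
        return result;
    }

    private static void closeQuietly(AsynchronousFileChannel channel) {
        try {
            channel.close();
        } catch (IOException ignored) {
            // nothing useful to do if close fails in this sketch
        }
    }
}
```

The caller only blocks if and when it calls `join()` on the returned future; until then, progress is driven entirely by the completion callback.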

Pros and Cons (Proactor Pattern)

- The obvious benefit is asynchronous execution. As mentioned, it keeps the application responsive because it does not wait for long-running work, such as I/O, to finish. Also, with a centralized thread-pooling and dispatching mechanism, users of a Proactor implementation do not need to deal with thread management directly. The implementation can be further improved so that the thread pool grows and shrinks dynamically based on the available physical resources and the number of pending tasks. Tasks can also be queued by priority.

- As for the downsides, as with many asynchronous or multi-threaded paradigms, debugging can be quite a hassle. Although it is usually unnecessary, you may have to synchronize threads when multiple tasks use a shared resource, which increases the risk of deadlocks that starve your thread pool. For a small application, I feel that the Proactor pattern may add unnecessary complexity to the overall architecture.
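Priority-based queuing, mentioned among the pros, can be sketched with the JDK's `ThreadPoolExecutor` backed by a `PriorityBlockingQueue`. `PriorityPool`, `PrioritizedTask`, and `runInPriorityOrder` are hypothetical names. Two caveats shape the design: tasks must be passed to `execute()` rather than `submit()`, because `submit()` wraps them in a `FutureTask` that is not `Comparable`; and with an unbounded queue a `ThreadPoolExecutor` never grows past its core size, so this sketch shows priority ordering and idle-thread shrinking (`allowCoreThreadTimeOut`) but not dynamic growth.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of priority-based task queuing on a shared pool.
final class PriorityPool {

    // A task carrying a priority; higher values are dequeued first.
    static final class PrioritizedTask implements Runnable, Comparable<PrioritizedTask> {
        final int priority;
        private final Runnable body;

        PrioritizedTask(int priority, Runnable body) {
            this.priority = priority;
            this.body = body;
        }

        @Override public void run() { body.run(); }

        @Override public int compareTo(PrioritizedTask other) {
            return Integer.compare(other.priority, this.priority); // descending order
        }
    }

    // Queues one task per priority behind a blocked worker, then returns the execution order.
    static List<Integer> runInPriorityOrder(int... priorities) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 30, TimeUnit.SECONDS, new PriorityBlockingQueue<>());
        pool.allowCoreThreadTimeOut(true); // idle workers are released after keepAlive
        List<Integer> executed = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch gate = new CountDownLatch(1);

        // Occupy the only worker so the following tasks pile up in the queue.
        pool.execute(new PrioritizedTask(Integer.MAX_VALUE, () -> {
            try {
                gate.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }));
        for (int p : priorities) {
            pool.execute(new PrioritizedTask(p, () -> executed.add(p)));
        }
        gate.countDown();
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return executed;
    }
}
```

Submitting priorities 1, 5, and 3 while the worker is busy yields the execution order 5, 3, 1.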

Reactive Programming: its major benefits for event-driven programming are as follows.

It follows the flow of data rather than the flow of control:

- Information drives the logic forward, rather than a thread of execution driving the control flow.

- Work can be decomposed and executed in an asynchronous, non-blocking fashion.
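As a small illustration of data-driven, non-blocking flow, this sketch chains stages with the JDK's `CompletableFuture`: each stage runs when the previous stage's result arrives, and no thread sits waiting in between. `DataFlowPipeline` and `process` are hypothetical names, the `supplyAsync` call is only a stand-in for a real non-blocking I/O call, and a reactive library would offer far richer operators than this.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;

// Hypothetical pipeline: each stage is triggered by the arrival of data
// from the previous stage, not by a thread that blocks waiting for it.
final class DataFlowPipeline {

    static CompletableFuture<String> process(String orderId, ExecutorService io) {
        return CompletableFuture
                .supplyAsync(() -> "payload-for-" + orderId, io) // stand-in for an I/O fetch
                .thenApply(String::toUpperCase)                 // runs once the payload arrives
                .thenApply(payload -> payload + " [enriched]"); // reacts to the previous result
    }
}
```

The caller gets a future back immediately; `process("42", pool).join()` eventually yields `"PAYLOAD-FOR-42 [enriched]"`.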

The Benefits (and Limitations) of Reactive Programming

- Increased utilization of computing resources on multi-core and multi-CPU hardware, and increased performance by reducing serialization points.

- Developer productivity, through a straightforward and maintainable approach to asynchronous and non-blocking computation and I/O.

- Back-pressure must be included to avoid over-utilization, or rather unbounded consumption, of resources.
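Back-pressure in the Reactive Streams sense means the consumer signals how much it can accept. The JDK's `java.util.concurrent.Flow` interfaces mirror Reactive Streams, and `SubmissionPublisher` keeps a bounded buffer per subscriber. In this minimal sketch (`BackPressureDemo` and `OneAtATimeSubscriber` are hypothetical names), the subscriber requests one item at a time, and `submit()` blocks the producer whenever the subscriber's small buffer is full, instead of letting items pile up without limit.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Flow;
import java.util.concurrent.SubmissionPublisher;

// Sketch of Reactive Streams back-pressure using the JDK Flow API.
final class BackPressureDemo {

    // A subscriber that signals demand for exactly one item at a time.
    static final class OneAtATimeSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new ArrayList<>();
        final CompletableFuture<List<Integer>> done = new CompletableFuture<>();
        private Flow.Subscription subscription;

        @Override public void onSubscribe(Flow.Subscription s) {
            subscription = s;
            s.request(1);              // "I can take one item"
        }

        @Override public void onNext(Integer item) {
            received.add(item);        // simulated processing
            subscription.request(1);   // ask for the next item only when ready
        }

        @Override public void onError(Throwable t) { done.completeExceptionally(t); }

        @Override public void onComplete() { done.complete(received); }
    }

    static List<Integer> run(int count) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        // Tiny per-subscriber buffer, so the producer is throttled quickly.
        SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>(executor, 2);
        OneAtATimeSubscriber subscriber = new OneAtATimeSubscriber();
        publisher.subscribe(subscriber);
        for (int i = 1; i <= count; i++) {
            publisher.submit(i);       // blocks while the subscriber's buffer is full
        }
        publisher.close();             // onComplete follows the already-buffered items
        try {
            return subscriber.done.join();
        } finally {
            executor.shutdown();
        }
    }
}
```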


MuleSoft Threads — Uber vs. Dedicated

MuleSoft introduced a self-managing approach to thread availability and execution. In the current release, MuleSoft offers two major thread-pooling strategies:

UBER: a unified scheduling strategy that manages threads and their allocation to process events. The individual pools (cpu_light, cpu_intensive, and I/O) are all backed by the Uber thread pool. The thread types are the same as before; Uber only adds an algorithm that assigns threads for optimal processing performance.

DEDICATED: the legacy strategy, in which each pool is a separate, self-managed thread pool and threads are allocated according to each component's processing requirements.

NOTE: Both strategies aim for the best utilization of threads in the Mule runtime, and both work with the existing thread types (cpu_light, cpu_intensive, and I/O).

Recommended: Always run Mule using the UBER strategy.
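The difference between the two strategies can be modeled in a few lines of Java. This is a hypothetical model, not Mule's scheduler API: `PoolStrategies`, `WorkType`, `Unified`, and `Dedicated` are invented names, and the real runtime sizes its pools with its own formulas. Under a UBER-like strategy every work type resolves to one shared pool; under a DEDICATED-like strategy each work type has its own separately sized pool.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical model of the two pooling strategies; not Mule's API.
final class PoolStrategies {

    enum WorkType { CPU_LIGHT, IO, CPU_INTENSIVE }

    interface Strategy extends AutoCloseable {
        ExecutorService poolFor(WorkType type);

        @Override void close(); // no checked exception in this model
    }

    // UBER-like: every work type is backed by one shared pool.
    static final class Unified implements Strategy {
        private final ExecutorService shared = Executors.newCachedThreadPool();

        public ExecutorService poolFor(WorkType type) { return shared; }

        public void close() { shared.shutdown(); }
    }

    // DEDICATED-like: each work type gets its own, separately sized pool.
    static final class Dedicated implements Strategy {
        private final Map<WorkType, ExecutorService> pools = new EnumMap<>(WorkType.class);

        Dedicated(int cpuLight, int io, int cpuIntensive) {
            pools.put(WorkType.CPU_LIGHT, Executors.newFixedThreadPool(cpuLight));
            pools.put(WorkType.IO, Executors.newFixedThreadPool(io));
            pools.put(WorkType.CPU_INTENSIVE, Executors.newFixedThreadPool(cpuIntensive));
        }

        public ExecutorService poolFor(WorkType type) { return pools.get(type); }

        public void close() { pools.values().forEach(ExecutorService::shutdown); }
    }
}
```

The practical effect the model captures: with one shared pool, idle capacity reserved for one kind of work is available to the others, while separate pools keep each kind of work isolated but can leave threads idle in one pool while another is saturated.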

Back-Pressure Management

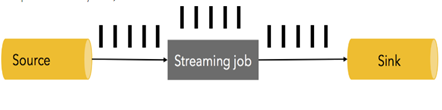

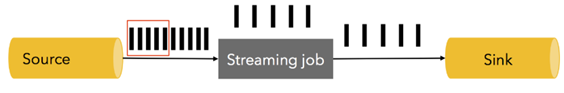

With the enhanced execution engine, MuleSoft applies back-pressure, which helps applications stay highly responsive and self-tune their request processing.

This back-pressure is an important feedback mechanism that allows systems to respond gracefully to load rather than collapse under it. The back-pressure may cascade up to the user, at which point responsiveness may degrade, but this mechanism ensures that the system stays resilient under load and provides information that may allow the system itself to apply other resources to help distribute the load (see Elasticity in the Reactive Manifesto).
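One common way to apply back-pressure at the thread-pool level is to bound both the number of threads and the queue, and reject new work once both are full, so the caller learns it must slow down instead of the runtime queuing unbounded work. This sketch uses `java.util.concurrent.ThreadPoolExecutor`; `BoundedPoolBackPressure` and `countRejected` are hypothetical names, and it is not how the Mule runtime implements back-pressure. In this sketch a `queueSize` of 0 is modeled with a `SynchronousQueue`, so a task is accepted only if a thread is free to take it.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: a bounded pool that pushes back instead of queuing forever.
final class BoundedPoolBackPressure {

    // Submits `requests` long-running tasks and returns how many were rejected.
    static int countRejected(int maxThreads, int queueSize, int requests)
            throws InterruptedException {
        BlockingQueue<Runnable> queue = queueSize == 0
                ? new SynchronousQueue<Runnable>()          // hand-off only, no buffering
                : new ArrayBlockingQueue<Runnable>(queueSize);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                maxThreads, maxThreads, 5, TimeUnit.SECONDS, queue,
                new ThreadPoolExecutor.AbortPolicy());      // reject when threads and queue are full
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        for (int i = 0; i < requests; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await();                    // simulate a slow request
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++;                                 // back-pressure signal to the caller
            }
        }
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return rejected;
    }
}
```

With two threads and a queue of one, five simultaneous slow requests lead to two running, one queued, and two rejected.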

Below are a few scenarios for the back-pressure mechanism:

Thread Pool at Mule Runtime

For a Mule runtime engine running on-premises, modify the global pool-sizing formulas by editing the MULE_HOME/conf/schedulers-pools.conf file.

Configure the org.mule.runtime.scheduler.SchedulerPoolStrategy parameter to switch between the two available strategies:

UBER: Unified scheduling strategy. Default.

DEDICATED: Separated pools strategy. Legacy.

Configuration at the Application Level:

```xml
<ee:scheduler-pools poolStrategy="UBER" gracefulShutdownTimeout="15000">
    <ee:uber corePoolSize="1" maxPoolSize="9" queueSize="5" keepAlive="5"/>
</ee:scheduler-pools>
```

Configuring the three separate pools (DEDICATED strategy) at the application level:

```xml
<ee:scheduler-pools poolStrategy="DEDICATED" gracefulShutdownTimeout="15000">
    <ee:cpu-light poolSize="2" queueSize="1024"/>
    <ee:io corePoolSize="1" maxPoolSize="2" queueSize="0" keepAlive="30000"/>
    <ee:cpu-intensive poolSize="4" queueSize="2048"/>
</ee:scheduler-pools>
```

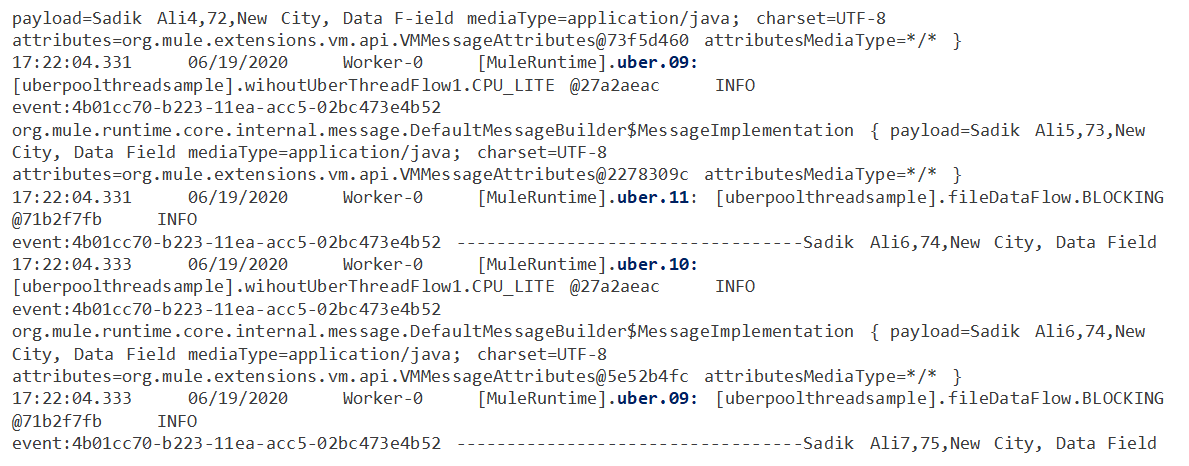

With the default configuration in the CloudHub runtime (UBER): as explained above, the Uber thread pool uses the available thread types (cpu_light, cpu_intensive, and I/O). Its main role is to apply an algorithm that assigns threads to requests so that the application processes them with optimal performance.

Application logs:

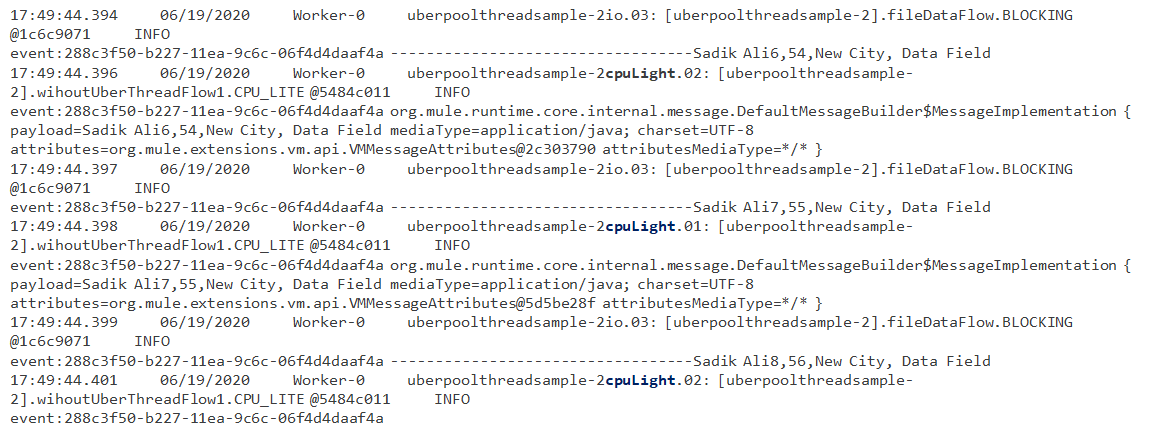

With an application-level (scheduler thread pool) configuration in the CloudHub runtime:

Application logs: in the logs below, requests run on the separate threads configured in the Mule application.

Opinions expressed by DZone contributors are their own.
