From Data to Insights: Kubernetes-Powered AI/ML in Action

Discover how Kubernetes can join forces with AI/ML to provide fine-grained control, security, and elasticity for AI/ML workloads.


This is an article from DZone's 2023 Kubernetes in the Enterprise Trend Report.

For more:

Read the Report

Kubernetes streamlines cloud operations by automating key tasks, specifically deploying, scaling, and managing containerized applications. With Kubernetes, you can group hosts that run containers into clusters and manage those clusters consistently across public, private, and hybrid cloud environments.
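To make this concrete, here is a minimal sketch of the kind of Deployment object Kubernetes manages for a containerized model server. It builds the manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The name `model-server`, the image `example.registry/model-server:1.0`, and the resource values are hypothetical placeholders, not taken from the article.

```python
import json


def model_deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Build a minimal apps/v1 Deployment manifest for a model-serving container.

    All names and resource values are hypothetical placeholders.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # Kubernetes keeps this many Pods running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Requests drive scheduling; limits cap usage at runtime.
                            "resources": {
                                "requests": {"cpu": "500m", "memory": "1Gi"},
                                "limits": {"cpu": "2", "memory": "4Gi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = model_deployment("model-server", "example.registry/model-server:1.0")
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and running `kubectl apply -f` on it would create the Deployment; Kubernetes then replaces any of the replicas that fail.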

AI/ML and Kubernetes work well together, simplifying the deployment and management of AI/ML applications. Kubernetes scales workloads automatically based on demand, allocates resources efficiently, and provides high availability and reliability through replication and failover. As a result, AI/ML workloads can share cluster resources efficiently with fine-grained control. Kubernetes' elasticity adapts to varying workloads, and it integrates well with CI/CD pipelines for automated deployments. Monitoring and logging tools provide insights into AI/ML performance, while right-sized resource allocation helps keep infrastructure costs down. Together, they make the AI/ML development process more agile and cost-effective.

Let's see how Kubernetes can join forces with AI/ML.

The Intersection of AI/ML and Kubernetes

The partnership between AI/ML and Kubernetes empowers organizations to deploy, manage, and scale AI/ML workloads effectively. Running AI/ML workloads presents several challenges, and Kubernetes addresses them through:

- Resource management – Kubernetes allocates CPU and memory to AI/ML Pods through resource requests and limits, preventing contention and ensuring fair distribution.

- Scalability – Kubernetes adapts to changing AI/ML demands with auto-scaling, dynamically adding or removing Pods and, with cluster autoscaling, nodes.

- Portability – Packaged as container images, AI/ML models deploy consistently across environments, with Kubernetes orchestrating them the same way everywhere.

- Isolation – Kubernetes isolates AI/ML workloads within namespaces and enforces resource quotas to avoid interference.

- Data management – Kubernetes simplifies data storage and sharing for AI/ML with persistent volumes.

- High availability – Kubernetes keeps AI/ML services available through replication, failover, and load balancing.

- Security – Kubernetes enhances security with features like RBAC and network policies.

- Monitoring and logging – Kubernetes integrates with monitoring tools like Prometheus and Grafana for real-time AI/ML performance insights.

- Deployment automation – AI/ML models often require frequent updates. Kubernetes integrates with CI/CD pipelines, automating deployment and ensuring that the latest models are pushed into production seamlessly.
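The auto-scaling item above usually refers to the Horizontal Pod Autoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and it skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that rule plus min/max clamping; the real controller also applies stabilization windows and special handling for missing metrics and Pods that are not ready, all omitted here. The example bounds are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,  # example bound; the HPA requires you to set one
    tolerance: float = 0.1,
) -> int:
    """Simplified Horizontal Pod Autoscaler scaling rule.

    Omits stabilization windows, readiness handling, and missing-metric logic.
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 inference Pods at 90% average CPU against a 60% target: scale out.
    print(desired_replicas(3, 90, 60))  # prints 5
```

Because the rule is proportional, a sudden spike in inference traffic translates directly into more Pods, while the tolerance band avoids churn from small fluctuations.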

Let's look into the real-world use cases to better understand how companies and products can benefit from Kubernetes and AI/ML.

| Use Case | Examples |
|---|---|
| Recommendation systems | Personalized content recommendations in streaming services, e-commerce, social media, and news apps |
| Image and video analysis | Automated image and video tagging, object detection, facial recognition, content moderation, and video summarization |
| Natural language processing (NLP) | Sentiment analysis, chatbots, language translation, text generation, voice recognition, and content summarization |
| Anomaly detection | Identifying unusual patterns in network traffic for cybersecurity, fraud detection, and quality control in manufacturing |
| Healthcare diagnostics | Disease detection through medical image analysis, patient data analysis, drug discovery, and personalized treatment plans |
| Autonomous vehicles | Self-driving cars use AI/ML for perception, decision-making, route optimization, and collision avoidance |
| Financial fraud detection | Detecting fraudulent transactions in real-time to prevent financial losses and protect customer data |
| Energy management | Optimizing energy consumption in buildings and industrial facilities for cost savings and environmental sustainability |
| Customer support | AI-powered chatbots, virtual assistants, and sentiment analysis for automated customer support, inquiries, and feedback analysis |
| Supply chain optimization | Inventory management, demand forecasting, and route optimization for efficient logistics and supply chain operations |
| Agriculture and farming | Crop monitoring, precision agriculture, pest detection, and yield prediction for sustainable farming practices |
| Language understanding | Advanced language models for understanding and generating human-like text, enabling content generation and context-aware applications |
| Medical research | Drug discovery, genomics analysis, disease modeling, and clinical trial optimization to accelerate medical advancements |

Table 1: Real-world use cases

Example: Implementing Kubernetes and AI/ML

As an example, let's introduce a real-world scenario: a medical research system whose main purpose is to investigate the causes of Parkinson's disease. The system analyzes imaging data (tomography scans and other images) and personal data from patients who have consented to its use. The following is a simplified, high-level example:

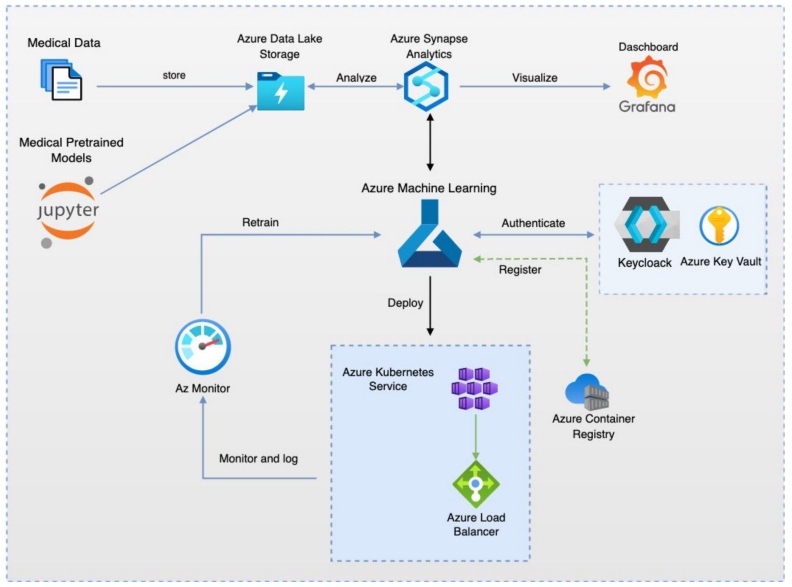

Figure 1: Parkinson's disease medical research architecture

The architecture contains the following steps and components:

- Data collection – gathering various data types, including structured, unstructured, and semi-structured data like logs, files, and media, in Azure Data Lake Storage Gen2

- Data processing and analysis – utilizing Azure Synapse Analytics, powered by Apache Spark, to clean, transform, and analyze the collected datasets

- Machine learning model creation and training – employing Azure Machine Learning, integrated with Jupyter notebooks, for creating and training ML models

- Security and authentication – securing data and ML workloads, with Keycloak handling authentication and Azure Key Vault storing secrets and keys

- Container management – storing and managing container images in Azure Container Registry

- Deployment and management – using Azure Kubernetes Service (AKS) to deploy and run the ML models, with networking handled by Azure Virtual Network (VNet) and Azure Load Balancer

- Model performance evaluation – assessing model performance using the logs, metrics, and monitoring provided by Azure Monitor

- Model retraining – retraining models as required with Azure Machine Learning
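On AKS, a Kubernetes Service of type `LoadBalancer` is the usual way to put the Azure Load Balancer in front of model-serving Pods. A minimal sketch, assuming the model server listens on port 8080 and its Pods carry the hypothetical label `app: parkinsons-model`; neither detail comes from the architecture above.

```python
import json


def model_service(app_label: str, port: int = 80, target_port: int = 8080) -> dict:
    """Build a v1 Service that exposes model Pods through a cloud load balancer.

    The label and ports are hypothetical placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{app_label}-svc"},
        "spec": {
            # On AKS, type LoadBalancer configures a frontend on the Azure Load Balancer.
            "type": "LoadBalancer",
            # Traffic is routed to Pods whose labels match this selector.
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(model_service("parkinsons-model"), indent=2))
```

Clients call the load balancer on port 80, and Kubernetes forwards each request to one of the healthy model Pods on port 8080.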

Now, let's examine how data analysis and security work in Kubernetes-based AI/ML systems.

Data Analysis and Security in Kubernetes

In Kubernetes, data analysis involves processing and extracting insights from large datasets using containerized applications. Kubernetes simplifies data orchestration, ensuring data is available where and when needed. This is essential for machine learning, batch processing, and real-time analytics tasks.
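In practice, making data available where and when it is needed usually starts with a PersistentVolumeClaim that Pods mount. The sketch below requests shared storage for a training dataset; `ReadWriteMany` lets several Pods mount the volume read-write at once, but only if the underlying storage class supports that mode. The claim name, size, and storage class `shared-files` are hypothetical.

```python
import json


def dataset_claim(name: str, size: str = "100Gi", storage_class: str = "shared-files") -> dict:
    """Build a v1 PersistentVolumeClaim for a dataset shared across Pods.

    `storage_class` is a hypothetical name; it must support ReadWriteMany.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Several Pods (e.g. parallel training workers) may mount it read-write.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(dataset_claim("training-data"), indent=2))
```

Pods then reference the claim by name in their `volumes` section, so the same dataset follows the workload wherever the scheduler places it.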

ML analyses on Kubernetes require a strong security foundation. Safeguarding data in AI/ML and Kubernetes environments involves encrypting data at rest and in transit, enforcing access control, running regular security audits, and monitoring for anomalies. Kubernetes also offers features like role-based access control (RBAC) and network policies to restrict unauthorized access.
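As an illustration of the network-policy feature, here is a minimal NetworkPolicy that admits traffic to model Pods only from Pods labeled as an API gateway in the same namespace. The labels `app: parkinsons-model` and `role: api-gateway` and port 8080 are hypothetical, and policies are only enforced when the cluster's network plugin supports NetworkPolicy.

```python
import json


def allow_gateway_only(namespace: str, port: int = 8080) -> dict:
    """Build a networking.k8s.io/v1 NetworkPolicy for model-serving Pods.

    Labels and port are hypothetical placeholders.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "model-allow-gateway", "namespace": namespace},
        "spec": {
            # The policy applies to these Pods...
            "podSelector": {"matchLabels": {"app": "parkinsons-model"}},
            # ...and because Ingress is listed, all other inbound traffic is denied.
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Without a namespaceSelector, this matches Pods in the same namespace.
                    "from": [{"podSelector": {"matchLabels": {"role": "api-gateway"}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(allow_gateway_only("research"), indent=2))
```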

To summarize, here is an AI/ML security checklist for Kubernetes:

- Access control
  - Set RBAC for user permissions
  - Create dedicated service accounts for ML workloads
  - Apply network policies to control communication

- Image security
  - Only allow trusted container images
  - Keep container images regularly updated and patched

- Secrets management
  - Securely store and manage sensitive data (Secrets)
  - Implement regular Secret rotation

- Network security
  - Segment your network for isolation
  - Enforce network policies for Ingress and egress traffic

- Vulnerability scanning
  - Regularly scan container images for vulnerabilities
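The first two access-control items can be combined: a dedicated service account for ML Pods, bound to a Role that grants only what the workload needs. This sketch assumes, hypothetically, that the workload only needs to read ConfigMaps in its own namespace; the account name `ml-trainer` and namespace `research` are placeholders.

```python
import json


def ml_rbac(namespace: str, account: str = "ml-trainer") -> list:
    """Build a ServiceAccount, a least-privilege Role, and the RoleBinding linking them.

    Names and permissions are hypothetical placeholders.
    """
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": account, "namespace": namespace},
    }
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": f"{account}-read-config", "namespace": namespace},
        # Read-only access to ConfigMaps in this namespace, nothing else.
        "rules": [
            {"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get", "list", "watch"]}
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{account}-read-config", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": account, "namespace": namespace}],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role["metadata"]["name"],
        },
    }
    return [service_account, role, binding]


if __name__ == "__main__":
    # kubectl accepts a v1 List object wrapping several resources.
    items = ml_rbac("research")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

ML Pods would then reference the account through `serviceAccountName: ml-trainer` in their Pod spec, so a compromised training container cannot read anything beyond those ConfigMaps through the Kubernetes API.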

Last but not least, let's look into distributed ML in Kubernetes.

Distributed Machine Learning in Kubernetes

Security is essential, but choosing the right distributed ML framework solves many of the computational problems of training at scale. Distributed ML frameworks and Kubernetes provide scalability, security, resource management, and orchestration capabilities essential for efficiently handling the computational demands of training complex ML models on large datasets.
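On the orchestration side, one Kubernetes-native building block is an Indexed Job: it starts a fixed number of worker Pods and gives each a stable index through the `JOB_COMPLETION_INDEX` environment variable, which a training script can use as its worker rank or shard number. The sketch below assumes a hypothetical image `example.registry/trainer:1.0`; real distributed frameworks usually need extra coordination (for example, workers discovering each other's addresses), which is omitted here.

```python
import json


def training_job(name: str, image: str, workers: int = 4) -> dict:
    """Build a batch/v1 Indexed Job that runs `workers` training Pods in parallel.

    The image is a hypothetical placeholder; each Pod reads its index from
    the JOB_COMPLETION_INDEX environment variable set by Kubernetes.
    """
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "completionMode": "Indexed",  # Pods get indexes 0..workers-1
            "completions": workers,
            "parallelism": workers,  # run all workers at the same time
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Jobs require Never or OnFailure
                    "containers": [{"name": "trainer", "image": image}],
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(training_job("train", "example.registry/trainer:1.0"), indent=2))
```

Inside each Pod, the training script could read `int(os.environ["JOB_COMPLETION_INDEX"])` to decide which slice of the dataset to process.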

Here are a few popular open-source distributed ML frameworks and libraries compatible with Kubernetes:

- TensorFlow – An open-source ML framework that provides `tf.distribute.Strategy` for distributed training. Kubernetes can manage TensorFlow tasks across a cluster of containers, enabling distributed training on large datasets.

- PyTorch – Another widely used ML framework that can run in a distributed manner within Kubernetes clusters. It supports distributed training natively through `torch.distributed` (for example, `DistributedDataParallel`) and through tools like PyTorch Lightning and Horovod.

- Horovod – A distributed training framework, compatible with TensorFlow, PyTorch, and MXNet, that seamlessly integrates with Kubernetes. It allows for the parallelization of training tasks across multiple containers.
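These frameworks share one core idea for synchronous data-parallel training: each worker computes gradients on its own shard of the data, the gradients are averaged across workers (an allreduce), and every worker applies the same update, so all replicas stay identical. The pure-Python sketch below simulates that loop in a single process for a one-parameter linear model; it is a conceptual illustration, not how TensorFlow, PyTorch, or Horovod implement it.

```python
def shard_gradient(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of mean squared error for the model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def allreduce_mean(values: list[float]) -> float:
    """Stand-in for an allreduce: every worker ends up with the average."""
    return sum(values) / len(values)


def data_parallel_sgd(xs: list[float], ys: list[float], workers: int = 4,
                      lr: float = 0.01, steps: int = 200) -> float:
    """Simulate synchronous data-parallel SGD across `workers` shards."""
    # Split the dataset round-robin into one shard per worker.
    shards = [(xs[i::workers], ys[i::workers]) for i in range(workers)]
    w = 0.0  # every replica starts from the same weights
    for _ in range(steps):
        grads = [shard_gradient(w, sx, sy) for sx, sy in shards]  # local work
        w -= lr * allreduce_mean(grads)  # identical update on every replica
    return w


if __name__ == "__main__":
    xs = [float(i) for i in range(1, 9)]
    ys = [3.0 * x for x in xs]  # data generated with true weight 3
    print(round(data_parallel_sgd(xs, ys), 4))
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, which is why adding workers speeds up each step without changing what the model learns.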

These are just a few of the many frameworks available. Finally, let's summarize how we can benefit from using AI/ML and Kubernetes in the future.

Conclusion

In this article, we reviewed real-world use cases spanning various domains, including healthcare, recommendation systems, and medical research. We also walked through a practical example that illustrates the application of AI/ML and Kubernetes in a medical research use case.

Kubernetes and AI/ML complement each other because Kubernetes provides a robust and flexible platform for deploying, managing, and scaling AI/ML workloads. Kubernetes enables efficient resource utilization, automatic scaling, and fault tolerance, which are critical for handling the resource-intensive and dynamic nature of AI/ML applications. It also promotes containerization, simplifying the packaging and deployment of AI/ML models and ensuring consistent environments across all stages of the development pipeline.

Overall, Kubernetes enhances the agility, scalability, and reliability of AI/ML deployments, making it a fundamental tool in modern software infrastructure.

