Container Attached Storage (CAS) vs. Software-Defined Storage - Which One to Choose?


Hardware abstraction involves creating a programming layer that lets the computer operating system interact with hardware devices at a general rather than a detailed level. This layer is a logical code implementation that makes the hardware available to any software program. For storage devices, abstraction provides a uniform interface for users accessing shared storage and conceals the hardware’s implementation from the operating system. This lets software running on user machines get high performance from the storage devices without hardware-specific code. It also allows for device-independent programs, since device drivers beneath the abstraction layer handle access to each storage device.

Kubernetes is, by nature, infrastructure-agnostic: it relies on plugins and volume abstractions to decouple storage hardware from applications and services. Containers, however, are ephemeral and lose their data when they terminate. Kubernetes persists data created and processed by containerized applications on physical storage devices using Volumes and Persistent Volumes. These abstractions connect to storage hardware through various Hardware Abstraction Layer (HAL) implementations. Two commonly used HAL storage implementations for Kubernetes clusters are Container Attached Storage (CAS) and Software-Defined Storage (SDS).
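To make the Persistent Volume abstraction concrete, here is a minimal sketch that builds a PersistentVolumeClaim manifest as a Python dictionary and prints it as JSON. The claim name, storage class name and size are hypothetical placeholders; the storage class would be whatever your CAS or SDS backend exposes in your cluster.

```python
import json


def build_pvc(name: str, storage_class: str, size: str) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            # The storage class selects the backend that provisions the volume.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# "demo-data" and "example-storage-class" are hypothetical names.
pvc = build_pvc("demo-data", "example-storage-class", "5Gi")
print(json.dumps(pvc, indent=2))
```

The application only names a storage class and a size; which hardware ends up holding the data is decided by the storage layer behind that class.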

This blog delves into the fundamental differences between CAS and SDS, the benefits of each, and the most appropriate use-cases for these HAL storage implementations.

Container Attached Storage vs. Software-Defined Storage

Kubernetes uses storage abstraction to provide portable, highly available and distributed storage. It supports various CAS and SDS storage solutions that connect through the Container Storage Interface (CSI). Let us take a closer look at how the two abstraction models work and the purpose each serves for storage in a Kubernetes cluster.

Container Attached Storage

Container Attached Storage (CAS) introduces a novel approach to persisting data for stateful workloads in Kubernetes clusters. With CAS, storage controllers are managed and run in containers as part of the Kubernetes cluster. This makes storage portable, since these controllers can run on any Kubernetes platform, whether on personal machines, on-premises data centres or public cloud offerings. Because CAS follows a microservice architecture, the storage software stays close to the application that binds to the physical storage devices, which reduces I/O times.

Container Attached Storage Architecture

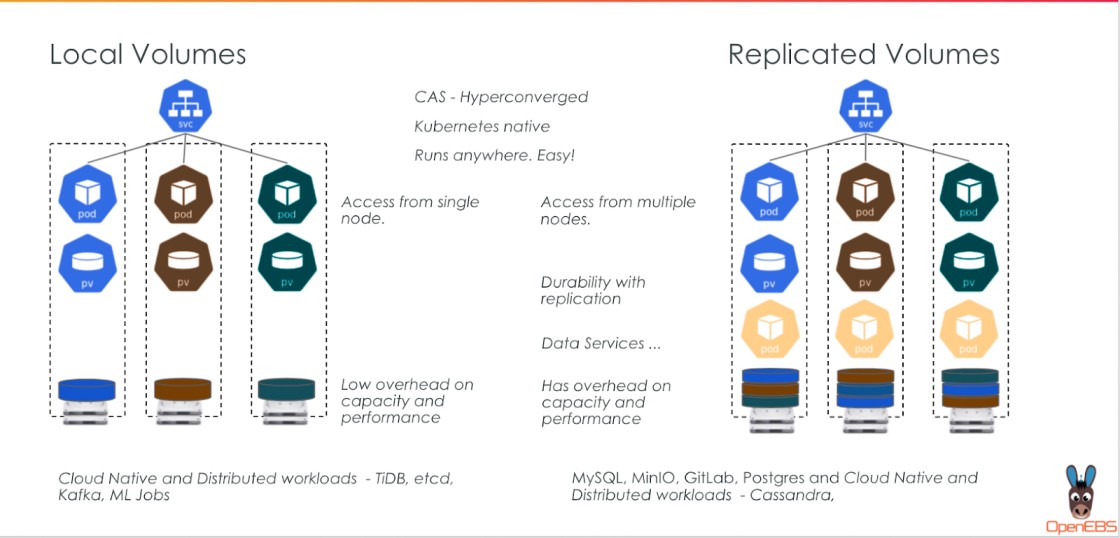

CAS leverages the Kubernetes environment to enable the persistence of cluster data. The storage solution runs storage targets in containers. These targets are microservices that can be replicated for independent scaling and management. For enhanced autonomy and agility, these microservice-based storage targets can then be orchestrated using a platform like Kubernetes.

A CAS cluster uses the control plane layer for storage management, while the data plane layer runs the storage targets/workloads. Storage controllers in the control plane provision volumes, spin up storage target replicas and perform other management-related tasks. Data plane components execute storage policies and instructions from control plane elements. These instructions typically include file paths and storage and access methods. The data plane also contains the storage engine, which implements the actual input/output (I/O) path for file storage.
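The split between the two planes can be sketched with a toy model. This is an illustration only, not how any particular CAS product is implemented: the class names are hypothetical, replicas live in memory, and the assumption that every write goes to all replicas synchronously is ours rather than the article's.

```python
from dataclasses import dataclass, field


@dataclass
class StorageTarget:
    """Data plane: one replica that stores blocks for a volume."""
    node: str
    blocks: dict = field(default_factory=dict)

    def write(self, offset: int, data: bytes) -> None:
        self.blocks[offset] = data


@dataclass
class Volume:
    name: str
    replicas: list

    def write(self, offset: int, data: bytes) -> None:
        # Assumption: every replica receives the write before it completes.
        for replica in self.replicas:
            replica.write(offset, data)

    def read(self, offset: int) -> bytes:
        # Any replica holds the data; this sketch reads from the first one.
        return self.replicas[0].blocks[offset]


class StorageController:
    """Control plane: provisions volumes and places their replicas."""

    def __init__(self, nodes):
        self.nodes = list(nodes)

    def provision(self, name: str, replica_count: int) -> Volume:
        if replica_count > len(self.nodes):
            raise ValueError("not enough nodes for the requested replicas")
        targets = [StorageTarget(node) for node in self.nodes[:replica_count]]
        return Volume(name, targets)


controller = StorageController(["node-a", "node-b", "node-c"])
volume = controller.provision("pvc-demo", replica_count=2)
volume.write(0, b"hello")
```

The controller never touches the data itself. It only decides where replicas live, while reads and writes stay on the data path between the volume and its targets.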

Benefits of Container Attached Storage

Container Attached Storage enables agile storage for stateful containerized applications. This is because it follows a microservice-based pattern which allows the storage controller and target replicas to be upgraded seamlessly. Containerization of storage software means that administrative teams can dynamically allocate and update storage policies for each volume. With CAS, low-level storage resources are represented using Kubernetes Custom Resource Definitions. This allows for seamless integration between storage and cloud-native tooling, which enables easier management and monitoring. CAS also ensures storage is vendor-agnostic since stateful workloads can be moved from one Kubernetes deployment environment to another without disrupting services.

Container Attached Storage Use-Cases

CAS uses storage target replication to ensure high availability, which avoids the large blast radius of traditional distributed storage architectures. This makes CAS a strong storage choice for cloud-native applications. CAS is also appropriate for organizations looking to orchestrate their storage across multiple clouds, because it can be deployed on any Kubernetes platform. Container Attached Storage enables simple storage backup and replication, making it well suited to applications that require scale-out storage. It also suits development teams that want to improve read-write times for their Continuous Integration and Continuous Delivery (CI/CD) pipelines.

Popular CAS solutions providers for Kubernetes include:

- OpenEBS

- StorageOS

- Portworx

- Longhorn

Software-Defined Storage

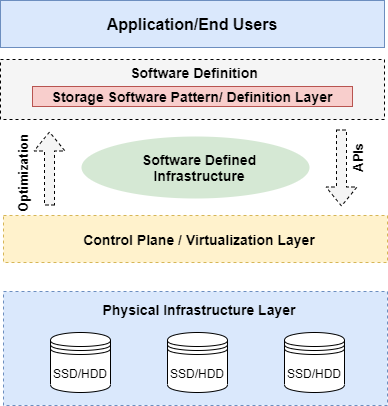

Software-Defined Storage architecture relies on a software layer to decouple running applications from storage hardware. This simplifies the management of storage devices by abstracting them into virtual partitions. Management is then performed through a Data Management Interface (DMI) that hosts command and control functions.

Features of Software-Defined Storage

With Software-Defined Storage, the data/service management interface is hosted on a master server that controls storage layers consisting of shared storage pools. This makes provisioning and allocation of storage easy and flexible. The following are some of the key features of software-defined storage:

Device Abstraction - Data I/O services should be delivered uniformly to users regardless of the underlying hardware. Through SDS, storage abstraction constructs, such as repositories, file shares, volumes, and Logical Unit Numbers (LUNs) are used to create a clear divide between physical hardware and logical aspects of data storage.

Automation - The SDS solution implements workflows and algorithms that reduce the amount of manual work performed by administrators. To enable efficient automation, SDS storage systems adapt to varying performance and data needs with little human intervention.

Disaggregated, Pooled Storage - Physical storage devices are part of a shared pool from which the software can carve out storage for services and applications. This allows SDS to allocate available storage only when it is required, resulting in efficient use of resources.
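As a rough illustration of pooling, the sketch below treats several devices as one pool and carves a volume out of it, spilling across devices when no single device is large enough. The `StoragePool` class, the device names and the largest-device-first placement are assumptions made for this example, not the behaviour of a specific SDS product.

```python
class StoragePool:
    """Pools the free capacity of several devices and carves volumes out of it."""

    def __init__(self, devices: dict):
        # devices maps a device name to its free capacity in GiB.
        self.free = dict(devices)

    def total_free(self) -> int:
        return sum(self.free.values())

    def carve(self, size_gib: int) -> dict:
        """Allocate size_gib across devices and return {device: GiB taken}."""
        if size_gib > self.total_free():
            raise ValueError("pool has insufficient free capacity")
        allocation = {}
        remaining = size_gib
        # Fill from the device with the most free space first.
        for device in sorted(self.free, key=self.free.get, reverse=True):
            if remaining == 0:
                break
            taken = min(self.free[device], remaining)
            if taken:
                allocation[device] = taken
                self.free[device] -= taken
                remaining -= taken
        return allocation


pool = StoragePool({"disk-1": 100, "disk-2": 50, "disk-3": 25})
carved = pool.carve(120)
print(carved)             # {'disk-1': 100, 'disk-2': 20}
print(pool.total_free())  # 55
```

The caller asks for 120 GiB and never learns that the request was satisfied by two physical devices, which is the point of the abstraction.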

Advantages of Software-Defined Storage

Some benefits of using SDS include:

Enhanced Scalability - Decoupling hardware resources allows administrators to allocate physical storage dynamically depending on workload. Pooled, disaggregated storage enabled by SDS allows for both vertical and horizontal scaling of physical volumes, supporting larger capacity and higher performance.

Improved I/O Performance - SDS enables input-output parallelism to process host requests dynamically across multiple CPUs. Some SDS products also support large caches, with figures of up to 8TB cited, along with automatic data tiering. Together, these allow faster input-output operations and quicker data processing.

Interoperability - SDS uses the Data Management Interface as a translator that allows storage solutions running on different platforms to interact with each other. It also groups physically isolated storage hardware into logical pools, allowing organizations to host shared storage from different vendors.

Reduced Costs - SDS solutions typically run on existing commodity hardware while optimizing the consumption of storage. SDS also enables automation that reduces the number of administrators required to manage storage infrastructure. These factors lower both the upfront and the operational expenses of managing workloads.

When to Use Software-Defined Storage

SDS offers several benefits for teams looking to enhance storage flexibility at reduced costs. Some common use-cases for SDS include:

- Data centre infrastructure modernization

- Creating robust systems for mobile and challenging environments

- Creating hybrid cloud implementations that are managed on a single platform

- Leveraging existing infrastructure for remote and branch offices

Comparing Container Attached Storage with Software-Defined Storage

Similarities

Both CAS and SDS enable isolation between physical storage hardware and running applications. While doing so, both technologies abstract data management from data storage resources. The two HAL implementations share several features in common, including:

Vendor-agnostic

Both CAS and SDS architectures allow multiple workloads to run on a single host. This gives administrators a separation between the storage devices and the software that accesses them. As a result, organizations can choose either CAS or SDS to implement a storage solution that runs on any platform, regardless of who develops or manages the tooling.

Allow dynamic storage allocation

SDS and CAS allow storage resources to be attached and detached dynamically, which enables automatic provisioning of data backups and replicas for high availability applications. Both also allow storage infrastructure to be deployed automatically, which supports diverse and heterogeneous storage technology.

Allow efficient infrastructure scaling

CAS and SDS allow horizontal and vertical infrastructure scaling to automate data workflows. The two HAL approaches enable the creation of a composable disaggregated infrastructure that enhances the creation of versatile, distributed environments.

Differences

While SDS enables distributed storage management and reduced hardware dependencies, CAS allows for disaggregated storage that can be run using any container orchestration platform. This introduces various differences between CAS and SDS, including:

- Software-Defined Storage relies on traditional shared software with limitations on blast radius, while Container Attached Storage (CAS) allows the replication of storage software, allowing for independent management and scaling.

- CAS allows for scaling up and out in both storage capacity and volume performance, while SDS enables the scaling up of storage nodes to improve storage capacity.

- SDS enables a Hyper-Converged Infrastructure (HCI) while CAS enables Highly Disaggregated Storage Infrastructure.
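The blast radius point in the first difference can be illustrated with a small model. It compares one shared controller serving every volume against one controller per volume, and counts how many volumes a single controller failure touches. The volume and controller names are hypothetical, and real systems on both sides add redundancy that this sketch ignores.

```python
def affected_volumes(volume_to_controller: dict, failed_controller: str) -> list:
    """Return the volumes served by the controller that failed."""
    return sorted(
        volume
        for volume, controller in volume_to_controller.items()
        if controller == failed_controller
    )


volumes = ["vol-1", "vol-2", "vol-3", "vol-4"]

# Shared model: one storage controller serves every volume.
shared = {volume: "shared-controller" for volume in volumes}

# Per-volume model: each volume has its own containerized controller.
per_volume = {volume: f"controller-{volume}" for volume in volumes}

print(affected_volumes(shared, "shared-controller"))     # all four volumes
print(affected_volumes(per_volume, "controller-vol-2"))  # ['vol-2']
```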

Container Attached Storage and Software-Defined Storage both let cluster administrators use hardware abstraction to persist data for stateful applications in Kubernetes. CAS provides flexible management of storage controllers through microservices-based storage orchestration on Kubernetes. Software-Defined Storage, on the other hand, abstracts storage hardware using a programmable data control plane.

CAS has all the features that a typical SDS provides, albeit tailored for container workloads and built with the latest software and hardware primitives.

OpenEBS, a popular CAS-based storage solution, has helped several enterprises run stateful workloads. Originally developed by MayaData, OpenEBS is now a CNCF project with a vibrant community of organizations and individuals. This popularity was also evident in CNCF’s 2020 Survey Report, which highlighted MayaData (OpenEBS) in the top-5 list of most popular storage solutions. To learn more about how OpenEBS can help your organization run stateful workloads, contact us here.

This article has already been published on https://blog.mayadata.io/container-attached-storage-cas-vs.-software-defined-storage-which-one-to-choose and has been authorized by MayaData for a republish.

