Detecting Memory Leaks From a JVM Heap Dump



One of the main reasons for Java's popularity is garbage collection. At runtime, any object that is not reachable from a GC root will be automatically destroyed and its memory recycled (sooner or later). The most common GC roots are local variables in methods and static data fields; an object is reachable when a GC root points to it directly or through a chain of other objects. So no object in Java can really "leak," i.e. become inaccessible to the running program yet still use up memory, at least not for a long time. So why is "memory leak" still one of the most common memory problems in Java apps? If you are wondering about it, or have ever struggled to understand why your app's used memory keeps growing, read on.
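To make "reachable from a GC root" concrete, here is a minimal sketch. The `ReachabilityDemo` class and its `HISTORY` field are illustrative names, not part of any library. It uses `java.lang.ref.WeakReference`, which does not keep its referent alive, to observe whether an object survives a collection. `System.gc()` is only a hint, so only the first printed value is guaranteed.

```java
import java.lang.ref.WeakReference;
import java.util.ArrayList;
import java.util.List;

class ReachabilityDemo {
    // A static field is a GC root: everything reachable from it stays in memory.
    static final List<Object> HISTORY = new ArrayList<>();

    /** Requests a GC and reports whether the weakly referenced object is still alive. */
    static boolean aliveAfterGc(WeakReference<?> ref) {
        System.gc(); // only a hint: the JVM is free to ignore it
        return ref.get() != null;
    }

    public static void main(String[] args) {
        Object kept = new byte[1_000_000];
        HISTORY.add(kept); // reachable via: GC root (static field) -> list -> element
        WeakReference<Object> keptRef = new WeakReference<>(kept);
        kept = null; // the local variable no longer points to it, but HISTORY still does

        // Nothing strongly references this array once the constructor call returns.
        WeakReference<Object> droppedRef = new WeakReference<>(new byte[1_000_000]);

        System.out.println("kept alive:    " + aliveAfterGc(keptRef));    // always true
        System.out.println("dropped alive: " + aliveAfterGc(droppedRef)); // usually false
    }
}
```

The array added to `HISTORY` can never be reclaimed while the list refers to it. A collection that only ever grows keeps all of its elements alive in exactly this way.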

In short, memory leaks in Java are not real leaks: they are simply data structures that grow without bound due to errors or suboptimal code. One can argue that they are a side effect of the power of the language and its ecosystem. In the old, pre-Java days, writing a big program in C++ was a slow, sometimes painful process that required serious effort from a well-coordinated group of developers. Today the situation is different. Developers have the rich set of JDK library classes, an even richer choice of third-party open-source code, and powerful IDEs. Above all, they have the powerful yet "forgiving" language that Java has become. A loose group or even a single developer can quickly put together a really big application that works. The catch? They will likely have a limited understanding of how exactly this app, or at least some of its parts, works.

Fortunately, unit tests address many of these concerns: well-tested libraries and app code go a long way toward a well-behaved application. There is one caveat, however: unit tests are rarely written to model real-life data volumes and/or real-life run durations. This is the main reason why suboptimal code that causes a memory leak can sneak in.

One common source of memory leaks is in-memory caches used to avoid slow operations, such as repeatedly retrieving the same objects from a database. Similarly, some data generated at runtime, such as operation history, may be periodically written to disk but also retained in memory, again to speed up access to it. Trading some extra memory for fewer slow reads from external storage is generally a smart choice. However, you may run into problems when three conditions hold: your cache has no size limit or other purge mechanism, the amount of external data is large relative to the JVM heap, and the app runs long enough. First, GC pauses will become more frequent and longer. Then, depending on some secondary factors, one of two scenarios unfolds. In the first, the app soon crashes with an OutOfMemoryError. The second usually occurs when each GC invocation manages to reclaim a little memory. In that case, the app does not crash but spends almost all its time in GC, giving the impression that it hangs. This scenario can be hard to diagnose if the used heap size is not monitored. But even if the first scenario unfolds, how do you know which data structure(s) are responsible?
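One common way to add the missing size limit is a least-recently-used (LRU) cache. The minimal sketch below is one option among many, and the class name and capacity are illustrative assumptions. It relies on `java.util.LinkedHashMap`'s access-order mode and its `removeEldestEntry` hook, which `put` and `putAll` call after each insertion.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** A size-bounded LRU cache (sketch). Not thread-safe. */
class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    BoundedCache(int maxEntries) {
        // accessOrder = true: iteration order runs from least to most recently accessed
        super(16, 0.75f, true);
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Invoked after each insertion; returning true evicts the least recently used entry
        return size() > maxEntries;
    }
}
```

For example, with a capacity of 2, the sequence `put(1, "a")`, `put(2, "b")`, `get(1)`, `put(3, "c")` evicts key 2, because `get(1)` made key 1 the most recently used. If several threads share the cache, wrap it with `Collections.synchronizedMap` or use a dedicated caching library.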

Fortunately, since this problem is not new, various tools for diagnosing memory leaks have been developed over time. They fall into two broad categories: tools that collect information continuously at runtime, and tools that analyze a single JVM memory snapshot, called a heap dump.

The tools that collect information continuously work by instrumenting (modifying) the app code and/or activating internal data collection mechanisms in the JVM. Examples of such tools are VisualVM and Mission Control, both of which were bundled with the Oracle JDK 8 and are separate downloads for newer JDKs. In principle, such tools are more precise in identifying memory leaks, since they can differentiate between big, unchanging data structures and those that keep growing over time. However, collecting this information at a sufficiently detailed level often incurs high runtime overhead. This makes the method unusable in production or even in pre-production testing.
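Full-featured tools are heavyweight, but two of the symptoms described earlier are cheap to watch from inside the app: a steadily growing used heap, and an app that spends most of its time in GC. Here is a minimal sketch using the standard `java.lang.management` API. The class name and the sampling interval you choose are assumptions. GC times reported by concurrent collectors are only approximate.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

class GcOverheadSampler {
    private long lastGcMillis = totalGcMillis();
    private long lastWallMillis = System.currentTimeMillis();

    /** Cumulative GC time in ms across all collectors (beans reporting -1 are skipped). */
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime();
            if (t > 0) total += t;
        }
        return total;
    }

    /** Currently used heap in bytes (includes garbage that has not been collected yet). */
    static long usedHeapBytes() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage().getUsed();
    }

    /**
     * Approximate fraction of wall-clock time spent in GC since the previous call.
     * Values approaching 1.0 match the "app seems to hang in GC" scenario.
     */
    double sampleGcFraction() {
        long gcNow = totalGcMillis();
        long wallNow = System.currentTimeMillis();
        long wallDelta = Math.max(1, wallNow - lastWallMillis); // avoid division by zero
        double fraction = (double) (gcNow - lastGcMillis) / wallDelta;
        lastGcMillis = gcNow;
        lastWallMillis = wallNow;
        return fraction;
    }
}
```

Calling `sampleGcFraction()` and `usedHeapBytes()` periodically, for example from a `ScheduledExecutorService`, and logging the results is usually enough to notice a leak early. It will not tell you *which* data structure is responsible, though.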

Because of this overhead, the second option is really appealing: analyzing a single heap dump and identifying data structures that are "leak candidates." Taking a heap dump pauses the running JVM for a relatively brief period. Generating a dump takes about 2 seconds per 1 GB of used heap, so if your app uses 4 GB, for example, it would be stopped for about 8 seconds. A dump can be taken on demand (using the jmap JDK utility) or when an app fails with an OutOfMemoryError (if the JVM was started with the -XX:+HeapDumpOnOutOfMemoryError command-line option).
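From the command line, an on-demand dump is typically taken with `jmap -dump:live,format=b,file=heap.hprof <pid>` or `jcmd <pid> GC.heap_dump heap.hprof`. On HotSpot-based JVMs, the app can also request a dump of itself through `com.sun.management.HotSpotDiagnosticMXBean`. A minimal sketch follows; the `HeapDumper` class and the default file name are illustrative.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;

class HeapDumper {
    /**
     * Writes a heap dump of the current JVM to the given path (HotSpot-based JVMs only).
     * live = true dumps only reachable objects, which typically triggers a full GC first.
     * The target file must not already exist, and some JDK versions reject names that
     * do not end in ".hprof".
     */
    static void dumpHeap(String path, boolean live) throws IOException {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        hotspot.dumpHeap(path, live);
    }

    public static void main(String[] args) throws IOException {
        dumpHeap(args.length > 0 ? args[0] : "manual-dump.hprof", true);
    }
}
```

Like `jmap`, this pauses the JVM while the dump is written, so the same "about 2 seconds per GB" estimate applies.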

A heap dump is a binary file roughly the size of your JVM's used heap, so it can only be read and analyzed with special tools. A number of such tools are available, both open-source and commercial. The most popular open-source tool is Eclipse MAT; there are also VisualVM and some less powerful, lesser-known tools. The commercial tools include the general-purpose Java profilers JProfiler and YourKit, as well as JXRay, a tool built specifically for heap dump analysis.

Unlike the other tools, JXRay immediately analyzes a heap dump for a large number of common problems, such as duplicate strings and other objects, suboptimal data structures, and, yes, memory leaks. The tool generates an HTML report with all the collected information, so you can view the results anywhere, at any time, and easily share them with others. It also means that you can run the tool on any machine, including big and powerful but "headless" machines in a data center.

Let's consider an example. The simple Java program below simulates a situation in which (a) a large part of the memory is occupied by big, unchanging objects, and (b) a large number of threads each maintain identical, relatively small data structures (ArrayLists in our example) that keep growing.

```java
import java.util.ArrayList;
import java.util.List;

public class MemLeakTest extends Thread {

    private static final int NUM_ARRS = 300;
    // 300 arrays x 2,000,000 bytes: about 600 MB of big, unchanging data
    private static final byte[][] STATIC_DATA = new byte[NUM_ARRS][];

    static { // Initialize unchanging static data
        for (int i = 0; i < NUM_ARRS; i++) STATIC_DATA[i] = new byte[2000000];
    }

    private static final int NUM_THREADS = 20;

    public static void main(String[] args) {
        MemLeakTest[] threads = new MemLeakTest[NUM_THREADS];
        for (int i = 0; i < NUM_THREADS; i++) {
            threads[i] = new MemLeakTest();
            threads[i].start();
        }
    }

    @Override
    public void run() { // Each thread maintains its own "history" list
        List<HistoryRecord> history = new ArrayList<>();
        // Records are added forever and never removed: this is the "leak"
        for (int count = 0; ; count++) {
            history.add(new HistoryRecord(count));
            if (count % 10000 == 0) System.out.println("Thread " + getName() + ": count = " + count);
        }
    }

    static class HistoryRecord {
        int id;
        String eventName;

        HistoryRecord(int id) {
            this.id = id;
            this.eventName = "Foo xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx" + id;
        }
    }
}
```

This application will inevitably crash with an OutOfMemoryError. To make that happen quickly and to get a heap dump of a reasonable size, compile this code and run it as follows:

```
java -Xms1g -Xmx1g -verbose:gc -XX:+UseConcMarkSweepGC -XX:+UseParNewGC \
  -XX:OnOutOfMemoryError="kill -9 %p" -XX:+HeapDumpOnOutOfMemoryError \
  -XX:HeapDumpPath="./memleaktest.hprof" MemLeakTest
```

Several of the above command-line flags deserve some explanation:

It is suggested to use the Concurrent Mark-Sweep (CMS) collector (`-XX:+UseConcMarkSweepGC -XX:+UseParNewGC`), at least on JDK 8 or older. The default (parallel) garbage collector in these JDK versions is really suboptimal in many respects. In this particular case, it would make the app struggle for a really long time before it finally runs out of memory. Both flags have since been deprecated and later removed (CMS itself was removed in JDK 14), so leave them out on newer JDKs.

The `-XX:OnOutOfMemoryError="kill -9 %p"` flag tells the JVM to kill itself as soon as any thread throws an `OutOfMemoryError` (`%p` is replaced with the JVM's process ID). This is a very important flag that every multi-threaded app should use in production. The only exception is an app specifically designed to handle `OutOfMemoryError` and keep running afterwards, which is hard to implement correctly.

`-XX:HeapDumpPath` can specify either a heap dump file name or a directory to write the dump to. In the former case, if a file with that name already exists, HotSpot does not overwrite it and the new dump is not written, so remove old dumps before rerunning. In the latter case, each dump gets a name that follows the `java_pid<pid>.hprof` pattern.
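Two of these flags, `HeapDumpOnOutOfMemoryError` and `HeapDumpPath`, are "manageable" in HotSpot. This means they can be read and changed in a running JVM, for example with `jinfo -flag`. The minimal sketch below does the same from inside the app via `HotSpotDiagnosticMXBean`; the `HeapDumpFlags` helper and its method names are hypothetical. `-XX:OnOutOfMemoryError` is not manageable, so it still has to be set on the command line.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

class HeapDumpFlags {
    private static final HotSpotDiagnosticMXBean HOTSPOT =
            ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);

    /** Current value of a HotSpot flag as a string, e.g. "false" for an unset boolean flag. */
    static String flag(String name) {
        return HOTSPOT.getVMOption(name).getValue();
    }

    /**
     * Enables heap dumps on OutOfMemoryError in an already running JVM. This works only
     * because both flags are manageable; setVMOption throws IllegalArgumentException
     * for flags that are not writeable.
     */
    static void enableHeapDumpOnOom(String dumpPath) {
        HOTSPOT.setVMOption("HeapDumpPath", dumpPath);
        HOTSPOT.setVMOption("HeapDumpOnOutOfMemoryError", "true");
    }
}
```

Checking `flag("HeapDumpOnOutOfMemoryError")` at startup is a cheap way to confirm that a production JVM was actually launched with the intended options.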

When you start the above program, it will run for 30 seconds or so, logging more and more GC pauses until one of its threads throws an OutOfMemoryError. At that point, the JVM will generate a heap dump and stop.

To analyze the dump with JXRay, download the jxray.zip file from www.jxray.com, unzip it, and run

```
jxray.sh memleaktest.hprof memleaks.html
```

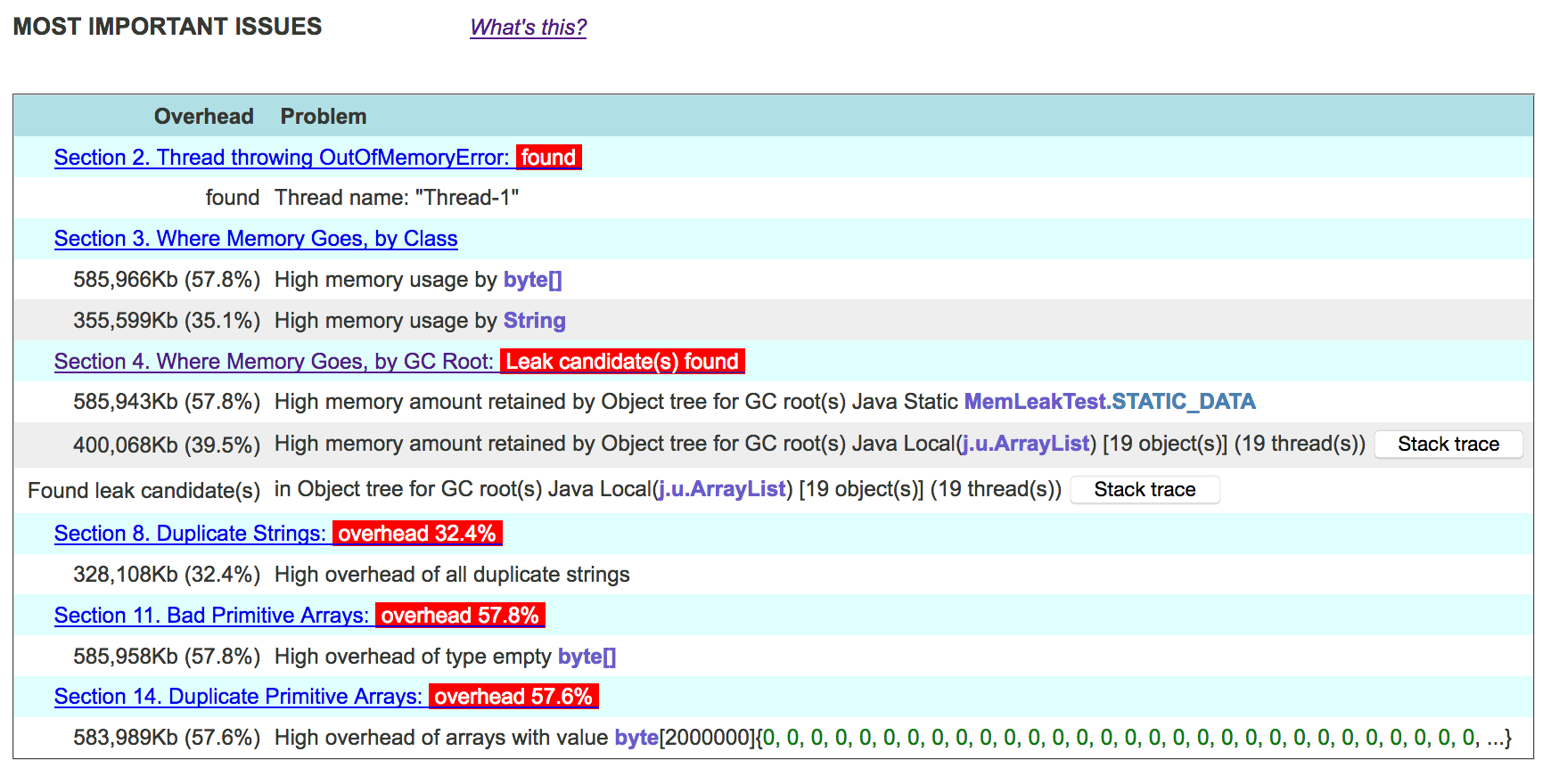

In 10-15 seconds, JXRay will generate the HTML file. Open it, and on the top, you will immediately see the list of the most important problems that the tool has detected:

As you can see, in addition to the memory leak, the tool found several other problems that cause a large memory waste. In our benchmark, these issues — duplicate strings, empty (all zero) byte arrays, and array duplication — are a side effect of the simplistic code. However, in real life, many unoptimized programs demonstrate exactly the same problems, sometimes causing similarly large memory waste!

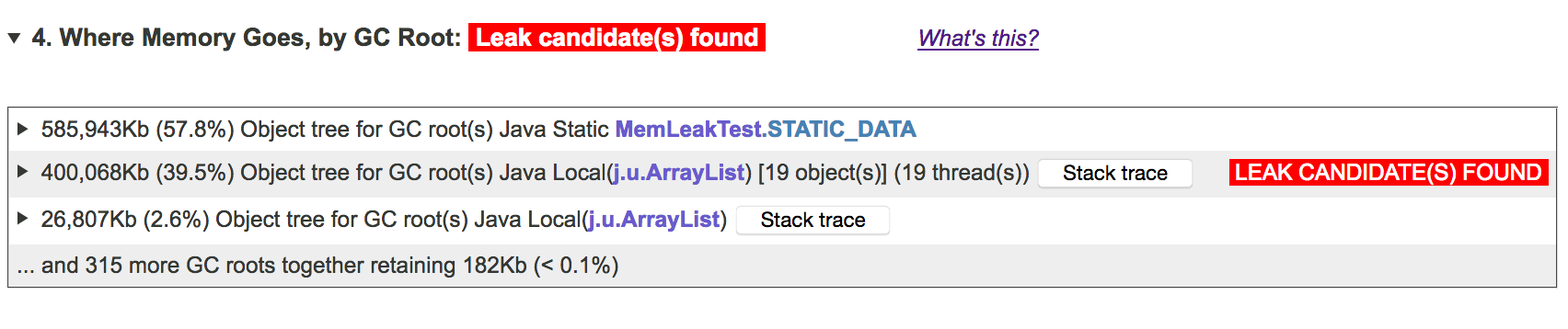

Now, let's look at the main problem that we want to illustrate here — the memory leak. For that, we jump to section 4 of the report and click to expand it:

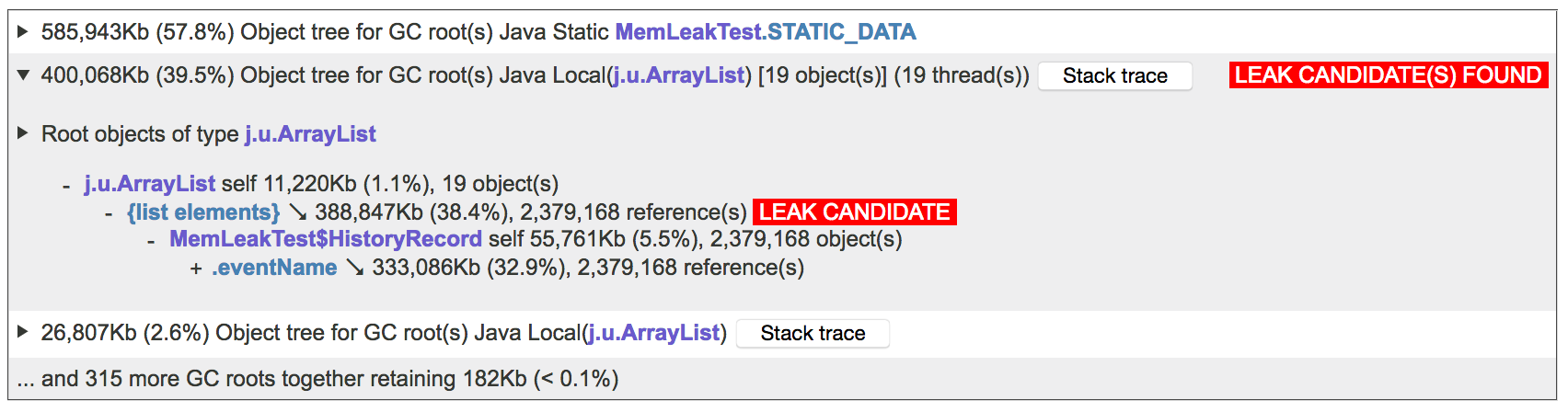

In this table, each expandable line contains a GC root with the amount of memory retained by it. The top GC root is our STATIC_DATA array, but as you can see, JXRay doesn't think that this is a source of a memory leak. However, the second GC root, which is an aggregation of all of the identical ArrayList s referenced by our worker threads, is marked as a leak candidate. If you click on this line, you will immediately see a reference chain (or, more precisely, multiple identical reference chains lumped together) leading to the problematic objects:

That's it. We are done! We can clearly see the data structures that manage the leaking objects. Note that each individual ArrayList only retains about 2 percent of memory, so if we looked at these lists separately, it would be hard to realize that they are leaking memory. However, JXRay treats these objects as identical, because they come from threads with identical stack traces and have identical reference chains. Once these objects and the memory that they retain are aggregated, it's much easier to see what's really going on.

How does JXRay decide which objects (usually collections or arrays) are the potential memory leak sources? In a nutshell, it first scans the heap dump starting from the GC roots and builds an object tree internally, aggregating identical reference chains whenever possible. Then, it checks this tree for objects that reference other objects such that (a) the number of referenced objects per collection/array is high enough, and (b) together, the referenced objects retain a sufficiently large amount of memory. Surprisingly, in practice not many objects fit these criteria. Thus, the tool finds a real leak if it's serious enough, without bothering you with many false positives.
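The two criteria can be illustrated with a toy model. This is *not* JXRay's implementation; it is a hypothetical sketch under simplifying assumptions. The `Obj` class stands in for a heap object, and the object graph is a tree, so an object's retained size is simply the size of its subtree. Collections reached via the same reference chain are aggregated first, and only then are the thresholds applied.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Toy illustration of a leak-candidate heuristic; not JXRay's actual algorithm. */
class LeakCandidateFinder {

    /** Hypothetical simplified heap object. In this tree, retained size = subtree size. */
    static final class Obj {
        final long shallowBytes;
        final List<Obj> refs = new ArrayList<>();

        Obj(long shallowBytes) { this.shallowBytes = shallowBytes; }

        Obj add(Obj child) { refs.add(child); return this; }

        long retainedBytes() {
            long total = shallowBytes;
            for (Obj r : refs) total += r.retainedBytes();
            return total;
        }
    }

    /** All collections reached via one reference chain, aggregated into a single entry. */
    static final class Candidate {
        final String chain;
        final long refCount;
        final long retainedBytes;

        Candidate(String chain, long refCount, long retainedBytes) {
            this.chain = chain;
            this.refCount = refCount;
            this.retainedBytes = retainedBytes;
        }
    }

    /**
     * Aggregates the collections that share a reference chain, then keeps only the groups
     * whose total element count reaches minRefs AND whose elements retain at least minBytes.
     */
    static List<Candidate> find(Map<String, List<Obj>> collectionsByChain, long minRefs, long minBytes) {
        List<Candidate> result = new ArrayList<>();
        for (Map.Entry<String, List<Obj>> e : collectionsByChain.entrySet()) {
            long refs = 0;
            long bytes = 0;
            for (Obj collection : e.getValue()) {
                refs += collection.refs.size();
                for (Obj element : collection.refs) bytes += element.retainedBytes();
            }
            if (refs >= minRefs && bytes >= minBytes) {
                result.add(new Candidate(e.getKey(), refs, bytes));
            }
        }
        return result;
    }
}
```

This mirrors the example with made-up thresholds `minRefs = 1000` and `minBytes = 1,000,000`. Suppose there are 20 lists, each holding 1,000 records of 64 bytes. No single list passes (64,000 bytes), but their aggregated entry does (20,000 references, 1,280,000 bytes). A chain holding only 300 huge arrays fails `minRefs` despite its size.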

Of course, this method has its limitations. It cannot tell you whether a given data structure is really a leak, i.e. whether it keeps growing over time. However, even if it isn't, the memory pattern above is worth looking for once simpler things have been optimized. In some situations, you may find that so many objects are not worth keeping in memory, or that more compact data structures can be used.
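One practical way to close that gap is to take two heap dumps some time apart and compare how much memory is retained under the same reference chains. The sketch below assumes you have already extracted "reference chain to retained bytes" from each dump with your analysis tool of choice. The input format, the `GrowthCheck` name and the growth threshold are all hypothetical, not a JXRay feature.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class GrowthCheck {
    /**
     * Returns the reference chains whose retained size grew by at least minGrowthFactor
     * between two snapshots. Chains missing from the first snapshot are skipped.
     */
    static List<String> growingChains(Map<String, Long> before, Map<String, Long> after,
                                      double minGrowthFactor) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, Long> e : after.entrySet()) {
            Long old = before.get(e.getKey());
            if (old != null && old > 0 && e.getValue() >= old * minGrowthFactor) {
                result.add(e.getKey());
            }
        }
        return result;
    }
}
```

A chain flagged both by single-dump analysis and by this growth check is a much stronger leak suspect than one flagged by either alone.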

In summary, Java applications may suffer from the unbounded growth of some data structures, and that is what is called a "Java memory leak." There are multiple ways to detect leaks. Heap dump analysis is one that incurs minimal overhead and produces few false positives. Several tools analyze JVM heap dumps for memory leaks, and JXRay is the one that requires the least effort from the user.

Opinions expressed by DZone contributors are their own.
