Event-Based Data Warehousing with Azure Event Hubs and Logic Apps

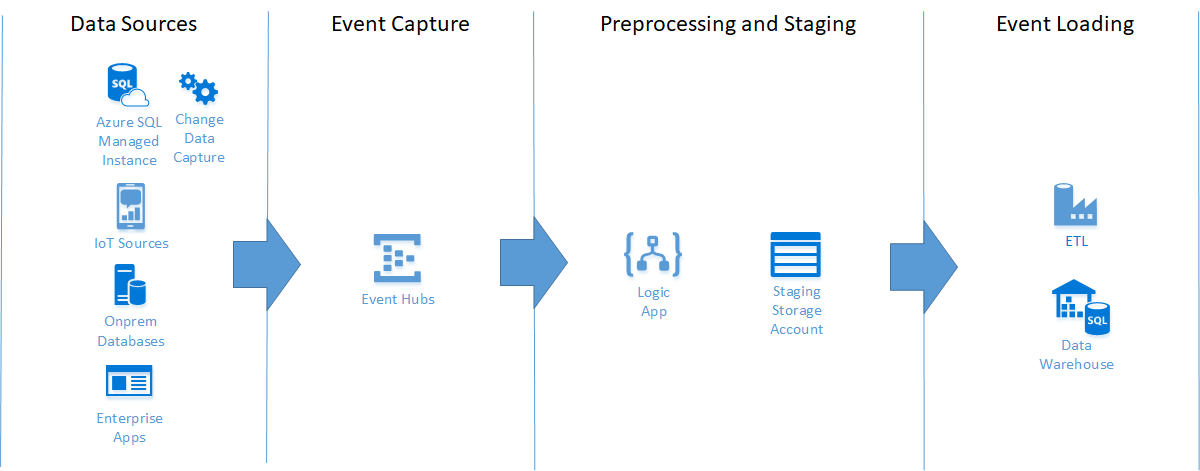

Using Azure technologies to ingest data from IoT, relational databases, and enterprise applications all through an event-based architecture pattern.

Join the DZone community and get the full member experience.

Join For FreeA traditional data warehouse will typically ingest data from operational databases which are hosted in RDBMSs such as SQL Server and Oracle. This data ingestion relies on complex and costly change-data detection methods such as row hashing. A modern data warehouse will also be designed to ingest data from streaming data sources such as the Internet of Things (IoT) devices using an event-driven architecture. By leveraging cloud infrastructure, a data warehouse can use the same event-driven approach to simplify the ingestion from relational databases in addition to processing streaming data. This article describes a pattern using Microsoft Azure Event Hubs along with other Azure products to build an event-driven architecture for both streaming and relational data sources.

Data Sources

Azure Event Hubs provides a highly resilient and scalable endpoint from which to receive event-based data from upstream systems, responding to HTTPS POST requests. This is perfect for IoT-style data and I have previously configured Event Hubs to capture data from a fleet of vehicles which are fitted with smart monitoring devices. However, Event Hubs can also be used to ingest event data from anything that can send JSON to an HTTPS endpoint.

One interesting pattern is to use SQL Server Change Data Capture to automatically detect data changes and send the changes to the Event Hub. Change Data Capture has been around since SQL 2017 but one gotcha is that Change Data Capture requires SQL Server Agent to be running - which rules out Azure SQL Database - but it will work with an Azure SQL Managed Instance or on-premise SQL Server. This pattern is much simpler than ETL based change data detection which requires (usually) row hashing and transfer of large volumes of data across the network.

Enterprise Applications can be configured to send events to Event Hub. For example SAP (along with SAP CPI) can send events as JSON to anEvent Hub. .NET applications can make use of SDKs to post directly to the Event Hub as it is based on Windows Service Bus technologies.

Event Hub’s security model requires an Access Token (SAS) to be generated based on a key and included in the HTTPS header. This is different from SAS for storage accounts which are generated one time within Azure. Event Hub’s SAS token is generated by the sending client based on an Access Policy and Key. Microsoft provides some sample code in various languages, but the sending system needs to be able to calculate the access token. In SAP CPI this is done in Groovy and Powershell is used in Windows environments such as SQL Server.

Event Capture and Staging

Event Hubs is easy to set up although some design decisions are required on the number of partitions and scale. Multi-region configuration for disaster recovery is also very simple to set up (although take a look at my article on the truth about cloud DR); Azure even provides a DNS-based load balancer and automated failover.

Event Hubs does have capability to automatically “Capture” events to Blob store or ADLS. This means that Event Hubs will take the events received and save them as a file in a Storage Account. This may be fine for some use cases, but in my experience it has enough limitations to make me look at other solutions, namely:

The events are saved as Avro files with the JSON embedded within Avro

The (non-configurable) folder structure is overly convoluted and hard for ETL tools to manage

The approach I have used is Azure Logic Apps. Azure Logic Apps is a tool for creating business workflows with a drag-n-drop low-code designer. Logic Apps have a multitude of different trigger types and connectors but one of the triggers is an event being received in an Event Hub. You can easily create a Logic Apps to save the event data as a more user-friendly JSON file, and can also carry out some data pre-processing such as saving the JSON in different locations based on the type of event received.

Event Loading

Once the event data has been staged into the Blob store, the next step is to load it into the data warehouse. The approach for this depends on the warehouse technology you are using and the frequency and volume of data being ingested. Platforms such as Azure Synapse and Snowflake are able to ingest the data directly from Blob Store by mounting them as External Tables (Azure Synapse) or through Snowpipe (Snowflake). However, some thought needs to be given on how to remove the staged files once they have been loaded in, plus further processing may be required on the data before loading it into the warehouse. Therefore an ETL tool can be used to process and load the data. Azure Data Factory is an obvious choice when operating in the Azure ecosystem, however other ETL tools will also work if they can read from Azure Blob store.

Conclusion

When building a data warehouse in Azure Cloud, event-based architecture allows the ingestion of data not only from IoT sources but also from traditional database and enterprise applications without having to build change-data detection into the data warehouse. Azure Event Hubs, Logic Apps and Storage Accounts provide for a flexible and scalable way of ingesting event data into your warehouse.

Opinions expressed by DZone contributors are their own.

Comments