Enhanced API Security: Fine-Grained Access Control Using OPA and Kong Gateway

Kong secures APIs and OPA handles policy decisions. This custom plugin caches user data, minimizing database checks and ensuring fast, accurate access control.

Kong Gateway is an open-source API gateway that ensures only the right requests get in while managing security, rate limiting, logging, and more. OPA (Open Policy Agent) is an open-source policy engine that takes control of your security and access decisions. Think of it as the mind that decouples policy decision-making from your app, so your services don’t need to stress about working out the rules themselves. Instead, OPA does the thinking with its Rego language, evaluating policies across APIs, microservices, or even Kubernetes. It’s flexible and secure, and it makes updating policies a breeze.

OPA works by evaluating three key things: input (real-time data like requests), data (external info like user roles), and policy (the logic in Rego that decides whether to "allow" or "deny"). Together, these components allow OPA to keep your security game strong while keeping things simple and consistent.

What Are We Seeking to Accomplish or Resolve?

Oftentimes, the data in OPA is like a steady old friend: static or slowly changing. It’s used alongside the ever-changing input data to make smart decisions. But imagine a system with a sprawling web of microservices, tons of users, and a massive database like PostgreSQL. This system handles a high volume of transactions every second and needs to keep up its speed and throughput without breaking a sweat.

Fine-grained access control in such a system is tricky, but with OPA, you can offload the heavy lifting from your microservices and handle it at the gateway level. By teaming up Kong API Gateway with OPA, you get both high throughput and precise access control.

How do you maintain accurate user data without slowing things down? Constantly hitting that PostgreSQL database to fetch millions of records is both expensive and slow. Achieving both accuracy and speed usually requires compromises between the two. Let’s aim to strike a practical balance by developing a custom plugin (at the gateway level) that frequently loads and locally caches data for OPA to use in evaluating its policies.

Demo

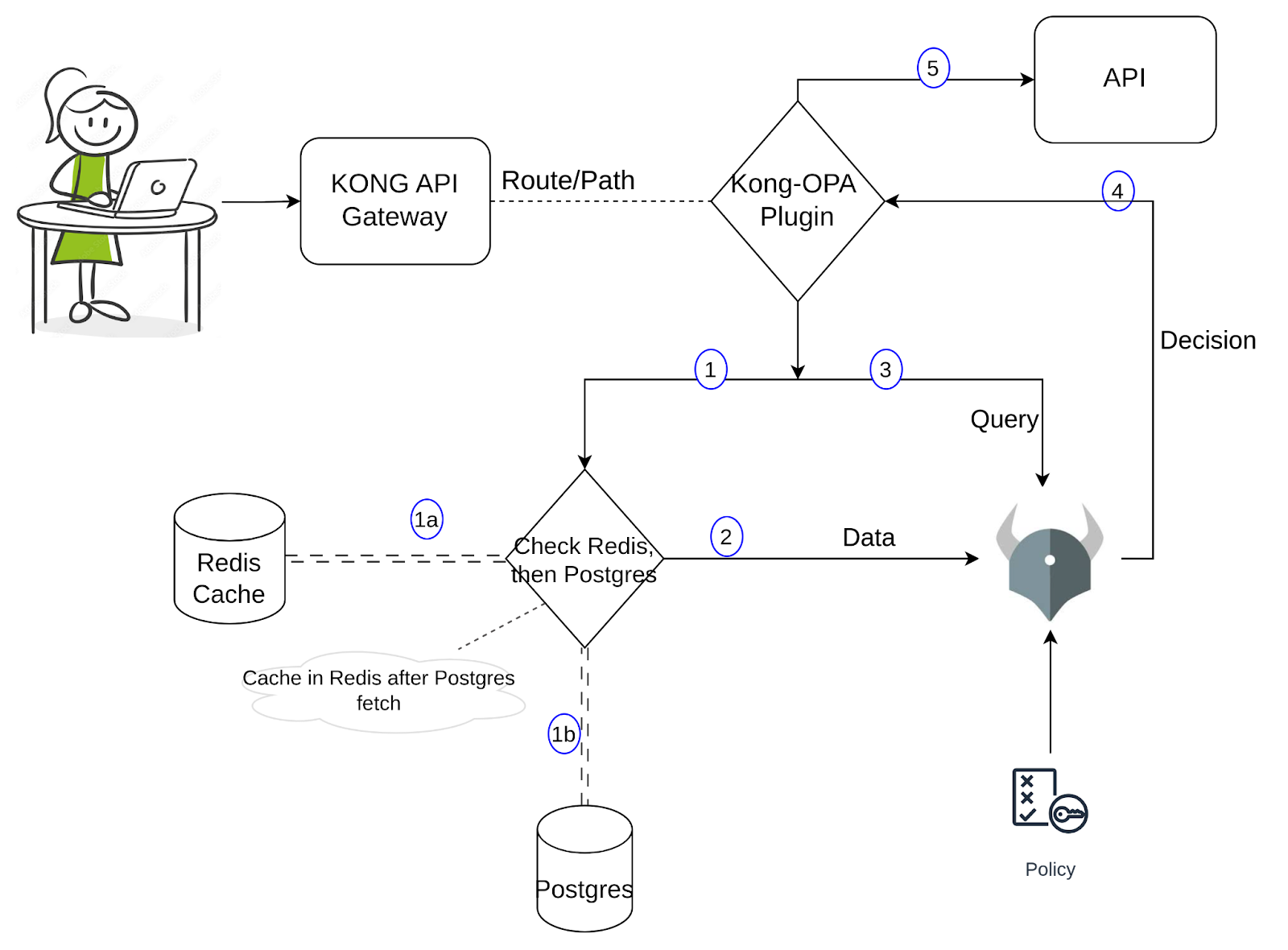

For the demo, I’ve set up sample data in PostgreSQL, containing user information such as name, email, and role. When a user tries to access a service via a specific URL, OPA evaluates whether the request is permitted. The Rego policy checks the request URL (resource), method, and the user’s role, then returns either true or false based on the rules. If true, the request is allowed to pass through; if false, access is denied. So far, it's a straightforward setup. Let’s dive into the custom plugin. For a clearer understanding of its implementation, please refer to the diagram below.

When a request comes through the Kong proxy, the custom plugin is triggered. The plugin fetches the required data and passes it to OPA along with the input/query. The data fetch has two parts: the plugin first looks in Redis for the required values and, if they are found, passes them along to OPA; otherwise, it queries Postgres, caches the result in Redis, and then passes it along to OPA. We can revisit this when we run the commands in the next section and observe the logs. OPA makes a decision (based on the policy, input, and data), and if the request is allowed, Kong proceeds to send it to the API. With this approach, the number of queries to Postgres is significantly reduced, yet the data available to OPA stays fairly accurate and latency stays low.
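The lookup order described above is a cache-aside pattern. Here is a minimal Python sketch of it, not the plugin's actual Lua code: `FakeRedis`, `FakeUserDB`, `get_user_roles`, and `build_opa_query` are hypothetical names, and in-memory stand-ins replace Redis and Postgres so it runs on its own. The `{"input": ...}` envelope is how OPA's REST API receives input; the field names inside it are assumptions.

```python
import json


class FakeRedis:
    """In-memory stand-in for Redis (hypothetical, for illustration only)."""

    def __init__(self):
        self._store = {}

    def get(self, key):
        return self._store.get(key)

    def set(self, key, value):
        self._store[key] = value


class FakeUserDB:
    """Stand-in for the PostgreSQL user table; counts how often it is queried."""

    def __init__(self, rows):
        self._rows = rows
        self.queries = 0

    def fetch_roles(self, email):
        self.queries += 1
        return self._rows.get(email, [])


def get_user_roles(cache, db, email):
    """Cache-aside lookup: try the cache first, fall back to the database."""
    cached = cache.get(email)
    if cached is not None:
        return json.loads(cached)["roles"]  # cache hit: no database query
    roles = db.fetch_roles(email)  # cache miss: query the database once
    cache.set(email, json.dumps({"roles": roles, "email": email}))
    return roles


def build_opa_query(path, method, roles):
    """Wrap the request facts and the roles in OPA's {"input": ...} envelope."""
    # The keys inside "input" are assumptions, not the plugin's real schema.
    return {"input": {"path": path, "method": method, "roles": roles}}


cache = FakeRedis()
db = FakeUserDB({"alice@example.com": ["Moderator"]})

# Two consecutive requests: only the first one should reach the database.
for attempt in (1, 2):
    roles = get_user_roles(cache, db, "alice@example.com")
    print(attempt, build_opa_query("/demo", "GET", roles), "db queries:", db.queries)
```

A real deployment would probably also set an expiry on each cached entry so that role changes in Postgres eventually reach OPA; this walkthrough does not say how the plugin handles that.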

To start building a custom plugin, we need a handler.lua where the core logic of the plugin is implemented and a schema.lua which, as the name indicates, defines the schema for the plugin’s configuration. If you are starting to learn how to write custom plugins for Kong, please refer to this link for more info. The documentation also explains how to package and install the plugin. Let’s proceed and understand the logic of this plugin.

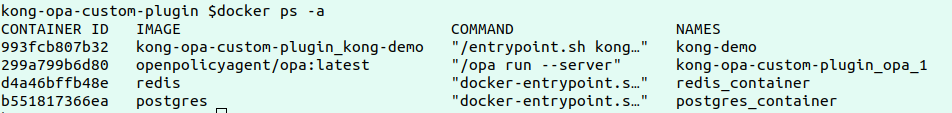

The first step of the demo is to install OPA, Kong, Postgres, and Redis on your local setup or any cloud setup. Please clone this repository.

Review the docker-compose YAML file, which defines the configuration for deploying all four services above. Look at the Kong environment variables to see how the custom plugin is loaded.

Run the below commands to deploy the services:

docker-compose build

docker-compose up

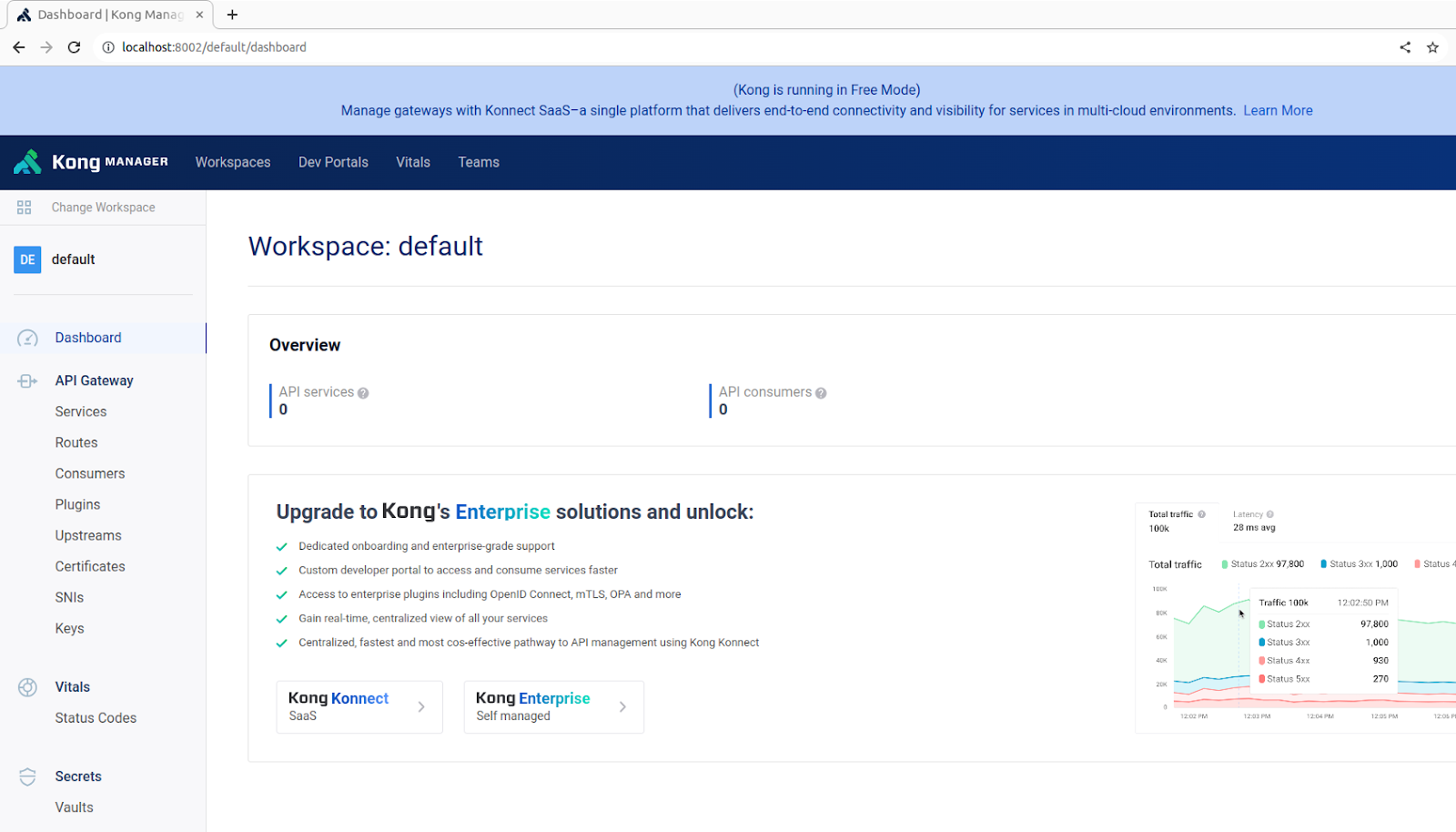

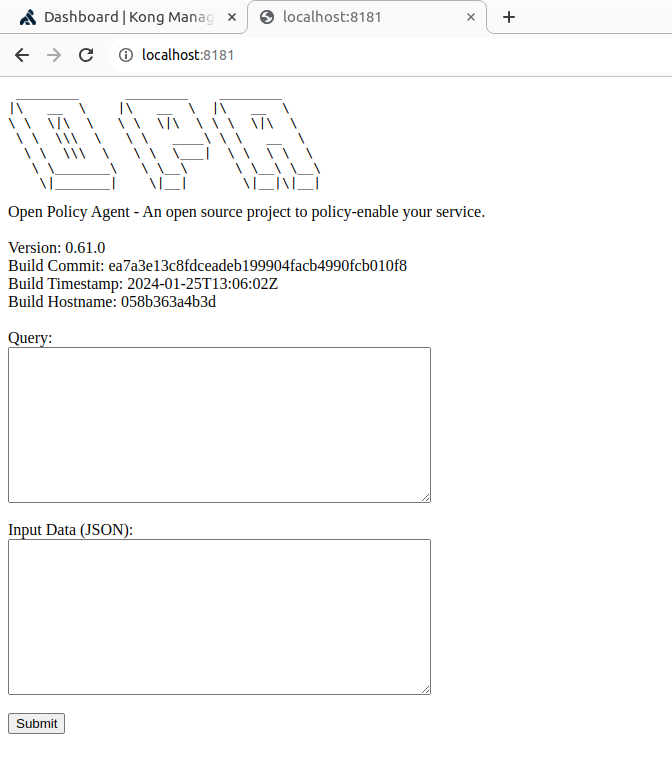

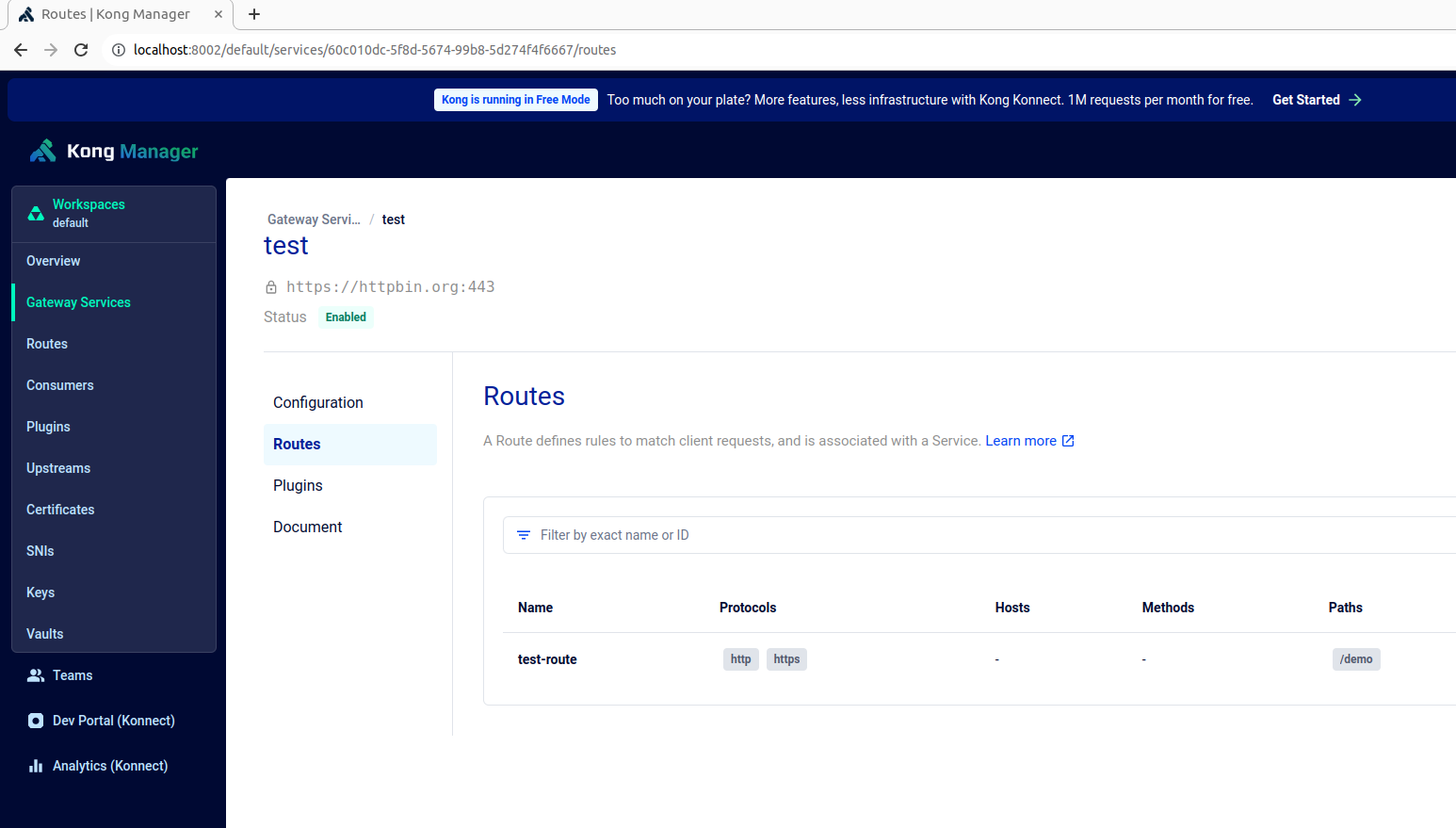

Once we verify the containers are up and running, Kong Manager and OPA are available on their respective endpoints, http://localhost:8002 and http://localhost:8181.

Create a test service and route, and add our custom Kong plugin to this route, using the command below:

curl -X POST http://localhost:8001/config -F config=@config.yaml

The OPA policy, defined in authopa.rego file, is published and updated to the OPA service using the below command:

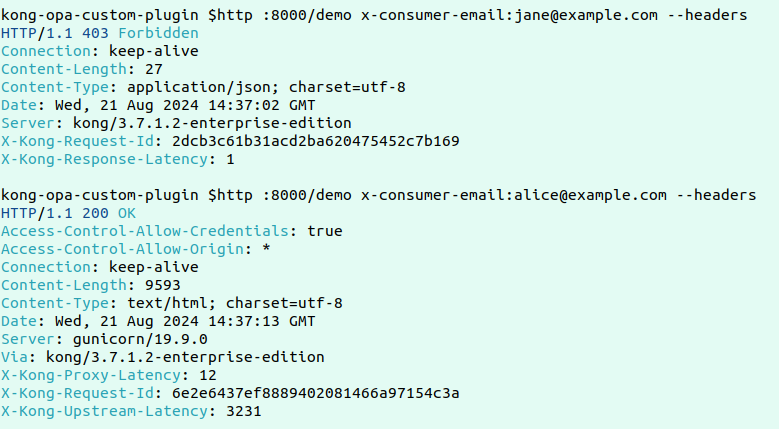

curl -X PUT http://localhost:8181/v1/policies/mypolicyId -H "Content-Type: application/json" --data-binary @authopa.regoThis sample policy grants access to user requests only if the user is accessing the /demo path with a GET method and has the role of "Moderator". Additional rules can be added as needed to tailor access control based on different criteria.

opa_policy = [

{

"path": "/demo",

"method": "GET",

"allowed_roles": ["Moderator"]

}

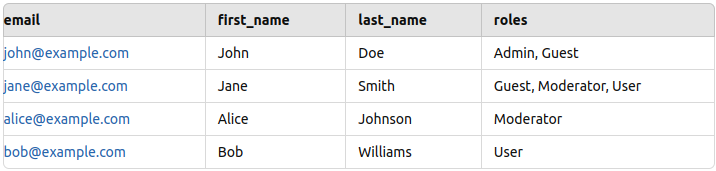

]Now the setup is ready, but before testing, we need some test data to add in Postgres. I added some sample data (name, email, and role) for a few employees as shown below (please refer to the PostgresReadme).
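To see how the sample policy and the test data combine into a decision, here is a rough Python sketch of the same check. The real evaluation happens in Rego inside OPA. `RULES` mirrors the sample policy, `is_allowed` is a hypothetical helper, and the "Viewer" role is made up for the failing case.

```python
# Rule table mirroring the sample policy: GET /demo is open to Moderators only.
RULES = [
    {"path": "/demo", "method": "GET", "allowed_roles": ["Moderator"]},
]


def is_allowed(path: str, method: str, roles: list[str]) -> bool:
    """Return True if a rule matches the path and method and shares a role."""
    for rule in RULES:
        if rule["path"] == path and rule["method"] == method:
            if set(rule["allowed_roles"]) & set(roles):
                return True
    return False  # default deny when no rule matches


# A Moderator may GET /demo; a hypothetical "Viewer" role may not.
print(is_allowed("/demo", "GET", ["Moderator"]))
print(is_allowed("/demo", "GET", ["Viewer"]))
```

The sketch denies by default: a request that matches no rule is rejected rather than waved through.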

Here’s a sample failed and successful request:

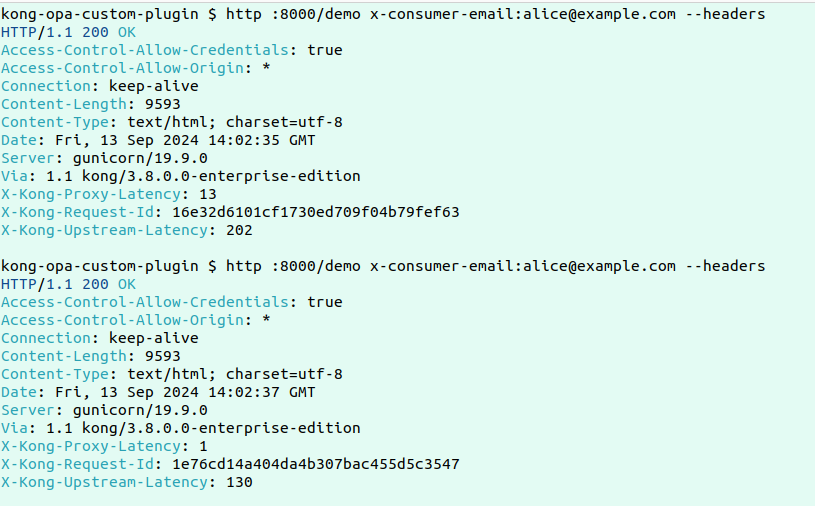

Now, to test the core functionality of this custom plugin, let’s make two consecutive requests and check the logs for how the data retrieval is happening.

Here are the logs:

2024/09/13 14:05:05 [error] 2535#0: *10309 [kong] redis.lua:19 [authopa] No data found in Redis for key: alice@example.com, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"

2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] handler.lua:25 [authopa] Fetching roles from PostgreSQL for email: alice@example.com, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"

2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] postgres.lua:43 [authopa] Fetched roles: Moderator, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"

2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] handler.lua:29 [authopa] Caching user roles in Redis, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"

2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] redis.lua:46 [authopa] Data successfully cached in Redis, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"

2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] opa.lua:37 [authopa] Is Allowed by OPA: true, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"

2024/09/13 14:05:05 [info] 2535#0: *10309 client 192.168.96.1 closed keepalive connection

------------------------------------------------------------------------------------------------------------------------

2024/09/13 14:05:07 [info] 2535#0: *10320 [kong] redis.lua:23 [authopa] Redis result: {"roles":["Moderator"],"email":"alice@example.com"}, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "75bf7a4dbe686d0f95e14621b89aba12"

2024/09/13 14:05:07 [info] 2535#0: *10320 [kong] opa.lua:37 [authopa] Is Allowed by OPA: true, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "75bf7a4dbe686d0f95e14621b89aba12"

The logs show that for the first request, when there’s no data in Redis, the data is fetched from Postgres and cached in Redis before being sent on to OPA for evaluation. The second request finds the data in Redis, skips the Postgres query entirely, and goes straight to OPA, so the response is faster.
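If you want to check this behavior over many requests rather than by eye, a small script can label each request as a cache hit or miss. The sketch below relies only on the two Redis messages and the request_id field visible in the logs above. The helper name `classify_requests` is hypothetical, and the sample lines are abbreviated copies of the real ones.

```python
import re

# Markers copied from the plugin's log messages shown above.
MISS_MARKER = "No data found in Redis"
HIT_MARKER = "Redis result:"
REQUEST_ID = re.compile(r'request_id: "([0-9a-f]+)"')


def classify_requests(log_lines):
    """Map each request_id to 'miss' or 'hit' based on the Redis log messages."""
    outcome = {}
    for line in log_lines:
        match = REQUEST_ID.search(line)
        if not match:
            continue  # line carries no request_id (e.g. connection-closed notices)
        if MISS_MARKER in line:
            outcome[match.group(1)] = "miss"
        elif HIT_MARKER in line:
            outcome[match.group(1)] = "hit"
    return outcome


# Abbreviated versions of the first line of each request from the logs above.
sample = [
    '[authopa] No data found in Redis for key: alice@example.com, '
    'request_id: "ebbb8b5b57ff4601ff194907e35a3002"',
    '[authopa] Redis result: {"roles":["Moderator"],"email":"alice@example.com"}, '
    'request_id: "75bf7a4dbe686d0f95e14621b89aba12"',
]
print(classify_requests(sample))
```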

Conclusion

By combining Kong Gateway with OPA and adding a custom plugin with Redis caching, we balance accuracy and speed for access control in high-throughput environments. The plugin minimizes the number of costly Postgres queries by caching user roles in Redis after the initial query. On subsequent requests, the data is retrieved from Redis, which reduces latency while keeping the user information available to OPA reasonably accurate. This approach handles fine-grained access control efficiently at the gateway level, making it a good fit for scaling microservices while enforcing precise access policies.

Opinions expressed by DZone contributors are their own.
