End-To-End Automated Testing of a Home Robot Using April Tags

In this article, the author will explain how to perform E2E automated testing for a home robot using real-world examples.

In my previous article, I gave an overview of robotics software design, the different approaches to testing, and how to approach testing at different layers. In this article, I will explain how to perform E2E automated testing for a home robot.

Challenges in End-To-End Automation

Robotic automation comes with plenty of challenges. The complexity of the software stack alone makes this type of automation hard. Testing can be done at any layer, from the front end all the way down to the low-level layers. End-to-end testing is harder still because it adds the challenge of 3D space: tests have to run in a physical environment with real home features. On top of that, there are no off-the-shelf tools or technologies for automating this kind of testing. Solving it takes a deep understanding of the problem and considerable expertise. Don't worry. I will share my experience here to give you an overview of how it can be done.

Real World Example

Let's dive straight into a real-world example. You are working as a lead Software Engineer in Test at a robotics company that makes home robots, and you are responsible for a robot that is used in factories and warehouses for moving things around. The first thing you may face is feeling overwhelmed by which scenarios to test and how to apply your automation skills to get started. For a home robotic vacuum cleaner, a couple of simple real-world scenarios could be:

- How can I validate that the robot moves from the living room to the kitchen? The robot could go to the bedroom and claim it is in the kitchen. How do we ensure that it reaches the kitchen, and only the kitchen, 100 times out of 100?

- How do you ensure that when the battery is low, the robot goes back to the charging dock and charges itself?

It might be overwhelming initially, but I am here to explain how these can be done.

Tools and Technologies Available

Unfortunately, there is no single tailor-made tool to solve this problem. There are, however, tools and technologies used in other fields that we can adapt to our use case:

- April Tag and Raspberry Pi Combo

- HTC Vive motion tracking gaming software

April Tag and Raspberry Pi Combo

What Is a Raspberry Pi?

Raspberry Pi is a small, low-cost single-board computer that typically runs Linux and can do most of the tasks that a larger Linux machine can do. In the world of devices, it is used because of the capabilities it offers and the cost it saves compared to full-size Linux/Ubuntu machines.

What Is April Tag?

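An April tag is a printed visual marker that looks similar to a QR code but serves a different purpose: instead of carrying arbitrary data, each tag encodes a small numeric ID that a camera can detect and decode. Some Python modules can detect and decode April tags, which is very useful in our case.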

E2E Test Automation Architecture:

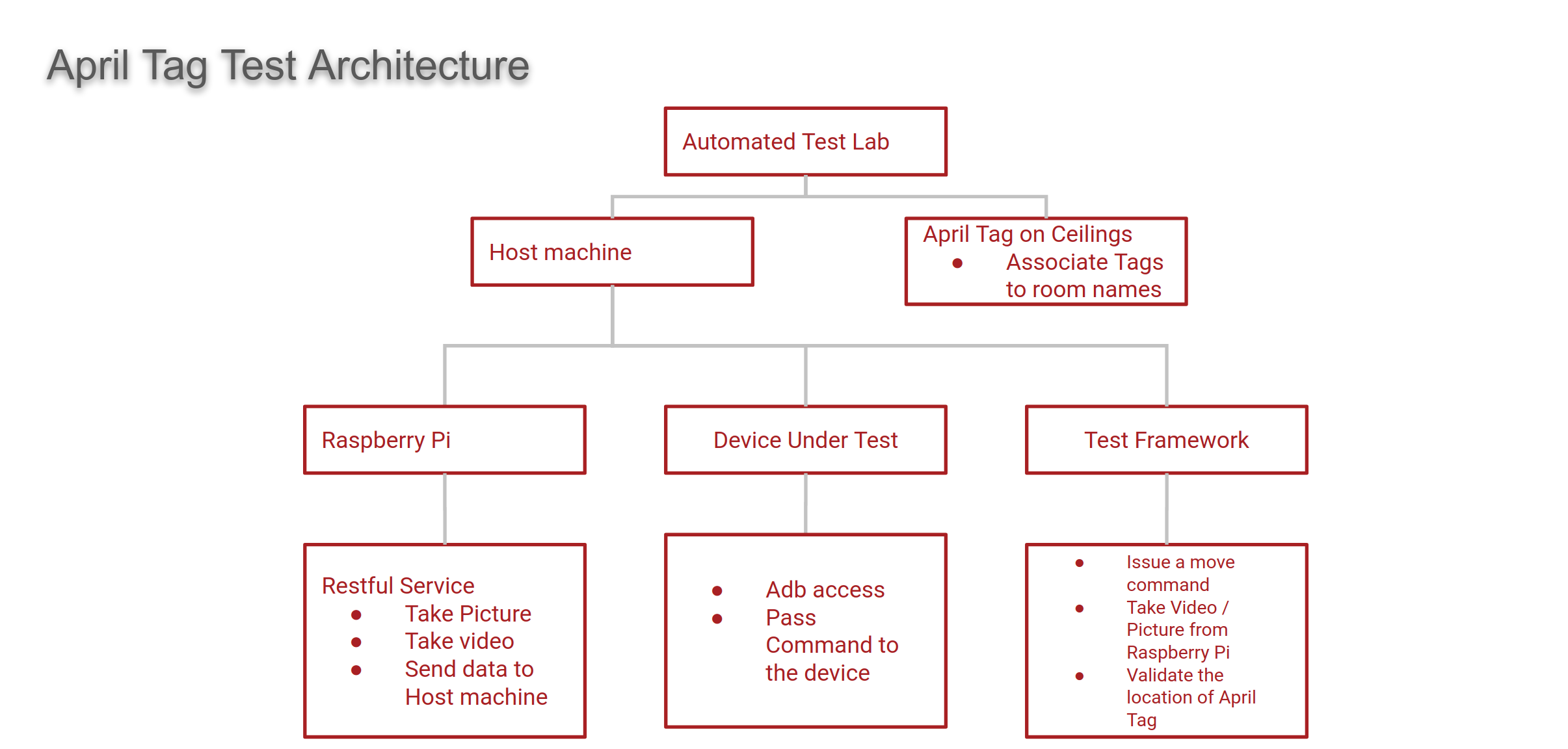

Key items in this architecture are the host machine, the Raspberry Pi, the device under test (DUT), and the April tags.

Host machine: Typically, a Linux/Ubuntu-based machine is used to drive the tests. This is the key element: it runs the test framework and talks to both the device under test and the Raspberry Pi. It is also integral in pulling logs, uploading results, etc.

Raspberry Pi: The Raspberry Pi is mounted on the robot and hosts a RESTful service. Its camera is controlled by this service, which can be invoked from the host machine.
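As a rough illustration of how the host machine might invoke that service, here is a minimal client sketch using only the Python standard library. The address `http://raspberrypi.local:5000`, the `/capture` endpoint, and the `duration_s` parameter are all assumptions made for this example; the actual service may expose a different interface.

```python
import json
import urllib.request

# Hypothetical address of the REST service running on the Raspberry Pi.
PI_BASE_URL = "http://raspberrypi.local:5000"


def build_capture_request(base_url, duration_s):
    """Build a POST request asking the Pi to record for `duration_s` seconds.

    The `/capture` endpoint and its JSON payload are illustrative assumptions.
    """
    payload = json.dumps({"duration_s": duration_s}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/capture",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def start_capture(base_url, duration_s, timeout_s=10):
    """Send the capture request and return the decoded JSON response."""
    request = build_capture_request(base_url, duration_s)
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it possible to unit test the client without a Raspberry Pi on the network.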

April Tags: These tags go on the ceiling. Each tag has an ID, and each ID is mapped to a location or space in the real world. In our case, the IDs are mapped to the Living Room, Kitchen, Bedroom, and other spaces that exist in the home.
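The ID-to-space mapping can be as simple as a lookup table. The sketch below is one possible shape for it; the tag IDs `0`, `1`, and `2` and the helper names are hypothetical, chosen only for illustration.

```python
# Hypothetical tag IDs; the real IDs depend on which tags are printed
# and where they are placed on the ceiling.
TAG_ID_TO_ROOM = {
    0: "Living Room",
    1: "Kitchen",
    2: "Bedroom",
}


def room_for_tag(tag_id):
    """Return the room name for a tag ID, or raise if the tag is unknown."""
    try:
        return TAG_ID_TO_ROOM[tag_id]
    except KeyError:
        raise ValueError(f"Tag ID {tag_id} is not mapped to any room") from None


def assert_robot_in_room(detected_tag_id, expected_room):
    """Fail the test if the detected tag does not belong to the expected room."""
    actual_room = room_for_tag(detected_tag_id)
    assert actual_room == expected_room, (
        f"Expected the robot in {expected_room}, but tag {detected_tag_id} "
        f"places it in {actual_room}"
    )
```

With this in place, a robot that drives to the bedroom while claiming to be in the kitchen fails the check, because the tag above it maps to the wrong room.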

Test Framework: The test framework handles sending commands to the device, pulling logs, decoding the April tag, and calculating the robot position with respect to the captured tag. It also talks to CI systems such as Jenkins for continuous integration.
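One way to approximate the position calculation is the standard pinhole camera model. The sketch below assumes the camera points straight up at the ceiling, the camera has been calibrated (focal length and principal point in pixels are known), the distance from the camera to the ceiling is known, and lens distortion is ignored. The function names and all numbers are illustrative, not taken from an actual framework.

```python
import math


def tag_offset_metres(tag_center_px, principal_point_px, focal_length_px, tag_distance_m):
    """Back-project the tag centre from pixels to metres in the camera frame.

    Pinhole model: X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy, where Z is
    the distance from the camera to the ceiling along the optical axis.
    """
    u, v = tag_center_px
    cx, cy = principal_point_px
    fx, fy = focal_length_px
    x_m = (u - cx) * tag_distance_m / fx
    y_m = (v - cy) * tag_distance_m / fy
    return x_m, y_m


def is_within_tolerance(offset_m, tolerance_m):
    """Return True if the horizontal distance to the tag is within tolerance."""
    return math.hypot(*offset_m) <= tolerance_m


# Example with assumed values: tag centre detected 100 px to the right of the
# image centre, 600 px focal length, ceiling 2.4 m above the camera.
offset = tag_offset_metres((740, 360), (640, 360), (600, 600), 2.4)
stopped_close_enough = is_within_tolerance(offset, tolerance_m=0.5)
```

A test can then pass only when the robot stops within the chosen tolerance of the tag that marks the target location.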

Test Flow

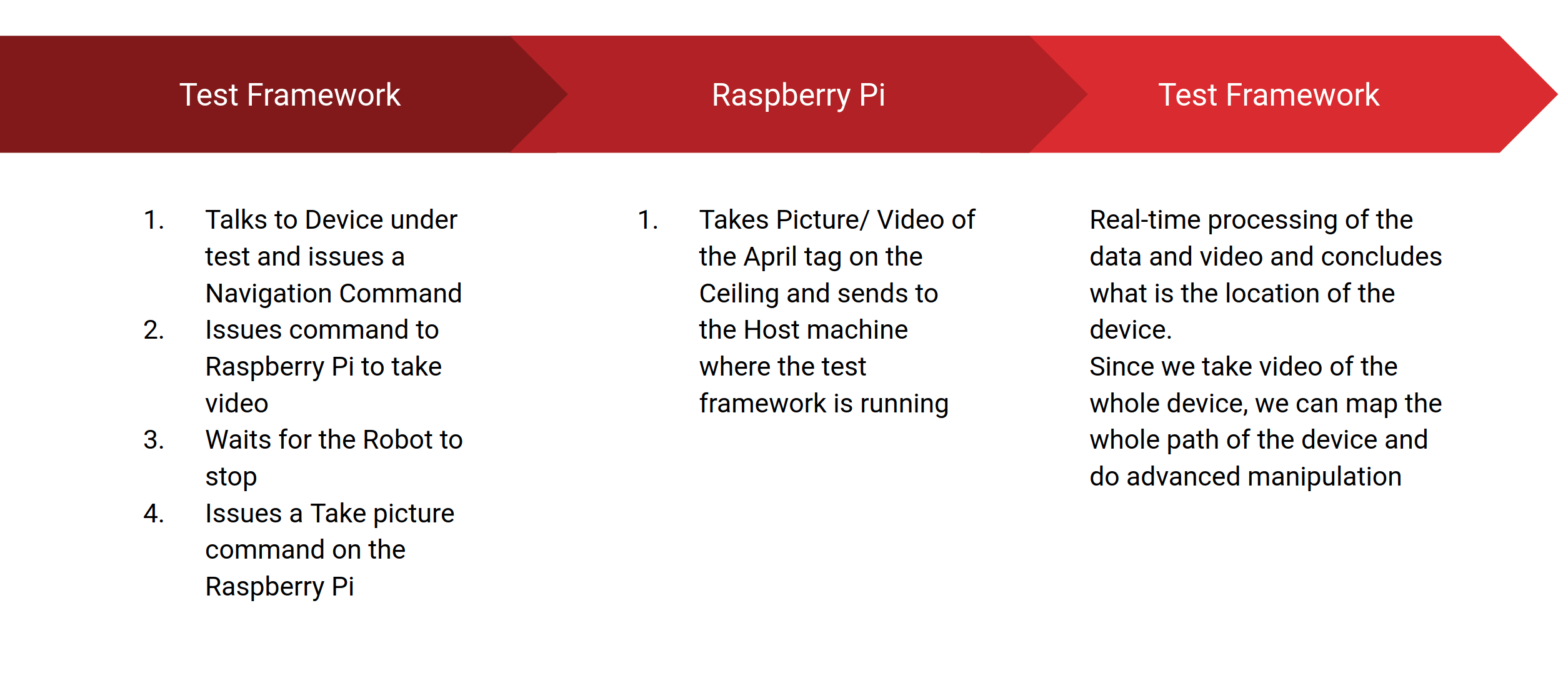

The test framework issues a command to the DUT to start the navigation. While the device is moving, the Raspberry Pi records a continuous video of the April tags. This video can be post-processed to understand what route the robot took. Once the device comes to a complete stop, the Raspberry Pi is instructed to take another video, which is used to find the exact location of the robot in real space.
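The route post-processing step could look something like the sketch below. It assumes that tag detection has already produced one tag ID per video frame (or `None` when no tag was visible); the tag IDs, the mapping, and the function name are hypothetical.

```python
from itertools import groupby

# Hypothetical mapping from tag ID to room name.
TAG_ID_TO_ROOM = {0: "Living Room", 1: "Kitchen", 2: "Bedroom"}


def route_from_frames(tag_ids_per_frame):
    """Collapse per-frame tag detections into the ordered list of rooms visited.

    `tag_ids_per_frame` holds one detected tag ID per video frame, or None
    when no tag was visible in that frame.
    """
    rooms = (
        TAG_ID_TO_ROOM[tag_id]
        for tag_id in tag_ids_per_frame
        if tag_id is not None
    )
    # groupby merges consecutive duplicates, so a long run of frames under
    # the same tag counts as a single visit.
    return [room for room, _ in groupby(rooms)]


# Example: the robot starts in the living room and ends in the kitchen.
frames = [0, 0, None, 0, 1, 1, 1]
route = route_from_frames(frames)
```

A test can compare `route` against the expected path, which also catches an unexpected detour through the bedroom on the way to the kitchen.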

With this architecture, we can automate the real scenario of a robot moving from one space to another by tagging each space with a name and post-processing the captured tags to determine where the robot is in the real world.

In the next topic, I will explain how HTC Vive, a motion-tracking gaming technology, helps us automate the testing of robots.
