Duplicate Objects in Java: Not Just Strings

Did you ever consider that instances of other classes can sometimes be duplicate and waste a considerable amount of memory?

Join the DZone community and get the full member experience.

Join For FreeWhen a Java application consumes a lot of memory, it can be a problem on its own and can lead to increased GC pressure and long GC pauses. In one of my previous articles, I discussed one common source of memory waste in Java: duplicate strings. Two java.lang.String objects a and b are duplicates when a != b && a.equals(b). In other words, there are two (or more) separate strings with the same contents in the JVM memory. This problem occurs very frequently, especially in business applications. In such apps, strings represent a lot of real-world data, and yet, the respective data domains (e.g. customer names, country names, product names) are finite and often small. From my experience, in an unoptimized Java application, duplicate strings typically waste between 5 and 30 percent of the heap. However, did you ever think that instances of other classes, including arrays, can sometimes be duplicate as well, and waste a considerable amount of memory? If not, read on.

Object Duplication Scenarios

Object duplication in memory occurs whenever the number of distinct objects of a certain type is limited but the app keeps creating such objects without trying to cache/reuse the existing ones. Here are just a few concrete examples of object duplication that I've seen:

In Hadoop File System (HDFS) NameServer,

byte[]arrays rather thanStrings are used to store file names. When there are files with the same name in different directories, the respectivebyte[]arrays are duplicates. See this ticket for more details.In some monitoring systems, periodic "events" or "updates" received from the monitored entities (machines, applications, components, etc.) are represented as small objects with two main fields: timestamp and value. When many updates arrive at the same time and all have the same value (for example, 0 or 1 to signal that the monitored entity's health is good), many duplicate objects get created.

The Hive data warehouse once had the following problem. When multiple concurrent queries were executed against the same DB table with a large number of partitions, a separate per-query copy of metadata for each partition was loaded into memory. Partition metadata is represented as a

java.util.Propertiesinstance. Thus, running 50 concurrent queries against 2000 partitions used to create 50 identical copies ofPropertiesfor each of these partitions, or 100,000 such collections in total, that consumed a lot of memory. See this ticket for more details.

These are just a few examples. Other, less-obvious ones include multiple identical byte buffers storing identical messages, multiple (usually small) object sets with same contents representing certain frequently occurring data combinations, and so on.

Getting Rid of Duplicate Objects

As mentioned above, strings are one category of objects that are especially prone to duplication. This problem has been realized by the JDK developers very long ago and addressed with the String.intern() method. The article mentioned above discusses it in detail. In brief, this method uses a global string cache (pool) with effectively weak references. It saves and returns the given string instance if it's not in the cache yet or returns the cached string instance with the same value. Performance and scalability of String.intern() , that once was not great, has substantially improved starting from JDK 7. Thus, when not overused, it is likely a good solution for many applications. However, as discussed in this article, in highly concurrent or performance-critical apps, it may become a bottleneck, and a different, "hand-rolled" interning method may be needed.

Let's consider other object interning implementations. Keep in mind that the following discussion applies only to immutable objects, that is, those that don't change after creation. If object contents can change, eliminating duplicates becomes much harder and requires custom, case-by-case solutions.

The most widely used off-the-shelf interning functionality is provided by the Guava library via the com.google.commmon.collect.Interners class. This class has two key methods that return an interner instance: newStrongInterner() and newWeakInterner(). The weak interner, which eventually releases objects that are not needed anymore (not referenced strongly from anywhere), generally uses less memory and is, therefore, used more frequently. It is implemented as a concurrent hash set with weak keys that resemble the standard JDK ConcurrentHashMap. In many situations, it's a good choice that helps reduce the memory footprint substantially with a small CPU performance overhead, which is often offset by the reduced GC time. However, consider the following situation:

There are 20 million instances of some class C, each taking 32 bytes

10 million of these instances are all identical to each other, and another 10 million are all distinct. In practice, such a sharp division almost never occurs, but still, a situation when about a half of objects represent only a handful of unique values, and in another half most objects have very few or no duplicates, is very common. The simplified division just makes our calculations easier.

In this scenario, when we don't intern any C instances, they use 32 * 20M = 640M bytes. But what happens if we invoke the Guava intern() for each of them?

The first 10 million objects will be successfully reduced to just one instance of C, taking a negligible amount of memory. However, for each of the remaining 10 million objects, there will be no savings, since each of them is unique. Still, the Guava weak interner will maintain an internal table with 10 million entries to accommodate each of these objects. This table will use one instance of com.google.common.collect.MapMakerInternalMap$WeakKeyDummyValueEntry class per each interned object, plus one slot in the internal array referencing each entry. The size of a WeakKeyDummyValueEntry is 40 bytes, so the interner will need 40+4 = 44 bytes per interned object. 44 * 10M = 440M. Add to that 32 * 10M = 320M that the unique instances of C still take, and the total memory footprint now is 760M bytes! In other words, we use more memory than before, not less.

This scenario may be a bit extreme, but in practice, the general rule holds: if in a given group of objects the percentage of unique objects is high, then memory savings achieved by a traditional interner, that stores a reference to each and every object given to it, may be small, if not negative. Can we do better?

Fixed-Size Array, Lock Free (FALF) Interner

It turns out that if we don't need to have a single copy of each unique object, but just want to save memory, and possibly still have a few duplicate objects — in other words, if we agree to deduplicate objects "opportunistically" — there is a solution that's surprisingly simple and efficient. I haven't seen it in the literature, so I named it "Fixed-Size Array, Lock Free (FALF) Interner."

This interner is implemented as a small, fixed-size, open-hashmap-based object cache. When there is a cache miss, a cached object in the given slot is always replaced with a new object. There is no locking and no synchronization, and thus, no associated overhead. Basically, this cache is based on the idea that an object with value X that has many copies has a higher chance of staying in the cache for long enough to guarantee several cache hits for itself before a miss evicts X and replaces it with an object with a different value Y. Here is one possible implementation of this interner:

/** Fixed size array, lock free object interner */

static class FALFInterner<T> {

static final int MAXIMUM_CAPACITY = 1 << 30;

private Object[] cache;

FALFInterner(int expectedCapacity) {

cache = new Object[tableSizeFor(expectedCapacity)];

}

T intern(T obj) {

int slot = hash(obj) & (cache.length - 1);

T cachedObj = (T) cache[slot];

if (cachedObj != null && cachedObj.equals(obj)) return cachedObj;

else {

cache[slot] = obj;

return obj;

}

}

/** Copied from java.util.HashMap */

static int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}

/**

* Returns a power of two size for the given target capacity.

* Copied from java.util.HashMap.

*/

static int tableSizeFor(int cap) {

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}

}To compare the performance of the FALF interner with the Guava weak interner, I wrote a simple multithreaded benchmark. The code generates and interns strings that are derived from random numbers obeying a Gaussian distribution. That is, strings with some values are generated much more frequently than others, and will, therefore, result in more duplicates. In this benchmark, the FALF interner runs faster than the Guava interner by about 17 percent. However, that's not all. When I measured the memory footprint with the jmap -histo:live command after the benchmark finished running, but before it exited, it turned out that with the FALF interner, the used heap size is nearly 30 times smaller than with the Guava interner! That's the difference in memory footprint of the fixed-size small cache versus a weak hashmap with an entry for each unique object ever seen.

To be fair, the FALF interner would typically require more tuning than the traditional, one-size-fits-all interners. For one thing, since the cache size is fixed, you need to choose it carefully. On the one hand, to minimize misses, this size should be big enough — ideally equal to the number of unique objects of the type that you intern. On the other hand, our goal is to minimize used memory, so in practice, you will likely choose a (much) smaller size that's roughly equal to the number of objects with a high number of duplicates.

Another important consideration is a choice of hash function for interned objects. In a small, fixed-size cache, it is very important to distribute objects across slots as uniformly as possible to avoid the situation when many slots are used infrequently, whereas there is a small group in which each slot is contended by several object values with many copies. This contention would result in many duplicates missed by the cache, and thus, a bigger memory footprint. When this happens for instances of a class with a very simple hashCode() method (e.g. with code similar to the one in java.lang.String class), it may signal that this hash function implementation is inadequate. A more advanced hash function, like one of those provided by the com.google.common.hash.Hashing class, can dramatically improve the efficiency of the FALF interner.

Detection of Duplicate Objects

So far, we haven's discussed how a developer can determine which objects in their app have many duplicates and thus need to be interned. For big applications, this can be non-trivial. Even if you can guess which objects are likely duplicate, it can be really hard to estimate their exact memory impact. From experience, the best way to solve this is to generate a heap dump of the app and then use a tool to analyze it.

A heap dump is essentially a full snapshot of the running JVM's heap. It can be either taken at an arbitrary moment by invoking the jmap utility, or the JVM can be configured to produce it automatically if it fails with OutOfMemoryError. If you Google "JVM heap dump," you will immediately see a bunch of relevant articles on this subject.

A heap dump is a binary file of about the size of your JVM's heap, so it can only be read and analyzed with special tools. There is a number of such tools available, both open source and commercial. The most popular open-source tool is Eclipse MAT; there is also VisualVM and some less-powerful, lesser-known tools. The commercial tools include the general-purpose Java profilers: JProfiler and YourKit, as well as JXRay - the tool built specifically for heap dump analysis.

Unlike most other tools, JXRay analyzes a heap dump right away for a large number of common problems, including duplicate strings and other objects. Currently, objects comparison is shallow. That is, two objects (for example ArrayLists) are considered duplicate only when they reference exactly the same group of objects x0, x1, x2, ... in the same order. To put it differently, for two objects a and b to be considered equal, all pointers to other objects in a and b should be equal, bit by bit.

JXRay runs through the given heap dump once and generates a report with all the collected information in the HTML format. The advantage of this approach is that you can view the results of analysis anywhere, at any time later, and share it with others easily. It also means that you can run the tool on any machine, including big and powerful yet "headless" machines in a data center.

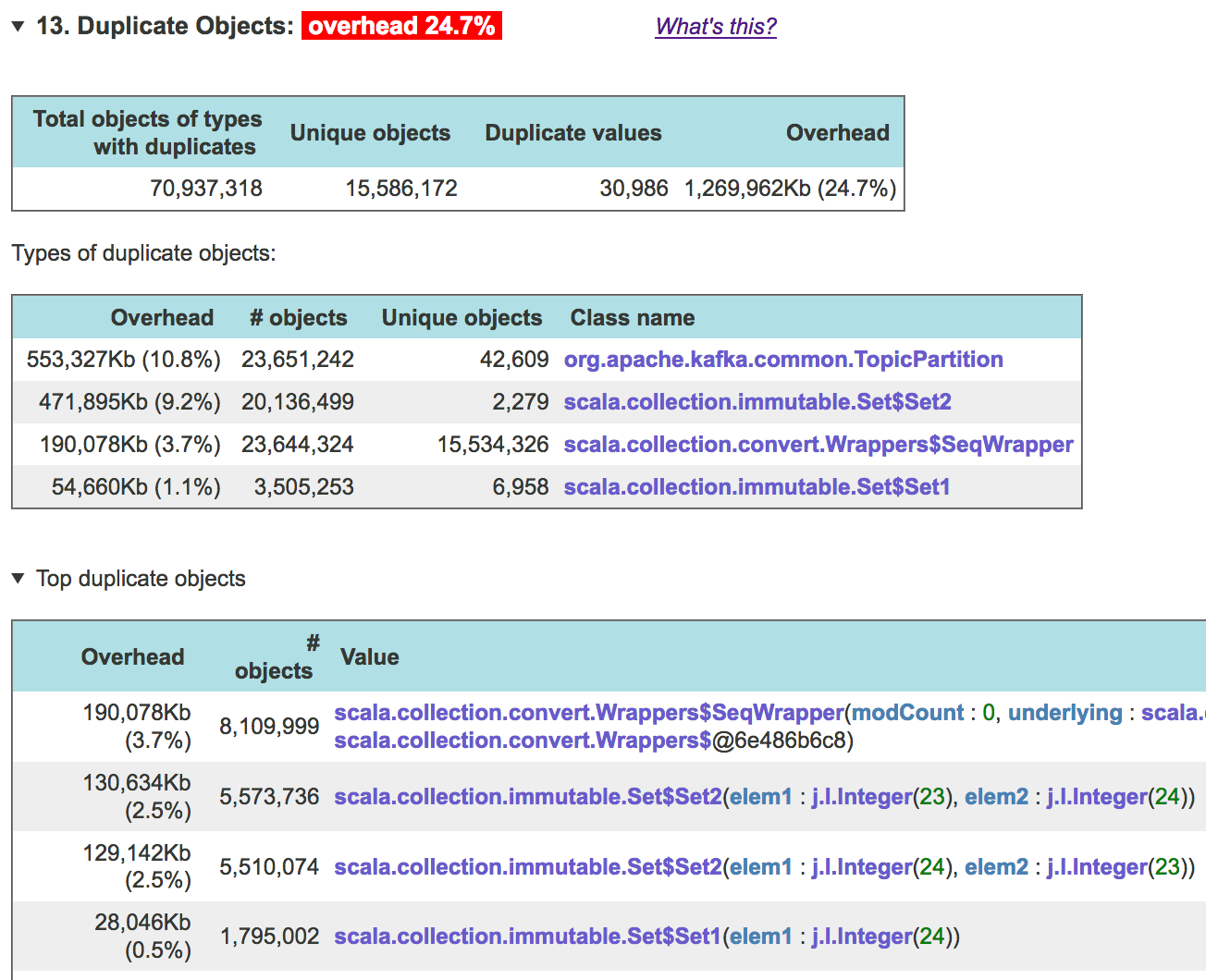

Once you get a report from JXRay, open it in your favorite browser and expand the relevant section. You may see something like this:

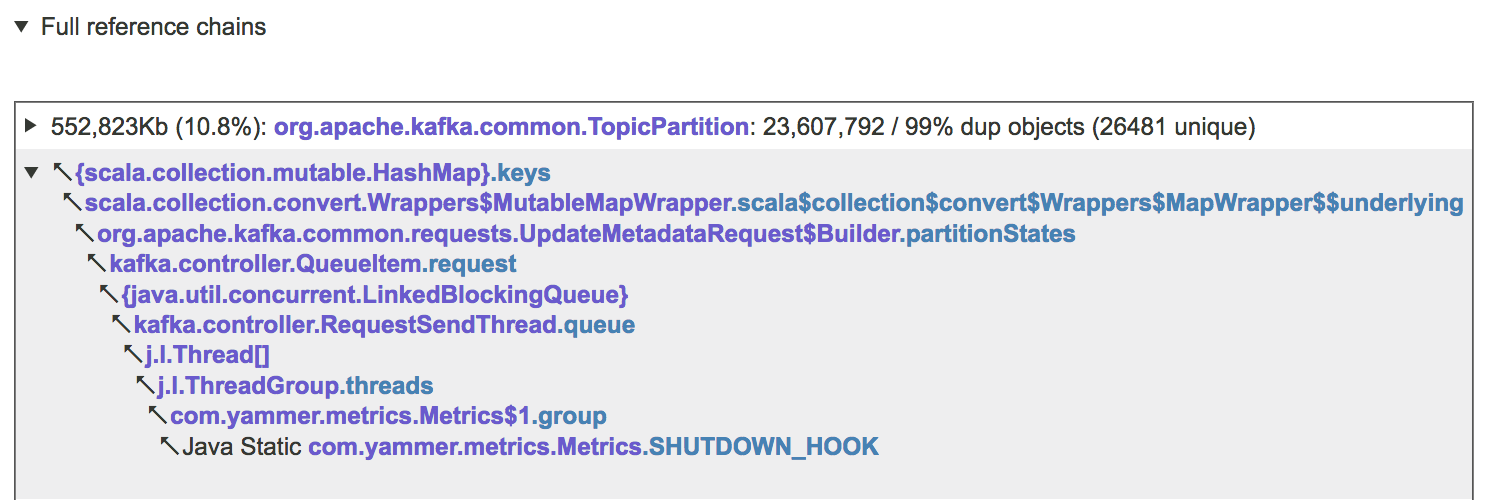

So, in this dump, 24.7 percent of the used heap is wasted by duplicate non-array, non-collection objects! The table above lists all classes whose instances contribute most significantly to this overhead. To see where these objects come from (what objects reference them, all the way up to GC roots), scroll down to the "Expensive data fields" or "Full reference chain" subsection of the report, expand it, and click on the relevant line in the table. Here is an example for one of the above classes, TopicPartition:

From here, we can get a good idea of what data structures manage problematic objects.

To summarize, duplicate objects, i.e. multiple instances of the same class with identical contents, can become a burden in a Java app. They may waste a lot of memory and/or increase the GC pressure. The best way to measure the impact of such objects is to obtain a heap dump and use a tool like JXRay to analyze it. When duplicate objects are immutable, you can use an off-the-shelf or custom interner implementation to reduce the number of such objects, and thus their memory impact. Mutable duplicate objects are much more difficult to get rid of, and may only be eliminated with case-by-case, customized solutions.

Opinions expressed by DZone contributors are their own.

Comments