Distributed Application Development Through Microservices

A microservice architecture design pattern has advantages over a monolithic design pattern. The idea is to divide the single application into interconnected applications.


The microservice architecture design pattern has many advantages over a monolithic design pattern. Instead of creating one large application, the idea is to subdivide it into a set of separate, interconnected applications. Each microservice has its own layered architecture, similar to a monolithic application.

Here are a few advantages that microservices make easy to achieve, along with the patterns that support them.

Scalability. There are three common ways to scale an application. X-axis scaling clones the application horizontally, Y-axis scaling splits it by functionality, and Z-axis scaling partitions (shards) the data. When Y-axis scaling is applied to a monolithic application, the application is broken into many smaller units aligned with business functions, which matches the characteristics of microservices.

Pattern: each microservice runs in its own isolated instance or container. Service-level load balancing can then distribute requests across multiple instances of the same service.
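Z-axis scaling can be illustrated with a small router that sends each request to one of several data partitions based on a key. The sketch below is a minimal example under assumed names: `ShardRouter`, the use of a user ID as the partition key, and the simple hash-modulo scheme are all hypothetical. Real systems often prefer consistent hashing, because with plain modulo most keys move to a different shard when the shard count changes.

```java
import java.util.List;

// Hypothetical Z-axis router: every request carrying the same key lands on the same shard.
final class ShardRouter {
    private final int shardCount;

    ShardRouter(int shardCount) {
        if (shardCount <= 0) {
            throw new IllegalArgumentException("shardCount must be positive");
        }
        this.shardCount = shardCount;
    }

    // Math.floorMod keeps the result in [0, shardCount) even when hashCode() is negative.
    int shardFor(String partitionKey) {
        return Math.floorMod(partitionKey.hashCode(), shardCount);
    }

    public static void main(String[] args) {
        ShardRouter router = new ShardRouter(4);
        for (String user : List.of("user-1", "user-2", "user-3")) {  // hypothetical keys
            System.out.println(user + " -> shard " + router.shardFor(user));
        }
    }
}
```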

Availability. The same microservice can be deployed on multiple instances, which makes the overall system highly available.

Pattern: service-level load balancing can be used to achieve high availability, the circuit breaker pattern can be used to achieve fault tolerance, and service configuration and discovery let services find new services to communicate with.
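Service-level load balancing, in its simplest form, rotates through the known instances of one service. The sketch below is a minimal round-robin chooser; the class name and the instance addresses are hypothetical, and production client-side balancers such as Ribbon layer health checks and other selection rules on top of this idea.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Minimal round-robin balancer over the instances of a single service (hypothetical class).
final class RoundRobinBalancer {
    private final List<String> instances;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobinBalancer(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("at least one instance is required");
        }
        this.instances = List.copyOf(instances);
    }

    // getAndIncrement is atomic, so concurrent callers still rotate through the instances;
    // floorMod keeps the index valid after the counter overflows.
    String choose() {
        int index = Math.floorMod(next.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    public static void main(String[] args) {
        // Hypothetical: two containers of the same service on one host.
        RoundRobinBalancer balancer = new RoundRobinBalancer(
                List.of("10.0.0.5:8081", "10.0.0.5:8082"));
        for (int i = 0; i < 4; i++) {
            System.out.println(balancer.choose());
        }
    }
}
```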

Continuous deployment. Every microservice is independent of the others, so each service can be deployed on its own, which enables faster and more continuous deployment.

Loose coupling. Microservices provide several ways to achieve loose coupling. Every microservice should have its own layered architecture at the service level and its own database as the persistence layer, running in its own isolated environment.

Technology diversity. Because each microservice is isolated, a mix of different technologies can be used to implement the various microservices that together make up the overall application.

Cost-effectiveness. Service instances can be sized according to how heavily each service is used. Low-cost commodity instances can be used for less critical services, and higher-capacity instances can be used for business-critical services.

Performance. The technology diversity described above also has a direct impact on performance. For example, services that make many blocking I/O calls can be implemented in a single-threaded, event-driven technology stack, while CPU-intensive services can be implemented in a multithreaded technology stack.

Microservices also have a few drawbacks compared to a monolithic architecture.

For one, developing and managing a distributed application is considerably harder than developing and managing a standalone application. Microservices need an interprocess communication (IPC) mechanism to talk to each other, which adds some performance overhead that depends on network latency and bandwidth.

The following functionality has to be implemented for microservices to work effectively:

- To achieve IPC, each microservice should invoke the others using REST calls or RPC.

- A single microservice may communicate with one other microservice or with several. A service might be unavailable, or might not respond in a timely manner, because of heavy load or some other error. To handle this situation, there should be a partial-failure or fallback mechanism.

- Deploying a microservices-based application is more complex than deploying a monolithic application, because it consists of many services and each service may run in a different container or on a different instance. There should be a service registry and discovery mechanism to register new services and to discover the services a client needs to communicate with.

- A client microservice might invoke multiple remote microservices for a single piece of functionality, which can lead to many REST or RPC calls across the network. There should be a service gateway that accepts one call from the client microservice, fans it out internally to the various services (local to the service gateway), aggregates their results, and returns the combined result to the client microservice.

- When the same microservice is hosted in multiple containers on the same instance, there should be load balancing at the service level to distribute the load and to provide failover.

- Distributed applications also need a central logging framework so that log data from all services is collected in one place.
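The partial-failure requirement in the list above can be sketched without any framework: give each remote call a deadline and substitute a default value when the call fails or times out. `FallbackCall`, the simulated slow service, and the 200 ms budget are hypothetical; `completeOnTimeout` needs Java 9 or later.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

final class FallbackCall {
    // Runs the (hypothetical) remote call asynchronously and returns `fallback`
    // if the call throws or does not finish within `timeoutMillis`.
    static <T> T callWithFallback(Supplier<T> remoteCall, T fallback, long timeoutMillis) {
        return CompletableFuture.supplyAsync(remoteCall)
                .completeOnTimeout(fallback, timeoutMillis, TimeUnit.MILLISECONDS)
                .exceptionally(error -> fallback)
                .join();
    }

    public static void main(String[] args) {
        // Hypothetical overloaded service that takes a full second to answer.
        Supplier<String> slowRemoteCall = () -> {
            try {
                Thread.sleep(1_000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "real profile";
        };
        System.out.println(callWithFallback(slowRemoteCall, "default profile", 200));
    }
}
```

The timed-out call keeps running in the background; this sketch only stops waiting for it.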

Building all of the above features from scratch is complex and time-consuming, and developers may spend a lot of time developing and testing the basic framework setup. If all of these pieces are available ready-made, you only need to focus on the business logic.

The Spring Cloud framework provides tools to quickly build some of the common patterns in distributed systems, such as configuration management, service discovery, circuit breakers, distributed sessions, a service gateway, a REST client, and a service-level load balancer. A Spring Cloud project can be set up by adding a few Maven dependencies, or generated with Spring Initializr.

Many of the Spring Cloud components that are critical for microservice deployments came from Netflix Open Source Software (Netflix OSS).

Spring Cloud is built on top of Spring Boot, which provides an embedded Tomcat server and requires minimal configuration code.

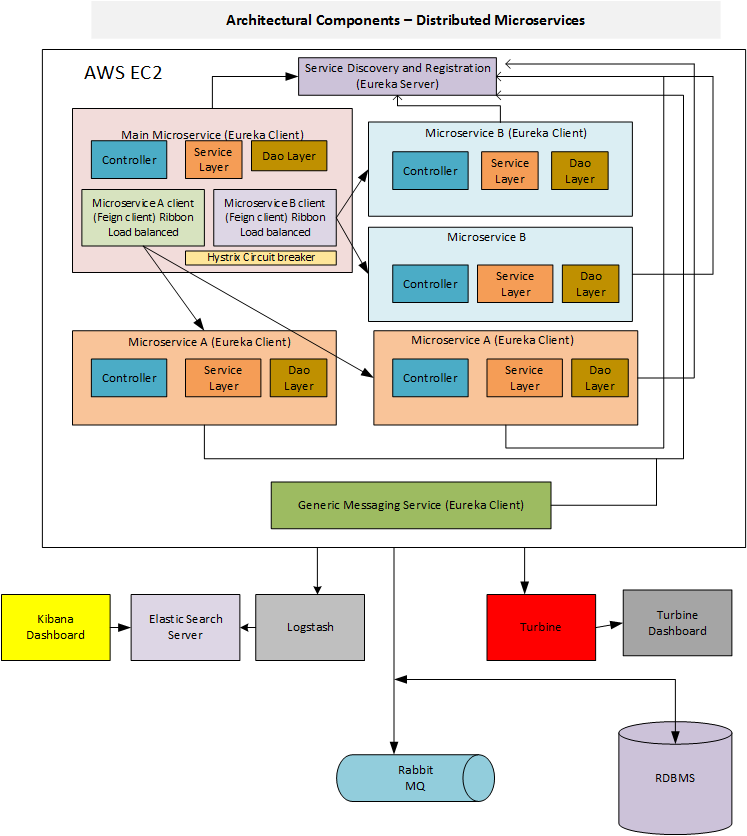

Here is the architectural diagram for the distributed application using microservices on the AWS cloud.

In the diagram above, there are three microservices: the main microservice communicates with Microservice A and Microservice B using a REST client. Microservice A and Microservice B are each launched in two containers within the same instance. The main microservice, which is exposed to clients, interacts with any container of Microservice A or Microservice B through the service client, which acts as a load balancer. Load balancing therefore happens not only at the instance level but also at the service level within the same instance; this is called service-level load balancing. When making a request to a service, the client service obtains the address of a service instance by querying the Eureka service registry.
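The register-then-look-up flow can be modelled with an in-memory map from service ID to instance addresses. This is a sketch of the idea, not of Eureka's implementation: `InMemoryRegistry` and its methods are hypothetical. Like Eureka, it treats registrations as leases that must be renewed by heartbeats; the 90-second lease in the demo matches Eureka's default lease expiration, but everything else is simplified, and the current time is passed in explicitly to keep the example deterministic.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

// Hypothetical in-memory registry: service ID -> (instance address -> last heartbeat time).
final class InMemoryRegistry {
    private final Map<String, Map<String, Long>> services = new ConcurrentHashMap<>();
    private final long leaseMillis;

    InMemoryRegistry(long leaseMillis) {
        this.leaseMillis = leaseMillis;
    }

    // Registering and renewing are the same operation here: record the latest heartbeat.
    void heartbeat(String serviceId, String address, long nowMillis) {
        services.computeIfAbsent(serviceId, id -> new ConcurrentHashMap<>())
                .put(address, nowMillis);
    }

    // Discovery: return only instances whose lease has not expired, in a stable order.
    List<String> lookup(String serviceId, long nowMillis) {
        return services.getOrDefault(serviceId, Map.of()).entrySet().stream()
                .filter(e -> nowMillis - e.getValue() <= leaseMillis)
                .map(Map.Entry::getKey)
                .sorted()
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        InMemoryRegistry registry = new InMemoryRegistry(90_000);
        registry.heartbeat("serviceA", "10.0.0.5:8081", 0);  // hypothetical addresses
        registry.heartbeat("serviceA", "10.0.0.5:8082", 0);
        System.out.println(registry.lookup("serviceA", 30_000));
    }
}
```

A client would combine `lookup` with a load-balancing rule to pick one address per request.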

Spring Cloud supports Eureka as a service registry out of the box, and a Eureka registry server can be enabled by adding the @EnableEurekaServer annotation:

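```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.netflix.eureka.server.EnableEurekaServer;

@SpringBootApplication
@EnableEurekaServer  // turns this Spring Boot application into a Eureka registry server
public class EurekaServer {

    public static void main(String[] args) {
        // Look for registration-server.yml (or .properties) instead of application.yml
        System.setProperty("spring.config.name", "registration-server");
        SpringApplication.run(EurekaServer.class, args);
    }
}
```

And the configuration for the registry service. Because spring.config.name is set to registration-server, this file is named registration-server.yml rather than application.yml: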

```yaml
eureka:
  instance:
    hostname: localhost
  client:  # Not a client, don't register with yourself
    registerWithEureka: false
    fetchRegistry: false
    serviceUrl:
      defaultZone: http://${eureka.instance.hostname}:${server.port}/eureka/

server:
  port: 1111  # HTTP (Tomcat) port
```

Any microservice can register itself with the Eureka server by adding the @EnableDiscoveryClient annotation and putting the Eureka registry address and port in its application.yml or application.properties file:

```yaml
eureka:
  client:
    serviceUrl:
      defaultZone: http://localhost:1111/eureka/
    fetchRegistry: true
```

Spring Cloud provides several ways to perform interprocess communication, including Feign clients and RestTemplate. A Feign client is neat, clean, and easy to implement, and Feign clients are enabled by adding the @EnableFeignClients annotation.

The client application defines an interface annotated with @FeignClient, giving the service ID of the target service, and declares methods on it annotated with @RequestMapping:

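```java
import org.springframework.cloud.openfeign.FeignClient;  // org.springframework.cloud.netflix.feign in older releases
import org.springframework.http.MediaType;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;

// "serviceA" is the service ID under which Microservice A is registered in Eureka
@FeignClient(value = "serviceA")
public interface ServiceClientA {

    @RequestMapping(value = "/user/{userId}", method = RequestMethod.GET,
            produces = { MediaType.APPLICATION_JSON_VALUE })
    UserProfile getUserProfile(@PathVariable("userId") Integer userId);
}
```

These interface methods can be called from a new class, ServiceClientBean.java: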

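```java
import com.netflix.hystrix.contrib.javanica.annotation.HystrixCommand;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

@Component
public class ServiceClientBean {

    @Autowired
    private ServiceClientA serviceClientA;

    // Hystrix wraps this call and invokes defaultMethod on failure or timeout.
    // Requires @EnableCircuitBreaker (or @EnableHystrix) on a configuration class.
    @HystrixCommand(fallbackMethod = "defaultMethod")
    public UserProfile getUserProfile(Integer userId) {
        return serviceClientA.getUserProfile(userId);
    }

    // The fallback must take the same parameters as the command method.
    public UserProfile defaultMethod(Integer userId) {
        return new UserProfile();  // empty default profile
    }
}
```

Interfaces annotated with @FeignClient are registered as Spring beans when @EnableFeignClients scans for them; the basePackageClasses attribute tells it which packages to scan: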

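```java
import org.springframework.cloud.openfeign.EnableFeignClients;

@EnableFeignClients(basePackageClasses = ServiceClientA.class)  // scan ServiceClientA's package
```

A developer can share the same interface definition between the client and the server, but doing so tightly couples the client to the server.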
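A Feign client needs only an interface because the framework generates the implementation at runtime. The sketch below imitates that idea with the JDK's `java.lang.reflect.Proxy`. The `@Get` annotation, the `UserApi` interface, and the `transport` function are hypothetical stand-ins; real Feign also handles HTTP methods, headers, encoding, and decoding JSON into types such as `UserProfile`.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.function.Function;
import java.util.regex.Matcher;

final class DeclarativeClients {
    // Generates an implementation of `api` at runtime: each call on an @Get method fills
    // the path template with the arguments, in order, and hands the URL to `transport`.
    static <T> T create(Class<T> api, String baseUrl, Function<String, String> transport) {
        Object proxy = Proxy.newProxyInstance(api.getClassLoader(), new Class<?>[] {api},
                (self, method, args) -> {
                    Get get = method.getAnnotation(Get.class);
                    if (get == null) {
                        throw new UnsupportedOperationException("no @Get on " + method.getName());
                    }
                    String path = get.value();
                    for (Object arg : args == null ? new Object[0] : args) {
                        // Replace the next {placeholder}; quoteReplacement keeps '$' and '\' literal.
                        path = path.replaceFirst("\\{[^}]+\\}",
                                Matcher.quoteReplacement(String.valueOf(arg)));
                    }
                    return transport.apply(baseUrl + path);
                });
        return api.cast(proxy);
    }

    public static void main(String[] args) {
        // Hypothetical transport that echoes the URL instead of sending an HTTP request.
        UserApi client = create(UserApi.class, "http://serviceA", url -> "GET " + url);
        System.out.println(client.getUserProfile(42));  // prints "GET http://serviceA/user/42"
    }
}

// Hypothetical stand-in for @RequestMapping(method = GET): a path template with {placeholders}.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Get {
    String value();
}

// Plays the role of ServiceClientA, but returns the raw response body instead of a UserProfile.
interface UserApi {
    @Get("/user/{userId}")
    String getUserProfile(Integer userId);
}
```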

Feign clients support load balancing automatically through Ribbon, a client-side load balancer that gives you a lot of control over HTTP and TCP requests. A Ribbon client can be configured with external properties of the form <client>.ribbon.*, where <client> is the client's name (for example, serviceA.ribbon.*).
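Besides choosing an instance, a client-side load balancer can retry a failed call on another instance; Ribbon exposes this through properties such as `MaxAutoRetriesNextServer`. The plain-Java sketch below shows only the failover idea. `FailoverClient` and the instance names are hypothetical, and real retry policies usually also cap the total time spent and avoid retrying non-idempotent requests.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

final class FailoverClient {
    // Tries each instance in turn, starting at index `start`, and returns the first success.
    static <R> R callWithFailover(List<String> instances, int start, Function<String, R> call) {
        List<RuntimeException> failures = new ArrayList<>();
        for (int i = 0; i < instances.size(); i++) {
            String instance = instances.get(Math.floorMod(start + i, instances.size()));
            try {
                return call.apply(instance);
            } catch (RuntimeException e) {
                failures.add(e);  // remember why this instance failed, then move on
            }
        }
        IllegalStateException all = new IllegalStateException("all instances failed");
        failures.forEach(all::addSuppressed);
        throw all;
    }

    public static void main(String[] args) {
        List<String> instances = List.of("containerA1", "containerA2");  // hypothetical names
        String result = callWithFailover(instances, 0, instance -> {
            if (instance.equals("containerA1")) {
                throw new IllegalStateException(instance + " is down");
            }
            return "response from " + instance;
        });
        System.out.println(result);
    }
}
```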

In ServiceClientBean.java, we added a @HystrixCommand annotation to handle partial failure. It tells Spring Cloud that this method is prone to failure, and Spring Cloud wraps such methods in a circuit breaker that provides fault tolerance and latency tolerance. A Hystrix command is typically paired with a fallback method: when the call fails, Hystrix automatically invokes the named fallback method and diverts traffic to it.

If Microservice A cannot respond in a timely manner, or is down, Hystrix calls the fallback method to get a default response.
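The circuit breaker behind @HystrixCommand can be approximated as a small state machine: after repeated failures the breaker opens and calls go straight to the fallback, and after a cool-down period a trial call is allowed through. The sketch below is a simplification with assumed names and thresholds. In particular, Hystrix trips on an error percentage over a rolling time window rather than on a count of consecutive failures, and the explicit `nowMillis` parameter exists only to make the example deterministic.

```java
import java.util.function.Supplier;

// Simplified breaker: CLOSED -> OPEN after `failureThreshold` consecutive failures,
// OPEN -> HALF_OPEN once `openMillis` have passed, HALF_OPEN -> CLOSED on the next success.
final class CircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;
    private final long openMillis;
    private State state = State.CLOSED;
    private int consecutiveFailures;
    private long openedAt;

    CircuitBreaker(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    // synchronized for simplicity; this serializes calls, which a real breaker would avoid.
    synchronized <T> T call(Supplier<T> action, Supplier<T> fallback, long nowMillis) {
        if (state == State.OPEN) {
            if (nowMillis - openedAt < openMillis) {
                return fallback.get();  // short-circuit: do not touch the failing service
            }
            state = State.HALF_OPEN;  // cool-down over: allow one trial call
        }
        try {
            T result = action.get();
            state = State.CLOSED;
            consecutiveFailures = 0;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;
                openedAt = nowMillis;
            }
            return fallback.get();
        }
    }

    synchronized State state() {
        return state;
    }

    public static void main(String[] args) {
        CircuitBreaker breaker = new CircuitBreaker(2, 5_000);
        Supplier<String> failing = () -> { throw new IllegalStateException("serviceA down"); };
        for (long t = 0; t < 3; t++) {
            System.out.println(breaker.call(failing, () -> "default profile", t) + " " + breaker.state());
        }
    }
}
```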

Hystrix metrics can be monitored by adding the Hystrix Dashboard application.

There is one more component, the Zuul proxy, which acts as a service gateway. It is not shown in the architecture diagram above. The service gateway internally calls multiple microservices, aggregates their results, and sends the combined result back to the client service.

The Zuul proxy internally uses the Eureka server for service discovery and Ribbon for load balancing between service instances.
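The aggregation step attributed to the service gateway can be sketched with `CompletableFuture`: the gateway starts all backend calls concurrently, waits for them, and merges the results into a single response. This does not use Zuul's API; `AggregatingGateway` and the two backend suppliers are hypothetical stand-ins for REST calls to Microservice A and Microservice B.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Supplier;

final class AggregatingGateway {
    // Starts every backend call concurrently and merges the results into one response map.
    // If any backend fails, join() throws a CompletionException wrapping that failure.
    static Map<String, String> aggregate(Map<String, Supplier<String>> backends) {
        Map<String, CompletableFuture<String>> pending = new LinkedHashMap<>();
        backends.forEach((name, call) -> pending.put(name, CompletableFuture.supplyAsync(call)));

        CompletableFuture.allOf(pending.values().toArray(new CompletableFuture<?>[0])).join();

        Map<String, String> response = new LinkedHashMap<>();
        pending.forEach((name, future) -> response.put(name, future.join()));
        return response;
    }

    public static void main(String[] args) {
        Map<String, Supplier<String>> backends = new LinkedHashMap<>();
        backends.put("profile", () -> "profile from Microservice A");  // hypothetical call
        backends.put("orders", () -> "orders from Microservice B");    // hypothetical call
        System.out.println(aggregate(backends));
    }
}
```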

A distributed application needs a central logging framework, which can be built with the ELK stack (Elasticsearch, Logstash, and Kibana).
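Centralized logging works best when every service writes one structured line per event with the same fields, so that entries from different services can be indexed and correlated. The sketch below builds a JSON log line by hand; the field names, including `traceId`, are assumptions, and a real service would normally use its logging library's JSON encoder instead of string formatting.

```java
import java.time.Instant;

final class JsonLogLine {
    // Hypothetical formatter: one JSON object per line with a fixed set of fields.
    static String format(Instant time, String service, String traceId, String message) {
        return String.format(
                "{\"timestamp\":\"%s\",\"service\":\"%s\",\"traceId\":\"%s\",\"message\":\"%s\"}",
                time, escape(service), escape(traceId), escape(message));
    }

    // Escapes the characters that would otherwise break a JSON string value.
    private static String escape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': out.append("\\\""); break;
                case '\\': out.append("\\\\"); break;
                case '\n': out.append("\\n"); break;
                default:
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c));  // other control characters
                    } else {
                        out.append(c);
                    }
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(format(Instant.now(), "serviceA", "trace-123", "fetched user 42"));
    }
}
```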


Opinions expressed by DZone contributors are their own.
