Develop XR With Oracle, Ep. 5: Healthcare, Vision AI, Training/Collaboration, and Messaging

In this fifth article of the series, we focus on XR applications in healthcare, vision AI, training and collaboration, and messaging.


This is the fifth piece in a series on developing XR applications and experiences using Oracle. It focuses on XR applications in healthcare, vision AI, training and collaboration, and messaging, and also touches on other topics such as multi-platform development. Find the links to the first four articles below:

Develop XR With Oracle, Ep 1: Spatial, AI/ML, Kubernetes, and OpenTelemetry

Develop XR With Oracle, Ep 2: Property Graphs and Data Visualization

Develop XR With Oracle, Ep 3: Computer Vision AI, and ML

Develop XR With Oracle, Ep 4: Digital Twins and Observability

As with the previous posts, I will specifically show applications developed with Oracle database and cloud technologies, running on HoloLens 2, Oculus, iPhone, and PC, and written using the Unity platform and OpenXR (for multi-platform support), Apple Swift, and WebXR.

Throughout the blog, I will reference the corresponding demo video below:

Extended Reality (XR) and Healthcare

I will refer the reader to the first article in this series (again, the link is above) for an overview of XR. I will not go in-depth into the vast array of technology involved in the healthcare sector, but will instead focus on the XR-enablement of these topics and the use of Oracle technology to this end, particularly as Oracle has increased its focus on this area via the Cerner acquisition and other endeavors. It is well known that telehealth has grown tremendously since the pandemic: usage peaked at 78 times its rate in the month just before the pandemic and, even now, is leveling off at 38 times that rate. These and other numbers and their impact are well documented in numerous publications, such as this McKinsey report and the VR/AR Association's Healthcare Forum, which will be referenced in this article. One only needs to have heard of Johns Hopkins' use of XR for live surgeries back in 2021 to understand the extent to which XR will aid this industry, so again, I will not delve too deeply into attempting to prove this, but will proceed to give some examples and ideas involving Oracle database and cloud technologies.

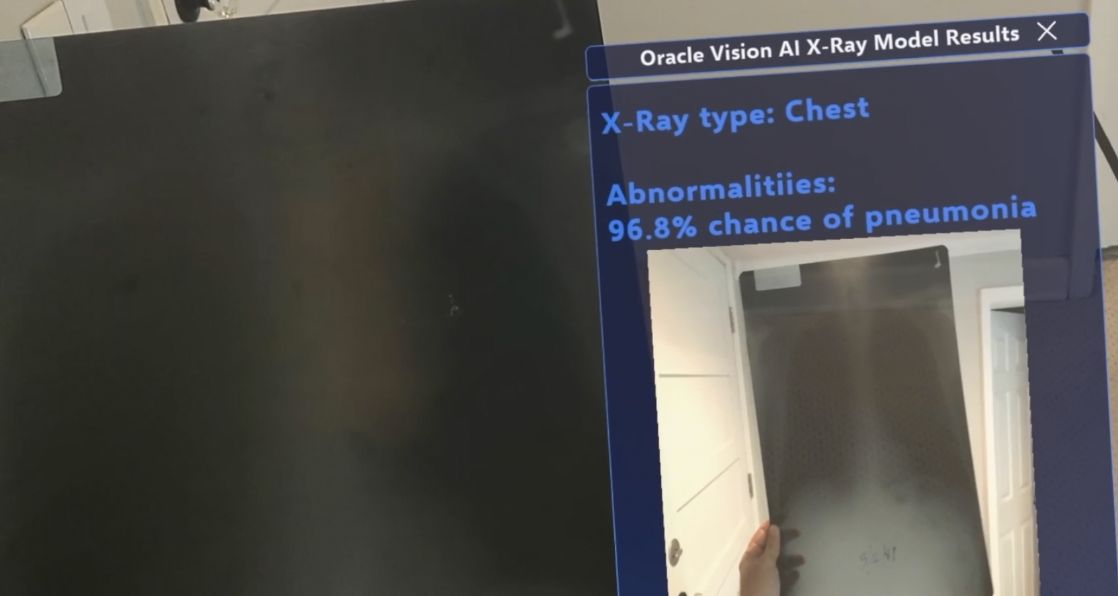

Vision AI and Contextual Intelligence: Real-Time Analysis and Diagnosis

The first example involves the use of the HoloLens mixed-reality headset (though other headsets/devices could be used) and Oracle's Vision AI service. It should not be much of a stretch of the imagination to envision healthcare workers wearing XR devices that provide additional functionality and information to them (and indeed, many already are). Today, doctors, dentists, and others constantly cross-reference a computer screen with the patient to check information and orient what they see (X-rays, MRIs, etc.), and they often wear head-mounted lights, monocular magnifying glasses, and similar aids. The XR solutions discussed here are mere evolutions of that. More details on this application can be found in the research blog here, but the basics of how it works are described below.

The application running on the HoloLens takes pictures at regular intervals with its built-in camera, using the wearer's field of vision as a reference. This provides a hands-free experience that is, at the very least, more convenient, and in many situations, such as the operating room, where users' hands must remain free to interact with the real (or XR) world, it is the better solution. It also means the system can pick up contextual information that the practitioner may not be aware of or have access to, gathering and processing that information quickly without the practitioner having to explicitly instruct it, which saves time as well. This is the kind of optimization that mixed reality provides, an exciting byproduct of immersion.

The HoloLens then sends these pictures to OCI Object Storage via secure REST calls, where they can be conveniently accessed directly by the OCI Vision AI service and also stored and/or accessed in the database. Several different approaches and architectures can be used from this point to implement the logic and calls to the Oracle Vision AI APIs that process the images sent by the XR device. For example, in terms of language, initial versions were written using the OCI CLI, Java, and Python, and the final version is a Java GraalVM native image. Because a GraalVM native image starts almost instantaneously and the service performs a short-lived routine, it is a good candidate for a serverless function. Optionally, an OCI Events rule can detect Object Storage changes and invoke serverless functions, either directly or through the OCI Notifications service.
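When the processing runs as a serverless function triggered by an upload, its first job is to work out which object was just created. The sketch below is a minimal Python illustration (the article's final service is Java) that pulls the namespace, bucket, and object name out of an event payload and ignores anything that is not a new image. The payload field names (`eventType`, `data.resourceName`, `data.additionalDetails.bucketName`, `data.additionalDetails.namespace`) are assumptions based on the general shape of OCI Events payloads and should be checked against the OCI Events documentation; the sample namespace, bucket, and object names are hypothetical.

```python
import json
from typing import Optional, Tuple

# Assumed event type string and payload layout for an Object Storage "create object"
# event; verify both against the OCI Events documentation before relying on them.
CREATE_OBJECT_EVENT = "com.oraclecloud.objectstorage.createobject"
IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png")


def extract_image_location(raw_event: str) -> Optional[Tuple[str, str, str]]:
    """Return (namespace, bucket, object_name) for a newly uploaded image, else None."""
    event = json.loads(raw_event)
    if event.get("eventType") != CREATE_OBJECT_EVENT:
        return None  # ignore updates, deletes, and unrelated events
    data = event.get("data", {})
    details = data.get("additionalDetails", {})
    object_name = data.get("resourceName", "")
    if not object_name.lower().endswith(IMAGE_SUFFIXES):
        return None  # only process images captured by the headset
    return details.get("namespace"), details.get("bucketName"), object_name


if __name__ == "__main__":
    sample = json.dumps({
        "eventType": CREATE_OBJECT_EVENT,
        "data": {
            "resourceName": "hololens/capture-0001.jpg",  # hypothetical object name
            "additionalDetails": {"namespace": "my-namespace", "bucketName": "xr-images"},
        },
    })
    print(extract_image_location(sample))
```

The returned location is what the service would then hand to the Vision AI detection call; filtering by event type and file suffix keeps the function from doing work on unrelated bucket activity.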

The Java service receives a notification that an image has been uploaded to Object Storage and then performs the following actions:

- Calls the OCI Vision AI service, backed by an X-ray object detection model, passing it the location of the image sent by the HoloLens.

- Receives a reply from the object detection model with the percentage chance that an X-ray is present in the image, along with its bounding coordinates.

- Crops the original image using the bounding coordinates.

- Calls the OCI Vision AI service again, this time backed by an X-ray classification model, passing it the cropped image of the X-ray.

- Receives a reply from the image classification model with the percentage chance that the X-ray contains signs of abnormalities/pneumonia.

- The HoloLens application receives this reply and alerts the wearer with an audible notification (this is configurable and can also be visual). In this application, the information, including the picture of the cropped X-ray with its discovered details, is listed and stored in a virtual menu located on the wrist and viewable only by the wearer. This approach avoids interrupting the wearer, though it is also possible to overlay the results on the real-life X-ray from which they were derived.
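The detect, crop, and classify steps in the list above can be sketched as a small pipeline. This is a minimal Python illustration under stated assumptions, not the article's Java service: `detect` and `classify` are hypothetical stand-ins for the two Vision AI calls, the detection result is assumed to carry a bounding polygon in normalized (0 to 1) coordinates, the `"xray"` label and the 0.5 confidence cutoff are hypothetical, and the image is a plain pixel grid rather than a real image type.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Image = List[List[int]]       # row-major pixel grid (stand-in for a real image type)
Vertex = Tuple[float, float]  # normalized (x, y), each assumed to be in [0, 1]


@dataclass
class Detection:
    label: str
    confidence: float           # 0.0 to 1.0
    vertices: Sequence[Vertex]  # bounding polygon in normalized coordinates


def crop_to_polygon(image: Image, vertices: Sequence[Vertex]) -> Image:
    """Crop to the axis-aligned box that encloses the normalized bounding polygon."""
    height, width = len(image), len(image[0])
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    left = max(0, math.floor(min(xs) * width))
    right = min(width, math.ceil(max(xs) * width))
    top = max(0, math.floor(min(ys) * height))
    bottom = min(height, math.ceil(max(ys) * height))
    return [row[left:right] for row in image[top:bottom]]


def analyze_capture(
    image: Image,
    detect: Callable[[Image], List[Detection]],     # hypothetical detection call
    classify: Callable[[Image], Dict[str, float]],  # hypothetical classification call
    min_confidence: float = 0.5,
) -> Optional[dict]:
    """Detect an X-ray, crop to it, and classify the crop; None if no X-ray is found."""
    candidates = [d for d in detect(image)
                  if d.label == "xray" and d.confidence >= min_confidence]
    if not candidates:
        return None
    best = max(candidates, key=lambda d: d.confidence)
    crop = crop_to_polygon(image, best.vertices)
    return {"detection_confidence": best.confidence,
            "crop": crop,
            "classification": classify(crop)}


if __name__ == "__main__":
    capture = [[r * 10 + c for c in range(8)] for r in range(4)]  # fake 4x8 image

    def fake_detect(img: Image) -> List[Detection]:
        square = [(0.25, 0.25), (0.75, 0.25), (0.75, 0.75), (0.25, 0.75)]
        return [Detection("xray", 0.93, square)]

    def fake_classify(crop: Image) -> Dict[str, float]:
        return {"pneumonia": 0.12, "normal": 0.88}  # made-up scores for the demo

    result = analyze_capture(capture, fake_detect, fake_classify)
    print(result["crop"])  # [[12, 13, 14, 15], [22, 23, 24, 25]]
```

Passing the two service calls in as functions keeps the crop logic testable without network access, and the same structure maps directly onto real SDK calls once they are substituted in.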

Vision AI and XR are a natural match for solutions serving both healthcare workers, as shown here, and people with conditions such as Parkinson's, autism, Alzheimer's, and vision and hearing impairment (some applications/solutions in this space are described in this blog).

Motion and Sentiment Study: Real-Time Face and Body Tracking Analytics

Today, 95% of healthcare facilities provide remote treatment and rehabilitation. XR technologies can be used to help patients better understand their conditions and treatment options. This can help patients feel more informed and empowered in their healthcare decisions.

The next example was implemented on the iPhone using Swift and Apple's RealityKit for body tracking. The joint coordinates of a model movement are recorded and sent to the Oracle database via REST calls to ORDS. A person (whether a patient, athlete, etc.) using the application attempts to perform the same movement, and their joint coordinates are compared to those of the model movement stored in the database. If the allowable deviation (delta) from the model movement is exceeded, the corresponding joints and bones are shown in red rather than green. This feedback is given in real time to measure progress (e.g., after rotator cuff or other surgery) and/or to allow the user to modify their movement to match the control model, as well as to work on balance and coordination. These movements can, in turn, be analyzed (more on XR and Oracle Analytics in an upcoming blog), replayed, manipulated to carry out simulations, etc. These use cases, of course, extend into sports, entertainment, and other sectors as well.
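The red/green comparison described above boils down to measuring how far each tracked joint is from the corresponding joint in the recorded model movement. The following is a minimal per-frame sketch in Python (the article's app does this in Swift on the device); the joint names, the coordinate convention (meters relative to the body's root joint), and the 5 cm tolerance are all hypothetical choices for illustration.

```python
import math
from typing import Dict, Tuple

Joint = Tuple[float, float, float]  # (x, y, z) in meters, assumed relative to the body's root joint


def score_pose(model: Dict[str, Joint], attempt: Dict[str, Joint],
               tolerance: float = 0.05) -> Dict[str, str]:
    """Color each model joint green if the attempt is within tolerance, red otherwise."""
    colors: Dict[str, str] = {}
    for name, reference in model.items():
        actual = attempt.get(name)
        if actual is None:
            colors[name] = "red"  # joint not tracked in this frame; treat as out of range
            continue
        deviation = math.dist(reference, actual)  # Euclidean distance between the two positions
        colors[name] = "green" if deviation <= tolerance else "red"
    return colors


if __name__ == "__main__":
    model_frame = {"left_shoulder": (0.20, 1.40, 0.0), "left_elbow": (0.45, 1.40, 0.0)}
    user_frame = {"left_shoulder": (0.21, 1.41, 0.0), "left_elbow": (0.45, 1.25, 0.0)}
    print(score_pose(model_frame, user_frame))  # {'left_shoulder': 'green', 'left_elbow': 'red'}
```

In practice the comparison would run frame by frame against the stored model movement, and the per-joint deviations could be saved alongside the colors so that progress can be analyzed or replayed later.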


Digital Twins + Doubles and Multiplayer/Participant Messaging: Training and Collaboration

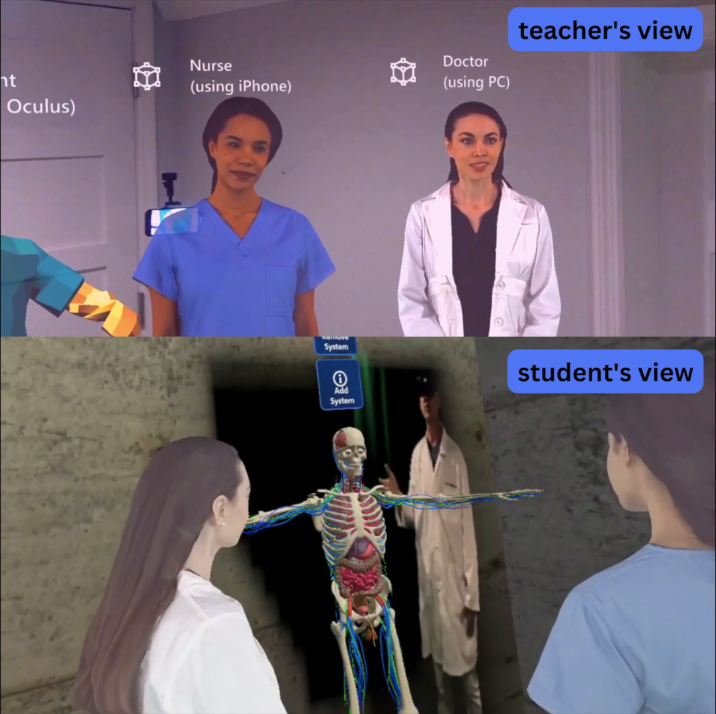

A recent study reported by Unity shows that approximately 94% of companies using real-time 3D technology find it valuable for staff training and are using it to create interactive guided learning experiences. This applies across industries, including manufacturing, transportation, healthcare, retail, and many more, and some trends in the area (many of which coincide with what is shown in this blog) are discussed here. XR technologies can be used to create realistic simulations of medical scenarios, allowing healthcare professionals to practice procedures and techniques in a safe and controlled environment. For example, medical students and surgeons can use XR simulations to practice surgeries with tactile feedback that mimics the sensations of real surgery, and nurses can use AR simulations to practice administering medications. This can help healthcare professionals gain valuable experience without risking patient safety.

In addition to teaching, XR provides a unique ability for collaboration between individuals in different locations and specialties, creating a shared virtual space where multiple users can interact with each other and with virtual objects. There are several different techniques and software options available for creating XR multiplayer/participant training and collaboration, including XR conferencing software and metaverses, Photon and others like it, REST, WebSockets, and different types of messaging.

XR conferencing software and platforms, such as Alakazam, are becoming increasingly popular and allow multiple users to participate in virtual meetings, events, training sessions, etc.

Photon is a platform that allows developers to create multiplayer games and applications using Unity, Unreal Engine, and other game engines, and it can easily be installed on and take advantage of Oracle Cloud compute (including NVIDIA GPUs). It is perhaps the most famous of such platforms, but there are others.

WebSocket is a protocol for real-time, bidirectional communication between web clients and servers and can be a faster and more efficient method than REST for such use cases, though REST calls are a simple, viable option in many cases as well, and in general, more APIs are available via REST than via any of the other methods.

Messaging is another technique that can be used to create XR training and collaboration experiences. Systems such as Kafka and JMS support both publish/subscribe messaging (each message on a topic is delivered to every subscriber) and producer/consumer, or point-to-point, messaging (each message is consumed by a single consumer), making them very flexible for different training and collaboration needs.
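The difference between the two delivery models matters for collaboration design: a session event such as "participant rotated the model" should reach every participant, while a work item such as "grade this trainee's attempt" should be handled by exactly one worker. The in-memory Python model below illustrates only those semantics; it is not the Kafka or JMS API, and real brokers add persistence, acknowledgements, and (in Kafka's case) consumer groups to provide point-to-point behavior. The round-robin assignment is one simple policy chosen for illustration.

```python
from collections import deque
from typing import Any, Deque, List


class Topic:
    """Publish/subscribe: every subscriber receives every message."""

    def __init__(self) -> None:
        self._inboxes: List[Deque[Any]] = []

    def subscribe(self) -> Deque[Any]:
        inbox: Deque[Any] = deque()
        self._inboxes.append(inbox)
        return inbox

    def publish(self, message: Any) -> None:
        for inbox in self._inboxes:  # fan out a copy of the reference to each subscriber
            inbox.append(message)


class Queue:
    """Point-to-point: each message goes to exactly one consumer (round-robin here)."""

    def __init__(self) -> None:
        self._inboxes: List[Deque[Any]] = []
        self._next = 0

    def add_consumer(self) -> Deque[Any]:
        inbox: Deque[Any] = deque()
        self._inboxes.append(inbox)
        return inbox

    def send(self, message: Any) -> None:
        if not self._inboxes:
            raise RuntimeError("no consumers registered")
        self._inboxes[self._next % len(self._inboxes)].append(message)
        self._next += 1


if __name__ == "__main__":
    session = Topic()
    surgeon, nurse = session.subscribe(), session.subscribe()
    session.publish({"object": "heart-model", "action": "rotate", "degrees": 90})
    print(len(surgeon), len(nurse))  # 1 1  (both participants see the interaction)

    grading = Queue()
    worker_a, worker_b = grading.add_consumer(), grading.add_consumer()
    grading.send("attempt-1")
    grading.send("attempt-2")
    print(list(worker_a), list(worker_b))  # ['attempt-1'] ['attempt-2']
```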

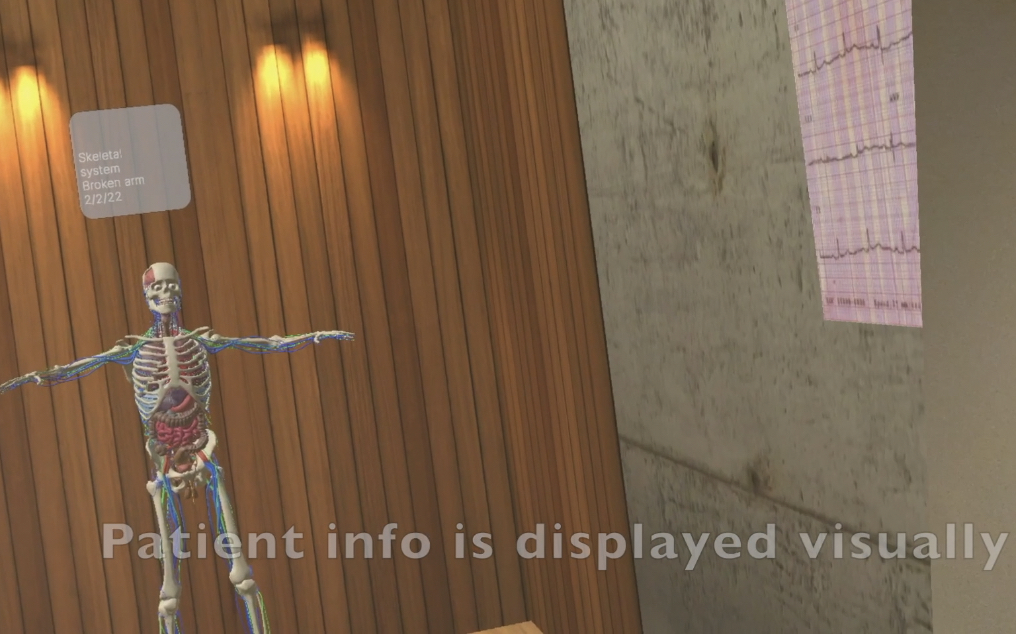

In the training application shown in the video, I used a number of the techniques above but focused on using Oracle's TxEventQ messaging engine (formerly known as AQ). This is a very powerful and unique offering for a number of reasons, a key one being its ability to perform database work and messaging work in the same local transaction. This is perfectly suited for microservices, as it provides the transactional outbox pattern as well as exactly-once message delivery, so there is no message loss and no need for the developer to write deduplication logic. This may not be necessary for conventional gaming or movie streaming, but it is a must for mission-critical systems, and it opens up some extremely interesting possibilities for XR: the ability to reliably store a shared (3D) object (especially one that is dynamic or created via generative AI, for example) along with the interactions/messages that various participants have applied to it is a very powerful tool. That is precisely what is done in the app shown in the video. The collaborative session is not only recorded in 3D for viewing as a video; the actual objects and the participants' interactions with them are also recorded in the database and available for playback, so that they can be intercepted and manipulated later on. This makes it possible to do deeper learning and run further models (AI or otherwise), simulations, scenarios, etc., by tweaking the objects and interactions, much as one would with playbooks.
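To make the "same local transaction" idea concrete, here is a minimal sketch of the transactional outbox pattern with playback. It is not the TxEventQ API: SQLite stands in for the Oracle database, and a plain `outbox` table stands in for the queue (with TxEventQ, as described above, the enqueue itself takes part in the database transaction). The table names, JSON state format, and object/participant names are hypothetical. The point is that updating the shared object and recording the interaction either both commit or both roll back, and the recorded interactions can be replayed to rebuild the object's state at any point in the session.

```python
import json
import sqlite3
from typing import Any, Dict, Optional


def open_db() -> sqlite3.Connection:
    """In-memory stand-in for the database holding shared objects and their message log."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE shared_object (
            id    TEXT PRIMARY KEY,
            state TEXT NOT NULL          -- JSON document describing the 3D object
        );
        CREATE TABLE outbox (
            seq         INTEGER PRIMARY KEY AUTOINCREMENT,
            object_id   TEXT NOT NULL,
            participant TEXT NOT NULL,
            payload     TEXT NOT NULL    -- JSON message describing the interaction
        );
    """)
    return conn


def record_interaction(conn: sqlite3.Connection, object_id: str,
                       participant: Optional[str], change: Dict[str, Any]) -> None:
    """Apply a change to the shared object and log it as a message, atomically."""
    with conn:  # commits if the block succeeds, rolls back both writes if anything raises
        row = conn.execute("SELECT state FROM shared_object WHERE id = ?",
                           (object_id,)).fetchone()
        state = json.loads(row[0]) if row else {}
        state.update(change)
        conn.execute("INSERT OR REPLACE INTO shared_object (id, state) VALUES (?, ?)",
                     (object_id, json.dumps(state)))
        conn.execute("INSERT INTO outbox (object_id, participant, payload) VALUES (?, ?, ?)",
                     (object_id, participant, json.dumps(change)))


def replay(conn: sqlite3.Connection, object_id: str,
           upto: Optional[int] = None) -> Dict[str, Any]:
    """Rebuild an object's state from its recorded interactions, optionally stopping early."""
    state: Dict[str, Any] = {}
    query = "SELECT seq, payload FROM outbox WHERE object_id = ? ORDER BY seq"
    for seq, payload in conn.execute(query, (object_id,)):
        if upto is not None and seq > upto:
            break
        state.update(json.loads(payload))
    return state


if __name__ == "__main__":
    db = open_db()
    record_interaction(db, "heart-model", "surgeon-1", {"rotation": 90})
    record_interaction(db, "heart-model", "nurse-2", {"highlight": "left-ventricle"})
    try:
        # participant is NULL, so the outbox insert fails and the state update is undone too
        record_interaction(db, "heart-model", None, {"rotation": 180})
    except sqlite3.IntegrityError:
        pass
    print(replay(db, "heart-model"))          # {'rotation': 90, 'highlight': 'left-ventricle'}
    print(replay(db, "heart-model", upto=1))  # {'rotation': 90}
```

Replaying up to a given sequence number is what allows a session to be paused at a specific interaction, tweaked, and run forward again under a different scenario.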

With the development of more advanced technology and more powerful devices, XR training will become more prevalent in the near future, making the training experience more immersive and interactive.

One other small note: the app in the video shows healthcare workers as full 3D volumetric video captures made professionally in a studio, but it also includes one that is a simple 2D video whose green-screen background was made transparent (via the alpha channel) with a Unity shader, producing a similar holographic effect with minimal effort (the quality could be improved with more attention to lighting, etc.). A Zoom virtual green screen could also be used for this purpose, as could free assets animated in Blender. Unity, Zoom, and Blender are all free, and the app uses the Oracle Always Free Autonomous Database, making the solution very accessible in terms of software and cloud costs.
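The green-screen removal mentioned above is a chroma key: pixels where green strongly dominates the other channels become transparent. The article does this in a Unity shader on the GPU; the Python sketch below shows the same per-pixel idea on the CPU so the math is easy to follow. The "greenness" measure and the `low`/`high` thresholds are hypothetical choices, and the linear ramp between them softens the edges around the subject instead of cutting them hard.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]        # 0-255 per channel
RGBA = Tuple[int, int, int, int]  # alpha 0 = fully transparent, 255 = fully opaque


def chroma_key(pixels: List[RGB], low: int = 40, high: int = 100) -> List[RGBA]:
    """Turn green-screen pixels transparent, with a soft ramp between low and high."""
    keyed: List[RGBA] = []
    for r, g, b in pixels:
        greenness = g - max(r, b)  # how strongly green dominates the other channels
        if greenness <= low:
            alpha = 255  # clearly not background: keep opaque
        elif greenness >= high:
            alpha = 0    # clearly background: make fully transparent
        else:
            alpha = round(255 * (high - greenness) / (high - low))  # partial transparency at edges
        keyed.append((r, g, b, alpha))
    return keyed


if __name__ == "__main__":
    sample = [(0, 255, 0), (200, 150, 120), (30, 120, 40)]
    print(chroma_key(sample))  # [(0, 255, 0, 0), (200, 150, 120, 255), (30, 120, 40, 85)]
```

A shader version would compute the same alpha per fragment; spill suppression (reducing the green tint reflected onto the subject) is a common next step that is not shown here.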

Data EcoSystem: Telehealth and Virtual Health Centers

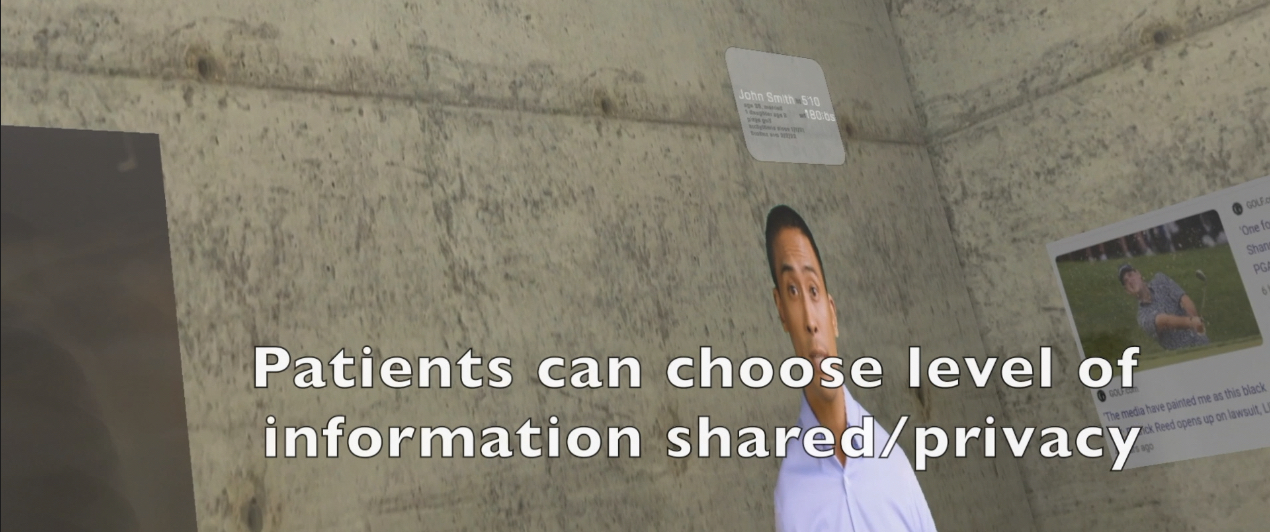

A virtual healthcare center or hospital is a healthcare facility that provides medical services through digital channels such as video conferencing, online chat, remote monitoring, and, increasingly, XR technologies. These digital tools allow patients to access healthcare services from the comfort of their homes or remote locations without the need to physically visit a hospital or clinic.

The concept of a virtual healthcare center is becoming increasingly popular due to the growing demand for telemedicine services, especially in the wake of the COVID-19 pandemic. A virtual healthcare center can provide a wide range of medical services, such as primary care, specialty consultations, diagnostic tests, and prescription refills, and it offers convenience and flexibility to patients, who can access medical services from anywhere and at any time. This can be especially beneficial for patients with mobility issues, those living in rural areas, or patients who need to consult with a specialist who is not available in their local area. It can also reduce healthcare costs for both patients and healthcare providers. By using remote consultations and monitoring, healthcare providers can reduce the need for expensive in-person consultations and hospital stays. Patients can also save money on transportation costs and time off from work.

A virtual healthcare center can improve the quality of care for patients. With remote monitoring, patients can receive personalized and continuous care, with healthcare providers able to monitor their health in real-time and intervene quickly if necessary. This can result in better health outcomes and reduced hospital readmissions.

However, there are also some challenges to implementing virtual healthcare centers. These include issues around data privacy and security, as well as the need for adequate internet access and digital literacy among patients. In addition, some patients may still prefer traditional in-person care, and, though decreasing in number, there are limitations to what medical services can be provided remotely. The concept of a virtual healthcare center has the potential to revolutionize the way healthcare is delivered, with benefits for both patients and healthcare providers. However, careful consideration and planning are needed to ensure that the implementation of virtual healthcare centers is safe, effective, and equitable for all patients.

Oracle, with its acquisition of Cerner and increased focus on the future of healthcare, is in a one-of-a-kind position to facilitate such XR solutions through its ability to "Deliver better health insights and human-centric experiences for patients, providers, payers, and the public. Oracle Health offers the most secure and reliable healthcare solutions, which connect clinical, operational, and financial data to improve care and advance decision-making around health and well-being." (Oracle Health page). Larry Ellison made this clear at his Oracle OpenWorld presentation, where he presented healthcare as the priority for Oracle's future, and earlier in the year when he stated, "Together, Cerner and Oracle have all the technology required to build a revolutionary new health management information system in the cloud."

Mental Health

Over 20% of American adults experience mental illness, over 2.5M youth struggle with severe depression, and 800,000 people worldwide die by suicide each year due to mental illness. At the same time, there is a growing deficit of mental health professionals, which is a nationwide issue but is particularly severe for minors and adolescents. According to the U.S. Department of Health and Human Services, the country is expected to have a shortage of 10,000 mental health professionals by 2025.

Mental health is one of the areas where XR has been most widely researched and proven extremely effective as a way to deliver therapies and treatments for mental health conditions such as anxiety, depression, and PTSD. For example, VR exposure therapy can be used to help patients confront and overcome their fears by exposing them to simulations of the things they are afraid of in a controlled and safe environment. Cognitive Behavioral Therapy (CBT),

XR can be used to create immersive, meditative experiences to promote mindfulness, relaxation, and stress reduction. For example, VR environments can simulate peaceful and calming natural environments, such as beaches, forests, mountains, or space. Children, the elderly, and others who may spend prolonged periods in hospitals and similar settings are using XR to explore the world and socialize.

Using XR and teletherapy, therapists can create virtual environments that can simulate in-person therapy sessions, providing a more immersive and personalized experience while reducing the inhibitions of the patient and even allowing the use of AR lenses and avatars for privacy.

NLP (Natural Language Processing) sentiment analysis, such as that provided by the corresponding Oracle AI service, can interpret emotions and intent from verbal communication, and with advances in facial recognition and facial sentiment analysis, it is now possible to detect with great accuracy the emotions a person is feeling and/or expressing non-verbally (the same follows for body/gesture tracking). Neurotechnology and neural interfaces, in coordination with XR, can now help interpret human intent and measure emotion, providing even greater insight into both. And, of course, the world is now quite familiar with how AI interfaces such as ChatGPT have made this kind of capability more accessible and simpler to use. As with the X-ray example given earlier, these can, in some situations, provide greater and more consistent accuracy and speed than a human, but they can also act as assistive technologies for healthcare workers. More examples of this will be in upcoming blogs.

Additional Thoughts

I have given some ideas and examples of how healthcare and XR can be used together and facilitated by Oracle. I look forward to putting out more blogs on this topic and other areas of XR with Oracle Cloud and Database soon.

Please see my other publications for more information on XR and Oracle cloud and database and on various topics around microservices, observability, transaction processing, etc., as well as this recent blog about what AR is. Also, please feel free to contact me with any questions or suggestions for new blogs and videos, as I am very open to suggestions. Thanks for reading and watching.

Published at DZone with permission of Paul Parkinson. See the original article here.

