Deep Dive Into .NET Garbage Collection

What .NET garbage collection is, how it works, latency modes, GC phases, background GC, and some performance tips, explained by Inoxoft's Senior .NET Developer.


Garbage collection, and memory management in general, will be the first and last things you work on. It is the main source of the most obvious performance problems: those that are the quickest to fix but need to be constantly monitored. Many problems are actually caused by an incorrect understanding of the garbage collector's behavior and expectations.

You have to think about memory performance just as much as about CPU performance. This is also true for unmanaged code, but in .NET it is easier to deal with. Once you understand how the GC works, it becomes clear how to optimize your program for it. In many cases, the GC can even give you better overall heap performance than a native heap, because it deals with allocation and fragmentation better.

Operating System Basics

Before we start, you should get acquainted with some basic operating system terminology:

- Physical Memory: The actual physical memory chips in a computer. Only the operating system manages physical memory directly.

- Virtual Memory: A logical organization of memory in a given process. Virtual memory size can be larger than physical memory. For example, 32-bit programs have a 4 GB address space, even if the computer itself only has 2 GB of RAM (random-access memory). Windows allows the program to access only 2 GB of that by default, but all 4 GB is possible if the executable has the large address aware bit set. (On 32-bit versions of Windows, large-address aware programs are limited to 3 GB, with 1 GB reserved for the operating system.) As of Windows 8.1 and Server 2012, 64-bit processes have a 128 TB address space, far larger than the 4 TB physical memory limit. Some of the virtual memory may be in RAM while other parts are stored on disk in a paging file.

- Reserved Memory: A region of virtual memory address space that has been reserved for the process and thus will not be allocated to a future requester. Reserved memory cannot be used for memory allocation requests because nothing is backing it — it is just a description of a range of memory addresses.

- Committed Memory: A region of memory that has a physical backing store. This can be RAM or disk.

- Page: An organizational unit of memory. Blocks of memory are allocated in a page, which is usually a few KB in size.

- Paging: The process of transferring pages between regions of virtual memory. The page can move to or from another process (soft paging) or the disk (hard paging). Soft paging can be accomplished very quickly by mapping the existing memory into the current process’s virtual address space. Hard paging involves a relatively slow transfer of data to or from a disk. Your program must avoid this at all costs to maintain good performance.

- Private Bytes: Committed virtual memory private to the specific process (not shared with any other processes).

- Virtual Bytes: Allocated memory in the process’s address space, some of which may be backed by the page file, possibly shared with other processes or private to the process.

- Working Set: The amount of virtual memory currently resident in physical memory (usually RAM).

- Working Set-Private: The number of private bytes currently resident in physical memory.

- Thread Count: The number of threads in the process. This may or may not be equal to the number of .NET threads.
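Most of these counters can be read for the current process through System.Diagnostics.Process. The sketch below maps them to properties as an approximation (for example, PrivateMemorySize64 for Private Bytes); the exact semantics vary by operating system, so treat it as a quick check rather than a replacement for a profiler.

```csharp
using System;
using System.Diagnostics;

// Snapshot the counters described above for the current process.
using Process self = Process.GetCurrentProcess();

long workingSet = self.WorkingSet64;           // Working Set: resident in physical memory
long privateBytes = self.PrivateMemorySize64;  // Private Bytes (approximate on non-Windows systems)
long virtualBytes = self.VirtualMemorySize64;  // Virtual Bytes: virtual address space in use
int threadCount = self.Threads.Count;          // OS threads, not only .NET threads

Console.WriteLine($"64-bit process: {Environment.Is64BitProcess}");
Console.WriteLine($"Working set:    {workingSet / 1024} KB");
Console.WriteLine($"Private bytes:  {privateBytes / 1024} KB");
Console.WriteLine($"Virtual bytes:  {virtualBytes / 1024} KB");
Console.WriteLine($"Thread count:   {threadCount}");
```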

Garbage Collection Operation

When you initialize a new process, the runtime reserves a contiguous region of address space for the process. This reserved address space is called the managed heap. The managed heap maintains a pointer to the address where the next object in the heap will be allocated. Initially, this pointer is set to the managed heap's base address. All reference types are allocated on the managed heap. When an application creates the first reference type, memory is allocated for the type at the base address of the managed heap. When the application creates the next object, the garbage collector allocates memory for it in the address space immediately following the first object. As long as address space is available, the garbage collector continues to allocate space for new objects in this manner.

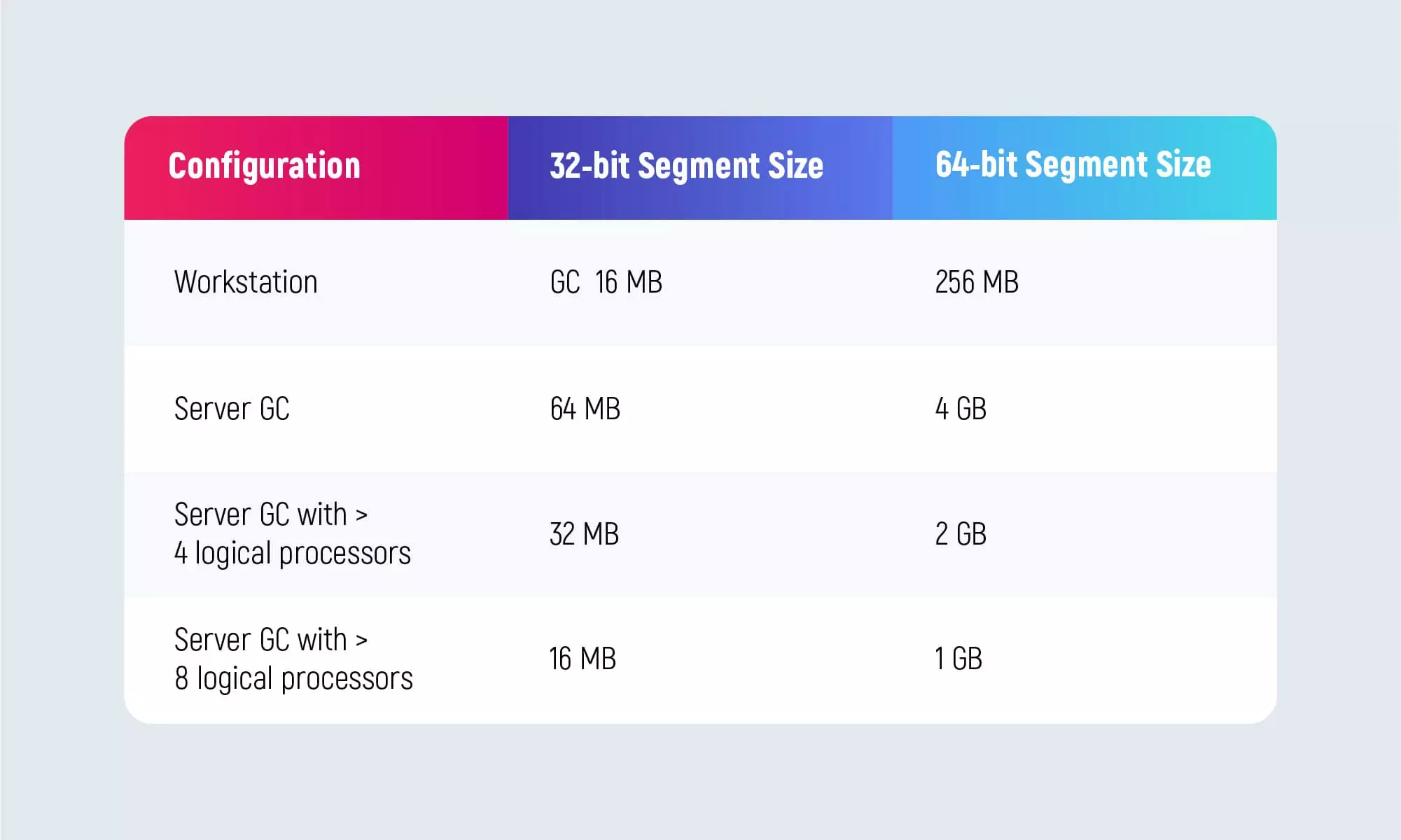

Unmanaged heaps are allocated with the VirtualAlloc Windows API and are used by the operating system and the CLR for unmanaged memory, such as memory for the Windows API, OS data structures, and even much of the CLR itself. The CLR allocates all managed .NET objects on the managed heap, also called the GC heap, because the objects on it are subject to garbage collection. The managed heap is further divided into two types of heaps: the small object heap and the large object heap (LOH). Each one is assigned its own segments, which are blocks of memory belonging to that heap. Both the small object heap and the large object heap can have multiple segments assigned to them. The size of each segment can vary depending on your configuration and hardware platform.

Workstation GC is the default. In this mode, all GCs happen on the same thread that triggered the collection and run at the same priority. For simple apps, especially those that run on interactive workstations where many managed processes run, this makes the most sense. For computers with a single processor, this is the only option, and trying to configure anything else will not have any effect.

Server GC creates a dedicated thread for each logical processor or core. These threads run at the highest priority (THREAD_PRIORITY_HIGHEST) but are always kept in a suspended state until a GC is required. All garbage collections happen on these threads, not on the application's threads. After the GC, they sleep again. In addition, the CLR creates a separate heap for each processor. Within each processor heap, there is a small object heap and a large object heap. From your application's perspective, this is all logically the same heap: your code does not know which heap an object belongs to, and object references exist between all the heaps (they all share the same virtual address space).

You can enable Server GC by changing your runtimeconfig.json file as follows:

```json
{
  "runtimeOptions": {
    "configProperties": {
      "System.GC.Server": true
    }
  }
}
```

Server garbage collection is available only on multiprocessor computers. Keep this in mind when you plan to deploy your application to Azure: the minimum App Service plan is Basic 2 for 32-bit apps and Standard 2 for 64-bit apps (both have 2 cores). If you don't know which mode is currently enabled, you can check it with the code below:

```csharp
using System;
using System.Runtime;

string result = GCSettings.IsServerGC ? "server" : "workstation";
Console.WriteLine($"GC mode: {result}");
```

Having multiple heaps gives a couple of advantages:

- Garbage collection happens in parallel. Each GC thread collects one of the heaps, which can make garbage collection significantly faster than in workstation GC.

- In some cases, allocations can happen faster, especially on the large object heap, where allocations are spread across all the heaps.

There are other internal differences as well, such as larger segment sizes, which can mean a longer time between garbage collections.

Heap Segments and Generations

The small object heap segments are further divided into generations. There are three generations, referenced casually as gen 0, gen 1, and gen 2. Gen 0 and gen 1 are always in the same segment, but gen 2 can span multiple segments, as can the large object heap. The segment that contains gen 0 and gen 1 is called the ephemeral segment.

Objects allocated on the small object heap pass through a lifetime process that needs some explanation. The CLR allocates all objects that are less than 85,000 bytes (approximately 83 KB) in size on the small object heap. They are always allocated in gen 0, usually at the end of the current used space. This is why allocations in .NET are extremely fast. If the fast allocation path fails, then the objects may be placed anywhere they can fit inside gen 0’s boundaries. If it will not fit in an existing spot, then the allocator will expand the current boundaries of gen 0 to accommodate the new object. This expansion occurs at the end of the used space towards the end of the segment. If this pushes past the end of the segment, it may trigger a garbage collection. The existing gen 1 space is untouched.

For small objects (less than 85,000 bytes), objects always begin their life in gen 0. As long as they are still alive, the GC will promote them to subsequent generations each time a collection happens. When a garbage collection occurs, compaction may occur, in which case the GC physically moves the objects to a new location to free space in the segment. If no compaction occurs, the boundaries are merely redrawn.
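A quick way to watch promotion is GC.GetGeneration, which reports the generation an object currently lives in. The sketch below forces collections with GC.Collect purely for illustration (you would not do this in production code). The exact numbers can vary with runtime configuration, so the comments describe typical rather than guaranteed results.

```csharp
using System;

// A small object, far below the 85,000-byte LOH threshold.
var survivor = new object();
int[] generations = new int[3];

generations[0] = GC.GetGeneration(survivor); // typically 0: new small objects start in gen 0
GC.Collect();                                // survivor is still referenced, so it is promoted
generations[1] = GC.GetGeneration(survivor); // typically 1
GC.Collect();
generations[2] = GC.GetGeneration(survivor); // typically 2, the highest generation

Console.WriteLine($"Generations observed: {string.Join(" -> ", generations)}");
Console.WriteLine($"GC.MaxGeneration: {GC.MaxGeneration}");
GC.KeepAlive(survivor);
```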

If gen 2 continues to grow, then it can span multiple segments. The LOH can also span multiple segments. Regardless of how many segments there are, generations 0 and 1 will always exist in the same segment.

The large object heap obeys different rules. Any object that is at least 85,000 bytes in size is allocated on the LOH automatically and does not pass through the generational model; put another way, it is allocated directly in gen 2. The only types of objects that normally exceed this size are arrays and strings. For performance reasons, the LOH is not automatically compacted during collection and is, thus, easily susceptible to fragmentation. However, starting in .NET 4.5.1, you can compact it on demand.
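To see the threshold in action, you can compare GC.GetGeneration for two byte arrays on either side of it. This sketch assumes the default 85,000-byte threshold (no System.GC.LOHThreshold override); the array header adds only a few bytes, so 84,000 elements stay on the small object heap while 85,000 elements do not. Because the LOH is logically part of gen 2, the large array reports generation 2 right after allocation.

```csharp
using System;

// 84,000 bytes of data plus the array header stays below 85,000 bytes: small object heap.
byte[] small = new byte[84_000];
// 85,000 bytes of data is already at the threshold: large object heap.
byte[] large = new byte[85_000];

int smallGen = GC.GetGeneration(small); // typically 0: a brand-new small object
int largeGen = GC.GetGeneration(large); // 2: LOH objects are reported as gen 2

Console.WriteLine($"small array: gen {smallGen}, large array: gen {largeGen}");
```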

The following code requests a one-time LOH compaction and then forces a full blocking collection, so the compaction happens right away:

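```csharp
using System;
using System.Runtime;

GCSettings.LargeObjectHeapCompactionMode = GCLargeObjectHeapCompactionMode.CompactOnce;
GC.Collect(); // full blocking collection: the LOH is compacted here
```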

If you assign the property value of GCLargeObjectHeapCompactionMode.CompactOnce, the LOH is compacted during the next full blocking garbage collection, and the property value is reset to GCLargeObjectHeapCompactionMode.Default. Background garbage collections are not blocking. This means that, if you set the LargeObjectHeapCompactionMode property to GCLargeObjectHeapCompactionMode.CompactOnce, any background generation 2 collections that occur subsequently do not compact the LOH. Only the first blocking generation 2 collection compacts the LOH.

In containers using .NET Core 3.0 and later, the LOH is automatically compacted. You can also change the threshold size, in bytes, at which objects are allocated on the large object heap by editing your runtimeconfig.json file as follows:

```json
{
  "runtimeOptions": {
    "configProperties": {
      "System.GC.LOHThreshold": 120000
    }
  }
}
```

The threshold value must be larger than the default value (85,000 bytes).

Garbage Collection Phases

There are four phases to a garbage collection:

I. Suspension: All managed threads in the application are forced to pause before a collection can occur. It is worth noting that suspension can only occur at certain safe points in code like at a ret instruction. Native threads are not suspended and will keep running unless they transition into managed code, at which point they too will be suspended. If you have a lot of threads, a significant portion of garbage collection time can be spent just suspending threads.

II. Mark: Starting from each root, the garbage collector follows every object reference and marks those objects as seen. Roots include thread stacks, pinned GC handles, and static objects.

III. Compact: Reduce memory fragmentation by relocating objects to be next to each other and update all references to point to the new locations. This happens on the small object heap when needed and there is no way to control it. On the large object heap, compaction does not happen automatically at all, but you can instruct the garbage collector to compact it on-demand.

IV. Resume: The managed threads are allowed to resume.
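On .NET 7 and later, GC.GetTotalPauseDuration reports the cumulative time managed threads have spent paused for garbage collection, which makes the cost of these phases visible. A minimal sketch, assuming a .NET 7 or later runtime, that forces one full blocking collection and compares the totals:

```csharp
using System;

// Cumulative GC pause time and gen 2 collection count before the forced GC.
TimeSpan pauseBefore = GC.GetTotalPauseDuration();
int fullGcsBefore = GC.CollectionCount(2);

// A forced, blocking full GC: suspension, mark, compact (if needed), resume.
GC.Collect(GC.MaxGeneration, GCCollectionMode.Forced, blocking: true);

TimeSpan pauseAfter = GC.GetTotalPauseDuration();
int fullGcsAfter = GC.CollectionCount(2);

Console.WriteLine($"Full GCs: +{fullGcsAfter - fullGcsBefore}, " +
                  $"added pause: {(pauseAfter - pauseBefore).TotalMilliseconds:F3} ms");
```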

Background GC

Background GC works by having a dedicated thread for garbage collecting generation 2. For server GC there will be an additional thread per logical processor, in addition to the one already created for server GC in the first place. Yes, this means if you use server GC and background GC, you will have two threads per processor dedicated to GC, but this is not particularly concerning. It is not a big deal for processes to have many threads, especially when most of them are doing nothing most of the time. One thread is for foreground GC and runs at the highest priority, but it is suspended most of the time.

The thread for background GC runs at a lower priority, concurrently with your application's threads, and is suspended when the foreground GC threads become active, so that you do not have competing GC modes running simultaneously. Background GC is enabled by default with workstation GC and, starting with .NET 4.5, with server GC as well, but you do have the ability to turn it off using runtimeconfig.json:

```json
{
  "runtimeOptions": {
    "configProperties": {
      "System.GC.Concurrent": false
    }
  }
}
```

Latency Modes

The garbage collector has a number of latency modes, most of them accessed via the GCSettings.LatencyMode property. The mode should rarely be changed, but the options can be useful at times.

Interactive is the default GC latency mode when concurrent garbage collection is enabled (which is on by default). This mode allows collections to run in the background.

Batch mode disables all concurrent garbage collection and forces collections to occur in a single batch. It is intrusive because it forces your program to stop completely during all GCs, so it should not be used regularly, especially in programs with a user interface.

There are two low-latency modes you can use for a limited time. If you have periods of time that require critical performance, you can tell the GC not to perform expensive gen 2 collections.

- LowLatency: For workstation GC only, it will suppress gen 2 collections.

- SustainedLowLatency: For workstation and server GC, it will suppress full gen 2 collections, but it will allow background gen 2 collections. You must enable background GC for this option to take effect.

Both modes will greatly increase the size of the managed heap because compaction will not occur. If your process uses a lot of memory, you should avoid this feature. Only turn on a low-latency mode if all of the following criteria apply:

- The latency of a full garbage collection is never acceptable during normal operation.

- The application’s memory usage is far lower than the available memory. (If you want low-latency mode, then max out your physical memory.)

- Your program can survive long enough until it turns off low-latency mode, restarts itself, or manually performs a full collection.
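If you decide a low-latency mode is justified, a common pattern is to switch it on only around the critical section and always restore the previous mode in a finally block. A minimal sketch; RunTimeCriticalWork is a hypothetical placeholder for your own code, and SustainedLowLatency only takes effect when background GC is enabled:

```csharp
using System;
using System.Runtime;

GCLatencyMode previousMode = GCSettings.LatencyMode;
GCLatencyMode modeDuringWork;
try
{
    // Ask the GC to avoid blocking gen 2 collections while the critical work runs.
    GCSettings.LatencyMode = GCLatencyMode.SustainedLowLatency;
    modeDuringWork = GCSettings.LatencyMode;
    RunTimeCriticalWork();
}
finally
{
    // Always restore the original mode, even if the work throws.
    GCSettings.LatencyMode = previousMode;
}

Console.WriteLine($"Before: {previousMode}, during: {modeDuringWork}, after: {GCSettings.LatencyMode}");

// Hypothetical stand-in for the latency-sensitive part of your program.
static void RunTimeCriticalWork()
{
    // critical work goes here
}
```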

Finally, starting in .NET 4.6, you can declare regions where garbage collections are disallowed, using the NoGCRegion mode. This attempts to put the GC in a mode where it will not allow a GC to happen at all. It cannot be set via this property, however. Instead, you must use the TryStartNoGCRegion method as in the example below.

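```csharp
using System;
using System.Runtime;

bool success = GC.TryStartNoGCRegion(totalSize: 2_000_000, lohSize: 1_000_000,
    disallowFullBlockingGC: true);
if (success)
{
    try
    {
        // do allocations
    }
    finally
    {
        // If a GC happened anyway (e.g., the budget was exceeded), the region has already ended.
        if (GCSettings.LatencyMode == GCLatencyMode.NoGCRegion)
        {
            GC.EndNoGCRegion();
        }
    }
}
```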

The low-latency or no-GC modes are not absolute guarantees. If the system is running low on memory and the garbage collector has the choice between doing a full collection or throwing an OutOfMemoryException, it will perform a full collection regardless of your mode setting.

Performance Tips

Reduce Allocation Rate

This almost goes without saying, but if you reduce the amount of memory you are allocating, you reduce the pressure on the garbage collector to operate. You can also reduce memory fragmentation and CPU usage. It can take some creativity to achieve this goal and it might conflict with other design goals.

Critically examine each object and ask yourself:

- Do I really need this object at all?

- Does it have fields that I can get rid of?

- Can I reduce the size of arrays?

- Can I reduce the size of primitives (Int64 to Int32, for example)?

- Are some objects used only in rare circumstances and can, therefore, be initialized only when needed?

- Can I convert some classes to structs so they live on the stack, or as part of another object, and have no per-instance overhead?

- Am I allocating a lot of memory, to use only a small portion of it?

- Can I get this information in some other way?

- Can I allocate memory upfront?
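To check whether a change such as converting a class to a struct actually reduces allocations, you can measure it. GC.GetAllocatedBytesForCurrentThread returns the number of bytes the current thread has allocated so far. This sketch uses the built-in Tuple<int, int> (a class) and ValueTuple (a struct) as stand-ins for your own types, so the byte counts it prints are illustrative only:

```csharp
using System;

const int Count = 1_000;

// Class version: an array of references plus one heap object per element.
long start = GC.GetAllocatedBytesForCurrentThread();
var asClasses = new Tuple<int, int>[Count];
for (int i = 0; i < Count; i++) asClasses[i] = Tuple.Create(i, i);
long classBytes = GC.GetAllocatedBytesForCurrentThread() - start;

// Struct version: the pairs are stored inline in a single array allocation.
start = GC.GetAllocatedBytesForCurrentThread();
var asStructs = new (int, int)[Count];
for (int i = 0; i < Count; i++) asStructs[i] = (i, i);
long structBytes = GC.GetAllocatedBytesForCurrentThread() - start;

Console.WriteLine($"class: {classBytes:N0} bytes, struct: {structBytes:N0} bytes");
GC.KeepAlive(asClasses);
GC.KeepAlive(asStructs);
```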

Collect Objects in Gen 0 or Not at All

Put differently, you want objects to have an extremely short life so that the garbage collector will never touch them at all, or, if you cannot do that, they should go to gen 2 as fast as possible and stay there forever, never to be collected. This means that you maintain a reference to long-lived objects forever. Often, this also means pooling reusable objects, especially anything on the large object heap. Garbage collections get more expensive in each generation. You want to ensure there are many gen 0/1 collections and very few gen 2 collections. Even with background GC for gen 2, there is still a CPU cost that you would rather not pay: a processor the rest of your program should be using.
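One way to check whether you are getting the profile you want (many cheap gen 0/1 collections, very few gen 2 collections) is to compare GC.CollectionCount deltas around a workload. The counts are cumulative in the sense that a higher-generation collection also increments the lower-generation counts. The workload below is a hypothetical stand-in made of short-lived buffers:

```csharp
using System;

// Snapshot per-generation collection counts before the workload.
int[] before = new int[GC.MaxGeneration + 1];
for (int gen = 0; gen <= GC.MaxGeneration; gen++) before[gen] = GC.CollectionCount(gen);

// Hypothetical workload: many small, short-lived temporaries that should die in gen 0.
long checksum = 0;
for (int i = 0; i < 1_000_000; i++)
{
    var temp = new byte[128];
    temp[i % temp.Length] = 1;
    checksum += temp.Length;
}

int[] delta = new int[GC.MaxGeneration + 1];
for (int gen = 0; gen <= GC.MaxGeneration; gen++) delta[gen] = GC.CollectionCount(gen) - before[gen];

Console.WriteLine($"gen0: +{delta[0]}, gen1: +{delta[1]}, gen2: +{delta[2]} (checksum {checksum})");
```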

Reduce Object Lifetime

The shorter an object’s lifetime, the less chance it has of being promoted to the next generation when a GC comes along. In general, you should not allocate objects until right before you need them. The exception would be when the cost of object creation is so high it makes sense to create them at an earlier point when it will not interfere with other processing.

Reduce References Between Objects

References between objects of different generations can cause inefficiencies in the garbage collector, specifically references from older objects to newer objects. For example, if an object in generation 2 has a reference to an object in generation 0, then every time a gen 0 GC occurs, a portion of gen 2 objects will also have to be scanned to see if they are still holding onto this reference to a generation 0 object. It is not as expensive as a full GC, but it is still unnecessary work if you can avoid it.

Avoid Pinning

Pinning an object fixes it in place so that the garbage collector cannot move it. Pinning exists so that you can safely pass managed memory references to unmanaged code. It is most commonly used to pass arrays or strings to unmanaged code but is also used to gain direct fixed memory access to data structures or fields. If you are not doing interop with unmanaged code and you do not have any unsafe code, you should not need to pin at all.
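When you do need to hand a managed buffer to native code, keep the pin as short as possible. The sketch below uses a pinned GCHandle in safe C# (the fixed statement is the usual alternative inside unsafe code). The native call itself is hypothetical, so Marshal.WriteByte stands in for it by writing through the raw address:

```csharp
using System;
using System.Runtime.InteropServices;

byte[] buffer = new byte[256];

// Pin the array so the GC cannot move it while unmanaged code holds its address.
GCHandle handle = GCHandle.Alloc(buffer, GCHandleType.Pinned);
try
{
    IntPtr address = handle.AddrOfPinnedObject();
    // Stand-in for a hypothetical native call that writes into the buffer.
    Marshal.WriteByte(address, 0, 42);
}
finally
{
    // Unpin as soon as possible so the GC can compact around the array again.
    handle.Free();
}

Console.WriteLine($"buffer[0] = {buffer[0]}, still pinned: {handle.IsAllocated}");
```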

Avoid Finalizers

Never implement a finalizer unless it is required. Finalizers are code, triggered by the garbage collector to clean up unmanaged resources. They are called from a single thread, one after the other, and only after the garbage collector declares the object dead after a collection. This means that if your class implements a finalizer, you are guaranteeing that it will stay in memory even after the collection that should have killed it.

There is also additional bookkeeping to be done on each GC as the finalizer list needs to be continually updated if the object is relocated. All of this combines to decrease overall GC efficiency and ensure that your program will dedicate more CPU resources to cleaning up your object.

If you do implement a finalizer, you must also implement the IDisposable interface to enable explicit cleanup, and call GC.SuppressFinalize(this) in the Dispose method to remove the object from the finalization queue. As long as you call Dispose before the next collection, it will clean up the object properly without the need for the finalizer to run. The following example correctly demonstrates this pattern.

```csharp
using System;

class Foo : IDisposable
{
    private bool disposed = false;
    private IntPtr handle;                 // unmanaged resource
    private IDisposable managedResource;   // managed resource

    ~Foo() // Finalizer
    {
        Dispose(false);
    }

    public void Dispose()
    {
        Dispose(true);
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (this.disposed) { return; }

        if (disposing)
        {
            // Not safe to do this from finalizer
            this.managedResource?.Dispose();
        }

        // Cleanup unmanaged resources that are safe to
        // do so in a finalizer
        UnsafeClose(this.handle);

        // If the base class is an IDisposable object,
        // make sure you call base.Dispose(disposing) here.

        this.disposed = true;
    }

    // Hypothetical placeholder for the native API that releases the handle.
    private static void UnsafeClose(IntPtr handle) { }
}
```

Dispose methods and finalizers should never throw exceptions. Should an exception occur during a finalizer's execution, the process will terminate. Finalizers should also be careful about doing any kind of I/O, even something as simple as logging.

You may have heard that finalizers are guaranteed to run. This is generally true, but not absolutely so. If a program is force-terminated then no more code runs and the process dies immediately. The finalizer thread is triggered by a garbage collection, so if there are no garbage collections, finalizers will not run. There is also a time limit to how long all of the finalizers are given on process shutdown. If your finalizer is at the end of the list, it may be skipped. Moreover, because finalizers execute sequentially, if another finalizer has an infinite loop bug in it, then no finalizers after it will ever run. This can lead to memory leaks. For all these reasons, you should not rely on finalizers to clean up the state external to your process.

Avoid Large Object Allocations

Not all allocations go to the same heap. Objects over a certain size go to the large object heap and are immediately in gen 2. You should avoid allocations on the large object heap as much as possible. Not only is collecting garbage from this heap more expensive, but it is also more likely to fragment, causing unbounded memory growth over time. Continuous allocations on the large object heap send a strong signal to the garbage collector to do continuous (gen 2) garbage collections, which is not a good place to be in.

Avoid Copying Buffers

You should usually avoid copying data whenever you can. For example, suppose you have read file data into a MemoryStream (preferably a pooled one if you need large buffers). Once you have that memory allocated, treat it as read-only and every component that needs to access it will read from the same copy of the data.

A common requirement, then, is to refer to sub-ranges of a buffer, array, or memory range. .NET provides two ways to accomplish this at present. The first option, available only for arrays, is the ArraySegment struct to represent just a portion of the underlying array. This ArraySegment can be passed around to APIs independent of the original stream, and you can even attach a new MemoryStream to just that segment. Throughout all of this, no copy of the data has been made.

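```csharp
using System;
using System.IO;

var memoryStream = new MemoryStream(2048);
// A view over 1,024 bytes of the stream's internal buffer, starting at offset 100; nothing is copied.
var segment = new ArraySegment<byte>(memoryStream.GetBuffer(), 100, 1024);
// A second stream over just that segment, still sharing the same array.
var blockStream = new MemoryStream(segment.Array, segment.Offset, segment.Count);
```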

The biggest problem with copying memory is not necessarily the CPU, but the GC. If you find yourself needing to copy a buffer, try to copy it into another pooled or existing buffer to avoid any new memory allocations. A newer option for representing pieces of existing buffers is the Span<T> struct, a ref struct that lives on the stack rather than on the managed heap.

A Span<T> represents a contiguous region of arbitrary memory. A Span<T> instance is often used to hold the elements of an array or a portion of an array. Unlike an array, however, a Span<T> instance can point to managed memory, native memory, or memory managed on the stack.

```csharp
using System;

// Create a span over an array.
var array = new byte[100];
var arraySpan = new Span<byte>(array);

byte data = 0;
for (int ctr = 0; ctr < arraySpan.Length; ctr++)
    arraySpan[ctr] = data++;

// The span wrote directly into the array: no copy was made.
int arraySum = 0;
foreach (var value in array)
    arraySum += value;

Console.WriteLine($"The sum is {arraySum}");
// Output: The sum is 4950
```

The following example creates a Span<Byte> from 100 bytes of native memory:

```csharp
using System;
using System.Runtime.InteropServices;

// Create a span from native memory (requires <AllowUnsafeBlocks>true</AllowUnsafeBlocks>).
var native = Marshal.AllocHGlobal(100);
Span<byte> nativeSpan;
unsafe
{
    nativeSpan = new Span<byte>(native.ToPointer(), 100);
}

byte data = 0;
for (int ctr = 0; ctr < nativeSpan.Length; ctr++)
    nativeSpan[ctr] = data++;

int nativeSum = 0;
foreach (var value in nativeSpan)
    nativeSum += value;

Console.WriteLine($"The sum is {nativeSum}");
Marshal.FreeHGlobal(native);
// Output: The sum is 4950
```

There are also utility methods to convert from arrays and ArraySegment structs to Span structs.
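A short sketch of those conversions, using the AsSpan extension methods and the implicit ArraySegment<T> to Span<T> conversion. The buffer sizes simply mirror the earlier ArraySegment example; slicing and writing through the spans shows that no data is copied:

```csharp
using System;

byte[] buffer = new byte[2048];
var segment = new ArraySegment<byte>(buffer, 100, 1024);

// Array -> Span over a sub-range (no copy).
Span<byte> fromArray = buffer.AsSpan(100, 1024);
// ArraySegment<T> -> Span<T>, via AsSpan() or the implicit conversion.
Span<byte> fromSegment = segment.AsSpan();
Span<byte> implicitSpan = segment;
// Slicing a span is also copy-free.
Span<byte> firstTen = fromSegment.Slice(0, 10);

firstTen[0] = 7; // writes straight through to buffer[100]

Console.WriteLine($"buffer[100] = {buffer[100]}, lengths: {fromArray.Length}, {implicitSpan.Length}, {firstTen.Length}");
```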

Pool Long-Lived and Large Objects

Objects should live very briefly or forever: they should either go away in gen 0 collections or last forever in gen 2. Some objects do not obviously need to last forever, but their natural lifetime in the context of your program ensures they will live longer than the period of a gen 0 (and maybe gen 1) garbage collection. These types of objects are candidates for pooling. Another strong candidate for pooling is any object that you allocate on the large object heap, typically collections.

ArrayPool<T> is a high-performance pool of managed arrays. You can find it in the System.Buffers namespace (shipped as the System.Buffers package for older targets), and its source code is available on GitHub.

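```csharp
using System.Buffers;

int minLength = 4096; // example size
var samePool = ArrayPool<byte>.Shared;
byte[] buffer = samePool.Rent(minLength); // may be longer than minLength
try
{
    Use(buffer);
}
finally
{
    samePool.Return(buffer);
    // don't use the reference to the buffer after returning it!
}

void Use(byte[] array) // it's an array
{
    // work with the first minLength elements of array here
}
```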

How to Use It

- Recommended: use the ArrayPool<T>.Shared property, which returns a shared pool instance. It is thread-safe, and all you need to remember is that it has a default maximum array length of 2^20 (1024 * 1024 = 1,048,576). Larger requests still succeed, but those arrays are freshly allocated and not pooled.

- Call the static ArrayPool<T>.Create method, which creates a thread-safe pool with a custom maxArrayLength and maxArraysPerBucket. You might need it if the default maximum array length is not enough for you. Be warned that once you create it, you are responsible for keeping it alive.

- Derive a custom class from abstract ArrayPool<T> and handle everything on your own.

Remember that a pool that never dumps objects is indistinguishable from a memory leak. Your pool should have a bounded size (in either bytes or number of objects), and once that has been exceeded, it should drop objects for the GC to clean up. Ideally, your pool is large enough to handle normal operations without dropping anything and the GC is only needed after brief spikes of unusual activity.
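ArrayPool<T>.Create offers one way to get such a bound: maxArraysPerBucket caps how many arrays of each size the pool retains, and arrays returned beyond that cap are simply dropped for the GC to collect. A small sketch; the sizes are illustrative assumptions, not recommendations:

```csharp
using System;
using System.Buffers;

// A private pool that retains at most 8 arrays per size bucket (illustrative numbers).
ArrayPool<byte> pool = ArrayPool<byte>.Create(maxArrayLength: 1024 * 1024, maxArraysPerBucket: 8);

byte[] first = pool.Rent(4096);  // the bucket is empty, so a new array is allocated
pool.Return(first);              // the pool keeps it for reuse
byte[] second = pool.Rent(4096); // served from the pool: no new allocation
bool reused = ReferenceEquals(first, second);
pool.Return(second);

Console.WriteLine($"Array length: {second.Length}, reused: {reused}");
```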

Use Weak References For Caching

Weak references are references to an object that still allow the garbage collector to clean up the object. They are in contrast to the default strong references, which prevent collection completely (for that object). They are mostly useful for caching expensive objects that you would like to keep around but are willing to let go if there is enough memory pressure. Weak references are a core CLR concept that is exposed through a couple of .NET classes:

- WeakReference

- WeakReference<T>

Example of usage:

```csharp
// The underlying Foo object can be garbage collected at any time!
WeakReference<Foo> weakRef = new WeakReference<Foo>(new Foo());

// Create a strong reference to the object,
// now no longer eligible for GC
Foo myFoo;
if (weakRef.TryGetTarget(out myFoo))
{
    // ...
}
```

You can still have other references, both strong and weak, to the same object. The collection will only happen if the only references to it are weak (or nonexistent).

Most applications do not need to use weak references at all, but some criteria may indicate good usage:

- Memory use needs to be tightly restricted (such as on mobile devices).

- Object lifetime is highly variable. If your object lifetime is predictable, you can just use strong references and control their lifetime directly.

- Objects are large, but easy to create. Weak references are ideal for objects that would be nice to have around, but if they are not, you can easily regenerate them or do without. (Note that this also implies that the object size should be significant compared to the overhead of using the additional WeakReference objects in the first place).
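A minimal sketch of the caching use case, assuming a hypothetical GetOrCreate helper and a large byte array standing in for an object that is expensive to keep but easy to rebuild. The cache holds only WeakReference<T> entries, so anything your code is not currently using can be reclaimed under memory pressure and is rebuilt on the next request:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache: entries are held only weakly, so the GC may reclaim them.
var cache = new Dictionary<string, WeakReference<byte[]>>();
int creations = 0;

byte[] GetOrCreate(string key)
{
    // Reuse the cached object if the GC has not collected it yet.
    if (cache.TryGetValue(key, out var weak) && weak.TryGetTarget(out var cached))
        return cached;

    // Otherwise rebuild it (a stand-in for an expensive computation or load).
    creations++;
    var fresh = new byte[100_000];
    if (weak is null)
        cache[key] = new WeakReference<byte[]>(fresh);
    else
        weak.SetTarget(fresh);
    return fresh;
}

byte[] first = GetOrCreate("report");  // created and cached weakly
byte[] second = GetOrCreate("report"); // served from the cache, since `first` keeps it alive
Console.WriteLine($"Same instance: {ReferenceEquals(first, second)}, creations: {creations}");
```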

Bonus links:

https://github.com/Microsoft/Microsoft.IO.RecyclableMemoryStream

https://www.codeproject.com/Articles/1191534/To-Heap-or-not-to-Heap-That-s-the-Large-Object-Que

https://www.amazon.com/Writing-High-Performance-NET-Code-Watson-ebook/dp/B07BF68842

https://www.amazon.com/Konrad-Kokosa-ebook/dp/B07KGKGK8K

Thanks for reading! I hope this will be helpful not only for .NET experts but also for .NET engineers who are just starting their journey into the programming world.

Published at DZone with permission of Nazar Kvartalnyi. See the original article here.

