Data Orchestration: What Is It, and Why Is It Important?

Everything you need to know about data orchestration.

I first heard the term "data orchestration" earlier this year at a technical meetup in the San Francisco Bay Area. The presenter was Bin Fan, founding engineer and PMC maintainer of the Alluxio open source project.

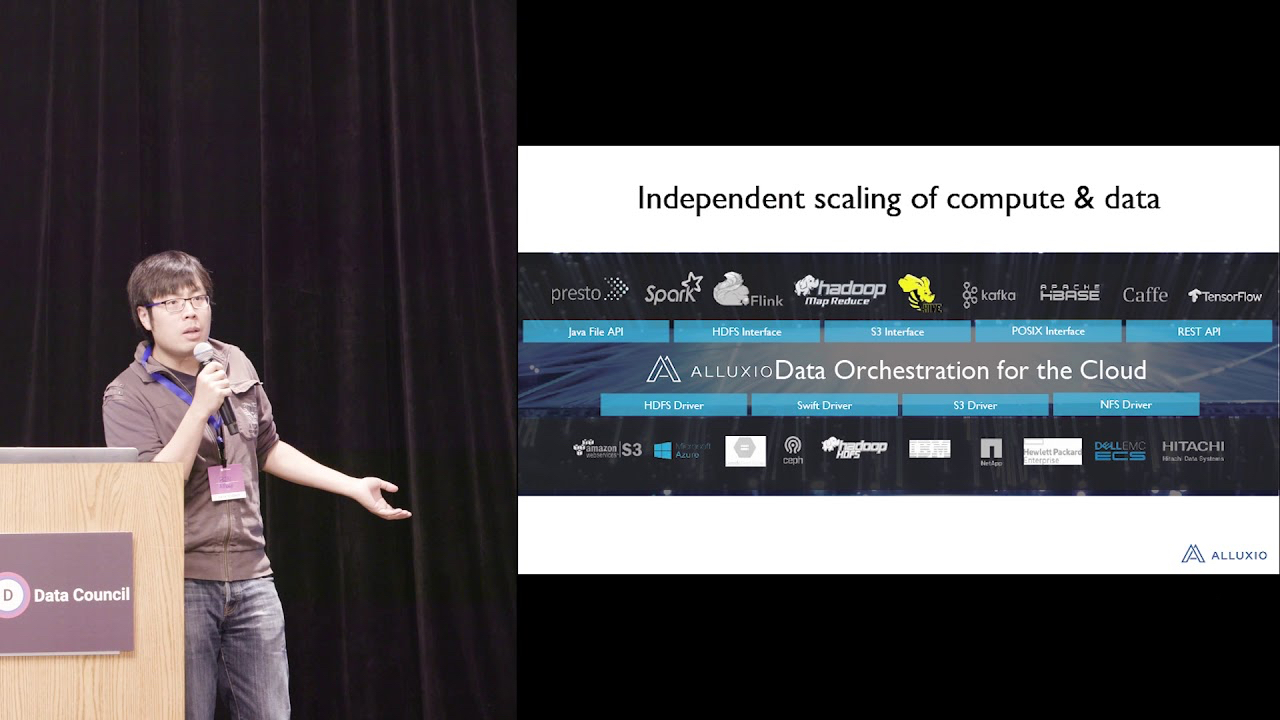

Bin explained that data orchestration is a relatively new term. A data orchestration platform, he said, "brings your data closer to compute across clusters, regions, clouds, and countries."

He described it as being similar to container orchestration, which is the automated process of managing and scheduling individual containers (for example, Docker containers) for microservice-based applications across multiple clusters, the job performed by tools such as Kubernetes.

I wanted to learn more, so I scheduled a Skype interview with Bin a couple of days ago. Here's our conversation.

Tom: Could you explain the concept of data orchestration in a nutshell to people who may not be familiar with it?

Bin: Absolutely. Data orchestration is a relatively new concept to describe the set of technologies that abstracts data access across storage systems, virtualizes all the data, and presents the data via standardized APIs with a global namespace to data-driven applications. There is a clear need for data orchestration because of the increasing complexity of the data ecosystem due to new frameworks, cloud adoption/migration, as well as the rise of data-driven applications. Here is a blog post from one of our co-founders that goes into more detail on this concept.
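To make the idea of a global namespace more concrete, here is a minimal Python sketch. It assumes a toy setup in which two in-memory dictionaries stand in for an on-premises cluster and a cloud object store. The names `InMemoryStore`, `GlobalNamespace`, `mount`, `resolve`, and `read` are hypothetical illustrations of the concept, not Alluxio's actual API. The sketch shows only that applications read every path through one API while the namespace decides which storage system holds the data.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class InMemoryStore:
    """Hypothetical stand-in for one underlying storage system."""
    name: str
    objects: dict[str, bytes] = field(default_factory=dict)

    def read(self, key: str) -> bytes:
        return self.objects[key]


class GlobalNamespace:
    """Hypothetical sketch: maps mount points in one logical path tree to stores."""

    def __init__(self) -> None:
        self._mounts: dict[str, InMemoryStore] = {}

    def mount(self, prefix: str, store: InMemoryStore) -> None:
        # Normalize so "/lake" and "/lake/" refer to the same mount point.
        self._mounts[prefix.rstrip("/") + "/"] = store

    def resolve(self, path: str) -> tuple[InMemoryStore, str]:
        # Longest-prefix match lets a nested mount take precedence over its parent.
        for prefix in sorted(self._mounts, key=len, reverse=True):
            if path.startswith(prefix):
                return self._mounts[prefix], path[len(prefix):]
        raise FileNotFoundError(f"no mount point covers {path!r}")

    def read(self, path: str) -> bytes:
        # Applications call one API; the namespace routes to the right store.
        store, key = self.resolve(path)
        return store.read(key)


# Usage: two different "storage systems" appear under one path tree.
ns = GlobalNamespace()
ns.mount("/warehouse", InMemoryStore("hdfs", {"sales/2019.csv": b"on-prem"}))
ns.mount("/lake", InMemoryStore("object-store", {"events/day1.json": b"cloud"}))
print(ns.read("/warehouse/sales/2019.csv"))  # b'on-prem'
print(ns.read("/lake/events/day1.json"))     # b'cloud'
```

A real data orchestration layer would also handle concerns such as caching and metadata management. This sketch covers only the mapping from logical paths to physical storage.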

Tom: What are the biggest pain points that you hear from data engineers in which data orchestration can help?

Bin: In the “old days” (maybe just two or three years ago) most data engineers were working in the environment of an on-premise data warehouse. They had their own self-managed cluster running Hive and Spark for ELT, analytics, or other workloads. There were many challenges associated with maintaining such a large and complex ecosystem. For system deployment, maintenance, upgrading, performance tuning or troubleshooting, engineers had to have a deep understanding of each part of the entire stack.

In the “new world,” more and more enterprises and users are moving to public clouds like AWS, Google Cloud, or Microsoft Azure. These cloud providers are doing an extremely good job of simplifying tasks such as launching a cluster or running a query with one click. Nowadays, you typically need just a single command to deploy Alluxio, Presto, Spark, Hive, and so on. The cloud providers also offer their own object stores as the data lake.

For data engineers, these developments mean faster ramp-up times, simpler installations, and faster time to insight. On the other hand, the cloud is more of a “black box,” and many popular existing data and metadata stores were designed without the cloud in mind as a place where data would be stored. As a result, running an existing or legacy data pipeline directly on the cloud can introduce a lot of inefficiencies, because the stack wasn’t designed for this purpose. This is another area in which Alluxio can help simplify the lives of data engineers working in the cloud.

Tom: You mentioned the increase in cloud adoption as one of the trends driving the need for data orchestration. What are you seeing?

Bin: We’ll touch upon industry trends and offer predictions about where the industry is headed in the long term. For me, one obvious trend is that people are moving to the cloud and saying goodbye to their self-maintained on-premises data warehouses. They are moving more and more workloads and data to the cloud. Alluxio’s data orchestration platform was born to help users embrace this trend more quickly and smoothly.

Another trend we’ll share is the use of Kubernetes as the abstraction layer. Combined with the move to the cloud, this means a lot of services are getting more elastic and ephemeral. Running a service becomes so easy that when you don’t need that service or request traffic is low, you can size it down or turn it off. This was typically difficult before with on-premise data warehouses.

In the cloud, you “rent” everything, so to speak. That means things are getting more ephemeral and more dynamic, and you need help on the tuning side to make everything more efficient. That’s when compute and storage become more elastic, and the question of how to embrace that elasticity becomes challenging. That’s another area where data orchestration can help.

Tom: As an industry, we’ve been hearing about the trend of moving to the cloud for a few years. But now it’s actually happening, rather than just being talked about. What’s changed to finally motivate companies to take the leap to the cloud?

Bin: Three or four years ago, many people believed that startups were the organizations using the cloud because they didn’t have to build anything upfront. But once they grew to a certain stage, they would leave the cloud and build their own data warehouse to reduce costs. That was the assumption, anyhow.

What we’re seeing, in reality, is the opposite. New companies are using the public cloud, but so are older, established companies. What’s driving this trend? In my view, it’s because the cost of operating in the public cloud today is lower than that of an on-premises data warehouse. Also, workloads are typically bursty, and in the cloud you just pay as you go. Today, that simply makes more sense. I believe moving to the cloud is the future.

* * *

Bin will be sharing more at the first annual Data Orchestration Summit, November 7, at the Computer History Museum in Mountain View, California.

Opinions expressed by DZone contributors are their own.