A Comparison of Current Kubernetes Distributions

In this article, we discuss some of the many options for Kubernetes distributions to help as you choose the right flavor for your use case.

This is an article from DZone's 2021 Kubernetes and the Enterprise Trend Report.

There are many options for running and deploying Kubernetes: managed services such as Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS), alongside third-party build and deployment tools like Spinnaker and Jenkins. Large enterprises often rely on a cloud-first strategy for digital transformation, and a cloud-agnostic architecture suits that strategy well. This article discusses some of the many Kubernetes distributions; it is not an exhaustive list. It covers adopting a cloud-native architecture, the various K8s distributions, and selecting the right option for your use case.

Some best practices to keep in mind when designing a cloud-agnostic architecture are:

- Run microservices in containers (Docker images orchestrated by Kubernetes), for example on GKE, EKS, or AKS.

- Use cloud-neutral solutions like Terraform templates to configure container orchestration in GKE, EKS, or AKS instead of a provider-native tool like ARM templates, CloudFormation, or Deployment Manager, so that the setup stays flexible enough for a multi-cloud scenario.

- Automate container packaging and deployment as much as possible.

- Avoid services that lock you in to a single vendor.

- Build microservices with a portable framework such as Spring Boot so that they can be deployed on AWS, Azure, or GCP.

- Automate configuration (Infrastructure as Code) to reduce cloud service provider dependency for networks, storage, and API configuration.

- Integrate cloud-native directory and authentication services (e.g., Cognito, Google Directory, Azure AD).

- Templatize application setup for microservices, build, test, and deployment.

- Be flexible to integrate with any API platform using native API gateway services.
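
To make the templating practice above concrete, here is a minimal sketch of generating a Kubernetes Deployment from one provider-neutral template. The function name `deployment_manifest`, the service name `orders`, and the image reference are hypothetical and chosen only for illustration. Nothing in the output is specific to GKE, EKS, or AKS.

```python
import json


def deployment_manifest(name, image, replicas=2, port=8080):
    """Build a provider-neutral Kubernetes Deployment as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # "orders" and the registry URL are placeholder values.
    manifest = deployment_manifest("orders", "registry.example.com/orders:1.0")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed manifest can be piped to `kubectl apply -f -` against whichever cluster the current context points to.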

Docker Kubernetes Service, OpenShift Kubernetes Engine, and Pivotal Cloud Foundry Container Service

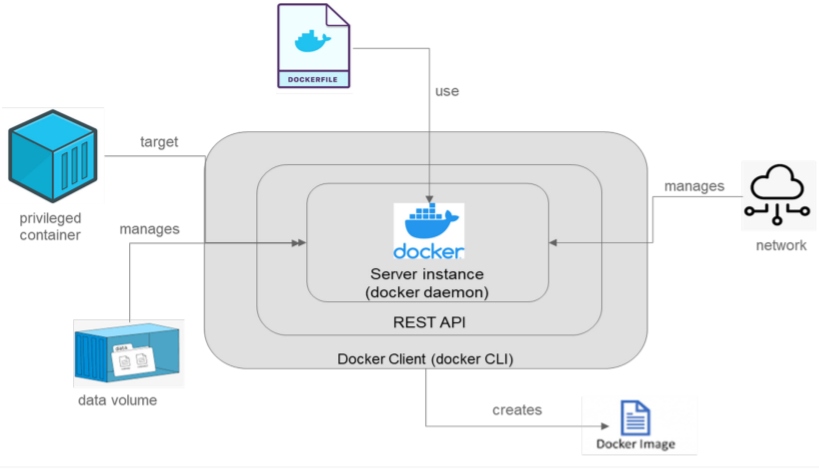

When bringing legacy applications to the cloud in containers, bundling Docker, Kubernetes, and OpenShift together offers a powerful architectural option. Docker is lightweight, simple to set up, and works directly on application nodes, whereas Kubernetes works on clustered instances and is abstracted to nodes and pods to deploy multiple runtime services.

Docker Kubernetes Service (DKS) uses a certified Kubernetes distribution and Docker Enterprise 3.0 to accelerate Kubernetes application development. You can build Kubernetes applications using Version Packs, which run in Kubernetes developer environments and can be kept synchronized with a production environment.

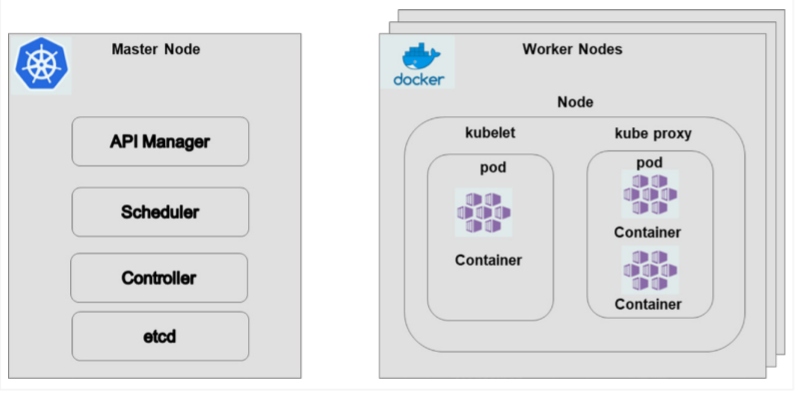

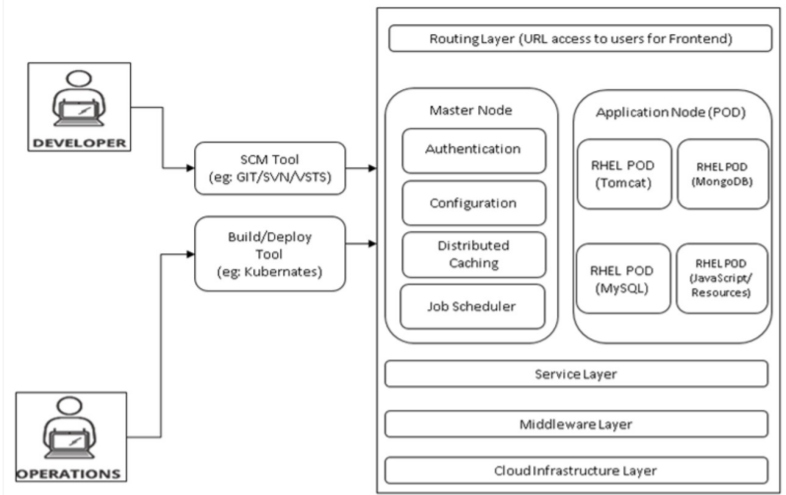

OpenShift and Pivotal Cloud Foundry are popular for private cloud environments, offering services for the flexible development and deployment of containerized applications that can be ported to a public cloud. OpenShift Kubernetes clusters have a master node that controls worker or application nodes. The master node contains centralized functions like caching, job scheduling for batch processing, configuration for pod setup, and authentication for gateway access. Meanwhile, application or worker nodes will house the application workload, such as a web app or database instance. Each application node can have multiple pods.

Because the master node controls the entire container operation and helps build highly available clusters, it is called the control plane of the OpenShift Kubernetes Engine. Each node runs a small agent called a kubelet that monitors and manages the containers running in pods as determined by the pod configuration. Each Kubernetes instance is connected to a web GUI or dashboard that helps manage, monitor, and troubleshoot application nodes running in the cluster. The OpenShift container architecture also includes native monitoring and logging services.

As for Pivotal Cloud Foundry, when large enterprises plan a cloud adoption roadmap, they tend to think about failing fast or having multiple short-term goals. Banking enterprises particularly want to take a two-fold approach during cloud migration — first, adopting architectural upgrades while transitioning from monolithic apps to microservices or adopting a private cloud with a containerized microservices solution, then moving to a public cloud for selected applications. Using Pivotal Cloud Foundry and OpenShift reduces migration complexity and helps create a lean, cost-effective implementation.

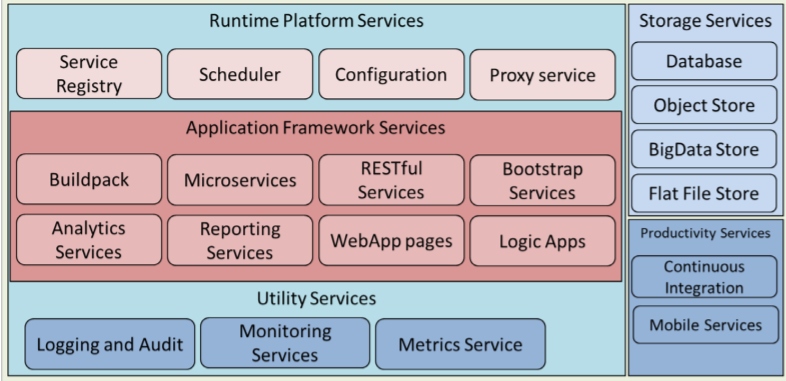

Pivotal Cloud Foundry has a multi-layered architecture, with groups of services for the runtime platform as the base platform integration service; application framework services, where user applications reside; and utility services, which cut across multiple applications. It also supports storage services like any other public cloud provider.

It also offers API integration flexibility for hybrid cloud solutions and security services for role-based access, component authorization, application gateway authentication, and application-level security filters. Containerization using Cloud Foundry buildpacks is handy: the resulting services are provider-agnostic and can later be migrated to a public cloud as a lift-and-shift solution.

Google Kubernetes Engine

GKE is a strong option for containerization when you have to deploy Dockerized applications using Kubernetes, or when you want to combine enhanced native security features with container orchestration and self-healing, such as node auto-repair driven by health checks and cluster monitoring. GKE has two modes of operation. Standard mode is the classic approach, in which you manage the Kubernetes configuration and node infrastructure yourself, whereas the newer Autopilot mode provides a fully managed setup that reduces node management operations and improves cluster efficiency.

With an Autopilot-managed GKE setup, cluster infrastructure is provisioned automatically based on workload specifications, and node infrastructure is managed according to industry best practices. Autopilot mode helps reduce costs by charging only for optimized resource usage and, at the same time, aims to improve container and cluster node performance while ensuring high availability and auto-scalability through self-healing node infrastructure.
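
Since provisioning follows the workload specification, it helps to know what a workload actually asks for. The sketch below totals the CPU and memory requests of a pod template across its replicas. It assumes that declared requests are what drive provisioning and cost in Autopilot, and it handles only a small subset of Kubernetes quantity suffixes (`m` for CPU, `Ki`/`Mi`/`Gi` for memory). All helper names are hypothetical.

```python
def parse_cpu(quantity):
    """Convert a Kubernetes CPU quantity ("500m" or "2") to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


# Binary suffixes only; decimal suffixes such as "M" or "G" are not handled.
_MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_memory(quantity):
    """Convert a memory quantity with a Ki/Mi/Gi suffix to bytes."""
    for suffix, factor in _MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


def total_requests(containers, replicas):
    """Return (cores, bytes) requested by all replicas of a pod template."""
    cpu = sum(parse_cpu(c["requests"]["cpu"]) for c in containers)
    mem = sum(parse_memory(c["requests"]["memory"]) for c in containers)
    return cpu * replicas, mem * replicas


if __name__ == "__main__":
    # Two containers per pod, three replicas; values are illustrative.
    containers = [
        {"requests": {"cpu": "500m", "memory": "512Mi"}},
        {"requests": {"cpu": "250m", "memory": "256Mi"}},
    ]
    cores, mem_bytes = total_requests(containers, replicas=3)
    print(f"{cores} cores, {mem_bytes / 2**20:.0f} Mi")
```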

Rancher Kubernetes Service

Rancher is a popular open-source container orchestration and build service that supports both Docker and Kubernetes and builds containers from predefined templates. With Rancher, there is no need to build complete node instances from the ground up. Rancher is desirable when you want to quickly build containers integrated with network and storage services, without the overhead of manually configuring Kubernetes clusters and nodes for demanding workloads such as database or middleware applications.

Apache Mesos Kubernetes Engine

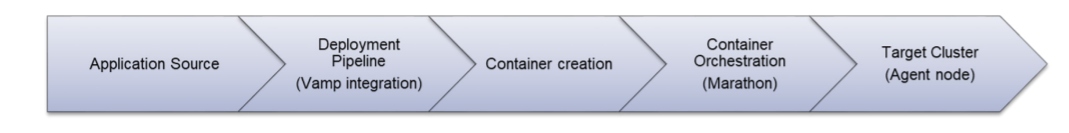

Mesos is built and deployed using a native tool called Vamp, which is a deployment and workflow tool capable of deploying container orchestrator services like Mesos and Marathon in agent nodes. It is an auto-scalable and self-healing service accessed through a web CLI or REST API integration with the cloud-native pipeline shown below.

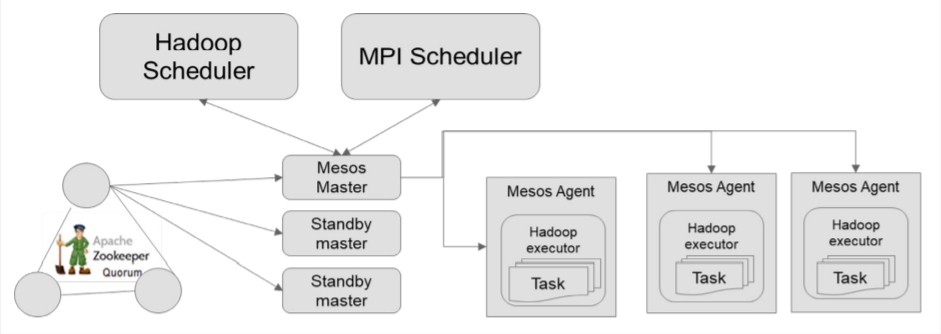

The heart of Mesos is the master node, a daemon that manages the agent nodes. Agents run on the cluster nodes and execute the Mesos tasks. The master node enables fine-grained slicing of resources like CPU and memory across the agent nodes to run those tasks. Schedulers and executors are two important components that work with the master node. A framework's scheduler registers with the master node and decides which offered resources to use, and the executor runs the resulting tasks on the agents. In short, the master node decides how many resources to offer each framework, and the scheduler decides how to use them for task execution.
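
The split of responsibilities between master and scheduler can be sketched as a toy simulation. This is a simplified model under stated assumptions, not the Mesos API: the `Offer` and `Task` classes, the `schedule` function, and the first-fit policy are all hypothetical. The list of offers plays the role of the master, and `schedule` plays the role of a framework scheduler deciding which tasks to launch on each offer.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Resources the master offers from one agent."""
    agent: str
    cpus: float
    mem_mb: int


@dataclass
class Task:
    """A unit of work the framework wants to run."""
    name: str
    cpus: float
    mem_mb: int


def schedule(offers, tasks):
    """First-fit placement: for each offer, launch every pending task that still fits."""
    placements = {}
    pending = list(tasks)
    for offer in offers:
        cpus, mem = offer.cpus, offer.mem_mb
        still_pending = []
        for task in pending:
            if task.cpus <= cpus and task.mem_mb <= mem:
                placements[task.name] = offer.agent
                cpus -= task.cpus
                mem -= task.mem_mb
            else:
                still_pending.append(task)
        pending = still_pending
    return placements, [t.name for t in pending]


if __name__ == "__main__":
    offers = [Offer("agent-1", 2.0, 4096), Offer("agent-2", 1.0, 2048)]
    tasks = [
        Task("etl", 1.5, 3072),
        Task("stream", 1.0, 1024),
        Task("report", 4.0, 1024),  # larger than any single offer
    ]
    placed, waiting = schedule(offers, tasks)
    print(placed)   # {'etl': 'agent-1', 'stream': 'agent-2'}
    print(waiting)  # ['report']
```

A task that fits no offer simply stays pending until a later round of offers, which is why the sketch returns the unplaced task names alongside the placements.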

Since Apache Mesos uses distributed resource scheduling as part of its engine, it is preferred for containerizing stream processing and batch processing workloads. Netflix is a well-known user of Apache Mesos containers, often noted for its streaming analytics and batch operations in media repurposing activities.

Microsoft Azure

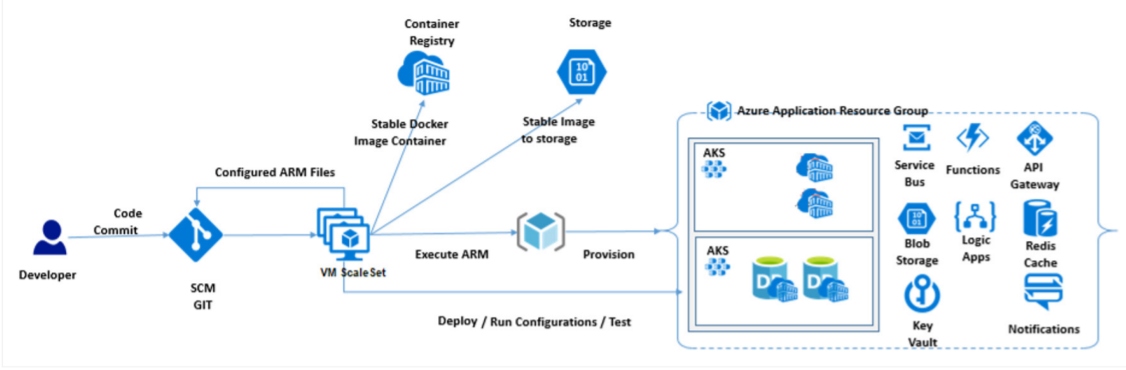

If you have an application estate running on the Microsoft tech stack (e.g., .NET, Windows runtime applications), it will fit easily into Azure's container services, though those services are not limited to that stack. Azure containers are flexible and can run on the public cloud as well as on a private cloud through Azure Stack Hub. Azure offers several container services, including two native ones: Azure Kubernetes Service and Azure Service Fabric. It also supports third-party containerization solutions like OpenShift, which can be deployed on Azure to create the container environment. Likewise, services built with Pivotal Container Service can be deployed on Azure and used on both public and private Azure cloud platforms.

Azure provides native services that help monitor and manage applications by analyzing application usage/consumption across different application containers. Tactically, we can configure native Azure monitoring services to watch and tune application groups as needed. As a strategic solution suitable for multi-cloud management, tools like Cisco AppDynamics can be extended to monitor different services on Azure.

Amazon Web Services (AWS)

AWS provides multiple containerization choices, such as Elastic Container Service (ECS), EKS, and the newer AWS Fargate. ECS and EKS are primarily meant for container orchestration. ECS is an AWS-native managed service, and EKS is the AWS managed Kubernetes implementation. Fargate works differently from both: it is a serverless compute engine that runs containers for ECS and EKS without EC2 instances for you to manage. Users don’t have to worry about configuring the underlying infrastructure, which enables faster time to market. Fargate can also be cheaper, since you pay only for computing time and not for the underlying EC2 resources and instances.

If you are familiar with Docker container handling, choose an ECS-based architecture. If you are more familiar with Kubernetes, choose an EKS-based architecture. If you are new to native container programming, you can choose Fargate. EKS is better for cloud-agnostic solutions, since Kubernetes workloads can run on the equivalent services of other cloud providers with little or no disruption or change in service level. But if you are looking for a "bin packing" solution with seamless automatic resource management for containers, then ECS helps automate deployment, scale services, and self-manage containerized applications.

Oracle Kubernetes Engine

Oracle Cloud Infrastructure (OCI) provides dozens of cloud services and infrastructure facilities to run popular database applications like Oracle DB and application servers like WebLogic Server for web applications. OCI has a native facility for running Kubernetes through its Oracle Kubernetes Engine (OKE), which uses a multi-node architecture to run container workloads on the OCI platform. OKE includes components like etcd, a key-value store that holds cluster data and runs on resources separate from those that handle workload data.

OKE exposes APIs through the Kubernetes API server to interact with node instances and their interfaces. Overall management is handled by Kubernetes controllers, which deal with node failure, node creation, and other node management activities. Individual application workloads run on Kubernetes nodes, also called Kubernetes workers, which are VMs; pods are placed on those nodes by the Kubernetes scheduler. OKE can be deployed across multiple regions and fronted by a load balancer for high availability and better handling of application requests. Security is managed in the OKE architecture through OAuth2, SSH keys, or authentication services.

Conclusion

Kubernetes is always improving its features and architecture, thanks to strong community support. For example, when we design a container solution, important aspects are scalability and reliability, so that applications can adjust to traffic changes. At the same time, we want to limit costs, which leads to a "pay-as-you-go" approach. Early on, a cloud administrator had to configure scaling on a schedule based on foreseen situations, like scaling up nodes at the end of the day for batch processing and scaling down nodes during idle time.

Now, many popular Kubernetes platforms (as explained above) include autoscaling facilities that cover both cluster autoscaling (adding and removing nodes) and pod autoscaling (adding and removing pod replicas), which greatly simplifies the Kubernetes architecture and implementation.
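
For pod autoscaling, the Kubernetes documentation describes the core calculation of the Horizontal Pod Autoscaler as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below implements that formula with a clamp to a minimum and maximum replica count. The function name and default bounds are hypothetical, and the real controller also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count by the ratio of observed to target metric."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))   # 6
    # 4 pods averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30, 60))   # 2
    # A large spike is capped at max_replicas.
    print(desired_replicas(4, 600, 60))  # 10
```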

When we talk about containers, we typically care about application containers or data containers to handle service layers during containerization. The default architecture is stateless containers, but for transactional applications, application state plays an important role. Kubernetes addresses this with StatefulSet, a native feature (originally introduced as PetSet) that manages stateful workloads by giving pods stable identities and storage. This is supported in AKS for Azure, EKS for AWS, and GKE for Google Cloud with flexible configurations.
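
As an illustration of how stateful workloads differ from stateless ones, here is a minimal sketch of a StatefulSet manifest built as a Python dict. The helper names, the `db` workload, and the image reference are hypothetical, and the manifest assumes a headless Service with the same name already exists. The second helper shows the stable, ordinal pod names (`<name>-0`, `<name>-1`, and so on) that a StatefulSet assigns.

```python
def statefulset_manifest(name, image, replicas=3, storage="1Gi"):
    """Build a StatefulSet with one persistent volume claim per pod."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Assumption: a headless Service called `name` is created separately.
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                        }
                    ]
                },
            },
            # Each replica gets its own claim created from this template.
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": "data"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "resources": {"requests": {"storage": storage}},
                    },
                }
            ],
        },
    }


def pod_names(manifest):
    """Return the stable pod names a StatefulSet assigns, in ordinal order."""
    name = manifest["metadata"]["name"]
    return [f"{name}-{i}" for i in range(manifest["spec"]["replicas"])]


if __name__ == "__main__":
    sts = statefulset_manifest("db", "registry.example.com/db:1.0")
    print(pod_names(sts))  # ['db-0', 'db-1', 'db-2']
```

Unlike the pods of a Deployment, these pods keep their names and volume claims across rescheduling, which is what lets a transactional application find its own data again.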
