Counting Distinct Users in Real-Time With Redis Using Low Memory

In this article, take a look at how to handle a million distinct user records by allocating less than 2MB of memory in Redis Cache server.

Join the DZone community and get the full member experience.

Join For FreeIf you are developing an event-based application that handles many requests from different users, you most likely want to count distinct user action within a sliding window or a specified time range.

One of the quickest ways to count distinct user is to prepare an SQL like SELECT count(distinct user) from ACTION_TABLE. But, this might be expensive if there are millions of records produced in real time.

Another way that comes to mind is keeping a distinct user in a hashset.

But, this solution brings its own custom problems. If distinct user calculator application runs with multiple instances, we need an in-memory cache solution with huge RAM sizes. If you are dealing with 10 million distinct records, each of which allocates 10byte, you need at least 100MB ram just for one time frames. So, this is not a memory-efficient solution.

In this article, I want to show you how to handle a million distinct user records by allocating less than 2MB memory in Redis Cache server.

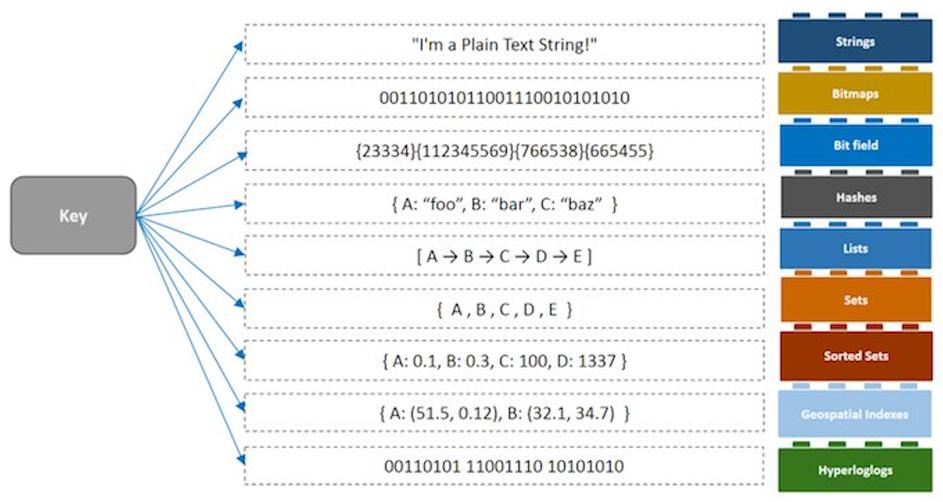

Redis has many data structures, such as Strings, Bitmaps, Bit fields, and so forth. I want to emphasize Hyperloglog, which is the most suitable for counting distinct user actions via consuming less memory.

Hyper LogLog

The Hyper LogLog Counter's name is self-descriptive. The name comes from the fact that you can estimate the cardinality of a set with cardinality Nmax using just loglog(Nmax) + O(1) bits.

Redis Hyperloglog Operations

There are three commands to make all hyperloglog operations possible.

- PFADD

- PFCOUNT

- PFMERGE

Let me explain these commands in a real-world scenario in which the user logs into the system and we need to count distinct users within an hour. So, we need a key name, like USER-LOGIN-2018123010. In other words, we will be keeping the distinct user count where user login action occurred between 10AM-11AM at 30th December in 2018. We will also use keys corresponding to the upcoming time, such as 2018123011, 2018123012, 2018123013, and so on.

Users A,B,C,D,E, and F logged on system at between 10am and 11 am.

redis:6379> pfadd USER-LOGIN-2018123010 A

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123010 B

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123010 C

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123010 D

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123010 E F

(integer) 1

redis:6379>

When you count them all, you get 6 as expected.

redis:6379> pfcount USER-LOGIN-2018123010

(integer) 6

If A and B logged into system many times, you will get same result because we are only skeeping distinct users.

redis:6379> pfadd USER-LOGIN-2018123010 A

(integer) 0

redis:6379> pfadd USER-LOGIN-2018123010 B

(integer) 0

redis:6379> pfcount USER-LOGIN-2018123010

(integer) 6

If the same users below and one more additional user(G) logged into the system between 11 am and 12 pm:

redis:6379> pfadd USER-LOGIN-2018123011 A

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123011 B

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123011 C

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123011 D

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123011 E F

(integer) 1

redis:6379> pfadd USER-LOGIN-2018123011 G

(integer) 1

redis:6379> pfcount USER-LOGIN-2018123011

(integer) 7

And now we have two keys USER-LOGIN-2018123010 and USER-LOGIN-2018123011 which are keeping distinct users if we want to know how many distinct users logged in to the system between 10 am and 12 pm (2 hours period) we just merge two keys. Therefore, we do not need any other key to count this one.

redis:6379> pfcount USER-LOGIN-2018123010 USER-LOGIN-2018123011

(integer) 7

If you need to keep key values without counting them again and again, you can merge keys into one key as following.

redis:6379> pfmerge USER-LOGIN-2018123010-11 USER-LOGIN-2018123010 USER-LOGIN-2018123011

OK

redis:6379> pfcount USER-LOGIN-2018123010-11

(integer) 7

After collected real-time data from production servers, we get the following result. As you can see that more than 185K distinct user action allocating only 10KB.

redis:6379> pfcount USER-LOGIN-20181009

(integer) 185987

redis:6379> debug object USER-LOGIN -20181009

Value at:0x7f3a89a7fa00 refcount:1 encoding:raw serializedlength:10567 lru:12770963 lru_seconds_idle:14

Sliding Window Distinct Count

To count distinct user in a sliding window, we need to specify less granularity, in our case, one minute is enough and keeping user actions within a key having format yyyyMMddHHmm like USER-LOGIN-201812301020.

When last-5 minutes distinct user action is queried, counting merged key at ones gives the result with 0.3% deviation.

>pfcount 201812301020 201812301021 201812301022 201812301023 201812301024

>12

So you need 60 keys for last one hour, 1440 keys for a last day, 10080 keys for last 7 days. The more keys we have, the more time we need to compute while merging them. Therefore, we should decrease the number of keys and started to keep user actions not only one key having yyyyMMddHHmm format but also hour, day and month time interval and used yyyyMM, yyyyMMdd, yyyyMMddHH.

With these new keys pfcount operation takes less time, for example:

If you want to count users’ last one day actions and use minute-key you need to merge all 1440 keys.

But if you use hour-key besides minutes-key, you need 60minute keys and 11 hour-key son we just need 71keys.

import org.apache.commons.lang3.time.DateUtils;

import java.text.SimpleDateFormat;

import java.util.*;

import java.util.stream.Collectors;

/**

* Created by Ahmet Karakaya on 25/09/2018.

*/

public class HLLUtils {

public static String TIME_FORMAT = "yyyyMMddHHmm";

private static String TIME_FORMAT_MONTH_DAY = "MMdd";

private static String TIME_FORMAT_DAY_MINUTES = "MMddHHmm";

private static String TIME_FORMAT_DAY_HOURS = "MMddHH";

static SimpleDateFormat FORMAT_MONTH_DAY = new SimpleDateFormat(TIME_FORMAT_MONTH_DAY);

static SimpleDateFormat FORMAT_DAY_HOURS = new SimpleDateFormat(TIME_FORMAT_DAY_HOURS);

static SimpleDateFormat FORMAT_DAY_MINUTES = new SimpleDateFormat(TIME_FORMAT_DAY_MINUTES);

public static List<String> parse(Date d1, Date d2) {

ArrayList list;

if (d1.compareTo(d2) == 0) return Collections.emptyList();

;

//if less than an hour, sum all minutes

long delta = d2.getTime() - d1.getTime();

if (delta == 0) return Collections.emptyList();

if (delta < DateUtils.MILLIS_PER_HOUR) {

int minutesDiff = (int) (delta / DateUtils.MILLIS_PER_MINUTE);

list = new ArrayList<String>();

Date d1_inc = d1;

while (d2.compareTo(d1_inc) == 1 && minutesDiff > 0) {

list.add(FORMAT_DAY_MINUTES.format(d1_inc));

d1_inc = DateUtils.addMinutes(d1_inc, 1);

}

//if less than an day, trim hours sum all, pass minutes part

} else if (delta < DateUtils.MILLIS_PER_DAY) {

list = new ArrayList<String>();

Date dateLastPortionHour = DateUtils.truncate(d2, Calendar.HOUR_OF_DAY);

list.addAll(parse(dateLastPortionHour, d2));

long delta2 = dateLastPortionHour.getTime() - d1.getTime();

int hoursDiff = (int) (delta2 / DateUtils.MILLIS_PER_HOUR);

Date d1_inc = DateUtils.addHours(dateLastPortionHour, -1 * hoursDiff);

while (dateLastPortionHour.compareTo(d1_inc) == 1 && hoursDiff > 0) {

list.add(FORMAT_DAY_HOURS.format(d1_inc));

d1_inc = DateUtils.addHours(d1_inc, 1);

}

list.addAll(parse(d1, DateUtils.addHours(dateLastPortionHour, -1 * hoursDiff)));

} else {

list = new ArrayList<String>();

Date dateLastPortionDay = DateUtils.truncate(d2, Calendar.DAY_OF_MONTH);

list.addAll(parse(dateLastPortionDay, d2));

long delta2 = dateLastPortionDay.getTime() - d1.getTime();

//if less than an month, trim days sum all, pass hours part

int daysDiff = (int) (delta2 / DateUtils.MILLIS_PER_DAY);

//Date d1_inc = d1;

Date d1_inc = DateUtils.addDays(dateLastPortionDay, -1 * daysDiff);

while (dateLastPortionDay.compareTo(d1_inc) == 1 && daysDiff > 0) {

list.add(FORMAT_MONTH_DAY.format(d1_inc));

d1_inc = DateUtils.addDays(d1_inc, 1);

}

list.addAll(parse(d1, DateUtils.addDays(dateLastPortionDay, -1 * daysDiff)));

}

return list;

}

public static List<String> getLastMinutes(Date date, int minutes) {

return parse(DateUtils.addMinutes(date, -1 * minutes), date);

}

public static List<String> getLastHours(Date date, int hours) {

return parse(DateUtils.addHours(date, -1 * hours), date);

}

public static List<String> getLastDays(Date date, int days) {

return parse(DateUtils.addDays(date, -1 * days), date);

}

public static List<String> addPrefix(List<String> keys, String prefix) {

return keys.stream().map(key -> prefix + key).collect(Collectors.toList());

}Let’s look at the same sample keys calculated between two dates. You can realize that the number of keys is as lowest as possible. We are not only dividing time frames into minute pieces but also finding more bigger parts either hour or day.

BEGIN=201810081000

END =201810081110

[USER-LOGIN-10081100, USER-LOGIN-10081101, USER-LOGIN-10081102, USER-LOGIN-10081103, USER-LOGIN-10081104, USER-LOGIN-10081105, USER-LOGIN-10081106, USER-LOGIN-10081107, USER-LOGIN-10081108, USER-LOGIN-10081109, USER-LOGIN-100810, USER-LOGIN-100811]

BEGIN=201810061012

END =201810081110

[USER-LOGIN-10081100, USER-LOGIN-10081101, USER-LOGIN-10081102, USER-LOGIN-10081103, USER-LOGIN-10081104, USER-LOGIN-10081105, USER-LOGIN-10081106, USER-LOGIN-10081107, USER-LOGIN-10081108, USER-LOGIN-10081109, USER-LOGIN-100800, USER-LOGIN-100801, USER-LOGIN-100802, USER-LOGIN-100803, USER-LOGIN-100804, USER-LOGIN-100805, USER-LOGIN-100806, USER-LOGIN-100807, USER-LOGIN-100808, USER-LOGIN-100809, USER-LOGIN-100810, USER-LOGIN-100811, USER-LOGIN-1007, USER-LOGIN-1008, USER-LOGIN-100611, USER-LOGIN-100612, USER-LOGIN-100613, USER-LOGIN-100614, USER-LOGIN-100615, USER-LOGIN-100616, USER-LOGIN-100617, USER-LOGIN-100618, USER-LOGIN-100619, USER-LOGIN-100620, USER-LOGIN-100621, USER-LOGIN-100622, USER-LOGIN-100623, USER-LOGIN-10061012, USER-LOGIN-10061013, USER-LOGIN-10061014, USER-LOGIN-10061015, USER-LOGIN-10061016, USER-LOGIN-10061017, USER-LOGIN-10061018, USER-LOGIN-10061019, USER-LOGIN-10061020, USER-LOGIN-10061021, USER-LOGIN-10061022, USER-LOGIN-10061023, USER-LOGIN-10061024, USER-LOGIN-10061025, USER-LOGIN-10061026, USER-LOGIN-10061027, USER-LOGIN-10061028, USER-LOGIN-10061029, USER-LOGIN-10061030, USER-LOGIN-10061031, USER-LOGIN-10061032, USER-LOGIN-10061033, USER-LOGIN-10061034, USER-LOGIN-10061035, USER-LOGIN-10061036, USER-LOGIN-10061037, USER-LOGIN-10061038, USER-LOGIN-10061039, USER-LOGIN-10061040, USER-LOGIN-10061041, USER-LOGIN-10061042, USER-LOGIN-10061043, USER-LOGIN-10061044, USER-LOGIN-10061045, USER-LOGIN-10061046, USER-LOGIN-10061047, USER-LOGIN-10061048, USER-LOGIN-10061049, USER-LOGIN-10061050, USER-LOGIN-10061051, USER-LOGIN-10061052, USER-LOGIN-10061053, USER-LOGIN-10061054, USER-LOGIN-10061055, USER-LOGIN-10061056, USER-LOGIN-10061057, USER-LOGIN-10061058, USER-LOGIN-10061059]

BEGIN=201808061012

END =201810081110

[USER-LOGIN-10081100, USER-LOGIN-10081101, USER-LOGIN-10081102, USER-LOGIN-10081103, USER-LOGIN-10081104, USER-LOGIN-10081105, USER-LOGIN-10081106, USER-LOGIN-10081107, USER-LOGIN-10081108, USER-LOGIN-10081109, USER-LOGIN-100800, USER-LOGIN-100801, USER-LOGIN-100802, USER-LOGIN-100803, USER-LOGIN-100804, USER-LOGIN-100805, USER-LOGIN-100806, USER-LOGIN-100807, USER-LOGIN-100808, USER-LOGIN-100809, USER-LOGIN-100810, USER-LOGIN-100811, USER-LOGIN-0807, USER-LOGIN-0808, USER-LOGIN-0809, USER-LOGIN-0810, USER-LOGIN-0811, USER-LOGIN-0812, USER-LOGIN-0813, USER-LOGIN-0814, USER-LOGIN-0815, USER-LOGIN-0816, USER-LOGIN-0817, USER-LOGIN-0818, USER-LOGIN-0819, USER-LOGIN-0820, USER-LOGIN-0821, USER-LOGIN-0822, USER-LOGIN-0823, USER-LOGIN-0824, USER-LOGIN-0825, USER-LOGIN-0826, USER-LOGIN-0827, USER-LOGIN-0828, USER-LOGIN-0829, USER-LOGIN-0830, USER-LOGIN-0831, USER-LOGIN-0901, USER-LOGIN-0902, USER-LOGIN-0903, USER-LOGIN-0904, USER-LOGIN-0905, USER-LOGIN-0906, USER-LOGIN-0907, USER-LOGIN-0908, USER-LOGIN-0909, USER-LOGIN-0910, USER-LOGIN-0911, USER-LOGIN-0912, USER-LOGIN-0913, USER-LOGIN-0914, USER-LOGIN-0915, USER-LOGIN-0916, USER-LOGIN-0917, USER-LOGIN-0918, USER-LOGIN-0919, USER-LOGIN-0920, USER-LOGIN-0921, USER-LOGIN-0922, USER-LOGIN-0923, USER-LOGIN-0924, USER-LOGIN-0925, USER-LOGIN-0926, USER-LOGIN-0927, USER-LOGIN-0928, USER-LOGIN-0929, USER-LOGIN-0930, USER-LOGIN-1001, USER-LOGIN-1002, USER-LOGIN-1003, USER-LOGIN-1004, USER-LOGIN-1005, USER-LOGIN-1006, USER-LOGIN-1007, USER-LOGIN-1008, USER-LOGIN-080611, USER-LOGIN-080612, USER-LOGIN-080613, USER-LOGIN-080614, USER-LOGIN-080615, USER-LOGIN-080616, USER-LOGIN-080617, USER-LOGIN-080618, USER-LOGIN-080619, USER-LOGIN-080620, USER-LOGIN-080621, USER-LOGIN-080622, USER-LOGIN-080623, USER-LOGIN-08061012, USER-LOGIN-08061013, USER-LOGIN-08061014, USER-LOGIN-08061015, USER-LOGIN-08061016, USER-LOGIN-08061017, USER-LOGIN-08061018, USER-LOGIN-08061019, USER-LOGIN-08061020, USER-LOGIN-08061021, USER-LOGIN-08061022, USER-LOGIN-08061023, USER-LOGIN-08061024, USER-LOGIN-08061025, USER-LOGIN-08061026, USER-LOGIN-08061027, USER-LOGIN-08061028, USER-LOGIN-08061029, USER-LOGIN-08061030, USER-LOGIN-08061031, USER-LOGIN-08061032, USER-LOGIN-08061033, USER-LOGIN-08061034, USER-LOGIN-08061035, USER-LOGIN-08061036, USER-LOGIN-08061037, USER-LOGIN-08061038, USER-LOGIN-08061039, USER-LOGIN-08061040, USER-LOGIN-08061041, USER-LOGIN-08061042, USER-LOGIN-08061043, USER-LOGIN-08061044, USER-LOGIN-08061045, USER-LOGIN-08061046, USER-LOGIN-08061047, USER-LOGIN-08061048, USER-LOGIN-08061049, USER-LOGIN-08061050, USER-LOGIN-08061051, USER-LOGIN-08061052, USER-LOGIN-08061053, USER-LOGIN-08061054, USER-LOGIN-08061055, USER-LOGIN-08061056, USER-LOGIN-08061057, USER-LOGIN-08061058, USER-LOGIN-08061059]

Redis Hyperloglog

Spring Redis Data packages are used for Redis Server operations. HyperLogLogOperations class is the main class to make 3 main operations; count, add and merge.

import org.apache.commons.lang3.StringUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.HyperLogLogOperations;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Service;

import org.springframework.util.StopWatch;

import javax.annotation.PostConstruct;

import java.util.List;

import java.util.concurrent.TimeUnit;

@Service

public class RedisManagerImpl implements IRedisManager {

private Logger LOGGER = LoggerFactory.getLogger(RedisManagerImpl.class);

@Autowired

private RedisTemplate<String, Object> redisTemplate;

HyperLogLogOperations hyperLogLogOperations;

@PostConstruct

private void init() {

hyperLogLogOperations = redisTemplate.opsForHyperLogLog();

}

@Override

public void put(int time, TimeUnit timeUnit, String key, String value) {

if (!redisTemplate.hasKey(key)) {

boolean result = redisTemplate.expire(key, time, timeUnit);

LOGGER.info("Setting TTL for key: {} time:{}, result:{}", key, time, result);

}

put(key, value);

}

@Override

public Long put(String key, String value) {

if (StringUtils.isEmpty(value)) {

LOGGER.error("Value cannot be empty or null, key: {}" + value);

return null;

}

LOGGER.info("Putting into -> {} ", key);

Long result = 0l;

try {

result = hyperLogLogOperations.add(key, value);

} catch (Exception e) {

LOGGER.error("Having Redis Error with key: {}", key, e);

}

return result;

}

@Override

public long countAll(List<String> keys) {

LOGGER.info("Calculating keys -> {} ", keys);

long size = 0;

try {

size = hyperLogLogOperations.size(keys.toArray(new String[0]));

} catch (Exception e) {

LOGGER.error("Having Redis Error with key: {}", keys, e);

}

return size;

}I have performed some test scenarios using the code portion above to know what the deviation from actual distinct record size is when adding many records as one by one or different batch size. I have added 10000000 different records and as you can see from the following table that adding records with less than 1K batch sizes give a more accurate result.

Batch Size |

Pfcount result |

% Accuracy |

1 |

9971838 |

99,71838 |

1000 |

9971838 |

99,71838 |

10000 |

9963647 |

99,63647 |

100000 |

9887187 |

98,87187 |

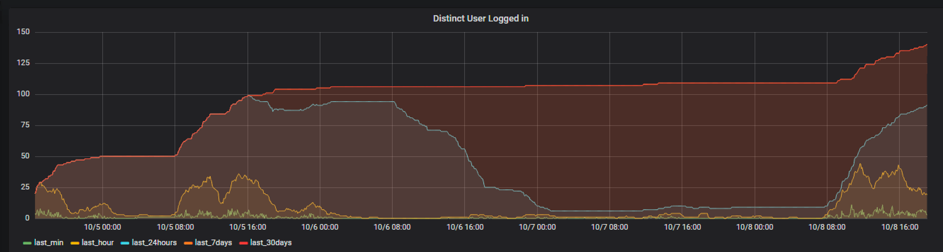

Realtime Monitoring by Graphic Tool

When these 5 type of values feeded into premetheus and displayed over graphana we have following graph.

References

Opinions expressed by DZone contributors are their own.

Comments