Content Detection Technologies in Data Loss Prevention (DLP) Products

Explore the key content detection technologies needed in a Data Loss Prevention (DLP) product developers need to focus on to develop a first-class solution.

Join the DZone community and get the full member experience.

Join For FreeHaving worked with enterprise customers for a decade, I still see potential gaps in data protection. This article addresses the key content detection technologies needed in a Data Loss Prevention (DLP) product that developers need to focus on while developing a first-class solution. First, let’s look at a brief overview of the functionalities of a DLP product before diving into detection.

Functionalities of a Data Loss Prevention Product

The primary functionalities of a DLP product are policy enforcement, data monitoring, sensitive data loss prevention, and incident remediation. Policy enforcement allows security administrators to create policies and apply them to specific channels or enforcement points. These enforcement points include email, network traffic interceptors, endpoints (including BYOD), cloud applications, and data storage repositories. Sensitive data monitoring focuses on protecting critical data from leaking out of the organization's control, ensuring business continuity. Incident remediation may involve restoring data with proper access permissions, data encryption, blocking suspicious transfers, and more.

Secondary functionalities of a DLP product include threat prevention, data classification, compliance and posture management, data forensics, and user behavior analytics, among others. A DLP product ensures data security within any enterprise by enforcing data protection across all access points. The primary differentiator between a superior data loss prevention product and a mediocre one is the breadth and depth of coverage. Breadth refers to the variety of enforcement points covered, while depth pertains to the quality of the content detection technologies.

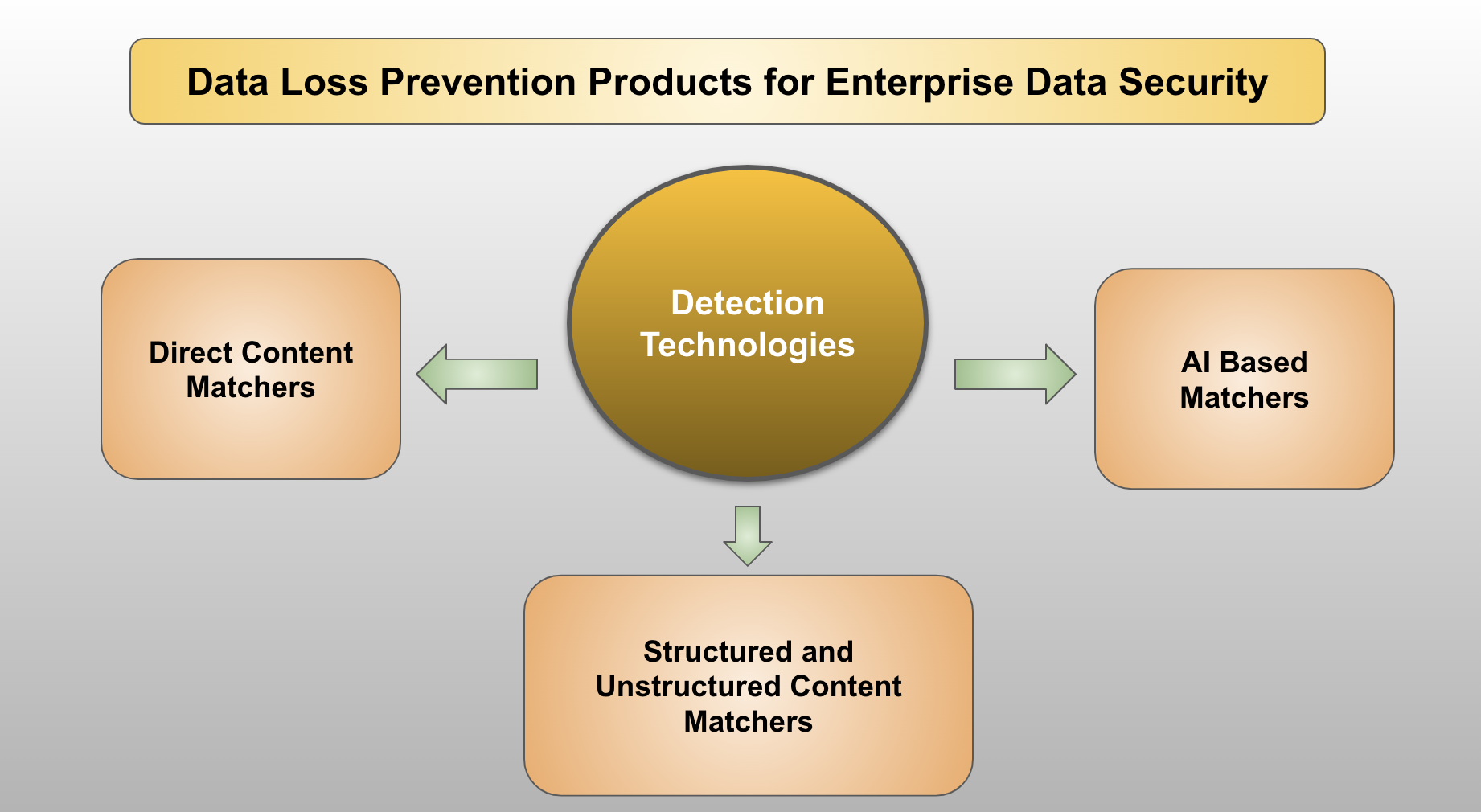

Detection Technologies

Detection technologies can be broadly divided into three categories. The first category includes simple matchers that directly match individual data, known as direct content matchers. The second category consists of more complex matchers that can handle both structured content, such as data found in databases, and unstructured content, like text documents and images/video data. The third category consists of AI-based matchers that can be configured by using both supervised and unsupervised training methods.

Direct Content Matchers

There are three types of direct content matches, namely matches based on keywords, regular expression patterns, and popular identifier matchers.

Keyword Matching

Policies that require keyword matchers should include rules with specific keywords or phrases. The keyword matcher can directly inspect the content and match it based on these rules. The keyword input can be a list of keywords separated by appropriate delimiters or phrases. Effective keyword-matching algorithms include the Knuth-Morris-Pratt (KMP) algorithm and the Boyer-Moore algorithm. The KMP algorithm is suitable for documents of any size as it preprocesses the input keywords before starting the matching. The Boyer-Moore Algorithm is particularly effective for larger texts because of its heuristic-based approach. Modern keyword matching also involves techniques, such as keyword pair matching based on word distances and contextual keyword matching.

Regular Expression Pattern Matching

Regular expressions defined in security policies need to be pre-compiled, and pattern matching can then be performed on the content that needs to be monitored. The Google RE2 algorithm is one of the fastest pattern-matching algorithms in the industry, alongside others such as Hyper Scan by Intel and the Tried Regular Expression Matcher based on Deterministic Finite Automaton (DFA). Regular expression pattern policies can also include multiple patterns in a single rule and patterns based on word distances.

Popular Identifier Matching

Popular identifier matching is similar to a regex pattern matcher but specializes in detecting common identifiers used in everyday life, such as Social Security Numbers, tax identifiers, and driving license numbers. Each country may have unique identifiers that they use. Many of these popular identifiers are part of Personally Identifiable Information (PII), making it crucial to protect data that contains them. This type of matcher can be implemented using regular expression pattern matching.

All these direct content matchers are known for generating a large number of false negatives. To address this issue, policies associated with these matcher rules should include data checkers to reduce the number of false positives. For example, not all 9-digit numbers can be US Social Security Numbers (SSNs). SSNs cannot start with 000 or 666, and the reserved range includes numbers from 900 to 999.

Structured and Unstructured Content Matchers

Both structured and unstructured content matchers require security administrators to pre-index the data, which is then fed into the content matchers for this type of matching to work. Developers can construct pre-filters to eliminate content from an inspection before it is passed on to this category of matchers.

Structured Matcher

Structured Data Matching, also known as Exact Data Matching (EDM), matches structured content found in spreadsheets, structured data repositories, databases, and similar sources. Any data that conforms to a specific structure can be matched using this type of matcher. The data to be matched must be pre-indexed so that the structured matchers can perform efficiently. Security policies, for instance, should specify the number of columns and the names of columns that need to match to qualify for a data breach incident when inspecting a spreadsheet. Typically, the pre-indexed content is large, in the order of gigabytes, and the detection matchers must have sufficient resources to load these files for matching. As the name suggests, this method exactly matches the pre-indexed data with the content being inspected.

Unstructured Matcher

Unstructured data matching, similar to EDM, involves pre-compiling and indexing the files provided by the security administrator when creating the policy. Unstructured content matching indexes include generating a rolling window of hashes for the documents and storing them in a format that allows for efficient content inspection. A video file might also be included under this type of matcher; however, once the transcript is extracted from the video, there is nothing preventing developers from using direct content matchers in addition to unstructured matchers for content monitoring.

AI-Based Matchers

AI matchers involve a trained model for matching. The model can be trained via a rigorous set of training data and supervision, or we can let the system train through unsupervised learning.

Supervised Learning

Training data should include both a positive set and a negative set with appropriate labels. The training data can also be based on a specific set of labels to classify the content within an organization. Most importantly, during training, critical features such as patterns and metadata should be extracted. Data Loss Prevention products generally use decision trees and support vector machine (SVM) algorithms for this type of matching. The model can be retrained or updated based on new training data or feedback from the security administrator. The key is to keep the model updated to ensure that this type of matcher performs effectively.

Unsupervised Learning

Unsupervised learning has been becoming increasingly popular in this AI era with the inception of large language models (LLMs). LLMs usually go through an initial phase of unsupervised learning followed by a supervised learning phase where fine-tuning takes place. Unsupervised learning algorithms popularly used by security vendors while creating DLP products are K-means, hierarchical clustering algorithms that can identify structural patterns and anomalies while performing data inspection. Methodologies — namely, Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) — can aid specifically in identifying sensitive patterns in documents that are sent for content inspection.

Conclusion

For superior data loss prevention products, developers and architects should consider including all the mentioned content-matching technologies. A comprehensive list of matchers allows security administrators to create policies with a wide variety of rules to protect sensitive content. It should be noted that a single security policy can include a combination of all the matchers, expressed as an expression joined using boolean operators such as OR, AND, and NOT. Protecting data will always be important, and it is becoming even more crucial in the AI era, where we must advocate for the ethical use of AI.

Opinions expressed by DZone contributors are their own.

Comments