Computer Vision Tutorial 1: Image Basics

Before we start building an image classifier or approaching any computer vision problem, we need to understand what an image is.


This tutorial covers the foundations of computer vision and is delivered as “Lesson 1” of the series. Upcoming lessons will cover building your own deep learning-based computer vision projects. You can find the complete syllabus and table of contents here.


Pixels Are the Building Blocks of Images

A pixel is normally defined as the “color” or “intensity” of light that appears at a given place in our image. If we think of an image as a grid, each square contains a single pixel.

Pixels are the raw building blocks of an image; there is no finer granularity than the pixel. Pixels are usually represented in one of two ways:

- Grayscale/single-channel

- Color

Let's take an example image:

The image in Fig 1.1 is 4000 pixels wide and 3000 pixels tall, so it contains 4000 × 3000 = 12,000,000 pixels in total.

You can find the pixel dimensions of your image by opening it in MS Paint and reading its width × height in pixels from the image description, highlighted in yellow in Fig 1.2. There are also other ways of finding the pixel dimensions of an image using OpenCV, which we will discuss in the upcoming sections of this article.
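As a preview of the programmatic route, here is a minimal sketch that counts pixels from an image array's shape with NumPy. It assumes the image is already loaded as a NumPy array (which is what OpenCV's `cv2.imread` returns); the helper `count_pixels` is hypothetical, and a blank array stands in for the photo in Fig 1.1.

```python
import numpy as np

def count_pixels(image: np.ndarray) -> int:
    """Hypothetical helper: return width * height for a (H, W) or (H, W, D) array."""
    # The first two dimensions are always height (rows) and width (columns)
    height, width = image.shape[:2]
    return height * width

# Blank stand-in for the 4000 x 3000 photo: 3000 rows (height) x 4000 columns (width)
photo = np.zeros((3000, 4000, 3), dtype=np.uint8)
print(count_pixels(photo))  # 12000000
```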

Fig 1.3 Grayscale Image with values 0 to 255. 0 = Black and 255 = White


In a grayscale or single-channel image, each pixel is a scalar value between 0 and 255, where 0 = “black” and 255 = “white.” As shown in Fig 1.3, values closer to 0 are darker, and values closer to 255 are lighter.
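A grayscale image is simply a 2-D array of 8-bit intensities. This minimal NumPy sketch builds a synthetic gradient (not taken from the article's figures) that runs from 0 (black) in the left column to 255 (white) in the right column.

```python
import numpy as np

# One row holding every 8-bit intensity from 0 (black) to 255 (white)
row = np.arange(256, dtype=np.uint8)

# Repeat that row 50 times to get a 50 x 256 grayscale gradient image
gradient = np.tile(row, (50, 1))

print(gradient.shape)                   # (50, 256): 50 rows, 256 columns
print(gradient[0, 0], gradient[0, -1])  # 0 255: darkest and lightest pixels
print(gradient.dtype)                   # uint8: values are limited to 0..255
```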

On the other hand, color images are represented in RGB (Red, Green, and Blue). Here, the pixel values are represented by a list of three values: one value for the Red component, one for Green, and another for Blue.

- R in RGB — values defined in the range of 0 to 255

- G in RGB — values defined in the range of 0 to 255

- B in RGB — values defined in the range of 0 to 255

All three components combine in an additive color space to form the color of a single pixel, usually represented as a tuple (red, green, blue). For example, to create the color white, we would fill each of the red, green, and blue buckets completely: (255, 255, 255). To create black, we would empty each bucket: (0, 0, 0), since black is the absence of color. To create pure red, we would fill only the red bucket completely: (255, 0, 0).
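The bucket analogy translates directly into code. This minimal sketch stores the three example colors as (red, green, blue) tuples and paints them into a tiny 1 × 3 NumPy image; the names `WHITE`, `BLACK`, `RED`, and `swatch` are illustrative only.

```python
import numpy as np

# (red, green, blue) tuples; each channel ranges from 0 to 255
WHITE = (255, 255, 255)  # every bucket full
BLACK = (0, 0, 0)        # every bucket empty
RED = (255, 0, 0)        # only the red bucket full

# A 1 x 3 RGB image (height 1, width 3, depth 3) holding one pixel of each color
swatch = np.array([[WHITE, BLACK, RED]], dtype=np.uint8)

print(swatch.shape)  # (1, 3, 3)
print(swatch[0, 2])  # the red pixel: 255, 0, 0
```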

We can conceptualize an RGB image as consisting of three independent matrices of width W and height H, one for each of the RGB components. Combining these three matrices gives a multi-dimensional array with the shape W × H × D, where D is the depth or number of channels (for the RGB color space, D = 3).
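A minimal sketch of that stacking step with NumPy, using small made-up 2 × 4 channel matrices. Note that NumPy orders the resulting shape as rows × columns × channels (H × W × D), which the next section explains.

```python
import numpy as np

H, W = 2, 4  # a tiny example: 2 rows (height) and 4 columns (width)

# Three independent H x W matrices, one per color component
red = np.full((H, W), 255, dtype=np.uint8)  # red channel fully on
green = np.zeros((H, W), dtype=np.uint8)    # green channel off
blue = np.zeros((H, W), dtype=np.uint8)     # blue channel off

# Stack them along a new third axis to get an array with D = 3 channels
rgb = np.dstack([red, green, blue])

print(rgb.shape)  # (2, 4, 3)
```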

OK, back to basics: an image is represented as a grid of pixels. Think of the grid as a piece of graph paper. On this graph paper, the origin point (0, 0) corresponds to the upper-left corner of the image, and as we move down and to the right, both the x and y values increase. As shown in Fig 1.5 below, the letter “L” is placed on a piece of graph paper. Pixels are accessed by their (x, y) coordinates, where we go x columns to the right and y rows down, keeping in mind that Python is zero-indexed (counting starts at 0).
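The graph-paper picture can be reproduced with a small NumPy grid. This sketch draws a hypothetical 5 × 4 letter “L” (it does not reproduce Fig 1.5 exactly) and shows that the pixel at (x, y) is read as `grid[y, x]`: row y first, then column x, both counted from 0 at the upper-left corner.

```python
import numpy as np

# A 5-row x 4-column grayscale grid; 0 = black background
grid = np.zeros((5, 4), dtype=np.uint8)

# Draw an "L" in white (255): a vertical stroke in column x = 0 ...
grid[0:5, 0] = 255
# ... and a horizontal stroke along the bottom row y = 4
grid[4, 0:4] = 255

x, y = 3, 4        # 3 columns to the right, 4 rows down
print(grid[y, x])  # 255: the right end of the L's foot
print(grid[0, 3])  # 0: the upper-right corner is background
```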

Images as NumPy Arrays

Image processing libraries such as OpenCV and scikit-image represent RGB images as multidimensional NumPy arrays with shape (height, width, depth). Note that the height comes first and the width second because of matrix notation: when defining the dimensions of a matrix, we always write it as rows × columns. The number of rows in an image is its height, whereas the number of columns is its width. The depth remains the depth, and it indicates whether the image is color or grayscale: a depth of 1 means the image is grayscale, while a depth of 3 indicates a color RGB image. (In practice, OpenCV and scikit-image usually return a grayscale image as a 2-D array of shape (height, width), leaving the depth of 1 implicit.)
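A minimal sketch of reading those dimensions back from an array. The helper `describe_shape` is hypothetical; it treats a 2-D array as depth 1, since grayscale images often come back as plain (height, width) arrays. The 515 × 552 size simply mirrors the example image used below.

```python
import numpy as np

def describe_shape(image: np.ndarray) -> tuple[int, int, int]:
    """Hypothetical helper: return (height, width, depth) for a grayscale or color array."""
    height, width = image.shape[:2]  # rows first, then columns
    depth = 1 if image.ndim == 2 else image.shape[2]
    return height, width, depth

gray = np.zeros((515, 552), dtype=np.uint8)      # rows x columns
color = np.zeros((515, 552, 3), dtype=np.uint8)  # rows x columns x channels

print(describe_shape(gray))   # (515, 552, 1) -> grayscale
print(describe_shape(color))  # (515, 552, 3) -> color
```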

Let’s write a small Python program to understand how to read an image using the OpenCV library and extract the shape of the image.

OpenCV (Open Source Computer Vision Library) is an open-source computer vision and machine learning software library. It has more than 2500 optimized algorithms, including a comprehensive set of both classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used to detect and recognize faces, identify objects, classify human actions in videos, track camera movements, track moving objects, and more.

If you are new to setting up a Python environment, follow the Python setup article to set up Python on your local machine, following its instructions exactly.

For this small Python program, I will use Notepad++ to write the code and the Windows command prompt to run it.

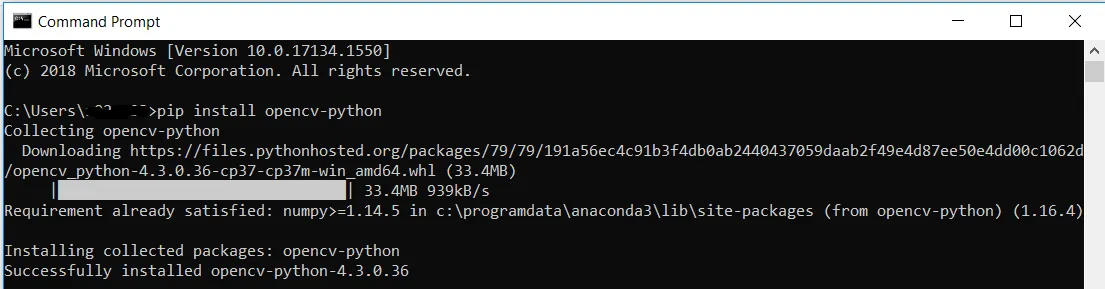

After installing Python, open the command prompt and install the OpenCV library using the following command:

```shell
pip install opencv-python
```
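To confirm that Python can actually import the library after installation, a quick check like the one below can be run. It is a minimal sketch: `opencv_version` is a hypothetical helper that returns OpenCV's `cv2.__version__` string, or `None` if `cv2` cannot be imported.

```python
def opencv_version():
    """Hypothetical helper: return the OpenCV version string, or None if cv2 cannot be imported."""
    try:
        import cv2  # the module name provided by the opencv-python package
    except ImportError:
        return None
    return cv2.__version__

version = opencv_version()
if version is None:
    print("OpenCV could not be imported; try: pip install opencv-python")
else:
    print(f"OpenCV {version} is installed")
```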

After the OpenCV library is installed, open Notepad++ or any editor of your choice and type the following lines of code:

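```python
# Reading an image from disk
import cv2

image = cv2.imread("C:/Sample_program/example.jpg")
if image is None:  # imread returns None instead of raising when it cannot read the file
    raise FileNotFoundError("Could not read C:/Sample_program/example.jpg")

print(f"(Height, Width, Depth) of the image is: {image.shape}")

cv2.imshow("Image", image)
cv2.waitKey(0)  # keep the window open until a key is pressed
cv2.destroyAllWindows()
```

Here, we load an image named example.jpg from disk and display it on screen. My terminal output follows: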

This image has a width of 552 pixels (the number of columns), a height of 515 pixels (the number of rows), and a depth of 3 (the number of channels).

To access an individual pixel value from our image, we use simple NumPy array indexing. Copy the following Python code into your editor and run it:

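```python
import cv2

image = cv2.imread("C:/Sample_program/example.jpg")
if image is None:  # imread returns None instead of raising when it cannot read the file
    raise FileNotFoundError("Could not read C:/Sample_program/example.jpg")

# Read the pixel coordinate from the user as "y,x" (row first, then column)
y, x = (int(value.strip()) for value in input().split(","))

# Extract the (blue, green, red) values at that coordinate as plain integers;
# OpenCV stores color channels in BGR order
(b, g, r) = (int(channel) for channel in image[y, x])

print(f"The Blue, Green, Red component values of the image at position [y, x] = {(y, x)} are: {(b, g, r)}")
```

The above program outputs: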

Again, notice how the y value is passed in before the x value. This syntax may feel uncomfortable at first, but it is consistent with how we access values in a matrix: first we specify the row number, then the column number. From there, we are given a tuple representing the Blue, Green, and Red components of the pixel.
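Because `input()` makes the program above awkward to test, here is a non-interactive sketch of the same lookup. The helpers `parse_coordinate` and `pixel_bgr` are hypothetical, and a synthetic array stands in for `example.jpg`. The bounds check is an addition: without it, NumPy would silently wrap a negative index around to the other edge of the image.

```python
import numpy as np

def parse_coordinate(text: str) -> tuple[int, int]:
    """Hypothetical helper: parse a 'y,x' string such as '120, 45' into (row, column)."""
    y, x = (int(part.strip()) for part in text.split(","))
    return y, x

def pixel_bgr(image: np.ndarray, y: int, x: int) -> tuple[int, int, int]:
    """Hypothetical helper: return the (blue, green, red) values at row y, column x."""
    height, width = image.shape[:2]
    if not (0 <= y < height and 0 <= x < width):
        raise IndexError(f"({y}, {x}) is outside a {height} x {width} image")
    b, g, r = (int(c) for c in image[y, x])
    return b, g, r

# Synthetic stand-in for example.jpg: a 515 x 552 image filled with one color
image = np.zeros((515, 552, 3), dtype=np.uint8)
image[:, :] = (30, 60, 200)  # B, G, R: a reddish color in OpenCV's channel order

y, x = parse_coordinate("120, 45")
print(pixel_bgr(image, y, x))  # (30, 60, 200)
```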

We can validate the above output using MS Paint as follows.

Ok! Let's find the color of my lips in the image below:

Open the image in MS Paint, place the cursor on the lips, and read the pixel coordinate (x, y) shown in the bottom-left corner (highlighted in yellow), as shown in Fig 1.9.

Enter the obtained pixel coordinate into the Python program and extract the pixel's color values. Then open Edit Colors in MS Paint, enter the obtained RGB values, and check that the color shown in Edit Colors matches the image at that pixel coordinate, as shown in Figure 1.10.

Note that MS Paint gives pixel coordinates as (x, y), the opposite of the OpenCV [y, x] convention. Similarly, MS Paint lists color values as RGB, the reverse of OpenCV's BGR output order.
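Both differences are reversals, so converting between the MS Paint and OpenCV conventions is mechanical. This minimal sketch uses hypothetical helper names. For whole images, reversing the last axis with `[..., ::-1]` is one way to turn BGR into RGB; OpenCV's `cv2.cvtColor` with `cv2.COLOR_BGR2RGB` does the same job.

```python
import numpy as np

def paint_to_opencv_index(x: int, y: int) -> tuple[int, int]:
    """Hypothetical helper: MS Paint reports (x, y); OpenCV/NumPy indexing wants [y, x]."""
    return y, x

def bgr_to_rgb(bgr: tuple[int, int, int]) -> tuple[int, int, int]:
    """Hypothetical helper: reverse an OpenCV (blue, green, red) tuple into (red, green, blue)."""
    b, g, r = bgr
    return r, g, b

print(paint_to_opencv_index(45, 120))  # (120, 45)
print(bgr_to_rgb((30, 60, 200)))       # (200, 60, 30)

# For a whole image, reversing the channel axis swaps BGR and RGB order
bgr_image = np.zeros((2, 2, 3), dtype=np.uint8)
bgr_image[..., 0] = 255           # blue is the first channel in BGR order
rgb_image = bgr_image[..., ::-1]  # blue is now the last channel, as in RGB
print(rgb_image[0, 0].tolist())   # [0, 0, 255]
```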

Bonus Reading

Image representation uses four data types: unsigned character (type 1), int (type 2), float (type 4), and complex (type 8), with unsigned character being the most popular, as shown in the table below. The number shown on the right of each type in Figure 1.11 is the number of bytes used to store one pixel in memory.

Note: 1 Byte is a collection of 8 Bits.
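NumPy exposes the same per-value sizes through a dtype's `itemsize`. The mapping below from the table's four types to NumPy dtypes (`uint8`, `int16`, `float32`, `complex64`) is an assumption, chosen because their sizes match the 1, 2, 4, and 8 bytes listed; other widths (such as `float64`) exist too.

```python
import numpy as np

# Assumed NumPy equivalents of the four pixel types in Figure 1.11
pixel_types = {
    "unsigned char": np.uint8,
    "int": np.int16,
    "float": np.float32,
    "complex": np.complex64,
}

for name, dtype in pixel_types.items():
    # itemsize is the number of bytes a single value of this dtype occupies
    print(f"{name:>13}: {np.dtype(dtype).itemsize} byte(s) per value")
```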

So for a 28 × 28 image, the total number of pixels is 28 × 28 = 784, and the total memory for a type 1 datatype is 1 B × 784 = 784 bytes, meaning one byte of memory is required to store one pixel of the image.

Similarly, for a type 4 datatype (float), the same image would occupy 4 B × 784 = 3136 bytes, meaning four bytes of memory are required to store one pixel of the image.
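This arithmetic can be checked with NumPy's `nbytes` attribute, which reports how much memory an array's data occupies. This sketch assumes a single-channel 28 × 28 image and uses `float32` as the 4-byte float type.

```python
import numpy as np

as_uint8 = np.zeros((28, 28), dtype=np.uint8)      # 1 byte per pixel
as_float32 = np.zeros((28, 28), dtype=np.float32)  # 4 bytes per pixel

print(as_uint8.size)      # 784 pixels
print(as_uint8.nbytes)    # 784 bytes  = 1 B * 784
print(as_float32.nbytes)  # 3136 bytes = 4 B * 784
```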

Published at DZone with permission of Rakesh Thoppaen Suresh Babu. See the original article here.

