CockroachDB TIL: Volume 10

Explore ULID and UUID generation, tab completion in the CLI, third-party IDEs like Postico and Serverless clusters, and more in this "today I learned" post.

Join the DZone community and get the full member experience.

Join For FreeThis is my series of articles covering short "today I learned" topics as I work with CockroachDB. Read the previous installments:

Topics

- Topic 1: Generating ULID strings in CockroachDB

- Topic 2: Enable CLI tab completion for column names

- Topic 3: Using Postico with CockroachDB Serverless

- Topic 4: Nuances with

DISCARD ALL - Topic 5: Npgsql and follower reads

- Bonus Topic: Npgsql and pgpass

Topic 1: Generating ULID Strings in CockroachDB

CockroachDB adopted ULID several releases ago as yet another version of UUID type. There is no explicit ULID type in CockroachDB. There are functions available to generate ULID in UUID format. So in case you need to generate lexicographically sortable IDs, you can use the built-in gen_random_ulid() function. Also, there are several conversion functions to convert from ULID to UUID; i.e., ulid_to_uuid and vice versa, uuid_to_ulid. One point of friction in CockroachDB is where one needs to generate an actual ULID string and not a UUID-formatted ULID. This may or may not be obvious. Let's take a look:

Just to be clear, what we need is a string that looks like 01GCCCKGXGF41EDJ8HQENNYA3Y and not like 018318c8-6194-1e75-c9f3-04913630eca8.

SELECT gen_random_ulid();

gen_random_ulid ---------------------------------------- 018318c8-6194-1e75-c9f3-04913630eca8

Notice we are generating a ULID. gen_random_ulid() should not be confused with gen_random_uuid(). According to the documentation, the produced output is a valid UUID. So in order for us to return a valid ULID in string form, we have to do the following:

SELECT uuid_to_ulid(gen_random_ulid());

uuid_to_ulid ------------------------------ 01GCCCQECQRPVGE4FFENFQJXSA

Topic 2: Enable CLI Tab Completion for Column Names

I've been working with a product called FerretDB (see articles 1-4 linked above), and I found it frustrating to type the column names as they include a hash in them; i.e., sample_mflix.comments_5886d2d7. Remembering these column names is out of the question. I started digging for a way to enable tab completion in my CLI tool, and unfortunately, cockroach CLI does not support it today. Apparently, the psql client does support tab completion and I had to give it a try.

Originally it did not activate the tab completion and based on the documentation under the heading Command-Line Editing, I created an .inputrc file in my home directory. I included the following:

$if psql set disable-completion off $endif

I sourced the file and opened a new terminal, and finally have tab completion.

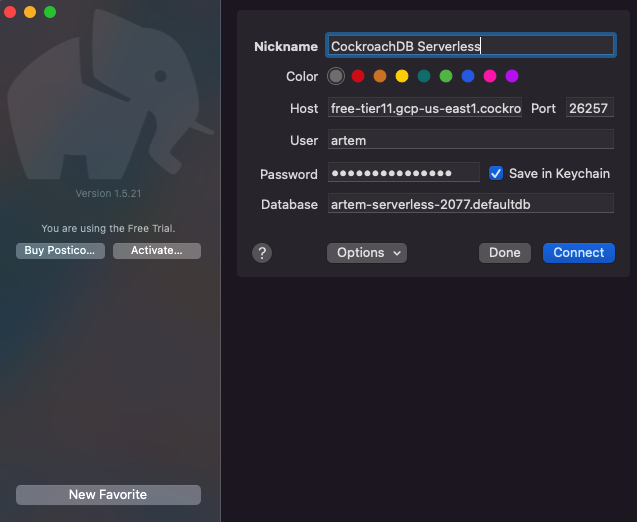

Topic 3: Using Postico With CockroachDB Serverless

Postico is a modern PostgreSQL client for Mac. A user in the community Slack reported issues connecting to a serverless instance of CockroachDB.

A quick way to install Postico is to use brew.

brew install postico

I must admit, it is not an obvious user experience. I've not been able to quickly get started based on my intuition. One approach that worked was to quote the URL and use CLI to open Postico. I have to mention that omitting the quotes will not work, at least with serverless. This behavior is not documented in their docs.

open "postgresql://user:pass@host.cockroachlabs.cloud:26257/cluster-routing-id.defaultdb?sslmode=verify-full"

If your local environment launches another app instead of Postico, you can also replace postgresql in the pgurl with postico. For example:

open "postico://artem:password@artem-serverless-2077.g8z.gcp-us-east1.cockroachlabs.cloud:26257/defaultdb?sslmode=verify-full&options=--cluster%3Dartem-serverless-2077"

The following works as well. Serverless now supports SNI and Postico supports it.

open "postgresql://artem:password@artem-serverless-2077.g8z.gcp-us-east1.cockroachlabs.cloud:26257/defaultdb?sslmode=verify-full&options=--cluster%3Dartem-serverless-2077"

As we enable SNI across our Serverless product, we will publish an SNI-compatible connection string which should alleviate the long-running problem with routing cluster ID.

Topic 4: Nuances With DISCARD ALL

I was working with a customer workload using a .Net application and Npgsql PostgreSQL provider. When I work with customer workloads, I typically treat the code as a black box. I try to make minimal changes to the app code unless absolutely necessary. In the current case, I've noticed a query like DISCARD ALL being aggressively called as part of the workload. I was observing at least 3x the number of DISCARD ALL queries for every business-critical statement. This led me to inquire internally if these queries have a cost to them. To my surprise, there is cost, albeit negligible. There is also additional network latency cost between client and server. The average query statement time can be tiny, but in aggregate can substantially contribute to the overall performance.

Luckily, in researching the issue, my search landed me on the following Npgsql issue, which led me to the following property in Npgsql: No Reset On Close=true. It states that it can improve performance in some cases. I must stress that there's a trade-off: some applications rely on a clean session state. In those cases, removing DISCARD ALL will likely break the application. But for all other cases, using the connection parameter makes for an easy fix; i.e., Server=cockroach-cluster-host;Port=26257;Database=dbname;User ID=dbuser;Password=password;No Reset On Close=true. I reran the workload again and no longer observed DISCARD ALL queries.

When I inquired internally about DISCARD ALL, I was pointed to another customer case where DISCARD ALL was impacting performance. In the latter case, the customer was using PGBouncer and from my work on PGBouncer, I remembered a property server_reset_query that can be set in the pgbouncer.ini to reset the session upon connection release. The default property is DISCARD ALL. In some cases, changing the property to DEALLOCATE ALL; i.e., "server_reset_query=DEALLOCATE ALL;" can be more efficient by only dropping the prepared statements.

I must mention that server_reset_query should only be used with pool_mode=session. The transaction mode does not use session-based features. Each transaction ends up in a different connection with a different session state. Pay extreme caution when changing these parameters as they can significantly impact the workload behavior.

Topic 5: Npgsql and Follower Reads

Since we're on the topic of Npgsql, let me discuss the other interesting anecdote of having a third-party tool working with CockroachDB. CockroachDB supports follower reads which operate on the local replicas, leading to faster read latencies and higher throughput. The trade-off for follower reads is increased data staleness. Follower reads are analogous to READ COMMITTED isolation level in the relational database world.

We've supported Npgsql for a while but unfortunately, CockroachDB-specific concepts like follower reads are not well documented in the Npgsql docs. I'm grateful to our engineering team for introducing a Follower Reads session parameter default_transaction_use_follower_reads=on; which makes integrating our specific features into third-party tools dead simple. We made a decision many releases ago because it is easier to add a session parameter than forcing all third-party tools to adopt our syntax. Additionally, with the session parameter, we can force queries on the read path to leverage follower reads implicitly.

This brings us back to the original topic: I was working with a black box .Net application and rewriting the application with AOST was a non-starter. I set out to use the session parameter, but it was unclear from the Npgsql docs how to leverage it. Postgresql supports a -c name=value-named run-time parameter, and I was sure Npgsql accepts arbitrary options in this manner.

Considering the sample code below, I will demonstrate how to use follower reads in your applications as well as adopt the practices of splitting up the traffic between read only and read/write.

using System;

using System.Data;

using System.Net.Security;

using Npgsql;

namespace Cockroach

{

class MainClass

{

static void Main(string[] args)

{

var connString = "Host=artem-mr-7xw.aws-us-east-1.cockroachlabs.cloud;Username=artem;Passfile=/Users/artem/.pgpass;Database=dotnet;RootCertificate=/Users/artem/Library/CockroachCloud/certs/artem-mr-ca.crt;Port=26257;SslMode=VerifyCA";

Simple(connString);

}

static void Simple(string connString)

{

using (var conn = new NpgsqlConnection(connString))

{

conn.Open();

new NpgsqlCommand("CREATE TABLE IF NOT EXISTS test (id UUID DEFAULT gen_random_uuid() PRIMARY KEY, val STRING)", conn).ExecuteNonQuery();

using (var cmd = new NpgsqlCommand())

{

cmd.Connection = conn;

cmd.CommandText = "UPSERT INTO test(val) VALUES(@val1), (@val2)";

cmd.Parameters.AddWithValue("val1", Guid.NewGuid().ToString("n").Substring(0, 10));

cmd.Parameters.AddWithValue("val2", Guid.NewGuid().ToString("n").Substring(0, 10));

cmd.ExecuteNonQuery();

}

System.Console.WriteLine("Results:");

using (var cmd = new NpgsqlCommand("SELECT id, val FROM test", conn))

using (var reader = cmd.ExecuteReader())

while (reader.Read())

Console.Write("\rrecord {0}: {1}\n", reader.GetValue(0), reader.GetValue(1));

}

}

}

}

We can split the application code into two paths: one for read/write transactions and one for read only. It's a great method to separate the traffic to reduce contention. Here's the code to do that:

using System;

using System.Data;

using System.Net.Security;

using Npgsql;

namespace Cockroach

{

class MainClass

{

static void Main(string[] args)

{

var connReadWrite = "Host=artem-mr-7xw.aws-us-east-1.cockroachlabs.cloud;Username=artem;Passfile=/Users/artem/.pgpass;Database=dotnet;RootCertificate=/Users/artem/Library/CockroachCloud/certs/artem-mr-ca.crt;Port=26257;SslMode=VerifyCA";

var connReadOnly = "Host=artem-mr-7xw.aws-us-east-1.cockroachlabs.cloud;Username=artem;Passfile=/Users/artem/.pgpass;Database=dotnet;RootCertificate=/Users/artem/Library/CockroachCloud/certs/artem-mr-ca.crt;Port=26257;SslMode=VerifyCA;Options=-c default_transaction_use_follower_reads=on;";

ReadWrite(connReadWrite);

ReadOnly(connReadOnly);

}

static void ReadWrite(string connReadWrite)

{

using (var conn = new NpgsqlConnection(connReadWrite))

{

conn.Open();

new NpgsqlCommand("CREATE TABLE IF NOT EXISTS test (id UUID DEFAULT gen_random_uuid() PRIMARY KEY, val STRING)", conn).ExecuteNonQuery();

using (var cmd = new NpgsqlCommand())

{

cmd.Connection = conn;

cmd.CommandText = "UPSERT INTO test(val) VALUES(@val1), (@val2)";

cmd.Parameters.AddWithValue("val1", Guid.NewGuid().ToString("n").Substring(0, 10));

cmd.Parameters.AddWithValue("val2", Guid.NewGuid().ToString("n").Substring(0, 10));

cmd.ExecuteNonQuery();

}

}

}

static void ReadOnly(string connReadOnly)

{

using (var conn = new NpgsqlConnection(connReadOnly))

{

conn.Open();

System.Console.WriteLine("Results:");

using (var cmd = new NpgsqlCommand("SELECT id, val FROM test", conn))

using (var reader = cmd.ExecuteReader())

while (reader.Read())

Console.Write("\rrecord {0}: {1}\n", reader.GetValue(0), reader.GetValue(1));

}

}

}

}

Notice the connReadOnly has the session variable for follower reads; i.e., Options=-c default_transaction_use_follower_reads=on;. All of the SELECT queries will now route through a follower-read connection. Read/write traffic will not work with this connection, as follower reads only work with read-only transactions.

To confirm we're indeed using the follower reads, we have to capture a debug zip in CockroachDB and analyze the trace.json file. Inspecting the file will yield output similar to below:

{

"key": "event",

"value": "‹kv/kvserver/pkg/kv/kvserver/replica_follower_read.go:104 [n3,s3,r1345/3:/{Table/282-Max}] serving via follower read; query timestamp below closed timestamp by 1.162361555s›"

}

The range ID is r1345. You can see it from n3,s3,r1345/3:/{Table/282-Max}]. If we run SELECT range_id, lease_holder, replicas, replica_localities FROM [SHOW RANGES FROM TABLE test];, we can see the following:

SELECT range_id, lease_holder, replicas, replica_localities FROM [SHOW RANGES FROM TABLE test];

range_id | lease_holder | replicas | replica_localities

-----------+--------------+----------+-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1345 | 5 | {3,5,7} | {"region=aws-us-east-1,az=aws-us-east-1b,dns=cockroachdb-1.cockroachdb.us-east-1.svc.cluster.local","region=aws-us-east-2,az=aws-us-east-2b,dns=cockroachdb-1.cockroachdb.us-east-2.svc.cluster.local","region=aws-us-west-2,az=aws-us-west-2a,dns=cockroachdb-0.cockroachdb.us-west-2.svc.cluster.local"}

Notice the range ID matches, there are 3 replicas and they are located on node 3, 5 and 7 with node 5 hosting the lease_holder replica. Since the read came from node 3, we read from the local replica and not the lease_holder.

Bonus Topic: Npgsql and pgpass

I decided to wrap up this volume with a bonus topic, as it is related to the topics above. Notice the connection string in my C# code above:

var connString = "Host=artem-mr-7xw.aws-us-east-1.cockroachlabs.cloud;Username=artem;Passfile=/Users/artem/.pgpass;Database=dotnet;RootCertificate=/Users/artem/Library/CockroachCloud/certs/artem-mr-ca.crt;Port=26257;SslMode=VerifyCA";Specifically, note the Passfile=/Users/artem/.pgpass; part. I was happy to see in the Npgsql that PGPASSFILE variable is supported. I set up my pgpass file and pointed to my client app. Lo and behold, it works as expected. Feel free to refer to articles 1-5 linked at the beginning of this article for more on pgpass.

Published at DZone with permission of Artem Ervits. See the original article here.

Opinions expressed by DZone contributors are their own.

Comments