CockroachDB TIL: Volume 11

This is my series of articles covering short "Today I learned" topics as I work with CockroachDB. Today, we're covering auto-completion and syntax highlighting in CLI with pgcli, Sqlalchemy and ON UPDATE expressions, identifying sessions, naming transaction blocks and setting traces in CLI.

Join the DZone community and get the full member experience.

Join For FreePrevious Articles

Topics

- Topic 1: Using pgcli with CockroachDB

- Topic 2: Using ON UPDATE expression with Sqlachemy

- Topic 3: Identify the current session's transaction ID

- Topic 4: Identifying and naming transaction blocks

- Topic 5: Set trace for individual statements in CLI

Topic 1: Using Pgcli With CockroachDB

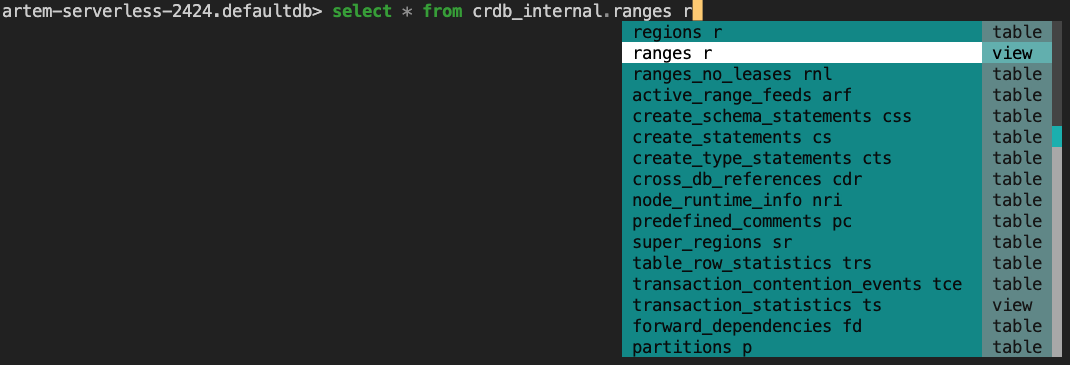

I stumbled on pgcli When I was writing my auto-completion topic from the last volume. I initially needed a CLI tool with auto-completion because I desperately needed it to deal with very long schema and table names. When I first tried it with version 3.4.1, which was the latest at the time, I was seeing many errors. I decided to pause my evaluation and come back later. Luckily, a month later, version 3.5.0 was released which seemed to fix those issues for me. I no longer see Python stack traces. Let's take it for a spin...

Pgcli describes itself as a PostgreSQL client with auto-completion and syntax highlighting. Get a pgurl connection string, i.e. postgresql:// or Postgresql environment variables to connect to a cluster.

$ pgcli [database_name]

or

$ pgcli postgresql://[user[:password]@][netloc][:port][/dbname][?extra=value[&other=other-value]]

I'm using a serverless cluster, you can capture the connection parameters from the Connect modal:

pgcli -h <host> -u <username> -p 26257 -d <routingID>.defaultdb

Password for artem:

Server: PostgreSQL 13.0.0

Version: 3.5.0

Home: http://pgcli.comSet the PGPASSWORD variable if you want to silently pass the password to the terminal, i.e. export PGPASSWORD=password or set up pgpass which pgcli supports!

Overall, it offers a pleasant CLI experience and saves on typing. It also exposes built-in functions, views and tables.

It's a great tool to have in my arsenal.

Topic 2: Using Update Expression With Sqlachemy

A community Slack user had a question about our ON UPDATE syntax and how to use it in Sqlachemy. The user wanted to update the timestamp for a row each time the row gets updated. I've looked into the question and want to provide a solution in case it arises again. The best option to start is to grab the CockroachDB Sqlalchemy tutorial and reproduce the problem. In the db-init.sql file, we're going to add two more fields for created_at and updated_at

CREATE TABLE accounts (

id UUID PRIMARY KEY,

balance INT8,

created_at TIMESTAMPTZ NULL DEFAULT now():::TIMESTAMPTZ,

updated_at TIMESTAMPTZ NULL DEFAULT now():::TIMESTAMPTZ ON UPDATE now():::TIMESTAMPTZ

);The created_at field has a default now() function to persist the date and time when the row issues are created. The updated_at field will capture the initial timestamp and additionally apply now() function when the row gets updated.

The other class we're going to modify is models.py. We need to map the SQL fields to the application code.

from sqlalchemy import Column, Integer

from sqlalchemy.dialects.postgresql import UUID

from sqlalchemy.orm import declarative_base

from sqlalchemy.dialects.postgresql import TIMESTAMP

from sqlalchemy.sql import func

Base = declarative_base()

class Account(Base):

"""The Account class corresponds to the "accounts" database table.

"""

__tablename__ = 'accounts'

id = Column(UUID(as_uuid=True), primary_key=True)

balance = Column(Integer)

Column("created_at", TIMESTAMP(timezone=True), default=func.now()),

Column("updated_at", TIMESTAMP(timezone=True), default=func.now(),onupdate=func.now())The user was specifying DateTime data type. I wanted to reduce the number of variables and opted for the PostgreSQL TIMESTAMP data type. After which, I've referenced the following Sqlachemy document to get the syntax right. At this point, the code above should do what is supposed to, created_at will capture the creation and updated_at will capture the creation as well as the updated timestamp. The first time around, I mistakenly assumed DateTime was a MySQL datatype but in conversation with a colleague, as well as referencing our python tutorials, I've noticed we use DateTime in our examples. I've set out to rewrite my example using DateTime instead of TIMESTAMP. The dbinit file is left intact and our models.py file is changed to:

from sqlalchemy import Column, Integer, DateTime

from sqlalchemy.dialects.postgresql import UUID

from sqlalchemy.orm import declarative_base

from sqlalchemy.sql import func

Base = declarative_base()

class Account(Base):

"""The Account class corresponds to the "accounts" database table.

"""

__tablename__ = 'accounts'

id = Column(UUID(as_uuid=True), primary_key=True)

balance = Column(Integer)

created_at= Column(DateTime, default=func.now())

updated_at = Column(DateTime, default=func.now(), onupdate=func.now())If we want to align with our docs, the following rewrite will adhere to our tutorials:

from sqlalchemy import Column, Integer, DateTime

from sqlalchemy.dialects.postgresql import UUID

from sqlalchemy.orm import declarative_base

from sqlalchemy.sql import func

import datetime

Base = declarative_base()

class Account(Base):

"""The Account class corresponds to the "accounts" database table.

"""

__tablename__ = 'accounts'

id = Column(UUID(as_uuid=True), primary_key=True)

balance = Column(Integer)

created_at = Column(DateTime, default=datetime.datetime.now())

updated_at = Column(DateTime, default=datetime.datetime.now(), onupdate=datetime.datetime.now())The DateTime example perhaps is more portable across database flavours though it seems harder to read.

Topic 3: Identify the Current Session’s Transaction ID

The following question is from our Community Slack. The user asks: how to identify the transaction ID of the current session. It seems obvious and should be easily achieved but I've never approached a problem from the current session's context.

WITH sid AS (SHOW session_id)

SELECT id AS transaction_id

FROM crdb_internal.node_transactions

WHERE session_id IN (SELECT * FROM sid);Firstly, I was struck by the SHOW session_id syntax, this is not something I was aware of. I've not looked at crdb_internal.node_transactions either before. I should dedicate some time and look at all crdb_internal tables available. Putting the two together makes the original problem go away.

We will get output similar to this

fb1d8fe4-75ef-4d5d-acce-ef718e8f94e3

(1 row)

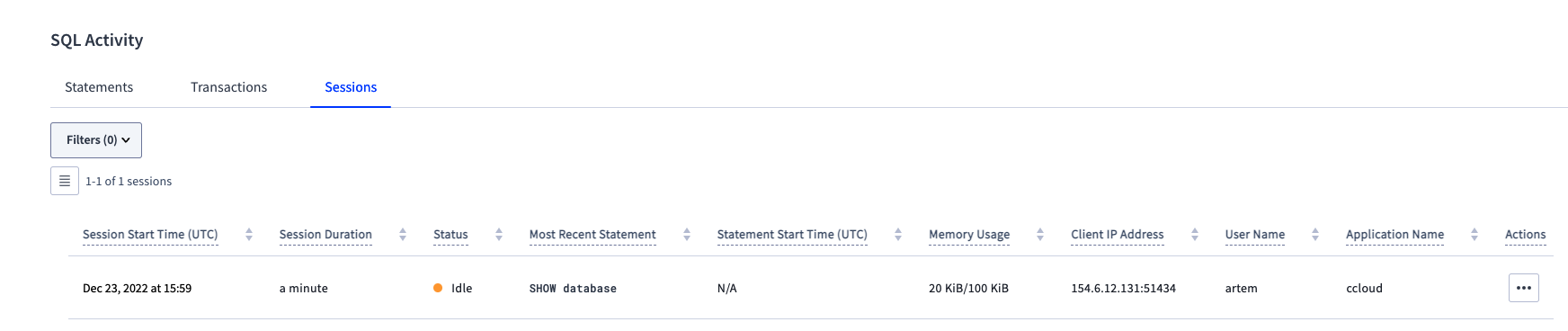

Time: 29ms total (execution 2ms / network 27ms)We can confirm it by going to the DB Console

If we click into it, we can cancel statements executing as part of the session:

artem@artem-serverless-2963.g8z.cockroachlabs.cloud:26257/defaultdb> select pg_sleep(1000);

ERROR: query execution canceled

SQLSTATE: 57014or the session itself

artem@artem-serverless-2963.g8z.cockroachlabs.cloud:26257/defaultdb> select pg_sleep(1000);

invalid syntax: statement ignored: unexpected error: driver: bad connection

warning: connection lost!

opening new connection: all session settings will be lostTopic 4 Identifying and Naming Transaction Blocks

I was working on a POC where a workload consisted of many business transactions. It was difficult to distinguish one transaction from another, i.e. buy vs. sell. The solution I was looking for was akin to the appliation_name session variable but for an individual transaction rather than the entire session. That way I would be able to filter them out in the SQL Activity view and narrow down my search. To my surprise, there is an option for explicit transactions. Looking at the session docs, we can see that we can set individual transactions using SET LOCAL syntax.

CockroachDB supports setting session variables for the duration of a single transaction, using the LOCAL keyword.

Here's an example from our docs

In my case, I'd have to set the variable like so:

SHOW application_name;

BEGIN;

SET LOCAL application_name=execute_sell_order;

-- a business transaction goes here

SHOW application_name;

COMMIT;

SHOW application_name;To demonstrate the result, I have to turn off the timing for the queries to see a better :

\unset show_times

application_name

--------------------

ccloud

(1 row)

BEGIN

SET

application_name

----------------------

execute_sell_order

(1 row)

COMMIT

application_name

--------------------

ccloudUnfortunately, this capability is exclusive to explicit transactions. Implicit transactions are not affected.

Topic 5 Set Trace for Individual Statements in CLI

I was researching the previous topic and stumbled on the CLI option to enable auto_trace. I was curious about what it meant and figured I'd give it a try. The description for the property states:

For every statement executed, the shell also produces the trace for that statement in a separate result below. A trace is also produced in case the statement produces a SQL error.

\set auto_trace on

Let's run a sample statement

artem@artem-serverless-2963.g8z.cockroachlabs.cloud:26257/defaultdb> select 1;

?column?

------------

1

(1 row)

Time: 39ms total (execution 1ms / network 37ms)

timestamp | age | message | tag | location | operation | span

--------------------------------+-----------------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+---------------------------------------------+------------------------+-------

2022-12-23 16:43:57.293067+00 | 00:00:00 | === SPAN START: session recording === | | | session recording | 0

2022-12-23 16:43:57.293137+00 | 00:00:00.00007 | [NoTxn pos:53] executing Sync | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | session recording | 0

2022-12-23 16:43:57.321223+00 | 00:00:00.028156 | [NoTxn pos:54] executing ExecStmt: SELECT 1 | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | session recording | 0

2022-12-23 16:43:57.321241+00 | 00:00:00.028174 | executing: SELECT 1 in state: NoTxn | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:128 | session recording | 0

2022-12-23 16:43:57.321253+00 | 00:00:00.028186 | === SPAN START: sql txn === | | | sql txn | 1

2022-12-23 16:43:57.321306+00 | 00:00:00.028238 | [Open pos:54] executing ExecStmt: SELECT 1 | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | sql txn | 1

2022-12-23 16:43:57.321315+00 | 00:00:00.028247 | executing: SELECT 1 in state: Open | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:128 | sql txn | 1

2022-12-23 16:43:57.321316+00 | 00:00:00.028249 | === SPAN START: sql query === | | | sql query | 2

2022-12-23 16:43:57.321365+00 | 00:00:00.028297 | planning starts: SELECT | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/exec_util.go:2353 | sql query | 2

2022-12-23 16:43:57.321384+00 | 00:00:00.028316 | query cache hit | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/plan_opt.go:371 | sql query | 2

2022-12-23 16:43:57.321421+00 | 00:00:00.028353 | planning ends | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.321436+00 | 00:00:00.028369 | checking distributability | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.321445+00 | 00:00:00.028378 | will distribute plan: false | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.321458+00 | 00:00:00.02839 | executing after 0 retries, last retry reason: <nil> | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.321468+00 | 00:00:00.0284 | execution starts: distributed engine | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.321469+00 | 00:00:00.028401 | === SPAN START: consuming rows === | | | consuming rows | 3

2022-12-23 16:43:57.321488+00 | 00:00:00.028421 | creating DistSQL plan with isLocal=true | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/distsql_running.go:1436 | sql query | 2

2022-12-23 16:43:57.321518+00 | 00:00:00.028451 | running DistSQL plan | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/distsql_running.go:514 | sql query | 2

2022-12-23 16:43:57.321528+00 | 00:00:00.028461 | === SPAN START: flow === | | | flow | 4

2022-12-23 16:43:57.321549+00 | 00:00:00.028481 | setting up vectorized flow | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/colflow/vectorized_flow.go:237 | flow | 4

2022-12-23 16:43:57.321595+00 | 00:00:00.028528 | planning a row-execution processor in the vectorized flow: LocalPlanNode core needs to be wrapped | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/colexec/colbuilder/execplan.go:547 | flow | 4

2022-12-23 16:43:57.321624+00 | 00:00:00.028556 | vectorized flow setup succeeded | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/colflow/vectorized_flow.go:289 | flow | 4

2022-12-23 16:43:57.321639+00 | 00:00:00.028572 | starting (0 processors, 0 startables) asynchronously | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/flowinfra/flow.go:383 | flow | 4

2022-12-23 16:43:57.321648+00 | 00:00:00.028581 | running the batch flow coordinator in the flow's goroutine | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/colflow/vectorized_flow.go:315 | flow | 4

2022-12-23 16:43:57.321649+00 | 00:00:00.028582 | === SPAN START: batch flow coordinator === | | | batch flow coordinator | 5

| | cockroach.flowid: 2bbbb474-8f23-410c-8969-3c9c2ba0cf1a | | | |

| | cockroach.processorid: 0 | | | |

2022-12-23 16:43:57.321773+00 | 00:00:00.028705 | component:<flow_id:<2bbbb474-8f23-410c-8969-3c9c2ba0cf1a> type:PROCESSOR id:0 sql_instance_id:1 > net_rx:<latency:<> wait_time:<> deserialization_time:<> tuples_received:<> bytes_received:<> messages_received:<> > net_tx:<tuples_sent:<> bytes_sent:<> messages_sent:<> > kv:<bytes_read:<> tuples_read:<> kv_time:<> contention_time:<> num_interface_steps:<> num_internal_steps:<> num_interface_seeks:<> num_internal_seeks:<> > exec:<exec_time:<value_plus_one:<nanos:11583 > > max_allocated_mem:<> max_allocated_disk:<> > output:<num_batches:<value_plus_one:2 > num_tuples:<value_plus_one:2 > > flow_stats:<max_mem_usage:<> max_disk_usage:<> > | | | batch flow coordinator | 5

2022-12-23 16:43:57.321838+00 | 00:00:00.02877 | component:<flow_id:<2bbbb474-8f23-410c-8969-3c9c2ba0cf1a> type:FLOW id:0 sql_instance_id:1 > net_rx:<latency:<> wait_time:<> deserialization_time:<> tuples_received:<> bytes_received:<> messages_received:<> > net_tx:<tuples_sent:<> bytes_sent:<> messages_sent:<> > kv:<bytes_read:<> tuples_read:<> kv_time:<> contention_time:<> num_interface_steps:<> num_internal_steps:<> num_interface_seeks:<> num_internal_seeks:<> > exec:<exec_time:<> max_allocated_mem:<> max_allocated_disk:<> > output:<num_batches:<> num_tuples:<> > flow_stats:<max_mem_usage:<value_plus_one:10241 > max_disk_usage:<value_plus_one:1 > > | | | flow | 4

2022-12-23 16:43:57.321876+00 | 00:00:00.028808 | execution ends | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.321887+00 | 00:00:00.02882 | rows affected: 1 | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:747 | sql query | 2

2022-12-23 16:43:57.322068+00 | 00:00:00.029 | Structured entry: {"Timestamp":1671813837321258041,"EventType":"sampled_query","Statement":"SELECT 1","Tag":"SELECT","User":"artem","ApplicationName":"ccloud","ExecMode":"exec","NumRows":1,"Age":0.886493,"TxnCounter":12,"CostEstimate":0.02,"Distribution":"local","PlanGist":"AgICAgYC","SessionID":"173379c923e663eb0000000000000001","Database":"defaultdb","StatementID":"173379d3d01eb35f0000000000000001","TransactionID":"82308af1-7f00-4891-98ef-0222589fb466","StatementFingerprintID":16085855936700855347,"OutputRowsEstimate":1,"StatsAvailable":true} | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | util/log/event_log.go:32 | sql query | 2

2022-12-23 16:43:57.322197+00 | 00:00:00.029129 | === SPAN START: commit sql txn === | | | commit sql txn | 6

2022-12-23 16:43:57.322241+00 | 00:00:00.029174 | AutoCommit. err: <nil> | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:2160 | sql query | 2

2022-12-23 16:43:57.322314+00 | 00:00:00.029247 | releasing 0 descriptors | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/catalog/descs/leased_descriptors.go:237 | session recording | 0

2022-12-23 16:43:57.322346+00 | 00:00:00.029279 | [NoTxn pos:55] executing Sync | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | session recording | 0

2022-12-23 16:43:57.361029+00 | 00:00:00.067962 | [NoTxn pos:56] executing ExecStmt: SHOW LAST QUERY STATISTICS RETURNING parse_latency, plan_latency, exec_latency, service_latency, post_commit_jobs_latency | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | session recording | 0

2022-12-23 16:43:57.361047+00 | 00:00:00.067979 | executing: SHOW LAST QUERY STATISTICS RETURNING parse_latency, plan_latency, exec_latency, service_latency, post_commit_jobs_latency in state: NoTxn | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:128 | session recording | 0

2022-12-23 16:43:57.361085+00 | 00:00:00.068018 | [NoTxn pos:57] executing Sync | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | session recording | 0

2022-12-23 16:43:57.390313+00 | 00:00:00.097246 | [NoTxn pos:58] executing ExecStmt: SET TRACING = off | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor.go:1848 | session recording | 0

2022-12-23 16:43:57.390331+00 | 00:00:00.097264 | executing: SET TRACING = off in state: NoTxn | [nsql1,peer=10.0.1.10:47778,client=154.6.12.131:52049,user=artem] | sql/conn_executor_exec.go:128 | session recording | 0

(39 rows)

Time: 68ms total (execution 1ms / network 67ms)So the output is not new, you can find this sort of information in statement bundles but I've not seen the option inside the CLI before, which makes debugging user experience so much better!

Opinions expressed by DZone contributors are their own.

Comments